The Problem

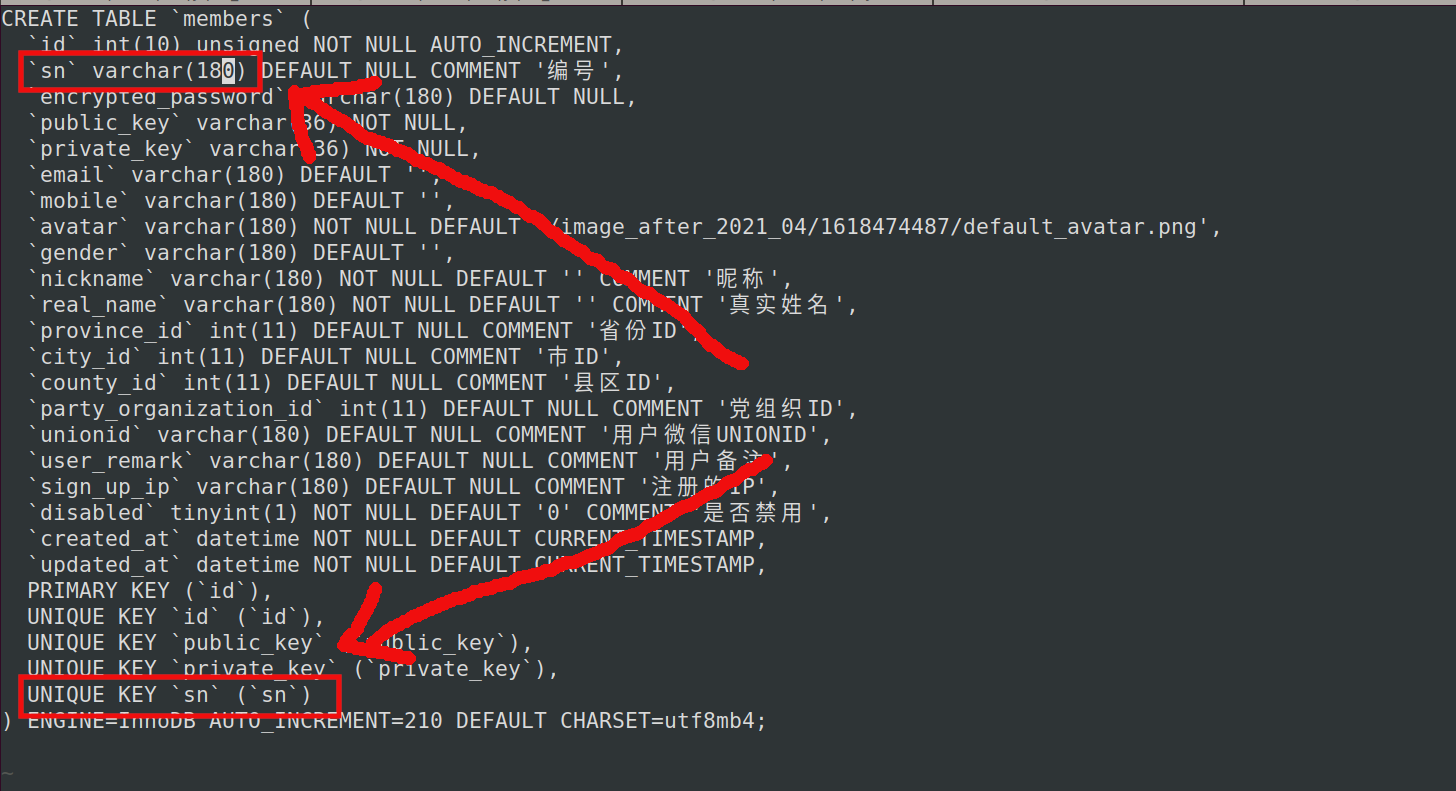

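MySQL limits how long an index key can be, which is why you cannot index the full contents of a TEXT column:

- InnoDB: the maximum key length is 3,072 bytes with the default 16KB page size (DYNAMIC and COMPRESSED row formats; the older COMPACT and REDUNDANT formats allow only 767 bytes per column prefix), 1,536 bytes with an 8KB page size, and 768 bytes with a 4KB page size (Source: Dev.MySQL.com).

- MyISAM: the maximum key length is 1,000 bytes (Source: Dev.MySQL.com).

These limits are counted in bytes, not characters. A UTF-8 character can take more than one byte (up to four in MySQL's utf8mb4 character set), so a key holds fewer characters than the byte limit suggests.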
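To make the byte arithmetic concrete, here is a small Python sketch (not MySQL code) that measures how many UTF-8 bytes a few characters take and how many characters fit in a key under a given byte limit. It assumes MySQL sizes a key prefix as characters × the character set's maximum bytes per character (4 for utf8mb4, 3 for utf8mb3, 1 for latin1); the helper name `max_prefix_chars` is hypothetical.

```python
# Bytes each character needs when encoded as UTF-8.
samples = ["a", "é", "€", "😀"]
for ch in samples:
    print(repr(ch), len(ch.encode("utf-8")), "byte(s)")

# Assumption: MySQL reserves the character set's *maximum* bytes per
# character when checking a key prefix against the length limit.
MAX_BYTES_PER_CHAR = {"latin1": 1, "utf8mb3": 3, "utf8mb4": 4}

def max_prefix_chars(limit_bytes: int, charset: str) -> int:
    """Longest prefix (in characters) whose worst-case size fits in limit_bytes."""
    return limit_bytes // MAX_BYTES_PER_CHAR[charset]

for limit in (3072, 1536, 1000, 768, 767):
    print(limit, "bytes ->", max_prefix_chars(limit, "utf8mb4"), "utf8mb4 chars")
```

Under this model, a 767-byte limit (InnoDB's COMPACT row format) leaves room for 191 utf8mb4 characters and MyISAM's 1,000 bytes for 250, so a 255-character utf8mb4 prefix (1,020 bytes) only fits the larger InnoDB limits.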

Therefore, you have two immediate solutions:

- Index only the first N characters of the text column (a prefix index).

- Create a FULLTEXT index, which makes every word within the text searchable, in a fashion similar to Elasticsearch.

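Indexing the First N Characters of a Text Type

If you are creating a table, use the following syntax to index the first 255 characters of a column: `KEY sometextkey (SomeText(255))`. For TEXT columns the prefix length is counted in characters (for BLOB columns, in bytes), but the resulting key must still fit within the byte limits above. For example: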

```sql
CREATE TABLE `MyTable` (
  `id` INT NOT NULL AUTO_INCREMENT,
  `SomeText` TEXT NOT NULL,
  PRIMARY KEY (`id`),
  KEY `sometextkey` (`SomeText`(255))
);
```
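A prefix index stores only the first N characters of each value, so it can only narrow a lookup down to candidate rows; MySQL then reads those rows to compare the full value, which is also why a prefix index cannot act as a covering index. The toy Python model below illustrates that two-step lookup. `PrefixIndex` is a hypothetical name, and the model ignores collations, on-disk storage, and B-tree ordering.

```python
from collections import defaultdict

class PrefixIndex:
    """Toy model of a prefix index: maps value[:n] -> list of row ids."""

    def __init__(self, rows: dict[int, str], n: int):
        self.rows = rows              # row id -> full column value (the "table")
        self.n = n                    # prefix length in characters
        self.keys = defaultdict(list)
        for row_id, value in rows.items():
            self.keys[value[: self.n]].append(row_id)

    def lookup(self, value: str) -> list[int]:
        # Step 1: the index can only narrow the search by prefix...
        candidates = self.keys.get(value[: self.n], [])
        # Step 2: ...so each candidate row is read to compare the full value.
        return [rid for rid in candidates if self.rows[rid] == value]

rows = {1: "hello world", 2: "hello there", 3: "goodbye"}
idx = PrefixIndex(rows, n=5)
print(idx.lookup("hello there"))  # rows 1 and 2 share the prefix; only row 2 matches
```

The longer the shared prefixes in your data, the more candidate rows step 2 has to read, which is the main trade-off when choosing N.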

If the table already exists, you can add a unique prefix index to a column with `ADD UNIQUE(ConfigValue(20))`. For example:

```sql
ALTER TABLE MyTable
  ADD UNIQUE (`ConfigValue`(20));
```

If a column name is not a reserved MySQL keyword (and contains no special characters), the backticks (`` ` ``) around it are not necessary.
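Keep in mind that a unique prefix index enforces uniqueness only on the prefix: with `ConfigValue`(20), two values that share their first 20 characters are rejected as duplicates even if they differ afterwards. The Python sketch below (a hypothetical helper, `prefix_collisions`) finds existing values that would clash before you run the ALTER TABLE. It compares prefixes exactly; MySQL compares them using the column's collation, so under a case-insensitive collation it may find conflicts this sketch misses (for example, `Abc` vs `abc`).

```python
from collections import defaultdict

def prefix_collisions(values: list[str], n: int) -> dict[str, list[str]]:
    """Group values whose first n characters are identical.

    Any group with more than one member would violate UNIQUE(col(n)).
    Comparison is exact (binary); a case-insensitive collation could
    report additional conflicts.
    """
    groups: dict[str, list[str]] = defaultdict(list)
    for value in values:
        groups[value[:n]].append(value)
    return {prefix: vals for prefix, vals in groups.items() if len(vals) > 1}

values = [
    "smtp.server.primary.host",
    "smtp.server.primary.port",
    "cache.ttl",
]
print(prefix_collisions(values, 20))
```

Here the two `smtp.server.primary.` entries collide at a 20-character prefix, so either the data or the prefix length would need to change.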

Creating a FULL TEXT Search

A FULLTEXT index lets you search the entire value of your TEXT column. In NATURAL LANGUAGE MODE, `MATCH() ... AGAINST()` matches whole words; in BOOLEAN MODE you can also match word prefixes with the `*` truncation operator (for example, `'foo*'`). See more on the FULLTEXT options here: Dev.MySQL.com
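As a rough illustration of whole-word versus prefix matching, the toy Python model below splits text into words and checks a query term either as a whole word (similar in spirit to NATURAL LANGUAGE MODE) or as a word prefix (similar to BOOLEAN MODE's `foo*`). It is not MySQL's full-text parser: it ignores stopwords, the minimum token length, and relevance ranking, and `tokenize` and `matches` are hypothetical names.

```python
import re

def tokenize(text: str) -> list[str]:
    # Split on anything that is not a letter, digit, or underscore; lowercase
    # to mimic a case-insensitive collation.
    return re.findall(r"\w+", text.lower())

def matches(text: str, term: str, prefix: bool = False) -> bool:
    term = term.lower()
    words = tokenize(text)
    if prefix:
        return any(w.startswith(term) for w in words)
    return term in words

doc = "Foobar is configured by foobarbaz.conf"
print(matches(doc, "foobar"))            # True: "foobar" is a whole word
print(matches(doc, "foo"))               # False: "foo" is never a whole word
print(matches(doc, "foo", prefix=True))  # True: "foobar" starts with "foo"
```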

Create your table with the text column, then add the FULLTEXT index:

```sql
ALTER TABLE MyTable
  ADD FULLTEXT INDEX `SomeTextKey` (`SomeTextField`);
```

Then search your table like so:

```sql
SELECT
  MyTable.id,
  MyTable.Title,
  -- the MATCH() column list must match the columns of a FULLTEXT index
  MATCH (MyTable.SomeTextField)
    AGAINST ('foobar' IN NATURAL LANGUAGE MODE) AS score
FROM MyTable
HAVING score > 0
ORDER BY score DESC;
```
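The query keeps only rows with a positive relevance score and lists the best matches first. The Python sketch below mirrors that `HAVING score > 0` / `ORDER BY score DESC` logic on in-memory rows. The score here is a stand-in (how many times the term appears as a whole word), not MySQL's relevance formula, and the row layout and the `score` and `search` functions are hypothetical.

```python
import re

def score(text: str, term: str) -> int:
    # Stand-in relevance: count whole-word, case-insensitive occurrences.
    return re.findall(r"\w+", text.lower()).count(term.lower())

def search(rows: list[dict], term: str) -> list[tuple[int, str, int]]:
    results = []
    for row in rows:
        s = score(row["text"], term)
        if s > 0:  # HAVING score > 0
            results.append((row["id"], row["title"], s))
    # ORDER BY score DESC; Python's sort is stable, so ties keep input order.
    return sorted(results, key=lambda r: r[2], reverse=True)

rows = [
    {"id": 1, "title": "Intro", "text": "foobar appears once"},
    {"id": 2, "title": "Deep dive", "text": "foobar, foobar and more foobar"},
    {"id": 3, "title": "Other", "text": "nothing relevant"},
]
print(search(rows, "foobar"))
```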