The short answer is "No. Any performance impact will be negligible".

The correct answer is "It depends."

A better question is, "Should I use uint when I'm certain I don't need a sign?"

The reason you cannot give a definitive "yes" or "no" with regard to performance is that the target platform ultimately determines it. That is, performance is dictated by the processor executing the code and the instructions it has available. Your .NET code compiles down to Intermediate Language (IL, or bytecode). These instructions are then compiled for the target platform by the Just-In-Time (JIT) compiler, which is part of the Common Language Runtime (CLR). You can't control or predict what code will be generated for every user.

So knowing that the hardware is the final arbiter of performance, the question becomes, "How different is the code .NET generates for a signed versus unsigned integer?" and "Does the difference impact my application and my target platforms?"

The best way to answer these questions is to run a test.

    using System;
    using System.Diagnostics;

    class Program
    {
        static void Main(string[] args)
        {
            const int iterations = 100;
            Console.WriteLine($"Signed: {Iterate(TestSigned, iterations)}");
            Console.WriteLine($"Unsigned: {Iterate(TestUnsigned, iterations)}");
            Console.Read();
        }

        // Counts up to Int32.MaxValue using an unsigned iterator and accumulator.
        static void TestUnsigned()
        {
            uint accumulator = 0;
            var max = (uint)Int32.MaxValue;
            for (uint i = 0; i < max; i++) ++accumulator;
        }

        // Same loop, using a signed iterator and accumulator.
        static void TestSigned()
        {
            int accumulator = 0;
            var max = Int32.MaxValue;
            for (int i = 0; i < max; i++) ++accumulator;
        }

        // Runs the action `count` times and returns the average elapsed time.
        static TimeSpan Iterate(Action action, int count)
        {
            var elapsed = TimeSpan.Zero;
            for (int i = 0; i < count; i++)
                elapsed += Time(action);
            return new TimeSpan(elapsed.Ticks / count);
        }

        // Times a single invocation of the action.
        static TimeSpan Time(Action action)
        {
            var sw = Stopwatch.StartNew();
            action();
            sw.Stop();
            return sw.Elapsed;
        }
    }

The two test methods, TestSigned and TestUnsigned, each perform ~2 billion iterations (Int32.MaxValue) of a simple increment on a signed and unsigned integer, respectively. The test code runs each test 100 times and averages the results, which should smooth out run-to-run inconsistencies. The results on my i7-5960X compiled for x64 were:

    Signed: 00:00:00.5066966
    Unsigned: 00:00:00.5052279

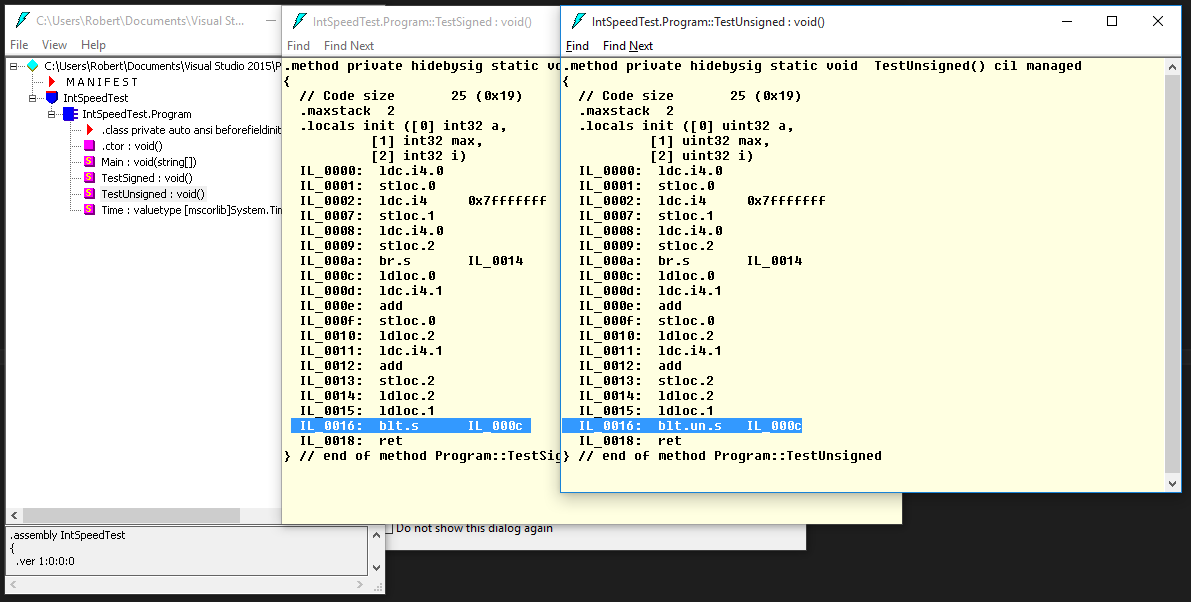

These results are nearly identical, but to get a definitive answer, we really need to look at the bytecode generated for the program. We can use ILDASM, which ships with the .NET SDK, to inspect the code in the assembly generated by the compiler.

[Image: bytecode for TestSigned and TestUnsigned as shown in ILDASM]

Here, we can see that the C# compiler performs most operations the same way for both types and only treats the value as unsigned when comparing for the branch (a.k.a. jump or if). Despite the fact that we're using an unsigned integer for both the iterator AND the accumulator in TestUnsigned, the code is nearly identical to the TestSigned method except for a single instruction: IL_0016. A quick glance at the ECMA spec describes the difference:

blt.un.s: Branch to target if less than (unsigned or unordered), short form.

blt.s: Branch to target if less than, short form.

Since this is such a common instruction, it's safe to assume that most modern high-power processors will have hardware instructions for both operations, and they'll very likely execute in the same number of cycles, but this is not guaranteed. A low-power processor may have fewer instructions and lack a dedicated unsigned branch. In that case, the JIT compiler may have to emit multiple hardware instructions (a conversion first, then a branch, for instance) to execute the blt.un.s IL instruction. Even then, these additional instructions would be basic and probably wouldn't impact performance significantly.
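To see why IL needs two distinct branch instructions in the first place, note that the same 32 bits can compare differently depending on whether they are read as signed or unsigned. A minimal sketch (the CompareDemo class and its method names are illustrative only, not part of the test program above):

```csharp
using System;

static class CompareDemo
{
    // Signed comparison: the bit pattern 0xFFFFFFFF is read as -1.
    public static bool SignedLess(int a, int b) => a < b;

    // Unsigned comparison: the same bit pattern is read as 4294967295.
    // unchecked makes the reinterpreting cast explicit regardless of compiler settings.
    public static bool UnsignedLess(int a, int b) => unchecked((uint)a < (uint)b);

    static void Main()
    {
        Console.WriteLine(SignedLess(-1, 1));    // True
        Console.WriteLine(UnsignedLess(-1, 1));  // False
    }
}
```

For values that fit in both types, such as the loop counters in the test, the two comparisons give the same answer, which is why the choice of branch makes no observable difference there.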

So in terms of performance, the long answer is "It is unlikely that there will be a performance difference at all between using a signed or an unsigned integer. If there is a difference, it is likely to be negligible."

So if the performance is effectively identical, the next logical question is, "Should I use an unsigned value when I'm certain I don't need a sign?"

There are two things to consider here: first, unsigned integers are NOT CLS-compliant, meaning that you may run into issues if you're exposing an unsigned integer as part of an API that another program will consume (for example, if you're distributing a reusable library). Second, most operations in .NET, including the method signatures exposed by the BCL (for the reason above), use a signed integer. So if you plan on actually using your unsigned integer, you'll likely find yourself casting it quite a bit. This is going to have a very small performance hit and will make your code a little messier. In the end, it's probably not worth it.
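As a rough illustration of the casting friction, here is a small sketch. The CastDemo class and its Describe helper are hypothetical; the point is only that the List<T> indexer, string.Length, and string.Substring are all declared with int, so unsigned arguments have to be converted at each call:

```csharp
using System;
using System.Collections.Generic;

static class CastDemo
{
    // Hypothetical helper that accepts unsigned values but must cast
    // them before handing them to BCL members declared with int.
    public static string Describe(List<string> items, uint index, uint maxLength)
    {
        string item = items[(int)index];                    // List<T> indexer takes int
        int length = Math.Min(item.Length, (int)maxLength); // string.Length is int
        return item.Substring(0, length);                   // Substring(int, int)
    }

    static void Main()
    {
        var items = new List<string> { "signed", "unsigned" };
        Console.WriteLine(Describe(items, 1u, 3u)); // prints "uns"
    }
}
```

Declaring index and maxLength as int would remove every cast in the method body.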

TL;DR: back in my C++ days, I'd say "Use whatever is most appropriate and let the compiler sort the rest out." C# is not quite as cut-and-dried, so I would say this for .NET: There's really no performance difference between a signed and unsigned integer on x86/x64, but most operations require a signed integer, so unless you really NEED to restrict the values to non-negative ONLY or you really NEED the extra range that the sign bit eats, stick with a signed integer. Your code will be cleaner in the end.
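For reference, the extra range looks like this in practice. This is a small sketch (RangeDemo and DecrementFromZero are illustrative names); it also shows that, in the default unchecked context, a uint does not stop arithmetic from going "below zero", it just wraps around:

```csharp
using System;

static class RangeDemo
{
    // Decrements an unsigned zero; in an unchecked context this wraps
    // around to the largest uint rather than throwing.
    public static uint DecrementFromZero()
    {
        uint count = 0;
        unchecked { count--; }
        return count;
    }

    static void Main()
    {
        Console.WriteLine(int.MaxValue);         // 2147483647
        Console.WriteLine(uint.MaxValue);        // 4294967295
        Console.WriteLine(DecrementFromZero());  // 4294967295
    }
}
```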