Is it possible to temporarily stop the worker VM instances so that they are not running at night, when I am not working on cluster development? So far, the only way I know of to "stop" the instances is to delete the cluster itself, which I don't want to do. Any suggestions are highly appreciated.

P.S. Edited later

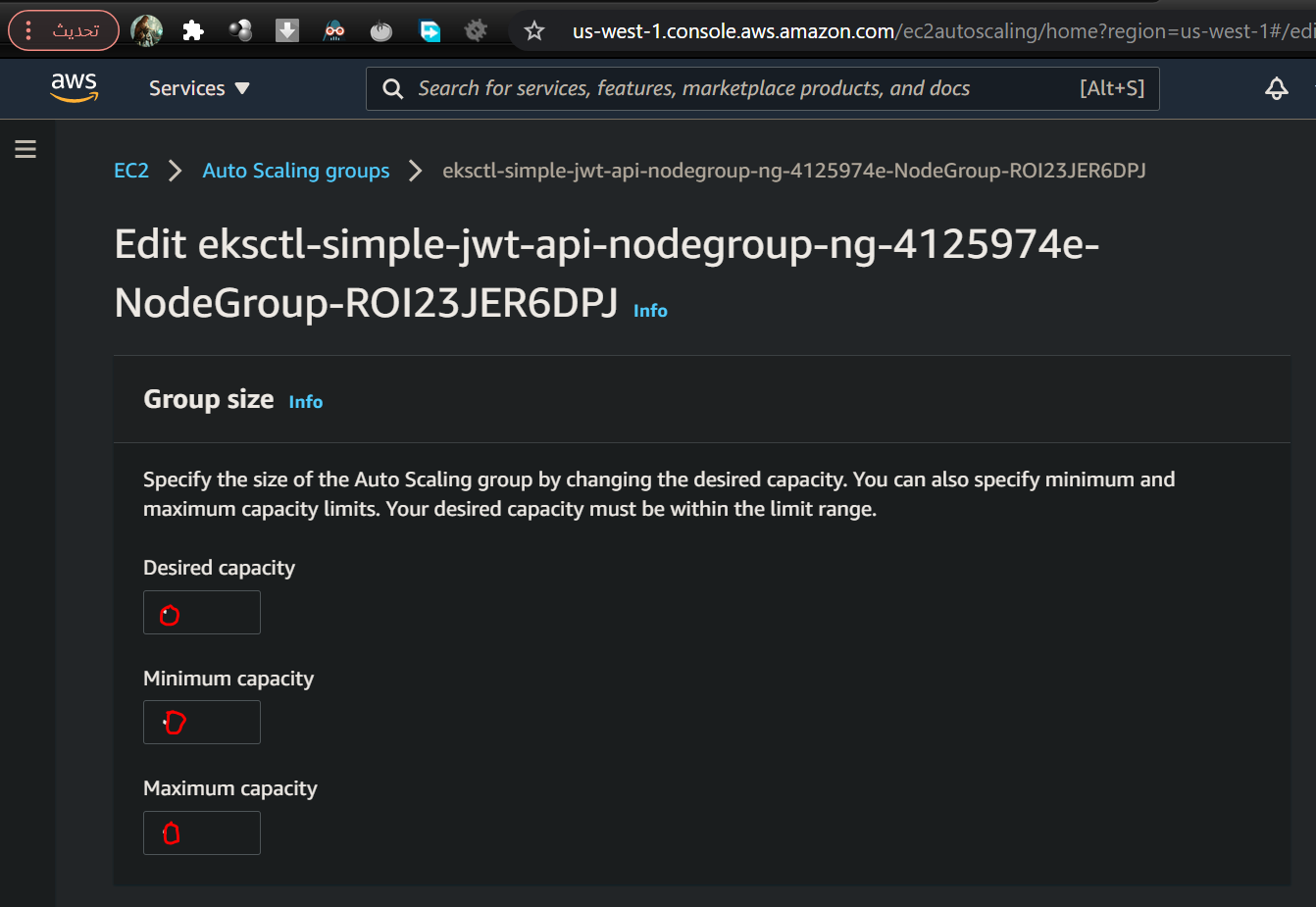

The cluster was created following steps outlined in this guide.