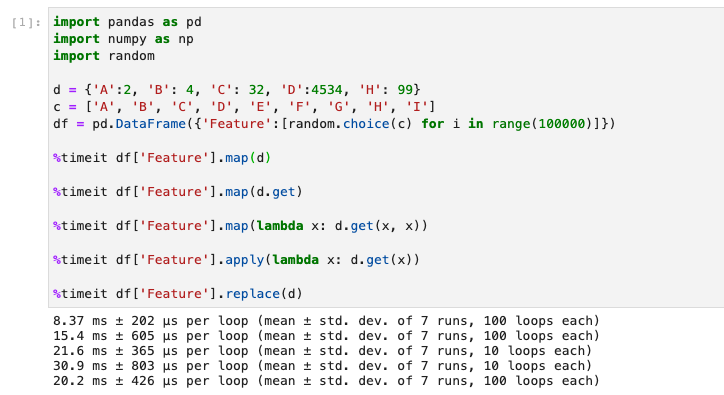

Please help me understand why this "replace from dictionary" operation is slow in Python/Pandas:

    # Series has 200 rows and 1 column
    # Dictionary has 11269 key-value pairs
    series.replace(dictionary, inplace=True)
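
For comparison, the "one O(1) dictionary lookup per row" behaviour expected below can be written out explicitly. This is a minimal sketch, assuming values missing from the dictionary should be left unchanged (as `replace` does); `lookup_replace` is a hypothetical helper, not a pandas API.

```python
import pandas as pd


def lookup_replace(series: pd.Series, mapping: dict) -> pd.Series:
    # Hypothetical helper: one dict lookup per element, so the work is
    # O(len(series)) no matter how many keys the mapping has.
    # Values not found in the mapping are kept as-is, like Series.replace.
    return pd.Series([mapping.get(v, v) for v in series], index=series.index)


s = pd.Series([3, 7, 42])
d = {x: 'Some string ' + str(x) for x in range(10)}
print(lookup_replace(s, d).tolist())  # ['Some string 3', 'Some string 7', 42]
```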

Dictionary lookups should be O(1). Replacing a value in a column should be O(1). Isn't this a vectorized operation? Even if it's not vectorized, iterating 200 rows is only 200 iterations, so how can it be slow?
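
One plausible explanation, stated as an assumption about pandas internals rather than something established here: if `replace` treats the dictionary as a list of (key, value) pairs and scans the whole Series once per pair, the work grows with len(dictionary) × len(series) instead of len(series). The hypothetical `replace_by_masks` below models that strategy with NumPy; it is not pandas' actual code.

```python
import numpy as np


def replace_by_masks(values: np.ndarray, mapping: dict) -> np.ndarray:
    # Hypothetical model of a "one pass per key" replace: for every
    # (key, value) pair, compare the key against the whole array.
    # Total work is roughly len(mapping) * len(values) comparisons.
    out = values.astype(object)
    for key, new in mapping.items():
        mask = values == key  # full scan of the original array for this one key
        out[mask] = new
    return out


values = np.array([3, 7, 42])
mapping = {x: 'Some string ' + str(x) for x in range(10)}
print(replace_by_masks(values, mapping).tolist())  # ['Some string 3', 'Some string 7', 42]

# Under this model, the question's sizes imply about 2.25 million comparisons,
# versus 200 lookups for a per-row dictionary lookup.
print(200 * 11269)  # 2253800
```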

Here is an SSCCE (Short, Self Contained, Correct Example) demonstrating the issue:

    import random

    import pandas as pd

    # Initialize dummy data
    dictionary = {}
    orig = []

    for x in range(11270):
        dictionary[x] = 'Some string ' + str(x)

    for x in range(200):
        orig.append(random.randint(1, 11269))

    series = pd.Series(orig)

    # The actual operation we care about
    print('Starting...')
    series.replace(dictionary, inplace=True)
    print('Done.')

Running that command takes more than 1 second on my machine, which is thousands of times longer than I would expect for fewer than 1,000 operations.
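
To compare `replace` against a per-row lookup on the same data, a sketch like the following times both with `timeit`. It assumes that `Series.map(dict)` followed by `fillna` with the original values is an acceptable substitute (`map` alone turns values missing from the dictionary into NaN). Absolute timings depend on the machine and pandas version, so no numbers are claimed here.

```python
import random
import timeit

import pandas as pd

# Same dummy data as the SSCCE above.
dictionary = {x: 'Some string ' + str(x) for x in range(11270)}
series = pd.Series([random.randint(1, 11269) for _ in range(200)])


def with_replace() -> pd.Series:
    # The operation from the question, without inplace so series is not mutated.
    return series.replace(dictionary)


def with_map() -> pd.Series:
    # One hash lookup per row; map() yields NaN for values missing from the
    # dict, so fillna() restores the original values to mimic replace().
    return series.map(dictionary).fillna(series)


# Both approaches should produce the same values for this data.
assert with_replace().tolist() == with_map().tolist()

for fn in (with_replace, with_map):
    seconds = timeit.timeit(fn, number=3) / 3
    print(f'{fn.__name__}: {seconds:.4f} s per call')
```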