My intent is to understand the “cooperative thread pool” used by Swift 5.5’s async-await, and how task groups automatically constrain the degree of concurrency. Consider the following task group code, which performs 32 calculations in parallel:

    func launchTasks() async {
        await withTaskGroup(of: Void.self) { group in
            for i in 0 ..< 32 {
                group.addTask { [self] in
                    let value = doSomething(with: i)
                    // do something with `value`
                }
            }
        }
    }

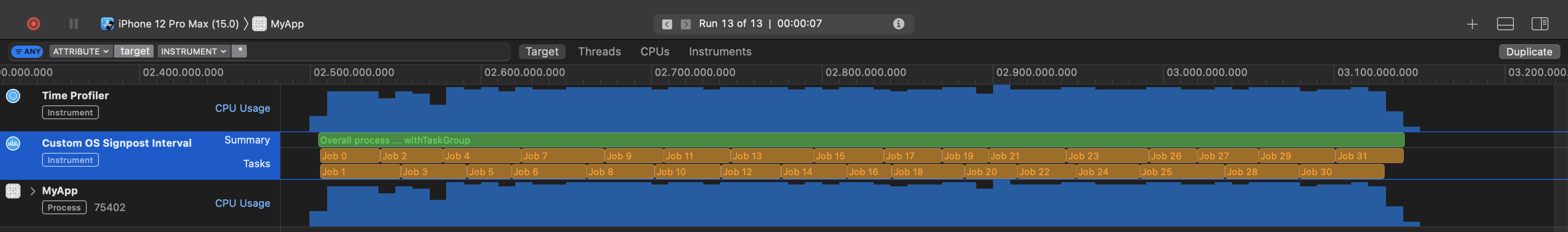

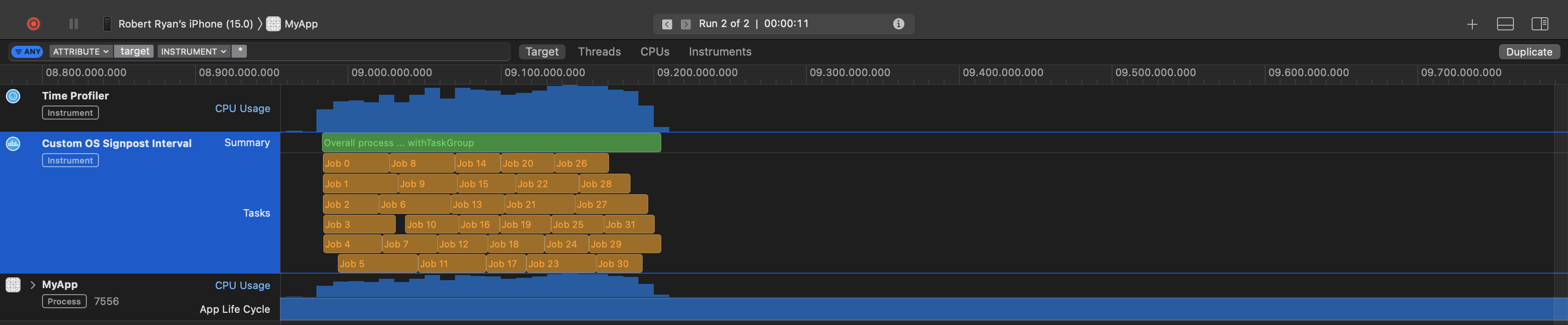

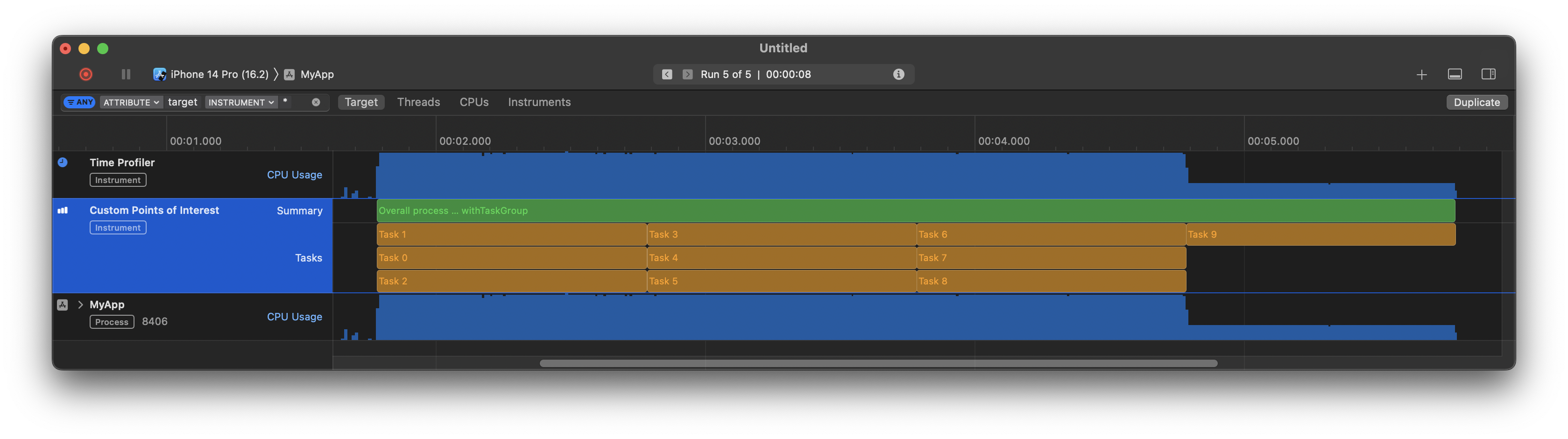

While I hoped it would constrain the degree of concurrency, as advertised, I'm only getting two (!) concurrent tasks at a time. That is far more constrained than I would have expected.

If I use the old GCD `concurrentPerform` ...

    func launchTasks2() {
        DispatchQueue.global().async {
            DispatchQueue.concurrentPerform(iterations: 32) { [self] i in
                let value = doSomething(with: i)
                // do something with `value`
            }
        }
    }

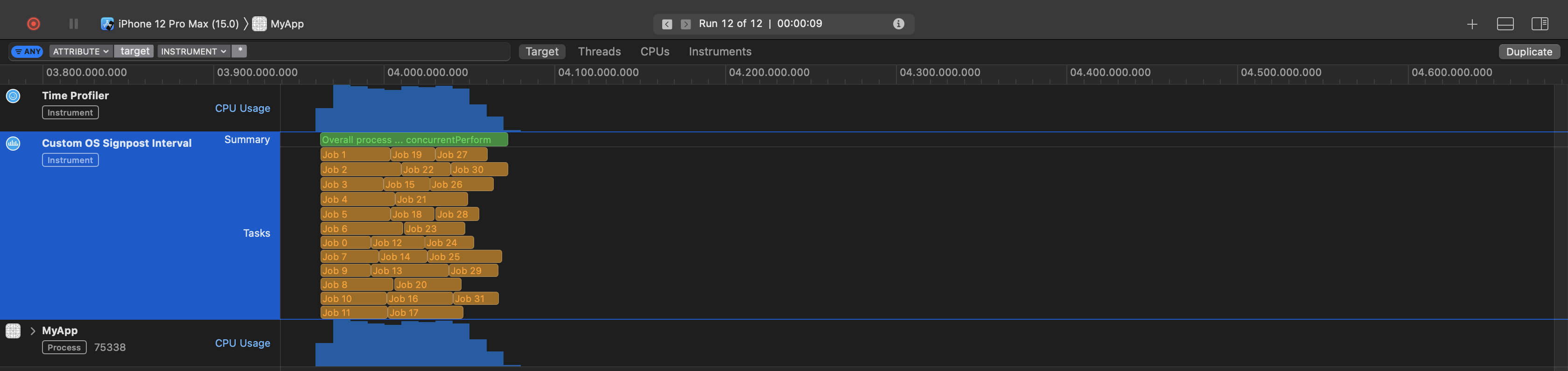

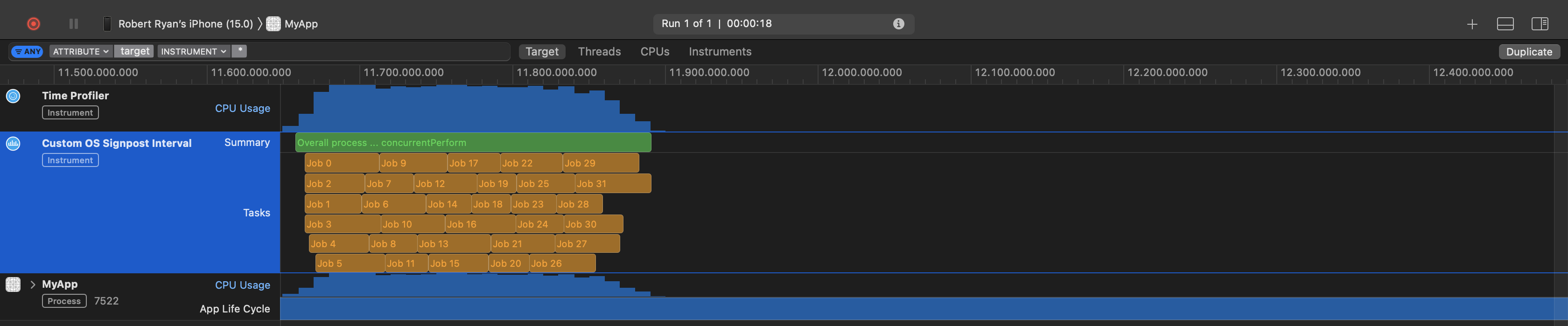

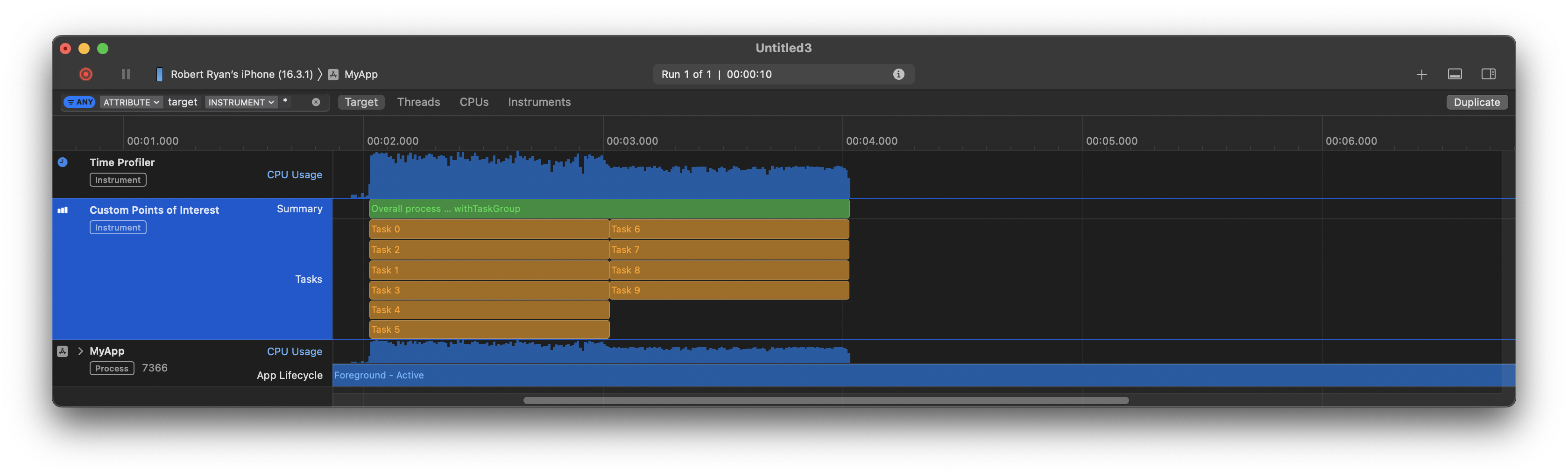

... I get twelve at a time, taking full advantage of the device (iOS 15 simulator on my 6-core i9 MacBook Pro) while avoiding thread explosion.

(FWIW, both of these were profiled in Xcode 13.0 beta 1 (13A5154h) running on Big Sur. And please disregard the minor differences in the individual “jobs” in these two runs, as the function in question is just spinning for a random duration; the key observation is that the degree of concurrency differs from what we would have expected.)
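For anyone trying to reproduce this, a stand-in for `doSomething(with:)` could look roughly like the sketch below. This is not the function that was profiled: the helper name `spin(for:)`, the 1 to 3 second range, and the `Int` return value are all assumptions made for illustration. The only property that matters is that the work busy-waits synchronously instead of sleeping or suspending, so each job keeps its thread occupied for its whole duration.

```swift
import Foundation

/// Hypothetical helper: busy-waits for roughly `duration` seconds.
/// It never sleeps or suspends, so the calling thread stays occupied.
func spin(for duration: TimeInterval) {
    let start = Date()
    while Date().timeIntervalSince(start) < duration { }
}

/// Hypothetical stand-in for `doSomething(with:)`:
/// spins for a random duration (assumed range) and returns its index.
func doSomething(with index: Int) -> Int {
    spin(for: .random(in: 1...3))
    return index
}
```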

It is excellent that this new async-await (and task groups) automatically limits the degree of parallelism, but the cooperative thread pool of async-await is far more constrained than I would have expected. And I see no way to adjust the parameters of that pool. How can we better take advantage of our hardware while still avoiding thread explosion (without resorting to old techniques like non-zero semaphores or operation queues)?
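The degree of concurrency can also be checked without Instruments. The following is a minimal sketch, not the code that produced the screenshots: `ConcurrencyCounter` and `measurePeakConcurrency(taskCount:spinSeconds:)` are hypothetical names, and the busy-wait is an assumed stand-in for the real work. It uses a lock rather than an actor so that updating the counter never suspends the task being measured.

```swift
import Foundation

/// Hypothetical helper that records how many jobs are running at once.
/// `enter` and `leave` are synchronous, so calling them does not
/// introduce a suspension point in the child task.
final class ConcurrencyCounter: @unchecked Sendable {
    private let lock = NSLock()
    private var current = 0
    private var maximum = 0

    func enter() {
        lock.lock(); defer { lock.unlock() }
        current += 1
        maximum = max(maximum, current)
    }

    func leave() {
        lock.lock(); defer { lock.unlock() }
        current -= 1
    }

    var peak: Int {
        lock.lock(); defer { lock.unlock() }
        return maximum
    }
}

/// Runs `taskCount` busy-waiting child tasks in a task group and returns
/// the largest number observed running at the same time.
func measurePeakConcurrency(taskCount: Int, spinSeconds: TimeInterval) async -> Int {
    let counter = ConcurrencyCounter()
    await withTaskGroup(of: Void.self) { group in
        for _ in 0 ..< taskCount {
            group.addTask {
                counter.enter()
                let start = Date()
                while Date().timeIntervalSince(start) < spinSeconds { } // busy-wait
                counter.leave()
            }
        }
    }
    return counter.peak
}
```

Calling `await measurePeakConcurrency(taskCount: 32, spinSeconds: 1)` and comparing the result with `ProcessInfo.processInfo.activeProcessorCount` gives a rough idea of how much of the machine the cooperative pool is using in a given environment.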

With the `concurrentPerform` solution, it looks like GCD optimizes in exactly the same way. Is that so? – Linked