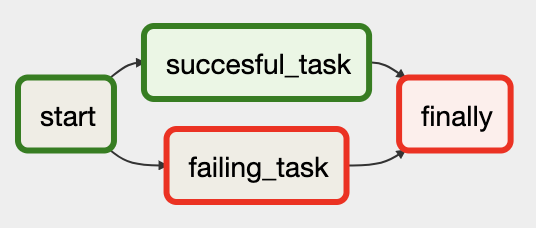

I have the following DAG with 3 tasks:

    start --> special_task --> end

The task in the middle can succeed or fail, but end must always be executed (imagine this is a task for cleanly closing resources). For that, I used the trigger rule ALL_DONE:

    end.trigger_rule = trigger_rule.TriggerRule.ALL_DONE

Using that, end is properly executed if special_task fails. However, since end is the last task and succeeds, the DAG is always marked as SUCCESS.
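To make the behaviour concrete, here is a minimal pure-Python sketch of it. This is not Airflow code: it assumes a simplified model in which the DAG run state is derived only from the states of the leaf tasks, and the names `should_run`, `run_chain` and `dag_run_state` are hypothetical helpers made up for this illustration.

```python
from typing import Dict, List, Tuple

# States that count as a failure in this simplified model.
FAILED_STATES = {"failed", "upstream_failed"}


def should_run(trigger_rule: str, upstream_states: List[str]) -> bool:
    """Decide whether a task runs, given the final states of its upstream tasks."""
    if trigger_rule == "all_success":
        return all(state == "success" for state in upstream_states)
    if trigger_rule == "all_done":
        # Upstream tasks only need to be finished, whatever their state.
        return True
    raise ValueError(f"unsupported trigger rule: {trigger_rule}")


def run_chain(tasks: List[Tuple[str, str, bool]]) -> Dict[str, str]:
    """Run a linear chain of (task_id, trigger_rule, succeeds) tuples."""
    states: Dict[str, str] = {}
    upstream: List[str] = []  # the first task has no upstream
    for task_id, trigger_rule, succeeds in tasks:
        if should_run(trigger_rule, upstream):
            states[task_id] = "success" if succeeds else "failed"
        else:
            states[task_id] = "upstream_failed"
        upstream = [states[task_id]]
    return states


def dag_run_state(states: Dict[str, str], leaf_ids: List[str]) -> str:
    """Assumption: the run fails only if at least one leaf task failed."""
    if any(states[task_id] in FAILED_STATES for task_id in leaf_ids):
        return "failed"
    return "success"


# The chain from the question: `end` uses ALL_DONE, so it runs and succeeds.
with_all_done = run_chain([
    ("start", "all_success", True),
    ("special_task", "all_success", False),  # forced failure
    ("end", "all_done", True),
])

# Same chain with the default rule: `end` never runs.
with_default = run_chain([
    ("start", "all_success", True),
    ("special_task", "all_success", False),
    ("end", "all_success", True),
])

print(with_all_done, dag_run_state(with_all_done, ["end"]))
print(with_default, dag_run_state(with_default, ["end"]))
```

Under this model, the only leaf task is `end`, so the failure of `special_task` is hidden as soon as `end` is allowed to run and succeed.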

How can I configure my DAG so that if one of the tasks failed, the whole DAG is marked as FAILED?

Example to reproduce

    import datetime

    from airflow import DAG
    from airflow.operators.bash_operator import BashOperator
    from airflow.utils import trigger_rule

    dag = DAG(
        dag_id='my_dag',
        start_date=datetime.datetime.today(),
        schedule_interval=None
    )

    start = BashOperator(
        task_id='start',
        bash_command='echo start',
        dag=dag
    )

    special_task = BashOperator(
        task_id='special_task',
        bash_command='exit 1',  # force failure
        dag=dag
    )

    end = BashOperator(
        task_id='end',
        bash_command='echo end',
        dag=dag
    )
    end.trigger_rule = trigger_rule.TriggerRule.ALL_DONE

    start.set_downstream(special_task)
    special_task.set_downstream(end)

This post seems to be related, but the answer does not suit my needs, since the downstream task end must be executed (hence the mandatory trigger_rule).

Comments:

[…] `on_failure_callback` to get notified about failed task. – Upside

[…] `on_failure_callback` to get notified, but I would like my DAG marked as `failed` in the Web UI. – Curren

[…] `special_task` I'd expect failure to propagate. It is more of a bandage than a solution though. – Upside