Solution Overview

Okay, I would approach the problem from multiple directions. There are some great suggestions here, and if I were you I would combine them into an ensemble (majority voting: predict the label agreed upon by more than 50% of the classifiers, which works naturally in your binary case).

I'm thinking about the following approaches:

- Active learning (example approach provided by me below)

- MediaWiki backlinks provided as an answer by @TavoGC

- SPARQL ancestral categories provided as a comment to your question by @Stanislav Kralin and/or parent categories provided by @Meena Nagarajan (those two could be an ensemble on their own based on their differences, but for that you would have to contact both creators and compare their results).

This way, two out of three approaches would have to agree that a certain concept is a medical one, which further reduces the chance of an error.
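The voting itself could be sketched roughly as follows. The function name and the per-method verdicts are made up for illustration; plug in whatever each of the three approaches returns for a concept.

```python
from typing import Dict, List, Sequence


def majority_vote(votes: Sequence[bool]) -> bool:
    """Return True when strictly more than half of the votes are True."""
    return sum(votes) * 2 > len(votes)


# Hypothetical verdicts for each concept, in the order:
# active learning, MediaWiki backlinks, SPARQL ancestral categories.
verdicts: Dict[str, List[bool]] = {
    "anesthetic drug": [True, True, False],
    "sport shoe": [True, False, False],
}

is_medical = {concept: majority_vote(v) for concept, v in verdicts.items()}
print(is_medical)
```

With three voters there can never be a draw, which is one reason to keep the number of approaches odd.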

While we're at it, I would argue against the approach presented by @anand_v.singh in this answer, because:

- the distance metric should not be Euclidean; cosine similarity is a much better metric (used by, e.g., spaCy) as it does not take the magnitude of the vectors into account (and it shouldn't, that's how word2vec and GloVe were trained)

- many artificial clusters would be created, if I understood correctly, while we only need two: medicine and non-medicine. Furthermore, the centroid of the medicine cluster is not centered on medicine itself. This poses additional problems: say the centroid is moved far away from medicine, then words like computer or human (or any other that, in your opinion, does not fit into medicine) might get into the cluster.

- it's hard to evaluate the results, even more so because the matter is strictly subjective. Furthermore, word vectors are hard to visualize and understand (casting them into lower dimensions [2D/3D] using PCA/t-SNE/similar for so many words would give us totally nonsensical results [yeah, I have tried it: PCA gets around 5% explained variance for your longer dataset, which is really, really low]).
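To illustrate the first point (cosine versus Euclidean), here is a small sketch with made-up vectors. It only uses the standard library; the helper names are mine, not from any embedding package.

```python
import math
from typing import Sequence


def cosine_similarity(a: Sequence[float], b: Sequence[float]) -> float:
    """Cosine of the angle between a and b; ignores vector magnitude."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


def euclidean_distance(a: Sequence[float], b: Sequence[float]) -> float:
    """Straight-line distance between a and b; sensitive to magnitude."""
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)))


v = [1.0, 2.0, 3.0]
scaled = [10.0 * x for x in v]  # same direction, ten times the magnitude

print(cosine_similarity(v, scaled))   # ~1.0: the direction is identical
print(euclidean_distance(v, scaled))  # roughly 33.7: magnitude dominates
```

Two vectors pointing in the same direction are treated as identical by cosine similarity, while the Euclidean distance between them grows with the difference in length.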

Based on the problems highlighted above, I have come up with a solution using active learning, which is a rather forgotten approach to such problems.

Active Learning approach

In this subfield of machine learning, when we have a hard time coming up with an exact algorithm (like deciding what it means for a term to be part of the medical category), we ask a human "expert" (who doesn't actually have to be an expert) to provide some answers.

Knowledge encoding

As anand_v.singh pointed out, word vectors are one of the most promising approaches, and I will use them here as well (differently though, and IMO in a much cleaner and easier fashion).

I'm not going to repeat his points in my answer, so I will add my two cents:

- Do not use contextualized word embeddings such as the currently available state of the art (e.g. BERT)

- Check how many of your concepts have no representation (e.g. are represented as a vector of zeros). It should be checked (and is checked in my code; there will be further discussion when the time comes), and you may use the embedding which has most of them present.
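The coverage check from the second point could look roughly like this. The two lookup tables are toy stand-ins with made-up values, and missing_concepts is a hypothetical helper; with a real embedding you would fill the dictionaries from the model you are evaluating.

```python
from typing import Dict, List, Sequence


def missing_concepts(
    concepts: Sequence[str], vectors: Dict[str, List[float]]
) -> List[str]:
    """Return concepts whose vector is absent or consists only of zeros."""
    missing = []
    for concept in concepts:
        vector = vectors.get(concept)
        if vector is None or not any(vector):
            missing.append(concept)
    return missing


# Toy lookup tables standing in for two real embeddings (made-up values).
embedding_a = {"medicine": [0.1, 0.3], "gpv": [0.0, 0.0]}
embedding_b = {"medicine": [0.2, 0.1], "gpv": [0.4, 0.2], "taq": [0.0, 0.0]}
concepts = ["medicine", "gpv", "taq"]

for name, table in [("a", embedding_a), ("b", embedding_b)]:
    missing = missing_concepts(concepts, table)
    coverage = 1 - len(missing) / len(concepts)
    print(f"embedding {name}: {coverage:.0%} covered, missing: {missing}")
```

The embedding with the fewest missing concepts would be the one to prefer.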

Measuring similarity using spaCy

This class measures similarity between medicine encoded as spaCy's GloVe word vector and every other concept.

    class Similarity:
        def __init__(self, centroid, nlp, n_threads: int, batch_size: int):
            # In our case it will be medicine
            self.centroid = centroid

            # spaCy's Language model (english), which will be used to return similarity to
            # centroid of each concept
            self.nlp = nlp
            self.n_threads: int = n_threads
            self.batch_size: int = batch_size

            self.missing: typing.List[int] = []

        def __call__(self, concepts):
            concepts_similarity = []
            # nlp.pipe is faster for many documents and can work in parallel (not blocked by GIL)
            for i, concept in enumerate(
                self.nlp.pipe(
                    concepts, n_threads=self.n_threads, batch_size=self.batch_size
                )
            ):
                if concept.has_vector:
                    concepts_similarity.append(self.centroid.similarity(concept))
                else:
                    # If document has no vector, it's assumed to be totally dissimilar to centroid
                    concepts_similarity.append(-1)
                    self.missing.append(i)

            return np.array(concepts_similarity)

This code will return a number for each concept measuring how similar it is to centroid. Furthermore, it records indices of concepts missing their representation. It might be called like this:

    import json
    import typing

    import numpy as np
    import spacy

    nlp = spacy.load("en_vectors_web_lg")

    centroid = nlp("medicine")

    concepts = json.load(open("concepts_new.txt"))
    concepts_similarity = Similarity(centroid, nlp, n_threads=-1, batch_size=4096)(
        concepts
    )

You may substitute your data in place of concepts_new.txt.

Look at spacy.load and notice I have used en_vectors_web_lg. It consists of 685.000 unique word vectors (which is a lot), and may work out of the box for your case. You have to download it separately after installing spaCy, more info provided in the links above.

Additionally, you may want to use multiple centroid words, e.g. add words like disease or health and average their word vectors. I'm not sure whether that would affect your case positively, though.
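Averaging several centroid words could be sketched like this. The three-dimensional vectors are made up for readability (real word vectors have hundreds of dimensions), and mean_vector is a hypothetical helper.

```python
from typing import List, Sequence


def mean_vector(vectors: Sequence[Sequence[float]]) -> List[float]:
    """Element-wise mean of equally sized vectors."""
    count = len(vectors)
    return [sum(components) / count for components in zip(*vectors)]


# Made-up word vectors for the centroid words.
word_vectors = {
    "medicine": [0.9, 0.1, 0.0],
    "disease": [0.7, 0.3, 0.2],
    "health": [0.8, 0.2, 0.1],
}

centroid_vector = mean_vector(
    [word_vectors[word] for word in ("medicine", "disease", "health")]
)
print(centroid_vector)
```

Since a spaCy Doc vector is the mean of its word vectors, passing the centroid words together as one text to nlp should give a comparable centroid without any extra code; I haven't compared the two on your data.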

Another possibility is to use multiple centroids and calculate the similarity between each concept and each of the centroids. We may have a few thresholds in such a case; this is likely to remove some false positives, but may miss some terms which one could consider to be similar to medicine. Furthermore, it would complicate things considerably, so consider the two options above only if your results are unsatisfactory; don't jump into them without previous thought.
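One way to read the multiple-centroids idea is to require a concept to clear a separate threshold for every centroid. The sketch below assumes exactly that rule; the vectors, thresholds and function names are all made up.

```python
import math
from typing import Dict, Sequence


def cosine(a: Sequence[float], b: Sequence[float]) -> float:
    """Cosine similarity between two non-zero vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    return dot / (math.hypot(*a) * math.hypot(*b))


def passes_all_centroids(
    concept_vector: Sequence[float],
    centroids: Dict[str, Sequence[float]],
    thresholds: Dict[str, float],
) -> bool:
    """True only if the concept is similar enough to every centroid."""
    return all(
        cosine(concept_vector, centroid) >= thresholds[name]
        for name, centroid in centroids.items()
    )


# Made-up 2D centroids and per-centroid thresholds.
centroids = {"medicine": [1.0, 0.0], "health": [0.8, 0.6]}
thresholds = {"medicine": 0.5, "health": 0.5}

print(passes_all_centroids([0.9, 0.3], centroids, thresholds))  # close to both
print(passes_all_centroids([0.0, 1.0], centroids, thresholds))  # close to health only
```

Requiring agreement from every centroid is the strict variant, which is where the missed terms come from; a vote across centroids would be the more lenient one.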

Now, we have a rough measure of concept's similarity. But what does it mean that a certain concept has 0.1 positive similarity to medicine? Is it a concept one should classify as medical? Or maybe that's too far away already?

Asking expert

To get a threshold (below it terms will be considered non medical), it's easiest to ask a human to classify some of the concepts for us (and that's what active learning is about). Yeah, I know it's a really simple form of active learning, but I would consider it such anyway.

I have written a class with an sklearn-like interface that asks a human to classify concepts until the optimal threshold (or the maximum number of iterations) is reached.

    class ActiveLearner:
        def __init__(
            self,
            concepts,
            concepts_similarity,
            max_steps: int,
            samples: int,
            step: float = 0.05,
            change_multiplier: float = 0.7,
        ):
            sorting_indices = np.argsort(-concepts_similarity)
            self.concepts = concepts[sorting_indices]
            self.concepts_similarity = concepts_similarity[sorting_indices]

            self.max_steps: int = max_steps
            self.samples: int = samples
            self.step: float = step
            self.change_multiplier: float = change_multiplier

            # We don't have to ask experts for the same concepts
            self._checked_concepts: typing.Set[int] = set()
            # Minimum similarity between vectors is -1
            self._min_threshold: float = -1
            # Maximum similarity between vectors is 1
            self._max_threshold: float = 1

            # Let's start from the highest similarity to ensure minimum amount of steps
            self.threshold_: float = 1

- samples argument describes how many examples will be shown to an expert during each iteration (it is the maximum, it will return less if samples were already asked for or there is not enough of them to show).

- step represents the drop of threshold (we start at 1 meaning perfect similarity) in each iteration.

- change_multiplier - if an expert answers concepts are not related (or mostly unrelated, as multiple of them are returned), step is multiplied by this floating point number. It is used to pinpoint exact threshold between step changes at each iteration.

- concepts are sorted based on their similarity (the more similar a concept is, the higher)
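To get a feel for how change_multiplier narrows the search, here is a tiny sketch that lists the step size after a few consecutive "no" answers, using the default values from the class (step=0.05, change_multiplier=0.7). The helper name is hypothetical and it is not part of the class.

```python
from typing import List


def shrinking_steps(
    step: float = 0.05, change_multiplier: float = 0.7, rejections: int = 4
) -> List[float]:
    """Step size before any rejection and after each consecutive 'no'."""
    steps = [step]
    for _ in range(rejections):
        step *= change_multiplier
        steps.append(step)
    return steps


print([round(s, 5) for s in shrinking_steps()])
```

Each rejection shrinks the step by 30%, so the threshold moves in smaller and smaller increments around the boundary.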

The function below asks the expert for an opinion, so that the optimal threshold can be found based on their answers.

    def _ask_expert(self, available_concepts_indices):
        # Get random concepts (the ones above the threshold)
        concepts_to_show = set(
            np.random.choice(
                available_concepts_indices, len(available_concepts_indices)
            ).tolist()
        )
        # Remove those already presented to an expert
        concepts_to_show = concepts_to_show - self._checked_concepts
        self._checked_concepts.update(concepts_to_show)
        # Print message for an expert and concepts to be classified
        if concepts_to_show:
            print("\nAre those concepts related to medicine?\n")
            print(
                "\n".join(
                    f"{i}. {concept}"
                    for i, concept in enumerate(
                        self.concepts[list(concepts_to_show)[: self.samples]]
                    )
                ),
                "\n",
            )
            return input("[y]es / [n]o / [any]quit ")
        return "y"

Example question looks like this:

    Are those concepts related to medicine?

    0. anesthetic drug
    1. child and adolescent psychiatry
    2. tertiary care center
    3. sex therapy
    4. drug design
    5. pain disorder
    6. psychiatric rehabilitation
    7. combined oral contraceptive
    8. family practitioner committee
    9. cancer family syndrome
    10. social psychology
    11. drug sale
    12. blood system

    [y]es / [n]o / [any]quit y

... parsing an answer from expert:

    # True - keep asking, False - stop the algorithm
    def _parse_expert_decision(self, decision) -> bool:
        if decision.lower() == "y":
            # You can't go higher as current threshold is related to medicine
            self._max_threshold = self.threshold_
            if self.threshold_ - self.step < self._min_threshold:
                return False
            # Lower the threshold
            self.threshold_ -= self.step
            return True
        if decision.lower() == "n":
            # You can't go lower than this, as current threshold is not related to medicine already
            self._min_threshold = self.threshold_
            # Shrink the step to pinpoint exact spot
            self.step *= self.change_multiplier
            if self.threshold_ + self.step < self._max_threshold:
                return False
            # Raise the threshold
            self.threshold_ += self.step
            return True
        return False

And finally, the whole code of ActiveLearner, which finds the optimal similarity threshold according to the expert:

    class ActiveLearner:
        def __init__(
            self,
            concepts,
            concepts_similarity,
            samples: int,
            max_steps: int,
            step: float = 0.05,
            change_multiplier: float = 0.7,
        ):
            sorting_indices = np.argsort(-concepts_similarity)
            self.concepts = concepts[sorting_indices]
            self.concepts_similarity = concepts_similarity[sorting_indices]

            self.samples: int = samples
            self.max_steps: int = max_steps
            self.step: float = step
            self.change_multiplier: float = change_multiplier

            # We don't have to ask experts for the same concepts
            self._checked_concepts: typing.Set[int] = set()
            # Minimum similarity between vectors is -1
            self._min_threshold: float = -1
            # Maximum similarity between vectors is 1
            self._max_threshold: float = 1

            # Let's start from the highest similarity to ensure minimum amount of steps
            self.threshold_: float = 1

        def _ask_expert(self, available_concepts_indices):
            # Get random concepts (the ones above the threshold)
            concepts_to_show = set(
                np.random.choice(
                    available_concepts_indices, len(available_concepts_indices)
                ).tolist()
            )
            # Remove those already presented to an expert
            concepts_to_show = concepts_to_show - self._checked_concepts
            self._checked_concepts.update(concepts_to_show)
            # Print message for an expert and concepts to be classified
            if concepts_to_show:
                print("\nAre those concepts related to medicine?\n")
                print(
                    "\n".join(
                        f"{i}. {concept}"
                        for i, concept in enumerate(
                            self.concepts[list(concepts_to_show)[: self.samples]]
                        )
                    ),
                    "\n",
                )
                return input("[y]es / [n]o / [any]quit ")
            return "y"

        # True - keep asking, False - stop the algorithm
        def _parse_expert_decision(self, decision) -> bool:
            if decision.lower() == "y":
                # You can't go higher as current threshold is related to medicine
                self._max_threshold = self.threshold_
                if self.threshold_ - self.step < self._min_threshold:
                    return False
                # Lower the threshold
                self.threshold_ -= self.step
                return True
            if decision.lower() == "n":
                # You can't go lower than this, as current threshold is not related to medicine already
                self._min_threshold = self.threshold_
                # Shrink the step to pinpoint exact spot
                self.step *= self.change_multiplier
                if self.threshold_ + self.step < self._max_threshold:
                    return False
                # Raise the threshold
                self.threshold_ += self.step
                return True
            return False

        def fit(self):
            for _ in range(self.max_steps):
                available_concepts_indices = np.nonzero(
                    self.concepts_similarity >= self.threshold_
                )[0]
                if available_concepts_indices.size != 0:
                    decision = self._ask_expert(available_concepts_indices)
                    if not self._parse_expert_decision(decision):
                        break
                else:
                    self.threshold_ -= self.step
            return self

All in all, you would have to answer some questions manually but this approach is way more accurate in my opinion.

Furthermore, you don't have to go through all of the samples, just a small subset of them. You may decide how many samples constitute a medical term (should a batch of 40 medical and 10 non-medical samples still be considered medical?), which lets you fine-tune this approach to your preferences. If there is an outlier (say, 1 sample out of 50 is non-medical), I would consider the threshold to still be valid.
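If you prefer to judge each shown concept separately instead of answering once for the whole batch, the y/n answer could be derived from the fraction of medical samples. This is a sketch of that idea, not part of the class above; batch_decision and min_medical_fraction are hypothetical names.

```python
from typing import Sequence


def batch_decision(labels: Sequence[bool], min_medical_fraction: float) -> str:
    """Turn per-concept judgments into the 'y'/'n' answer for a batch."""
    medical_fraction = sum(labels) / len(labels)
    return "y" if medical_fraction >= min_medical_fraction else "n"


# 40 medical and 10 non-medical samples, as in the question above.
mixed_batch = [True] * 40 + [False] * 10
# A single outlier among 50 samples.
outlier_batch = [True] * 49 + [False]

print(batch_decision(mixed_batch, min_medical_fraction=0.75))  # lenient expert
print(batch_decision(mixed_batch, min_medical_fraction=0.9))   # strict expert
print(batch_decision(outlier_batch, min_medical_fraction=0.9))
```

The required fraction is the knob that encodes your preferences: the same batch is accepted by the lenient setting and rejected by the strict one.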

Once again: this approach should be mixed with others in order to minimize the chance of a wrong classification.

Classifier

Once we obtain the threshold from the expert, classification is instantaneous. Here is a simple class for classification:

    class Classifier:
        def __init__(self, centroid, threshold: float):
            self.centroid = centroid
            self.threshold: float = threshold

        def predict(self, concepts_pipe):
            predictions = []
            for concept in concepts_pipe:
                predictions.append(self.centroid.similarity(concept) > self.threshold)
            return predictions

And for convenience, here is the final source code in one piece:

    import json
    import typing

    import numpy as np
    import spacy


    class Similarity:
        def __init__(self, centroid, nlp, n_threads: int, batch_size: int):
            # In our case it will be medicine
            self.centroid = centroid

            # spaCy's Language model (english), which will be used to return similarity to
            # centroid of each concept
            self.nlp = nlp
            self.n_threads: int = n_threads
            self.batch_size: int = batch_size

            self.missing: typing.List[int] = []

        def __call__(self, concepts):
            concepts_similarity = []
            # nlp.pipe is faster for many documents and can work in parallel (not blocked by GIL)
            for i, concept in enumerate(
                self.nlp.pipe(
                    concepts, n_threads=self.n_threads, batch_size=self.batch_size
                )
            ):
                if concept.has_vector:
                    concepts_similarity.append(self.centroid.similarity(concept))
                else:
                    # If document has no vector, it's assumed to be totally dissimilar to centroid
                    concepts_similarity.append(-1)
                    self.missing.append(i)

            return np.array(concepts_similarity)


    class ActiveLearner:
        def __init__(
            self,
            concepts,
            concepts_similarity,
            samples: int,
            max_steps: int,
            step: float = 0.05,
            change_multiplier: float = 0.7,
        ):
            sorting_indices = np.argsort(-concepts_similarity)
            self.concepts = concepts[sorting_indices]
            self.concepts_similarity = concepts_similarity[sorting_indices]

            self.samples: int = samples
            self.max_steps: int = max_steps
            self.step: float = step
            self.change_multiplier: float = change_multiplier

            # We don't have to ask experts for the same concepts
            self._checked_concepts: typing.Set[int] = set()
            # Minimum similarity between vectors is -1
            self._min_threshold: float = -1
            # Maximum similarity between vectors is 1
            self._max_threshold: float = 1

            # Let's start from the highest similarity to ensure minimum amount of steps
            self.threshold_: float = 1

        def _ask_expert(self, available_concepts_indices):
            # Get random concepts (the ones above the threshold)
            concepts_to_show = set(
                np.random.choice(
                    available_concepts_indices, len(available_concepts_indices)
                ).tolist()
            )
            # Remove those already presented to an expert
            concepts_to_show = concepts_to_show - self._checked_concepts
            self._checked_concepts.update(concepts_to_show)
            # Print message for an expert and concepts to be classified
            if concepts_to_show:
                print("\nAre those concepts related to medicine?\n")
                print(
                    "\n".join(
                        f"{i}. {concept}"
                        for i, concept in enumerate(
                            self.concepts[list(concepts_to_show)[: self.samples]]
                        )
                    ),
                    "\n",
                )
                return input("[y]es / [n]o / [any]quit ")
            return "y"

        # True - keep asking, False - stop the algorithm
        def _parse_expert_decision(self, decision) -> bool:
            if decision.lower() == "y":
                # You can't go higher as current threshold is related to medicine
                self._max_threshold = self.threshold_
                if self.threshold_ - self.step < self._min_threshold:
                    return False
                # Lower the threshold
                self.threshold_ -= self.step
                return True
            if decision.lower() == "n":
                # You can't go lower than this, as current threshold is not related to medicine already
                self._min_threshold = self.threshold_
                # Shrink the step to pinpoint exact spot
                self.step *= self.change_multiplier
                if self.threshold_ + self.step < self._max_threshold:
                    return False
                # Raise the threshold
                self.threshold_ += self.step
                return True
            return False

        def fit(self):
            for _ in range(self.max_steps):
                available_concepts_indices = np.nonzero(
                    self.concepts_similarity >= self.threshold_
                )[0]
                if available_concepts_indices.size != 0:
                    decision = self._ask_expert(available_concepts_indices)
                    if not self._parse_expert_decision(decision):
                        break
                else:
                    self.threshold_ -= self.step
            return self


    class Classifier:
        def __init__(self, centroid, threshold: float):
            self.centroid = centroid
            self.threshold: float = threshold

        def predict(self, concepts_pipe):
            predictions = []
            for concept in concepts_pipe:
                predictions.append(self.centroid.similarity(concept) > self.threshold)
            return predictions


    if __name__ == "__main__":
        nlp = spacy.load("en_vectors_web_lg")

        centroid = nlp("medicine")

        concepts = json.load(open("concepts_new.txt"))
        concepts_similarity = Similarity(centroid, nlp, n_threads=-1, batch_size=4096)(
            concepts
        )

        learner = ActiveLearner(
            np.array(concepts), concepts_similarity, samples=20, max_steps=50
        ).fit()
        print(f"Found threshold {learner.threshold_}\n")

        classifier = Classifier(centroid, learner.threshold_)
        pipe = nlp.pipe(concepts, n_threads=-1, batch_size=4096)
        predictions = classifier.predict(pipe)
        print(
            "\n".join(
                f"{concept}: {label}"
                for concept, label in zip(concepts[20:40], predictions[20:40])
            )
        )

After answering some questions, with threshold 0.1 (everything between [-1, 0.1) is considered non-medical, while [0.1, 1] is considered medical) I got the following results:

    kartagener s syndrome: True
    summer season: True
    taq: False
    atypical neuroleptic: True
    anterior cingulate: False
    acute respiratory distress syndrome: True
    circularity: False
    mutase: False
    adrenergic blocking drug: True
    systematic desensitization: True
    the turning point: True
    9l: False
    pyridazine: False
    bisoprolol: False
    trq: False
    propylhexedrine: False
    type 18: True
    darpp 32: False
    rickettsia conorii: False
    sport shoe: True

As you can see, this approach is far from perfect, so the last section describes possible improvements:

Possible improvements

As mentioned at the beginning, mixing my approach with the other answers would probably rule out ideas like sport shoe belonging to medicine, and the active learning approach would act more as a deciding vote in case of a draw between the two heuristics mentioned above.

We could create an active learning ensemble as well. Instead of one threshold, say 0.1, we would use multiple of them (either increasing or decreasing), let's say those are 0.1, 0.2, 0.3, 0.4, 0.5.

Let's say sport shoe gets, for each threshold, its respective True/False like this:

True True False False False

With majority voting we would mark it as non-medical by 3 votes to 2. Furthermore, a too strict threshold would be mitigated as well if the thresholds below it out-vote it (e.g. if the True/False pattern looked like this: True True True False False).
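The threshold ensemble could be sketched as below. The similarity values 0.25 and 0.35 are made up so that they reproduce the two vote patterns mentioned above; the function names are hypothetical.

```python
from typing import List, Sequence


def threshold_votes(similarity: float, thresholds: Sequence[float]) -> List[bool]:
    """One True/False vote per threshold."""
    return [similarity >= threshold for threshold in thresholds]


def is_medical(similarity: float, thresholds: Sequence[float]) -> bool:
    """Majority vote over the per-threshold decisions."""
    votes = threshold_votes(similarity, thresholds)
    return sum(votes) * 2 > len(votes)


thresholds = [0.1, 0.2, 0.3, 0.4, 0.5]

print(threshold_votes(0.25, thresholds), is_medical(0.25, thresholds))
print(threshold_votes(0.35, thresholds), is_medical(0.35, thresholds))
```

Keep in mind that when all votes come from one similarity score, this majority vote gives the same answer as comparing against the middle threshold (0.3 here), so it is worth checking whether the extra thresholds actually change anything on your data.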

A final possible improvement I came up with: in the code above I'm using the Doc vector, which is the mean of the word vectors making up the concept. Say one word is missing (its vector consists of zeros); in such a case, the concept would be pushed further away from the medicine centroid. You may not want that (as some niche medical terms [abbreviations like gpv or others] might be missing their representation); in that case you could average only those vectors which are different from zero.
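Averaging only the non-zero vectors could look roughly like this. It works on plain lists with made-up values; mean_of_nonzero is a hypothetical helper, and with spaCy you would feed it the vectors of the individual tokens of a concept.

```python
from typing import List, Optional, Sequence


def mean_of_nonzero(
    word_vectors: Sequence[Sequence[float]],
) -> Optional[List[float]]:
    """Mean of the vectors that are not all zeros, or None if none is left."""
    present = [vector for vector in word_vectors if any(vector)]
    if not present:
        return None
    return [sum(components) / len(present) for components in zip(*present)]


# A three-word concept whose middle word has no representation (made-up values).
concept_vectors = [[0.4, 0.2], [0.0, 0.0], [0.2, 0.6]]

print(mean_of_nonzero(concept_vectors))         # the zero vector is skipped
print(mean_of_nonzero([[0.0, 0.0]]))            # nothing left to average
```

Returning None for a concept with no known words keeps the "totally dissimilar" case explicit, so it can still be recorded as missing.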

I know this post is quite lengthy, so if you have any questions post them below.

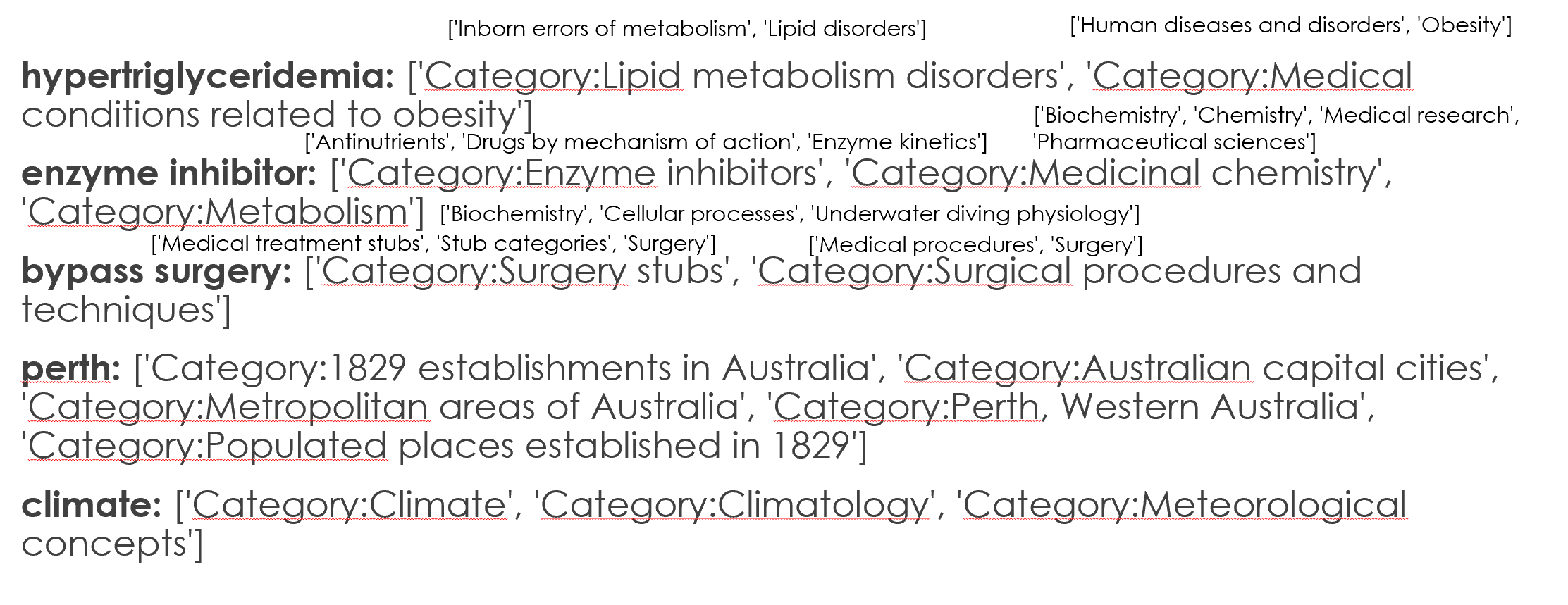

dbc, the issue maybe that I have preprocessed my data and all of them are lowercased and without symbols. Is there a way to get nearly equal concepts from dbc? For example given marine_oil, it returns the corresponding dbc concept of it without giving an error in the code? :) – Ori

dbc:-s are Wikipedia categories you have extracted. I've proposed to check if these categories have dbc:Medicine as an ancestral category. If more than, say, half of concept categories have dbc:Medicine as an ancestral category, you could consider this concept to be 'medical'. – Tevis

skos:broader{1,7}? :) – Ori

{m,n} are Virtuoso-specific extensions of SPARQL 1.1 property paths. You could also try "unqualified" skos:broader+ or skos:broader+. – Tevis

sparql.setQuery(" ASK { dbc:Lipid_metabolism_disorders "unqualified" skos:broader+ dbc:Medicine } ")? Please correct me if I am wrong :) – Ori

" ASK { dbc:Lipid_metabolism_disorders skos:broader+ dbc:Medicine } " – Tevis

+ denotes in skos:broder? :) – Ori

{1,7} is this mean that it recursively go upto 7 hierarchical levels? I still do not understand what is meant by +. In the link you have mentioned above it says A path that connects the subject and object of the path by one or more matches of elt.. What does this mean? :) – Ori

+ means one or more, * means zero or more. {1,7} means from one to seven hops, but supported only by Virtuoso SPARQL endpoint. – Tevis