Quick Answer:

- They are software constructs that follow the layout defined by the NIC hardware, so that both the driver and the NIC understand them and can talk to each other.

- They can be filled by either side, depending on the contract defined by the vendor. Possible scenarios include, but are not limited to:

  - By the driver (e.g. an empty buffer prepared by the driver for the hardware to receive into (Rx); a packet buffer prepared by the driver for the hardware to transmit (Tx))

  - By the NIC (e.g. a packet buffer written back by the hardware for a completed Rx packet; a finished Tx packet buffer that the hardware marks as transmitted)

More Architectural Details:

Note: I assume you are familiar with the ring data structure and the concept of DMA.

https://en.wikipedia.org/wiki/Circular_buffer

https://en.wikipedia.org/wiki/Direct_memory_access

A descriptor, as its name implies, describes a packet. It doesn't directly contain the packet data (for NICs, as far as I know), but rather describes the packet, i.e. where the packet bytes are stored, the length of the packet, etc.
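To make the "describes, doesn't contain" point concrete, here is a minimal sketch of a vendor-neutral descriptor. The `generic_desc` layout and the helper names are hypothetical (not any real NIC's format), and it stores a CPU pointer where real hardware would need a DMA address.

```c
#include <assert.h>
#include <stdint.h>
#include <string.h>

/* Hypothetical, vendor-neutral descriptor: it records WHERE the packet
 * bytes live and HOW long they are, but holds none of the bytes itself. */
struct generic_desc {
    uint64_t buf_addr;   /* address of the packet buffer */
    uint16_t length;     /* number of valid bytes in the buffer */
    uint16_t flags;      /* vendor-defined status/command bits */
    uint32_t reserved;   /* pads the layout to a fixed 16 bytes */
};

/* Point a descriptor at an existing buffer. A real NIC needs the buffer's
 * DMA (bus) address here, not a CPU pointer. */
static void describe(struct generic_desc *d, const void *buf, uint16_t len) {
    d->buf_addr = (uint64_t)(uintptr_t)buf;
    d->length = len;
    d->flags = 0;
    d->reserved = 0;
}

/* Follow the descriptor back to the packet bytes. */
static const uint8_t *desc_data(const struct generic_desc *d) {
    return (const uint8_t *)(uintptr_t)d->buf_addr;
}
```

The descriptor stays 16 bytes whether the packet is 64 bytes or 1500, which is what lets a NIC walk a compact array of them.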

I will use the RX path as an example to illustrate why descriptors are useful. Upon receiving a packet, the NIC translates the electrical/optical/radio signal on the wire into binary data. Then the NIC needs to inform the OS that it has received something. In the old days, this was done with interrupts, and the OS would copy the bytes from a pre-defined location on the NIC into RAM. However, this is slow because 1) the CPU has to take part in every data transfer from the NIC to RAM, and 2) there could be lots of packets, and thus lots of interrupts, which could be too many for the CPU to handle. Then DMA came along and solved the first problem. Also, people designed poll mode drivers (or hybrid modes, as in Linux NAPI), so that the CPU is freed from interrupt handling and can poll many packets at once, solving the second problem.
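The hybrid interrupt/poll idea can be sketched as follows. This is a toy model of the NAPI pattern in spirit, not the kernel's NAPI API; `fake_dev`, `rx_irq` and `rx_poll` are hypothetical names.

```c
#include <assert.h>
#include <stdbool.h>

/* Hypothetical device state, for illustration only (not a kernel API). */
struct fake_dev {
    int pending;        /* completed packets waiting in the RX ring */
    bool irq_enabled;   /* may the NIC raise an RX interrupt? */
};

/* First packet arrives: the interrupt handler masks further RX interrupts
 * and switches to polling instead of taking one interrupt per packet. */
static void rx_irq(struct fake_dev *dev) {
    dev->irq_enabled = false;
}

/* Process up to `budget` packets per call. If the ring drains before the
 * budget is used up, traffic is light, so fall back to interrupt mode. */
static int rx_poll(struct fake_dev *dev, int budget) {
    int done = 0;
    while (done < budget && dev->pending > 0) {
        dev->pending--;     /* stand-in for "hand one packet to the stack" */
        done++;
    }
    if (done < budget)
        dev->irq_enabled = true;
    return done;
}
```

With 100 packets pending and a budget of 64, the first poll handles 64 with interrupts still masked and the second handles the remaining 36 and re-enables them: one interrupt serves all 100 packets.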

Once the NIC has translated the signal into bytes, it wants to DMA them to RAM. But first it needs to know where to DMA to: it can't put data at arbitrary locations in RAM, because the CPU wouldn't know where to find it, and it wouldn't be secure.

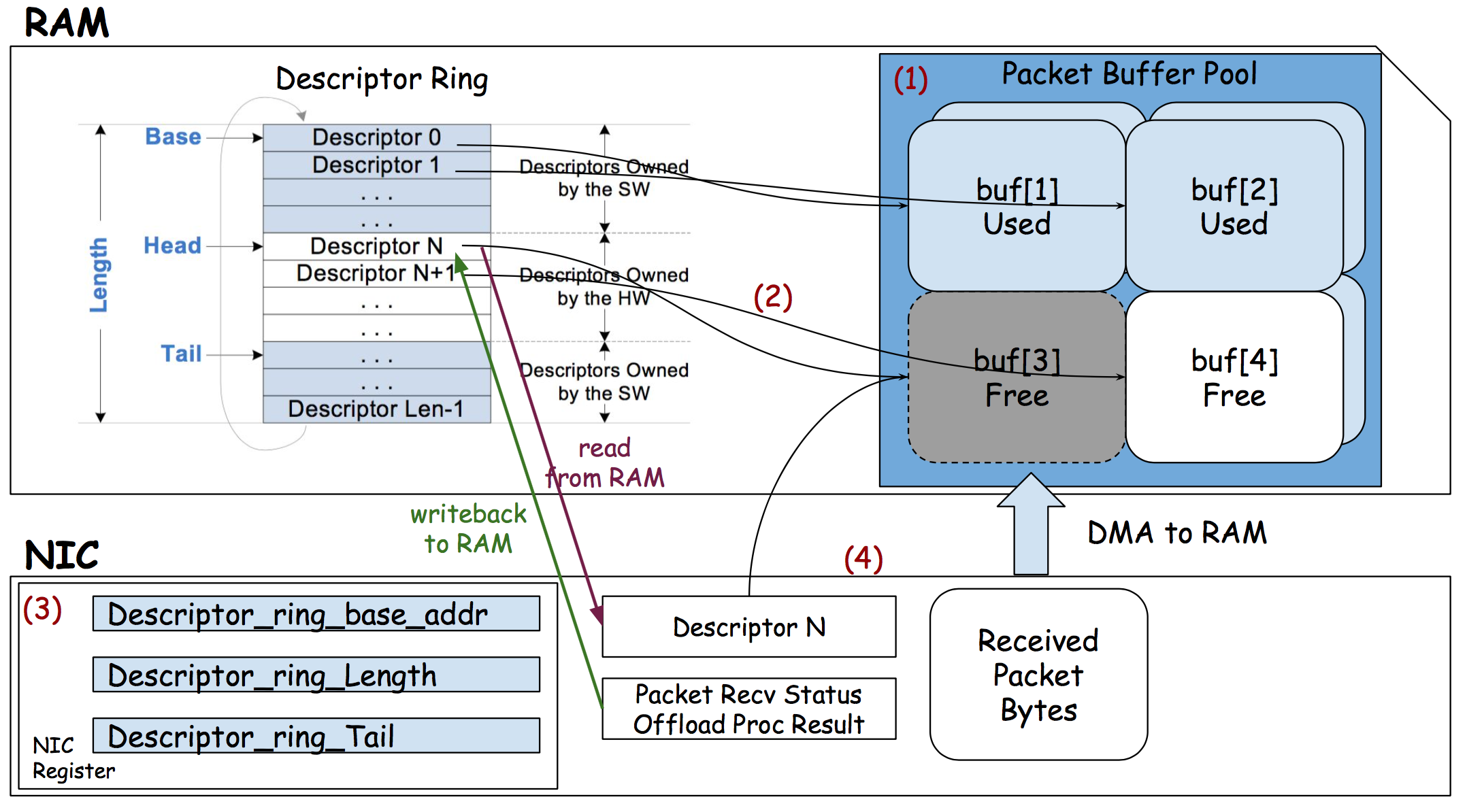

So during RX queue initialization, the NIC driver pre-allocates a set of packet buffers as well as an array of packet descriptors, and initializes each descriptor according to the NIC's definition.

Below is the convention used by the Intel XL710 NIC (names have been simplified for better understanding):

![XL710 RX read Descriptor]()

![XL710 Rx Writeback Descriptor]()

```c
#include <linux/types.h>   /* __le16, __le32, __le64 */

/*
 * The Rx descriptor used by XL710 is filled by both the driver and the NIC,
 * but at different stages of operation. To save space, it is therefore
 * defined as a union of the read format (written by the driver, read by
 * the NIC) and the writeback format (written by the NIC).
 *
 * It must follow the layout in the datasheet tables above.
 *
 * __leXX below means a little-endian XX-bit field. Endianness and width
 * have to be explicit, because the NIC can be used by CPUs with different
 * word sizes and endianness.
 */
union rx_desc {
    struct {
        __le64 pkt_addr; /* Packet buffer address, points to a free packet buffer in packet_buffer_pool */
        __le64 hdr_addr; /* Header buffer address, normally unused */
    } read; /* initialized by driver */
    struct {
        struct {
            struct {
                union {
                    __le16 mirroring_status;
                    __le16 fcoe_ctx_id;
                } mirr_fcoe;
                __le16 l2tag1;
            } lo_dword;
            union {
                __le32 rss;        /* RSS hash */
                __le32 fd_id;      /* Flow director filter id */
                __le32 fcoe_param; /* FCoE DDP context id */
            } hi_dword;
        } qword0;
        struct {
            /* ext status/error/pktype/length */
            __le64 status_error_len;
        } qword1;
    } wb; /* written back by NIC */
};
```
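Because `read` and `wb` share the same 16 bytes, the NIC's writeback overwrites the buffer address the driver wrote, which is why a driver has to remember each buffer's address elsewhere. A minimal sketch with plain fixed-width types so it compiles outside the kernel; it assumes a little-endian host (no byte swapping), drops the FCoE/flow-director alternatives, and `nic_writeback` is a hypothetical helper.

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>

/* Same shape as the XL710 16-byte RX descriptor above, simplified. */
union rx_desc {
    struct {
        uint64_t pkt_addr;
        uint64_t hdr_addr;
    } read;
    struct {
        struct {
            struct {
                uint16_t mirroring_status;
                uint16_t l2tag1;
            } lo_dword;
            uint32_t rss;
        } qword0;
        struct {
            uint64_t status_error_len;
        } qword1;
    } wb;
};

_Static_assert(sizeof(union rx_desc) == 16, "descriptor must be 16 bytes");
_Static_assert(offsetof(union rx_desc, wb.qword1) ==
               offsetof(union rx_desc, read.hdr_addr),
               "qword1 overlays hdr_addr");

/* Simulate the NIC writing back a completed descriptor. Every byte of the
 * read format is overwritten, including pkt_addr. */
static void nic_writeback(union rx_desc *d, uint32_t rss_hash, uint64_t status) {
    d->wb.qword0.lo_dword.mirroring_status = 0;
    d->wb.qword0.lo_dword.l2tag1 = 0;
    d->wb.qword0.rss = rss_hash;
    d->wb.qword1.status_error_len = status;
}
```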

```c
/*
 * An Rx queue holds a circular ring of Rx descriptors.
 */
struct rx_queue {
    volatile union rx_desc rx_ring[RING_SIZE];        /* RX ring of descriptors */
    struct packet_buffer_pool *pool;                   /* packet pool */
    struct packet_buffer *pkt_addr_backup[RING_SIZE];  /* copy of each descriptor's buffer address, since writeback overwrites it */
    /* ... */
};
```

![RX Descriptor Data Structure]()


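The driver allocates a number of packet buffers in RAM (stored in the packet_buffer_pool data structure):

```c
pool = alloc_packet_buffer_pool(2048 /* buffer_size */, 512 /* num_buffers */);
```

The driver then puts each packet buffer's address into the descriptor field. Note that the NIC needs the buffer's DMA (bus) address, not the CPU's virtual address, stored in little-endian order:

```c
for (i = 0; i < RING_SIZE; i++)
    rx_ring[i].read.pkt_addr = cpu_to_le64(get_free_buffer(pool)); /* DMA address of a free buffer */
```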
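A runnable user-space sketch of this initialization, including the backup array from the `rx_queue` struct. The names (`rx_queue_init`, `RING_SIZE` = 8, `BUF_SIZE` = 2048) are hypothetical; `malloc` stands in for DMA-capable memory and CPU pointers stand in for DMA addresses (a real driver would get those from the kernel's DMA mapping API).

```c
#include <assert.h>
#include <stdint.h>
#include <stdlib.h>

#define RING_SIZE 8      /* hypothetical ring size */
#define BUF_SIZE  2048   /* hypothetical buffer size */

/* Read format only; plain types, little-endian host assumed. */
struct rx_read_desc {
    uint64_t pkt_addr;
    uint64_t hdr_addr;
};

/* Hypothetical RX queue: descriptor ring plus a shadow array remembering
 * which buffer each descriptor points to (writeback will clobber it). */
struct rx_queue {
    struct rx_read_desc ring[RING_SIZE];
    void *pool;                       /* one contiguous block of buffers */
    void *pkt_addr_backup[RING_SIZE];
};

/* Allocate RING_SIZE buffers and point one descriptor at each.
 * Returns 0 on success, -1 if allocation fails. */
static int rx_queue_init(struct rx_queue *q) {
    q->pool = malloc((size_t)RING_SIZE * BUF_SIZE);
    if (q->pool == NULL)
        return -1;
    for (int i = 0; i < RING_SIZE; i++) {
        void *buf = (char *)q->pool + (size_t)i * BUF_SIZE;
        q->pkt_addr_backup[i] = buf;
        q->ring[i].pkt_addr = (uint64_t)(uintptr_t)buf;  /* real NIC: DMA address */
        q->ring[i].hdr_addr = 0;                         /* header split unused */
    }
    return 0;
}

static void rx_queue_free(struct rx_queue *q) {
    free(q->pool);
    q->pool = NULL;
}
```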

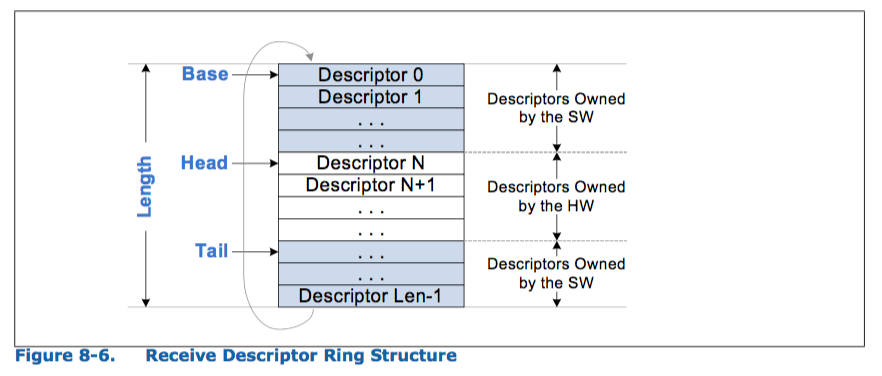

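The driver tells the NIC the starting location of rx_ring, its length, and its head/tail, so the NIC knows which descriptors (and thus which packet buffers pointed to by those descriptors) are free. The driver does this by writing the information into NIC registers at fixed offsets documented in the NIC datasheet. (The head/tail naming in this answer is generic; Intel's datasheets name them the other way round for RX, with hardware advancing the head and software writing the tail.)

```c
rx_ring_addr_reg = dma_addr_of(rx_ring);  /* bus address of the ring; dma_addr_of() is a placeholder */
rx_ring_len_reg = sizeof(rx_ring);        /* ring length in bytes */
rx_ring_head = 0;                         /* meaning all free at start */
/* rx_ring_tail is a register in the NIC, as the NIC updates it */
```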
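A sketch of the register programming step. The register block is hypothetical, loosely modeled on the 82599's per-queue base-low/base-high/length/head/tail registers; on real hardware the struct would overlay a mapped BAR (with the proper memory barriers), while here it is ordinary memory so the code runs anywhere.

```c
#include <assert.h>
#include <stdint.h>

/* Hypothetical MMIO register block for one RX queue. */
struct rx_queue_regs {
    volatile uint32_t base_lo;   /* ring DMA address, bits 31:0 */
    volatile uint32_t base_hi;   /* ring DMA address, bits 63:32 */
    volatile uint32_t len;       /* ring length in bytes */
    volatile uint32_t head;
    volatile uint32_t tail;
};

/* Tell the NIC where the ring lives and how big it is. The 64-bit ring
 * address is split across two 32-bit registers. */
static void program_rx_ring(struct rx_queue_regs *regs, uint64_t ring_dma,
                            uint32_t num_desc, uint32_t desc_size) {
    regs->base_lo = (uint32_t)(ring_dma & 0xFFFFFFFFu);
    regs->base_hi = (uint32_t)(ring_dma >> 32);
    regs->len = num_desc * desc_size;
    regs->head = 0;              /* initial positions */
    regs->tail = 0;
}
```

For a 512-entry ring of 16-byte descriptors at DMA address 0x123456000, this writes 0x23456000 and 0x1 into the two base registers and 8192 into the length register.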

Now the NIC knows that descriptors rx_ring[{x,y,z}] are free and that new packet data can be placed at {x,y,z}.pkt_addr. It goes ahead and DMAs new packets into {x,y,z}.pkt_addr. Meanwhile, the NIC can pre-process (offload) part of the packet processing (such as checksum validation or VLAN tag extraction), so it also needs somewhere to leave those results for software. Here, the descriptors are reused for this purpose on writeback (see the second struct in the descriptor union). Then the NIC advances the rx_ring tail offset, indicating that a new descriptor has been written back. [A gotcha here: since descriptors are reused for the pre-processing results, the driver has to save {x,y,z}.pkt_addr in a backup data structure.]

```c
/* Below is done in hardware; shown just for illustration */
if (free_descriptors > 0) {   /* ring not full: the driver has handed over free descriptors */
    copy(rx_ring[rx_ring_tail].read.pkt_addr, raw_packet_data);   /* DMA write */
    result = do_offload_processing(raw_packet_data);
    status = DESC_DONE | (packet_len << LENGTH_SHIFT);   /* placeholder names */
    if (result & BAD_CHECKSUM)
        status |= RX_BAD_CHECKSUM_ERROR;
    rx_ring[rx_ring_tail].wb.qword1.status_error_len = status;   /* overwrites the read format */
    rx_ring_tail = (rx_ring_tail + 1) % RING_SIZE;
    /* The NIC (not the driver) sets the Descriptor Done (DD) flag in the */
    /* writeback descriptor, so the driver can find new descriptors by    */
    /* checking DD instead of reading the tail register over PCIe.        */
}
```
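The hardware side can be simulated in plain C to see the writeback happen. The qword1 bit positions below (DD at bit 0, EOP at bit 1, errors from bit 19, a 14-bit length at bit 38) loosely follow the Linux i40e driver's layout but are assumptions here; check the datasheet for the real ones. `nic_rx` is hypothetical, and it tracks free descriptors with a count (`avail`) to avoid the "head == tail means full or empty?" ambiguity.

```c
#include <assert.h>
#include <stdint.h>
#include <string.h>

#define RING_SIZE 4

/* Illustrative qword1 layout (assumed, see above). */
#define RX_STATUS_DD        (1ULL << 0)   /* descriptor done */
#define RX_STATUS_EOP       (1ULL << 1)   /* end of packet */
#define RX_ERROR_SHIFT      19
#define RX_BAD_CHECKSUM_ERR (1ULL << (RX_ERROR_SHIFT + 4))
#define RX_LEN_SHIFT        38
#define RX_LEN_MASK         0x3FFFULL

union rx_desc {
    struct { uint64_t pkt_addr, hdr_addr; } read;
    struct { uint64_t qword0, status_error_len; } wb;
};

/* What the NIC does per received packet, written as C for illustration.
 * `tail` is the NIC's producer index; `avail` counts free descriptors the
 * driver has handed over. Returns 1 if stored, 0 if dropped. */
static int nic_rx(union rx_desc *ring, uint32_t *tail, uint32_t *avail,
                  const uint8_t *pkt, uint16_t len, int bad_checksum) {
    if (*avail == 0)
        return 0;                                   /* no free buffer: drop */
    union rx_desc *d = &ring[*tail];
    memcpy((void *)(uintptr_t)d->read.pkt_addr, pkt, len);  /* the "DMA" */
    uint64_t qw1 = RX_STATUS_DD | RX_STATUS_EOP |
                   (((uint64_t)len & RX_LEN_MASK) << RX_LEN_SHIFT);
    if (bad_checksum)
        qw1 |= RX_BAD_CHECKSUM_ERR;
    d->wb.qword0 = 0;                 /* e.g. RSS hash, VLAN tag; clobbers pkt_addr */
    d->wb.status_error_len = qw1;     /* clobbers hdr_addr */
    *tail = (*tail + 1) % RING_SIZE;
    (*avail)--;
    return 1;
}
```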

The driver reads the new tail offset and finds that {x,y,z} hold new packets. It reads each packet from pkt_addr_backup[{x,y,z}], along with the related pre-processing results.

When the upper layer software is done with the packets, {x,y,z} are put back into rx_ring and the ring head pointer is updated to indicate the free descriptors.
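The driver side of these two steps might look like this sketch: check the DD bit, take the length from the writeback, find the buffer through the backup array, and later restore the read format. The bit positions are assumptions (loosely i40e-like), and `driver_rx_poll` / `rx_recycle` are hypothetical names. Zeroing `hdr_addr` when recycling also clears DD, because `hdr_addr` and `status_error_len` share the descriptor's second 8 bytes.

```c
#include <assert.h>
#include <stdint.h>

#define RING_SIZE    4
#define RX_STATUS_DD (1ULL << 0)     /* assumed bit positions */
#define RX_LEN_SHIFT 38
#define RX_LEN_MASK  0x3FFFULL

union rx_desc {
    struct { uint64_t pkt_addr, hdr_addr; } read;
    struct { uint64_t qword0, status_error_len; } wb;
};

struct rx_queue {
    union rx_desc ring[RING_SIZE];
    void *pkt_addr_backup[RING_SIZE];  /* survives the writeback */
    uint32_t next_to_clean;            /* driver's consumer index */
};

/* Collect up to `budget` completed descriptors, in ring order.
 * Fills idx_out/lens_out and returns how many were found. */
static int driver_rx_poll(struct rx_queue *q, int budget,
                          uint32_t idx_out[], uint16_t lens_out[]) {
    int n = 0;
    while (n < budget) {
        uint32_t i = q->next_to_clean;
        uint64_t qw1 = q->ring[i].wb.status_error_len;
        if (!(qw1 & RX_STATUS_DD))
            break;                      /* NIC hasn't written this one yet */
        /* A real driver needs a read barrier here (e.g. dma_rmb() in Linux)
         * before trusting the other writeback fields. */
        idx_out[n] = i;                 /* packet bytes are at pkt_addr_backup[i] */
        lens_out[n] = (uint16_t)((qw1 >> RX_LEN_SHIFT) & RX_LEN_MASK);
        q->next_to_clean = (i + 1) % RING_SIZE;
        n++;
    }
    return n;
}

/* Called once the upper layer is done with slot i: restore the read
 * format so the NIC can reuse the buffer. A real driver would then update
 * the ring register so the NIC knows the descriptor is free again. */
static void rx_recycle(struct rx_queue *q, uint32_t i) {
    q->ring[i].read.pkt_addr = (uint64_t)(uintptr_t)q->pkt_addr_backup[i];
    q->ring[i].read.hdr_addr = 0;       /* also clears DD */
}
```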

This concludes the RX path. The TX path is pretty much the reverse: the upper layer produces a packet, and the driver copies the packet data into packet_buffer_pool and points tx_ring[x].buffer_addr at it. The driver also sets TX offload flags (such as hardware checksumming or TSO) in the TX descriptor. The NIC then reads the TX descriptor and DMAs the data at tx_ring[x].buffer_addr from RAM to the NIC.
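A minimal sketch of the TX side from the driver's point of view. The descriptor layout, the `TX_CMD_*`/`TX_STAT_DD` bits and the function names are all hypothetical rather than any vendor's format; the NIC's part (DMA-reading the buffer and marking the descriptor done) is only represented by setting the done bit by hand.

```c
#include <assert.h>
#include <stdint.h>
#include <string.h>

#define TX_RING_SIZE 4

/* Hypothetical command/status bits. */
#define TX_CMD_EOP  (1u << 0)   /* last buffer of the packet */
#define TX_CMD_CSUM (1u << 1)   /* ask the NIC to insert the L4 checksum */
#define TX_STAT_DD  (1u << 0)   /* set by the NIC once transmitted */

struct tx_desc {
    uint64_t buffer_addr;
    uint16_t length;
    uint8_t  cmd;      /* written by the driver */
    uint8_t  status;   /* written back by the NIC */
    uint32_t reserved;
};

struct tx_queue {
    struct tx_desc ring[TX_RING_SIZE];
    uint8_t bufs[TX_RING_SIZE][2048];  /* stands in for packet_buffer_pool */
    uint32_t next_to_use;              /* driver produces here */
    uint32_t next_to_clean;            /* driver reclaims completed slots here */
    uint32_t in_flight;
};

/* Copy the packet into a pool buffer and describe it.
 * Returns 0 on success, -1 if the ring is full or the packet too big. */
static int tx_send(struct tx_queue *q, const void *pkt, uint16_t len, int csum) {
    if (q->in_flight == TX_RING_SIZE || len > sizeof q->bufs[0])
        return -1;
    uint32_t i = q->next_to_use;
    memcpy(q->bufs[i], pkt, len);
    q->ring[i].buffer_addr = (uint64_t)(uintptr_t)q->bufs[i];  /* real NIC: DMA address */
    q->ring[i].length = len;
    q->ring[i].cmd = (uint8_t)(TX_CMD_EOP | (csum ? TX_CMD_CSUM : 0u));
    q->ring[i].status = 0;
    q->next_to_use = (i + 1) % TX_RING_SIZE;
    q->in_flight++;
    /* A real driver would now write the tail register to notify the NIC. */
    return 0;
}

/* Reclaim descriptors the NIC has marked done; returns how many. */
static uint32_t tx_clean(struct tx_queue *q) {
    uint32_t freed = 0;
    while (q->in_flight > 0 && (q->ring[q->next_to_clean].status & TX_STAT_DD)) {
        q->ring[q->next_to_clean].status = 0;
        q->next_to_clean = (q->next_to_clean + 1) % TX_RING_SIZE;
        q->in_flight--;
        freed++;
    }
    return freed;
}
```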

This information normally appears in the NIC datasheet, such as the Intel XL710 datasheet (xl710-10-40-controller-datasheet), Chapter 8.3 & 8.4, LAN RX/TX Data Path.

http://www.intel.com/content/www/us/en/embedded/products/networking/xl710-10-40-controller-datasheet.html

You can also check open source driver code (either the Linux kernel or a user-space library such as a DPDK PMD), which contains the descriptor struct definitions.

-- Edit 1 --

For your additional question regarding Realtek driver:

Yes, those bits are NIC-specific. A hint is a line like:

```c
desc->opts1 = cpu_to_le32(DescOwn | RingEnd | cp->rx_buf_sz);
```

DescOwn is a bit flag; setting it tells the NIC that it now owns this descriptor and its associated buffer. The value also has to be converted from CPU endianness (the CPU might be big-endian, e.g. POWER) to the little-endian format the NIC expects.
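Here is a sketch of handing an RX ring to the NIC with an ownership bit. The `DescOwn`/`RingEnd` values are as I read them in the Linux 8139cp.c driver (bits 31 and 30) and should be checked against the datasheet; `cp_desc_sketch`, `store_le32` and `give_rx_ring_to_nic` are hypothetical. `store_le32` is a portable stand-in for `cpu_to_le32()`: it writes bytes least-significant first, so the result is identical on big- and little-endian CPUs.

```c
#include <assert.h>
#include <stdint.h>

#define DescOwn (1u << 31)   /* 1 = NIC owns the descriptor */
#define RingEnd (1u << 30)   /* last descriptor: NIC wraps to the start */

#define RX_RING_SIZE 4

struct cp_desc_sketch {
    uint8_t opts1[4];    /* little-endian, whatever the CPU */
    uint8_t opts2[4];
    uint8_t addr[8];
};

/* Store v least significant byte first. */
static void store_le32(uint8_t out[4], uint32_t v) {
    for (int i = 0; i < 4; i++)
        out[i] = (uint8_t)(v >> (8 * i));
}

static uint32_t load_le32(const uint8_t in[4]) {
    return (uint32_t)in[0] | (uint32_t)in[1] << 8 |
           (uint32_t)in[2] << 16 | (uint32_t)in[3] << 24;
}

/* Give every RX descriptor to the NIC; only the last one gets RingEnd. */
static void give_rx_ring_to_nic(struct cp_desc_sketch ring[], uint32_t buf_sz) {
    for (int i = 0; i < RX_RING_SIZE; i++) {
        uint32_t opts1 = DescOwn | buf_sz;
        if (i == RX_RING_SIZE - 1)
            opts1 |= RingEnd;
        store_le32(ring[i].opts1, opts1);
    }
}
```

With a 1536-byte buffer size, opts1 of the first descriptor is 0x80000600, stored as the bytes 00 06 00 80, so DescOwn ends up in the last byte in memory.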

You can find the relevant info in http://realtek.info/pdf/rtl8139cp.pdf (e.g. page 70 for DescOwn). It is not as thorough as the XL710 datasheet, but it at least contains all the register/descriptor info.

-- Edit 2 --

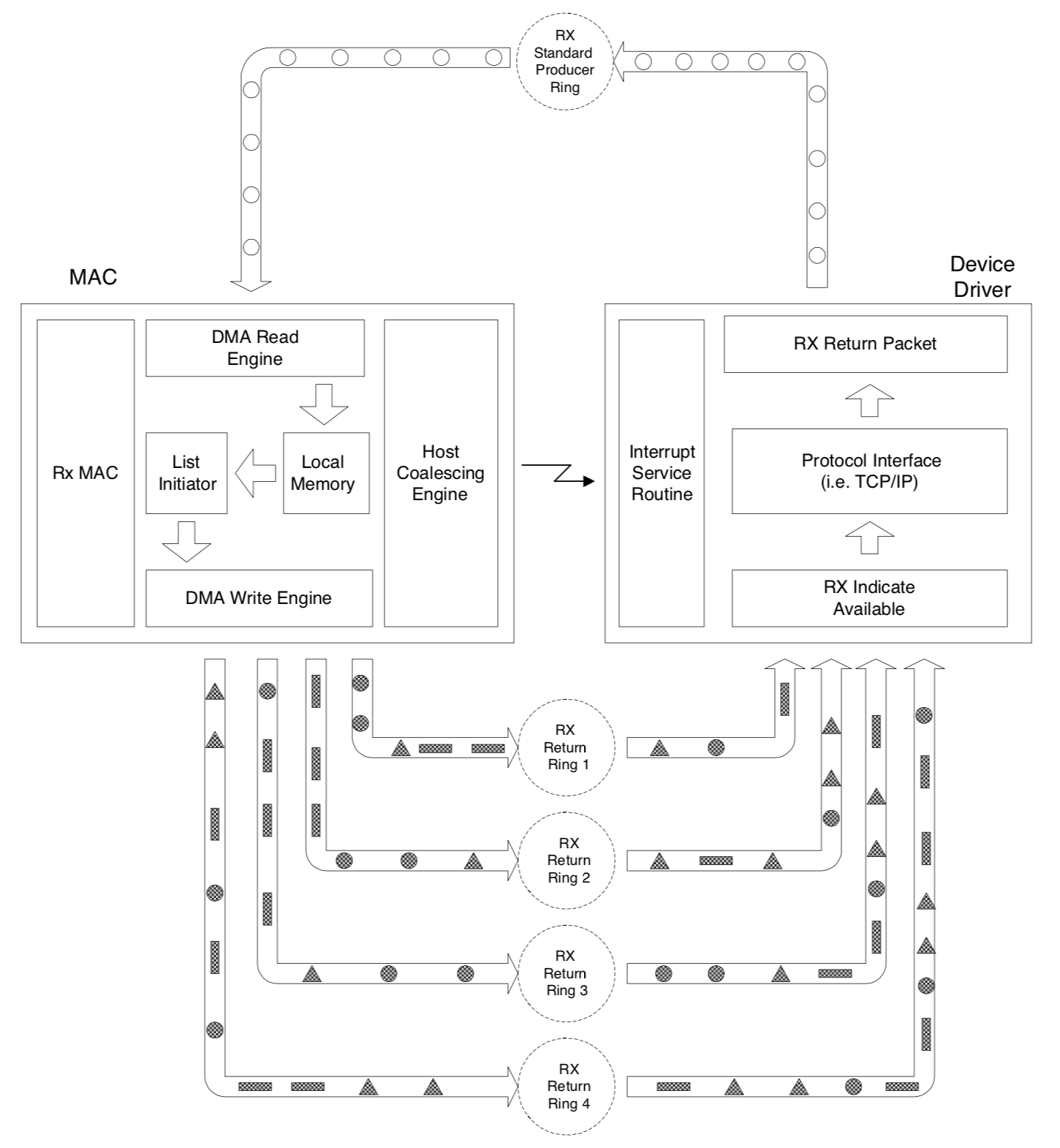

The NIC descriptor is a very vendor-dependent definition. As shown above, Intel's NICs use the same RX descriptor ring both to provide buffers for the NIC to write to and for the NIC to write back RX info. There are other implementations, such as split RX submission/completion queues (more prevalent in NVMe). For instance, some Broadcom NICs have a single submission ring (to give buffers to the NIC) and multiple completion rings. This lets the NIC decide which ring to put each packet in, e.g. by traffic class priority, so that the driver can process the most important packets first.
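A generic sketch of the split design: one submission ring of empty buffers, and one completion ring per priority that the driver drains highest-priority first. The two-level priority scheme and all names are hypothetical; this is not Broadcom's actual descriptor format.

```c
#include <assert.h>
#include <stdint.h>

#define NUM_PRIO  2     /* hypothetical: 0 = high, 1 = low priority */
#define RING_SIZE 8

struct completion {
    uint32_t buf_id;    /* which posted buffer the NIC filled */
    uint16_t length;
};

struct cq {
    struct completion entries[RING_SIZE];
    uint32_t head, tail;               /* consumer, producer */
};

struct split_rx {
    uint32_t submit[RING_SIZE];        /* buffer ids posted to the NIC */
    uint32_t submit_head, submit_tail;
    struct cq cq[NUM_PRIO];
};

/* Driver posts an empty buffer to the single submission ring. */
static int post_buffer(struct split_rx *rx, uint32_t buf_id) {
    if (rx->submit_tail - rx->submit_head == RING_SIZE)
        return -1;                     /* submission ring full */
    rx->submit[rx->submit_tail % RING_SIZE] = buf_id;
    rx->submit_tail++;
    return 0;
}

/* NIC: take the next posted buffer, fill it, and report the completion on
 * the ring matching the packet's priority. Returns 0 if it must drop. */
static int nic_receive(struct split_rx *rx, int prio, uint16_t len) {
    struct cq *c = &rx->cq[prio];
    if (rx->submit_head == rx->submit_tail || c->tail - c->head == RING_SIZE)
        return 0;                      /* no buffer posted, or completion ring full */
    uint32_t buf = rx->submit[rx->submit_head % RING_SIZE];
    rx->submit_head++;
    c->entries[c->tail % RING_SIZE] = (struct completion){ buf, len };
    c->tail++;
    return 1;
}

/* Driver: always drain the highest-priority completion ring first. */
static int driver_next(struct split_rx *rx, struct completion *out) {
    for (int p = 0; p < NUM_PRIO; p++) {
        struct cq *c = &rx->cq[p];
        if (c->head != c->tail) {
            *out = c->entries[c->head % RING_SIZE];
            c->head++;
            return 1;
        }
    }
    return 0;
}
```

Even though the low-priority packet arrived first, the driver sees the high-priority completion first; the buffer id in each completion tells it which posted buffer holds the data.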

![Figure from the BCM5756M NIC Programmer’s Guide]()

-- Edit 3 --

Intel usually makes its NIC datasheets publicly downloadable, while other vendors might disclose them to ODMs only. A very brief summary of the Tx/Rx flow is given in the Intel 82599 family datasheet, section 1.8 Architecture and Basic Operations.