I am working on an OCR system. One challenge I'm facing when recognizing text within the ROI is blur caused by camera shake or motion, or text that is out of focus because of the shooting angle. Please consider the following demo sample:

If you look at the text (for example, the parts marked in red), the OCR system can't properly recognize it in such cases. However, the same problem can also occur in shots taken without any angle, where the image is so blurry that the OCR system recognizes the text only partially or not at all. Sometimes the images are blurry, and sometimes they are very low resolution or pixelated. For example:

Methods we've tried

First, we tried various methods available on SO, but sadly had no luck.

- How to improve image quality to extract text from image using Tesseract

- How to improve image quality? [closed]

- Image quality improvement in Opencv

Next, we tried the following three methods, which looked the most promising.

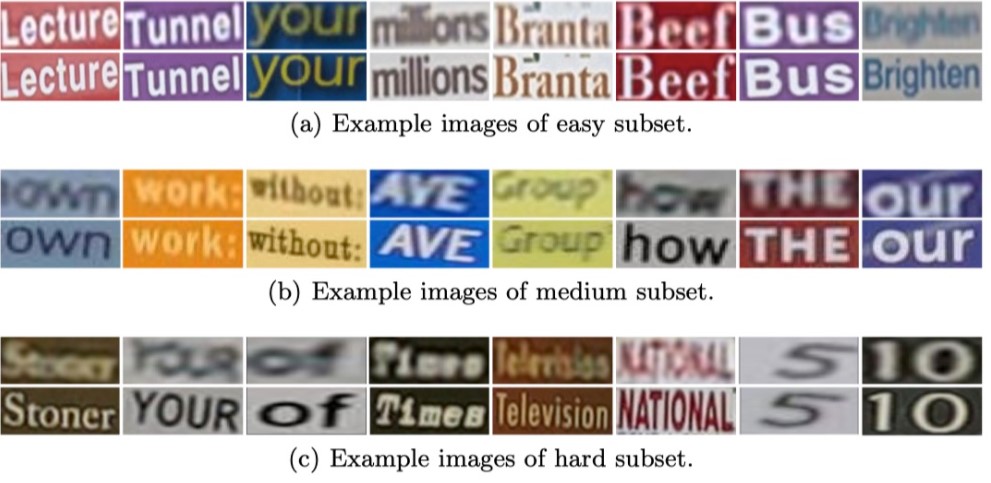

1. TSRN

A recent research work (TSRN) focuses mainly on such cases. Its main idea is to introduce super-resolution (SR) techniques as a pre-processing step. This implementation looks by far the most promising. However, it fails to work its magic on our custom dataset (for example, the blue text in the second image above). Here are some examples from their demonstration:

2. Neural Enhance

After looking at the illustrations on its page, we believed it might work, but sadly it also couldn't address the problem. I was also a bit confused by the examples they show, because I couldn't reproduce them either. I've raised an issue on GitHub where I demonstrate this in more detail. Here are some examples from their demonstration:

3. ISR

This implementation was our last choice, and we tried it with minimal hope. No luck either.

Update 1

[Method]: Apart from the above, we also tried some traditional approaches such as the Out-of-focus Deblur Filter (Wiener filter and also the unsupervised Wiener filter). We also checked the Richardson-Lucy method, but saw no improvement with these approaches either.
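
For readers unfamiliar with this classical approach, here is a minimal NumPy-only sketch of frequency-domain Wiener deconvolution. It is an illustration only, not the exact code or settings we ran: the horizontal motion-blur PSF, its length, the `balance` regularization value, and the helper names (`motion_psf`, `pad_psf`, `blur`, `wiener_deconvolve`) are all assumptions made for this example. It also assumes the blur kernel is known, which is rarely true for real photos.

```python
import numpy as np

def motion_psf(length=9):
    """Horizontal motion-blur kernel (hypothetical blur model for this sketch)."""
    psf = np.zeros((length, length))
    psf[length // 2, :] = 1.0
    return psf / psf.sum()

def pad_psf(psf, shape):
    """Zero-pad the PSF to the image shape and shift its centre to index (0, 0)."""
    padded = np.zeros(shape)
    h, w = psf.shape
    padded[:h, :w] = psf
    return np.roll(padded, (-(h // 2), -(w // 2)), axis=(0, 1))

def blur(image, psf):
    """Simulate blur as a circular convolution computed with the FFT."""
    H = np.fft.fft2(pad_psf(psf, image.shape))
    return np.real(np.fft.ifft2(np.fft.fft2(image) * H))

def wiener_deconvolve(image, psf, balance=1e-3):
    """Wiener deconvolution: F = conj(H) * G / (|H|^2 + balance).

    `balance` stands in for the noise-to-signal ratio (assumed constant here).
    """
    H = np.fft.fft2(pad_psf(psf, image.shape))
    G = np.fft.fft2(image)
    F = np.conj(H) * G / (np.abs(H) ** 2 + balance)
    return np.real(np.fft.ifft2(F))

if __name__ == "__main__":
    # Synthetic "stroke" standing in for a piece of text.
    sharp = np.zeros((64, 64))
    sharp[20:44, 28:36] = 1.0
    psf = motion_psf(9)
    blurred = blur(sharp, psf)
    restored = wiener_deconvolve(blurred, psf, balance=1e-4)
    print("MSE blurred :", np.mean((blurred - sharp) ** 2))
    print("MSE restored:", np.mean((restored - sharp) ** 2))
```

On this synthetic, noise-free example the kernel is known exactly, so the restoration works well. On real photos the kernel has to be guessed or estimated, and noise forces a larger `balance`, which is one plausible reason this family of filters gave no improvement in our case.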

[Method]: We've checked out a GAN-based deblurring solution, DeblurGAN. I have tried this network; what attracted me was its blind motion deblurring mechanism.

Lastly, from this discussion we came across this research work, which seems quite good. We haven't tried it yet.

Update 2

[Method]: Real-World Super-Resolution via Kernel Estimation and Noise Injection. We tried this method. It looked promising but didn't work in our case. Code.

[Method]: Photo Restoration. Compared to all the methods above, it surprisingly performs the best at text super-resolution for OCR. It greatly reduces noise, blurriness, etc., makes the image much clearer, and helps the model generalize better. Code.

My Query

Is there any effective workaround to tackle such cases? Are there any methods that could improve such blurry or low-resolution text, whether the text is near or far away due to the camera angle?