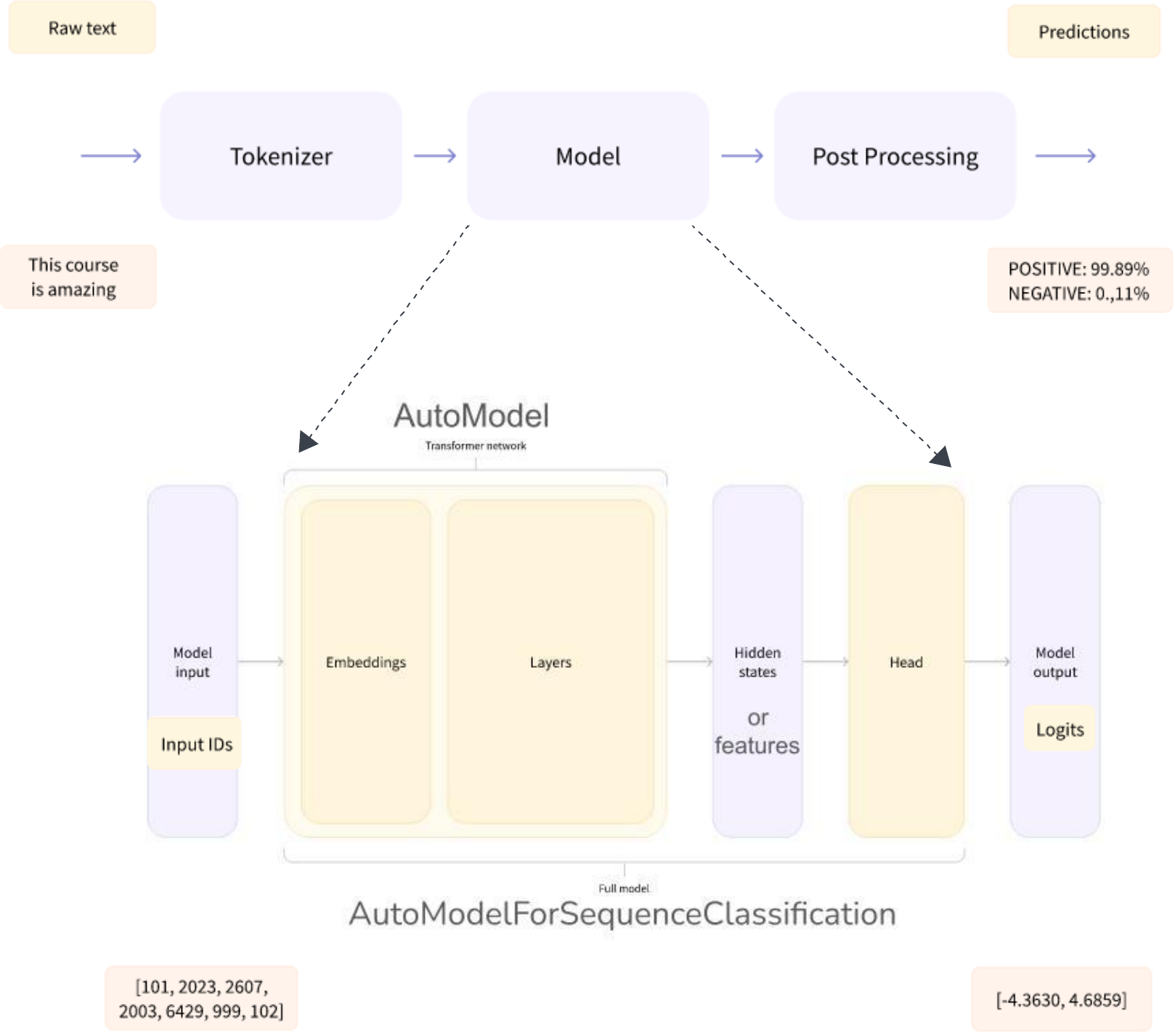

We can create a model with the `AutoModel` (or `TFAutoModel`) class:

```python
from transformers import AutoModel

model = AutoModel.from_pretrained('distilbert-base-uncased')
```
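To see what the bare model returns, here is a minimal sketch, assuming `transformers` and PyTorch are installed. To avoid a download it builds a tiny, randomly initialized DistilBERT from a hand-picked `DistilBertConfig` via `AutoModel.from_config` instead of `from_pretrained`; the layer sizes and token ids are arbitrary illustrations, not the real checkpoint's values.

```python
import torch
from transformers import AutoModel, DistilBertConfig

# Tiny, randomly initialized config (an assumption, so nothing is downloaded).
# Real code would use AutoModel.from_pretrained('distilbert-base-uncased').
config = DistilBertConfig(n_layers=2, n_heads=2, dim=32, hidden_dim=64)
model = AutoModel.from_config(config)
model.eval()  # disable dropout for a deterministic forward pass

# Hypothetical batch: one sequence of 4 token ids (all below config.vocab_size).
input_ids = torch.tensor([[101, 7592, 2088, 102]])

with torch.no_grad():
    outputs = model(input_ids=input_ids)

# The bare model returns one hidden-state vector per token: (batch, seq_len, dim).
hidden = outputs.last_hidden_state
print(hidden.shape)  # torch.Size([1, 4, 32])
```

With the real `distilbert-base-uncased` checkpoint the last dimension would be 768 instead of 32. These per-token vectors are features, not class predictions.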

On the other hand, a model can also be created with `AutoModelForSequenceClassification` (or `TFAutoModelForSequenceClassification`):

```python
from transformers import AutoModelForSequenceClassification

model = AutoModelForSequenceClassification.from_pretrained('distilbert-base-uncased')
```
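A comparable sketch for the sequence-classification class, under the same assumptions (a tiny random `DistilBertConfig`, `from_config` instead of `from_pretrained`, made-up token ids). The binary `num_labels=2` and the gold label `1` are hypothetical choices for illustration.

```python
import torch
from transformers import AutoModelForSequenceClassification, DistilBertConfig

# Tiny random config; num_labels=2 assumes a binary task (hypothetical).
config = DistilBertConfig(n_layers=2, n_heads=2, dim=32, hidden_dim=64, num_labels=2)
model = AutoModelForSequenceClassification.from_config(config)
model.eval()  # disable dropout

input_ids = torch.tensor([[101, 7592, 2088, 102]])
labels = torch.tensor([1])  # hypothetical gold label for the single example

with torch.no_grad():
    outputs = model(input_ids=input_ids, labels=labels)

# One score per class for the whole sequence: (batch, num_labels).
logits = outputs.logits
# Because labels were passed, a scalar training loss is returned as well.
loss = outputs.loss
print(logits.shape)  # torch.Size([1, 2])
```

For integer labels with `num_labels > 1`, the returned loss is a cross-entropy loss, which is what a fine-tuning loop would minimize. Without `labels`, `outputs.loss` is `None`.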

As far as I know, both models are created from the same `distilbert-base-uncased` checkpoint. Judging by its name, the second class (`AutoModelForSequenceClassification`) is meant for sequence classification.
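One way to see the structural difference is to instantiate both classes from the same config and inspect them. This is again a sketch with a tiny, randomly initialized config (an assumption, to avoid downloads); `distilbert`, `pre_classifier`, and `classifier` are the attribute names in transformers' DistilBERT implementation, and other architectures name their heads differently. `num_labels=3` is an arbitrary choice.

```python
from transformers import AutoModel, AutoModelForSequenceClassification, DistilBertConfig

# Same tiny random config for both classes; num_labels=3 is arbitrary.
config = DistilBertConfig(n_layers=2, n_heads=2, dim=32, hidden_dim=64, num_labels=3)
base = AutoModel.from_config(config)
clf = AutoModelForSequenceClassification.from_config(config)

print(type(base).__name__)  # DistilBertModel
print(type(clf).__name__)   # DistilBertForSequenceClassification

# The classifier contains the same base architecture...
print(type(clf.distilbert).__name__)  # DistilBertModel

# ...plus a small head applied to the first token's hidden state.
print(clf.pre_classifier.in_features, clf.pre_classifier.out_features)  # 32 32
print(clf.classifier.in_features, clf.classifier.out_features)          # 32 3
```

When `AutoModelForSequenceClassification.from_pretrained('distilbert-base-uncased')` is used, the base weights come from the checkpoint but this head is newly initialized (transformers usually logs a warning about it), so that model needs fine-tuning before its predictions are meaningful.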

But what are the real differences between the two classes? And how should each one be used correctly?

(I searched the Hugging Face documentation, but it is not clear.)