I am trying to get audio capture from the microphone working in Safari on iOS 11, now that support has recently been added.

However, the onaudioprocess callback is never called. Here's an example page:

<html>
<body>
  <button onclick="doIt()">DoIt</button>
  <ul id="logMessages">
  </ul>
  <script>
    // Append a message to the on-page log list.
    function debug(msg) {
      if (typeof msg !== 'undefined') {
        var logList = document.getElementById('logMessages');
        var newLogItem = document.createElement('li');
        if (typeof msg === 'function') {
          // Use .call so the source of msg itself is printed
          msg = Function.prototype.toString.call(msg);
        } else if (typeof msg !== 'string') {
          msg = JSON.stringify(msg);
        }
        var newLogText = document.createTextNode(msg);
        newLogItem.appendChild(newLogText);
        logList.appendChild(newLogItem);
      }
    }

    function doIt() {
      // Runs once the user has granted microphone access.
      var handleSuccess = function (stream) {
        var context = new AudioContext();
        var input = context.createMediaStreamSource(stream);
        var processor = context.createScriptProcessor(1024, 1, 1);
        input.connect(processor);
        processor.connect(context.destination);
        processor.onaudioprocess = function (e) {
          // Do something with the data, e.g. convert it to WAV
          debug(e.inputBuffer);
        };
      };
      navigator.mediaDevices.getUserMedia({audio: true, video: false})
        .then(handleSuccess);
    }
  </script>
</body>
</html>

On most platforms, you will see items being added to the messages list as the onaudioprocess callback is called. However, on iOS, this callback is never called.

Is there something else that I should do to try and get it called on iOS 11 with Safari?

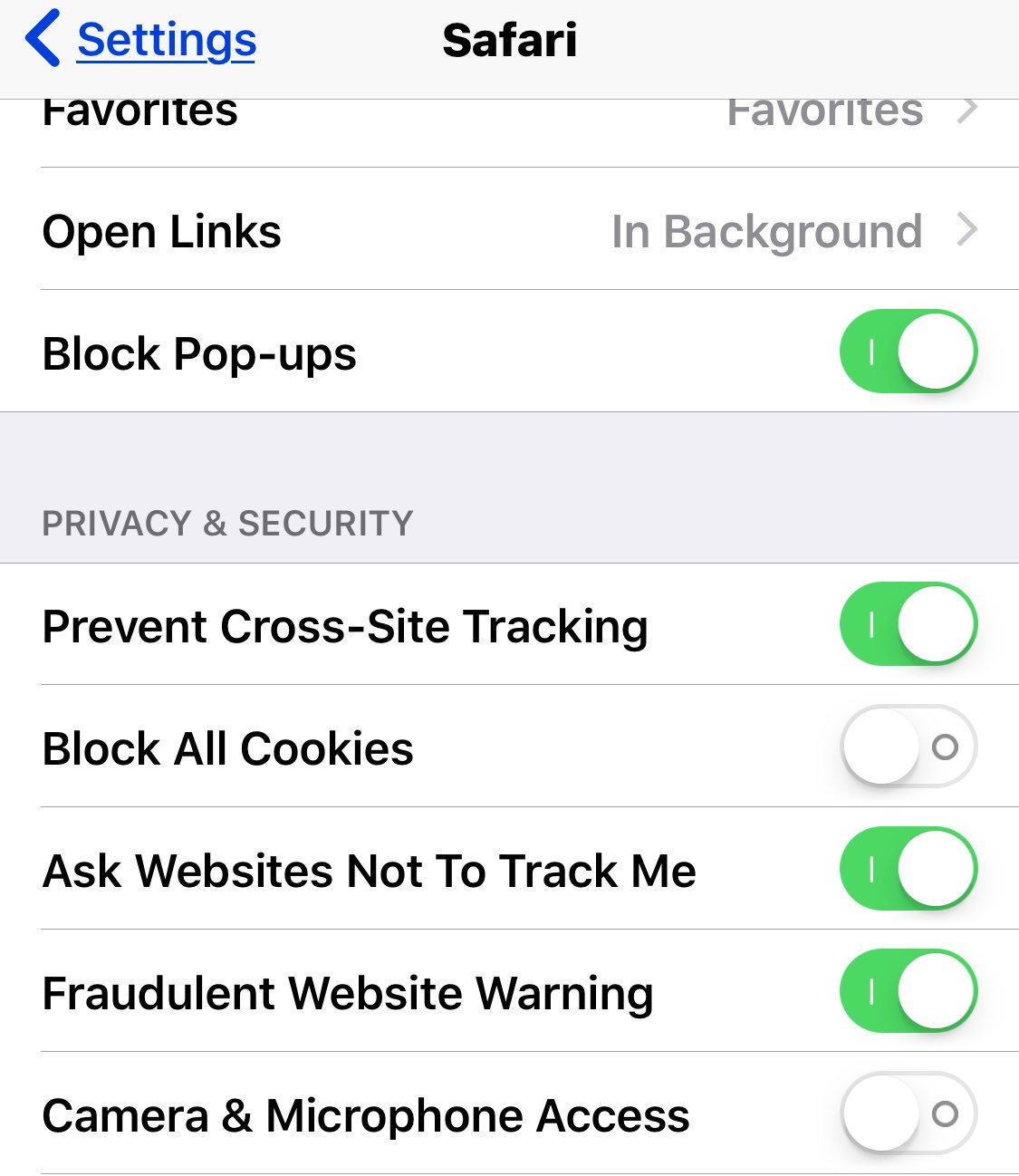

webkitAudioContext() or else call .resume() on it in direct response to a tap, instead of when you get the stream. See my answer below for a fully working version of your code. – Snowdrift
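
For illustration, here is a minimal sketch of what the comment above seems to suggest: create the context synchronously inside the tap handler, fall back to the prefixed `webkitAudioContext` constructor, and call `.resume()` if the context starts out suspended. The helper names `pickAudioContextCtor` and `createContextOnTap` are hypothetical and not part of the original post, and this is not the "fully working version" the comment refers to. The global object is passed in as a parameter only so the logic can be exercised outside a browser.

```javascript
// Hypothetical helper (not from the original post): choose whichever
// AudioContext constructor the given global object exposes, preferring
// the unprefixed one and falling back to the webkit-prefixed one.
function pickAudioContextCtor(globalObj) {
  return globalObj.AudioContext || globalObj.webkitAudioContext || null;
}

// Hypothetical helper: meant to be called synchronously from a tap or
// click handler, not from the getUserMedia promise callback.
function createContextOnTap(globalObj) {
  var Ctor = pickAudioContextCtor(globalObj);
  if (!Ctor) {
    throw new Error('Web Audio API is not available');
  }
  var context = new Ctor();
  // Assumption: a context that starts suspended needs an explicit resume()
  // issued while the user gesture is still being handled.
  if (context.state === 'suspended' && typeof context.resume === 'function') {
    context.resume();
  }
  return context;
}

// Browser usage, assuming doIt() is the button's click handler:
//   function doIt() {
//     var context = createContextOnTap(window);
//     navigator.mediaDevices.getUserMedia({audio: true, video: false})
//       .then(function (stream) {
//         var input = context.createMediaStreamSource(stream);
//         /* connect the processor nodes here, using the same context */
//       });
//   }
```

The point of the sketch is ordering: the context exists (and is resumed) before the stream arrives, so the stream callback only wires up nodes.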