Lets start with three arrays of dtype=np.double. Timings are performed on a intel CPU using numpy 1.7.1 compiled with icc and linked to intel's mkl. A AMD cpu with numpy 1.6.1 compiled with gcc without mkl was also used to verify the timings. Please note the timings scale nearly linearly with system size and are not due to the small overhead incurred in the numpy functions if statements these difference will show up in microseconds not milliseconds:

arr_1D=np.arange(500,dtype=np.double)

large_arr_1D=np.arange(100000,dtype=np.double)

arr_2D=np.arange(500**2,dtype=np.double).reshape(500,500)

arr_3D=np.arange(500**3,dtype=np.double).reshape(500,500,500)

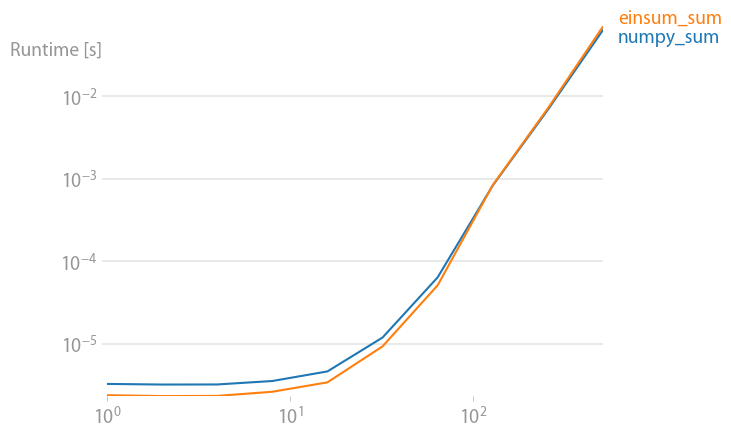

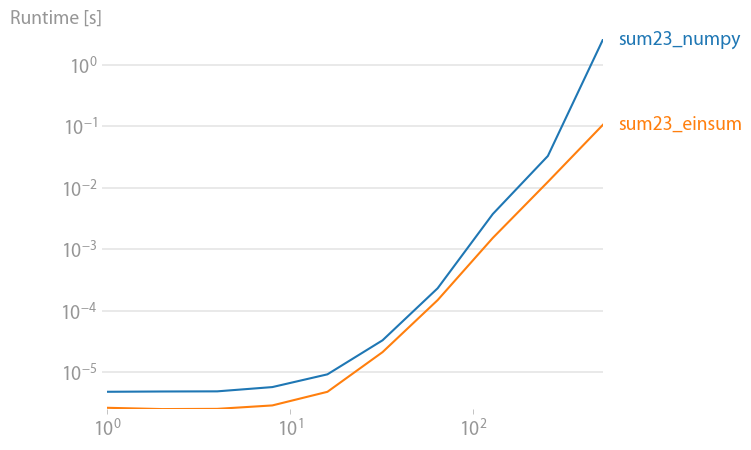

First lets look at the np.sum function:

np.all(np.sum(arr_3D)==np.einsum('ijk->',arr_3D))

True

%timeit np.sum(arr_3D)

10 loops, best of 3: 142 ms per loop

%timeit np.einsum('ijk->', arr_3D)

10 loops, best of 3: 70.2 ms per loop

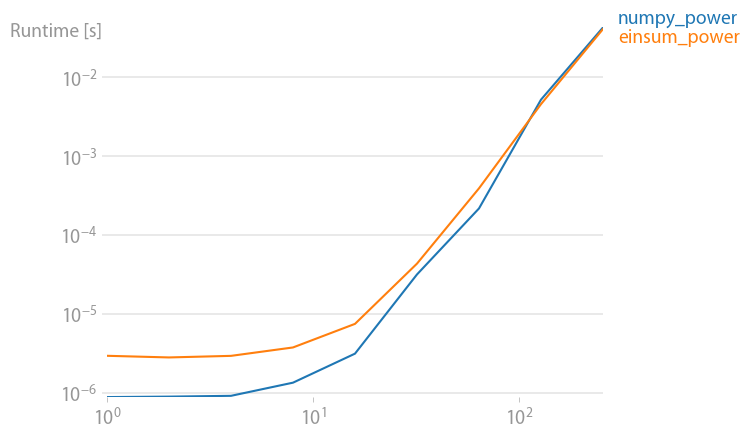

Powers:

np.allclose(arr_3D*arr_3D*arr_3D,np.einsum('ijk,ijk,ijk->ijk',arr_3D,arr_3D,arr_3D))

True

%timeit arr_3D*arr_3D*arr_3D

1 loops, best of 3: 1.32 s per loop

%timeit np.einsum('ijk,ijk,ijk->ijk', arr_3D, arr_3D, arr_3D)

1 loops, best of 3: 694 ms per loop

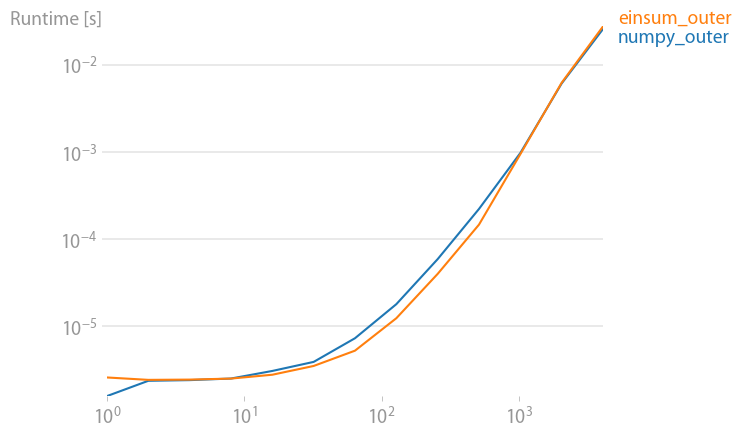

Outer product:

np.all(np.outer(arr_1D,arr_1D)==np.einsum('i,k->ik',arr_1D,arr_1D))

True

%timeit np.outer(arr_1D, arr_1D)

1000 loops, best of 3: 411 us per loop

%timeit np.einsum('i,k->ik', arr_1D, arr_1D)

1000 loops, best of 3: 245 us per loop

All of the above are twice as fast with np.einsum. These should be apples to apples comparisons as everything is specifically of dtype=np.double. I would expect the speed up in an operation like this:

np.allclose(np.sum(arr_2D*arr_3D),np.einsum('ij,oij->',arr_2D,arr_3D))

True

%timeit np.sum(arr_2D*arr_3D)

1 loops, best of 3: 813 ms per loop

%timeit np.einsum('ij,oij->', arr_2D, arr_3D)

10 loops, best of 3: 85.1 ms per loop

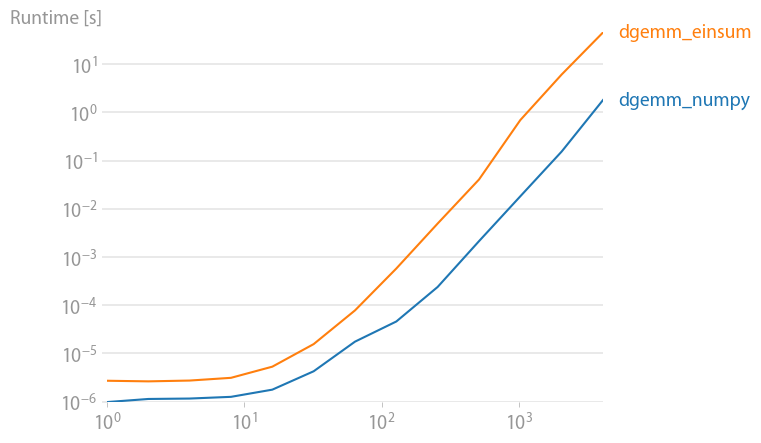

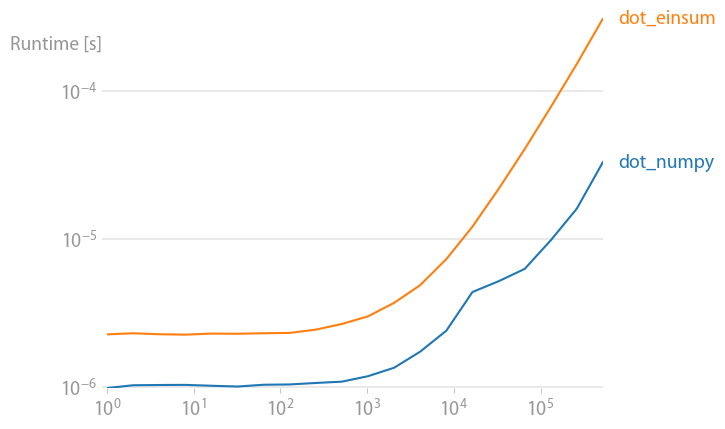

Einsum seems to be at least twice as fast for np.inner, np.outer, np.kron, and np.sum regardless of axes selection. The primary exception being np.dot as it calls DGEMM from a BLAS library. So why is np.einsum faster that other numpy functions that are equivalent?

The DGEMM case for completeness:

np.allclose(np.dot(arr_2D,arr_2D),np.einsum('ij,jk',arr_2D,arr_2D))

True

%timeit np.einsum('ij,jk',arr_2D,arr_2D)

10 loops, best of 3: 56.1 ms per loop

%timeit np.dot(arr_2D,arr_2D)

100 loops, best of 3: 5.17 ms per loop

The leading theory is from @sebergs comment that np.einsum can make use of SSE2, but numpy's ufuncs will not until numpy 1.8 (see the change log). I believe this is the correct answer, but have not been able to confirm it. Some limited proof can be found by changing the dtype of input array and observing speed difference and the fact that not everyone observes the same trends in timings.

sum), but I'm surprised thateinsumis consistently ~2x faster thanouter,inner,kron, etc. It would be interesting to know where the difference come from. – Screamernp.sumshould be equally as fast, if not faster, thennp.einsum(of course ignoring the 3-30 us penalty that Jaime showed), but its not. – Scour