Firstly, I find that when writing machine learning code, it's best NOT to use complex list comprehension because anything that you can iterate,

- it's easier to read if written when normal loops and indentation and/or

- it can be done with numpy broadcasting

And using proper variable names can help you better understand the code. Using Xs, Ys, Ws as short hand is nice only if you're good at math. Personally, I don't use them in the code, especially when writing in python. From import this: explicit is better than implicit.

My rule of thumb is to remember that if I write code I can't read 1 week later, it's bad code.

First, let's decide what is the input parameters for gradient descent, you will need:

- feature_matrix (The

X matrix, type: numpy.array, a matrix of N * D size, where N is the no. of rows/datapoints and D is the no. of columns/features)

- output (The

Y vector, type: numpy.array, a vector of size N)

- initial_weights (type:

numpy.array, a vector of size D).

Additionally, to check for convergence you will need:

- step_size (the magnitude of change when iterating through to change the weights; type:

float, usually a small number)

- tolerance (the criteria to break the iterations, when the gradient magnitude is smaller than tolerance, assume that your weights have convereged, type:

float, usually a small number but much bigger than the step size).

Now to the code.

def regression_gradient_descent(feature_matrix, output, initial_weights, step_size, tolerance):

converged = False # Set a boolean to check for convergence

weights = np.array(initial_weights) # make sure it's a numpy array

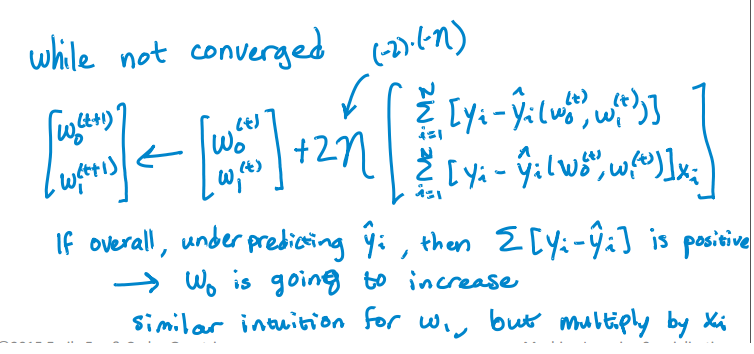

while not converged:

# compute the predictions based on feature_matrix and weights.

# iterate through the row and find the single scalar predicted

# value for each weight * column.

# hint: a dot product can solve this easily

predictions = [??? for row in feature_matrix]

# compute the errors as predictions - output

errors = predictions - output

gradient_sum_squares = 0 # initialize the gradient sum of squares

# while we haven't reached the tolerance yet, update each feature's weight

for i in range(len(weights)): # loop over each weight

# Recall that feature_matrix[:, i] is the feature column associated with weights[i]

# compute the derivative for weight[i]:

# Hint: the derivative is = 2 * dot product of feature_column and errors.

derivative = 2 * ????

# add the squared value of the derivative to the gradient magnitude (for assessing convergence)

gradient_sum_squares += (derivative * derivative)

# subtract the step size times the derivative from the current weight

weights[i] -= (step_size * derivative)

# compute the square-root of the gradient sum of squares to get the gradient magnitude:

gradient_magnitude = ???

# Then check whether the magnitude is lower than the tolerance.

if ???:

converged = True

# Once it while loop breaks, return the loop.

return(weights)

I hope the extended pseudo-code helps you better understand the gradient descent. I won't fill in the ??? so as to not spoil your homework.

Note that your RSS code is also unreadable and unmaintainable. It's easier to do just:

>>> import numpy as np

>>> prediction = np.array([1,2,3])

>>> output = np.array([1,1,5])

>>> residual = output - prediction

>>> RSS = sum(residual * residual)

>>> RSS

5

Going through numpy basics will go a long way to machine learning and matrix-vector manipulation without going nuts with iterations: http://docs.scipy.org/doc/numpy-1.10.1/user/basics.html