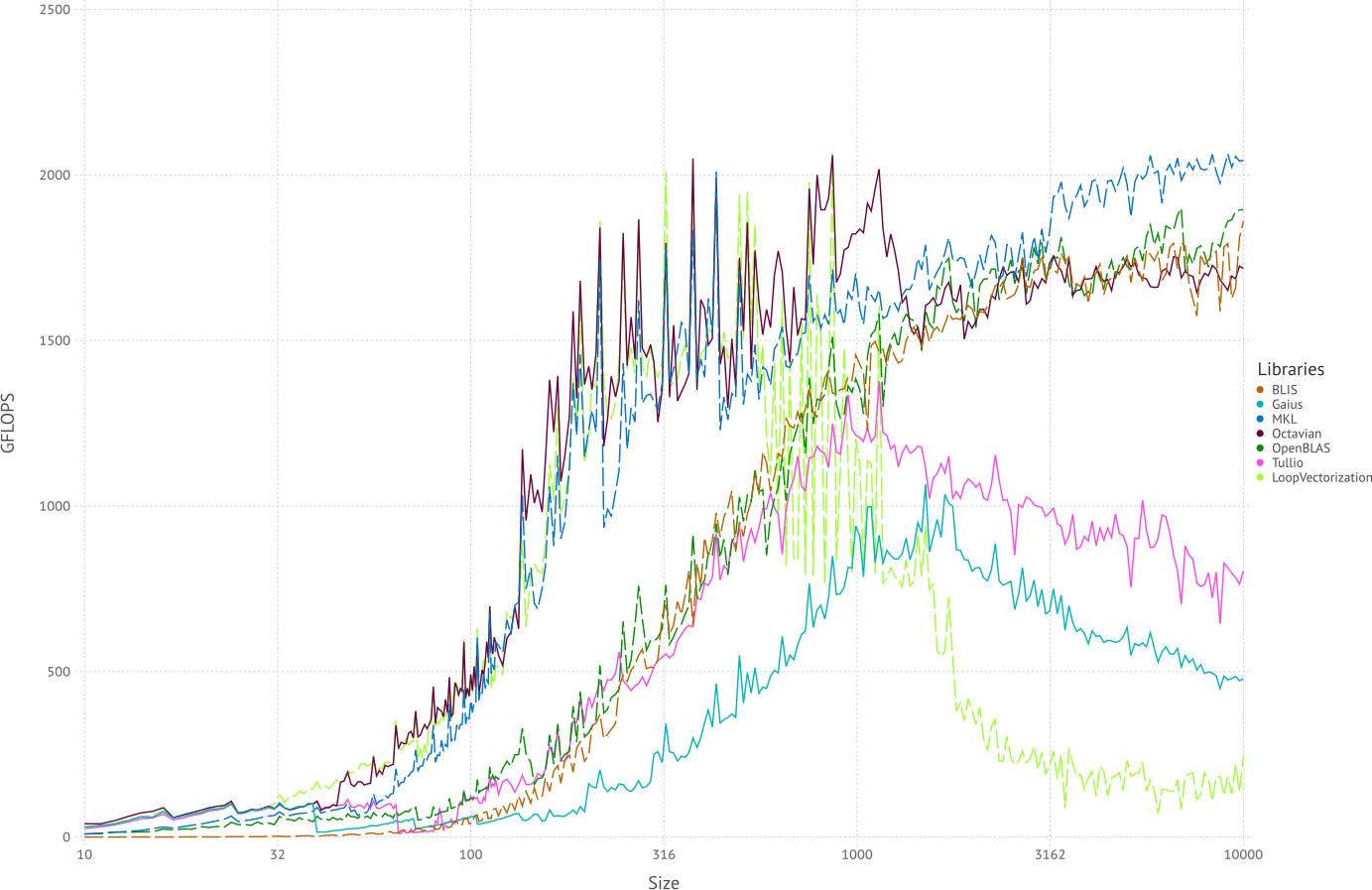

You can write an optimized loop using Tullio.jl that does this all in one sweep, but I don't think it's going to beat BLAS significantly.

Chained multiplication is also very slow, because it doesn't know there's a better algorithm.
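To make the three approaches concrete, here is a minimal sketch on small random inputs. The size `n` and the helper name `loopdot` are illustrative assumptions, not the data or code benchmarked in this answer. The explicitly parenthesized chain builds an intermediate row vector before reducing, while the three-argument `dot` and a hand-written loop accumulate the scalar in a single pass over `x`.

```julia
using LinearAlgebra

# Illustrative inputs (assumed sizes; not the benchmark data)
n = 200
a, b = randn(n), randn(n)
x = randn(n, n)

# Chained form: (a' * x) materializes a length-n row vector, then reduces with b
chained = (a' * x) * b

# Three-argument dot: computes the same scalar without that intermediate
fused = dot(a, x, b)

# Hypothetical hand-written equivalent: one sweep over x in column-major order
function loopdot(a, x, b)
    r = zero(promote_type(eltype(a), eltype(x), eltype(b)))
    for j in axes(x, 2), i in axes(x, 1)
        r += a[i] * x[i, j] * b[j]
    end
    return r
end

looped = loopdot(a, x, b)
```

All three values should agree up to floating-point rounding, so any difference between them is purely about speed and allocations. For complex inputs note that `dot` conjugates its first argument, which matches `a'` but not the plain loop above.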

    julia> using LinearAlgebra, BenchmarkTools, Tullio

    julia> # a, b, x are the same as in Bogumił's answer

    julia> @btime dot($a, $x, $b);
      82.305 ms (0 allocations: 0 bytes)

    julia> f(a, x, b) = @tullio r := a[i] * x[i,j] * b[j]
    f (generic function with 1 method)

    julia> @btime f($a, $x, $b);
      80.430 ms (1 allocation: 16 bytes)

Adding LoopVectorization.jl may be worth it:

    julia> using LoopVectorization

    julia> f3(a, x, b) = @tullio r := a[i] * x[i,j] * b[j]
    f3 (generic function with 1 method)

    julia> @btime f3($a, $x, $b);
      73.239 ms (1 allocation: 16 bytes)

But I don't know how to handle the symmetric case, where `dot` on a `Symmetric` wrapper is already nearly twice as fast:

    julia> @btime dot($a, $(Symmetric(x)), $b);
      42.896 ms (0 allocations: 0 bytes)

There might, however, be linear algebra tricks to cut that down intelligently with Tullio.jl.
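One such trick, as a rough sketch for real-valued inputs: because `x[i,j] == x[j,i]`, each off-diagonal entry can be read once and combined with both `a[i] * b[j]` and `a[j] * b[i]`, so only the upper triangle is touched. The version below uses plain loops rather than Tullio.jl, the name `symdot` is hypothetical, and it is not benchmarked here, so whether it competes with `dot(a, Symmetric(x), b)` is an open question.

```julia
using LinearAlgebra

# Hypothetical helper: computes a' * S * b, where S is the symmetric matrix
# defined by the upper triangle of x. Assumes real-valued, 1-based arrays.
function symdot(a, x, b)
    n = LinearAlgebra.checksquare(x)
    length(a) == n == length(b) || throw(DimensionMismatch("sizes do not match"))
    r = zero(promote_type(eltype(a), eltype(x), eltype(b)))
    for j in 1:n
        # Diagonal entry contributes once
        r += a[j] * x[j, j] * b[j]
        # Each entry above the diagonal stands in for its mirror image too
        for i in 1:j-1
            r += x[i, j] * (a[i] * b[j] + a[j] * b[i])
        end
    end
    return r
end

# Small illustrative check against the built-in method
n = 100
a, b = randn(n), randn(n)
x = randn(n, n)
reference = dot(a, Symmetric(x), b)
result = symdot(a, x, b)
```

This halves the number of matrix entries read, which is the same saving the `Symmetric` wrapper makes available to `dot`.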

In this kind of problem, benchmarking trade-offs is everything.