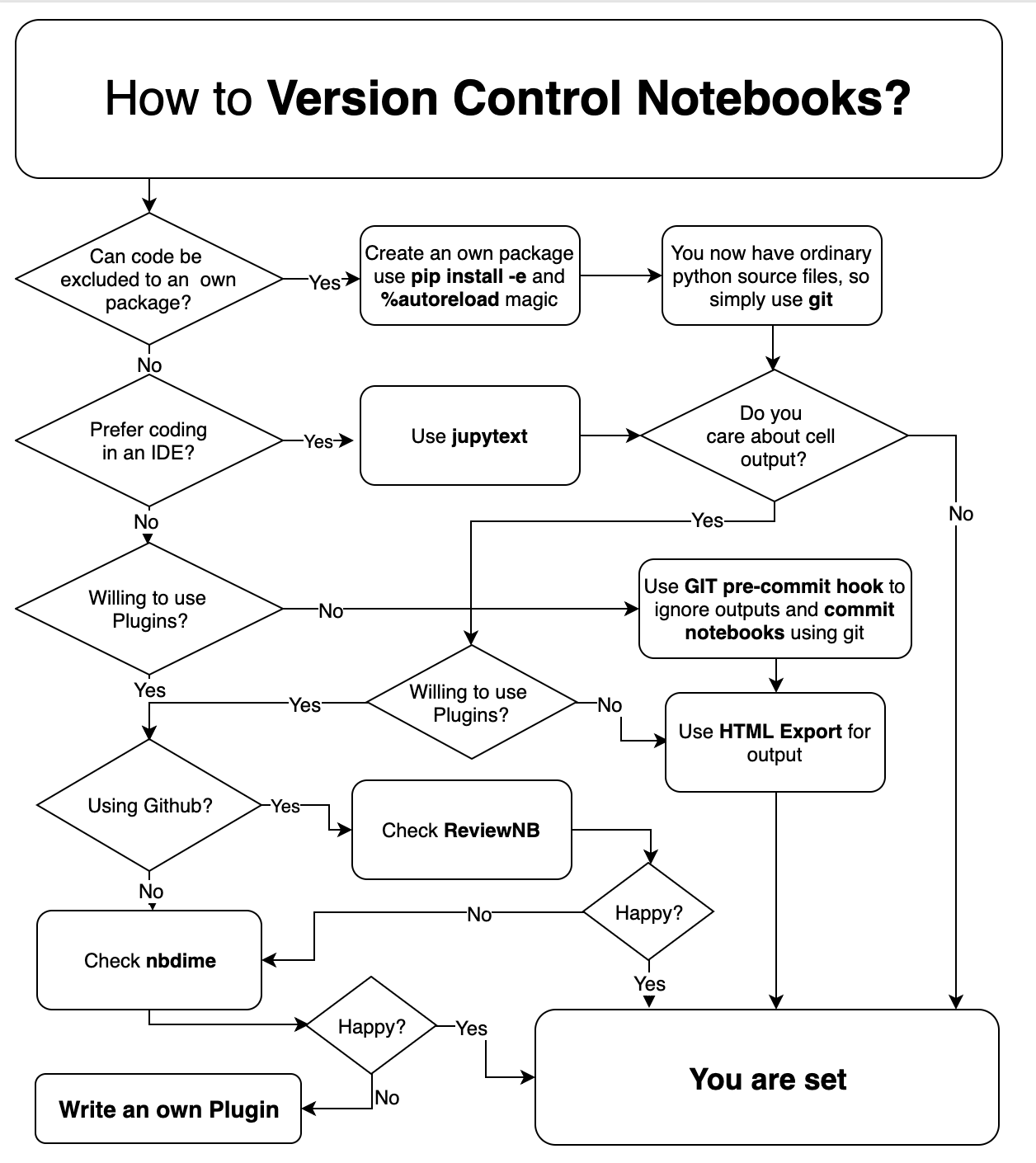

What is a good strategy for keeping IPython notebooks under version control?

The notebook format is quite amenable for version control: if one wants to version control the notebook and the outputs then this works quite well. The annoyance comes when one wants only to version control the input, excluding the cell outputs (aka. "build products") which can be large binary blobs, especially for movies and plots. In particular, I am trying to find a good workflow that:

- allows me to choose between including or excluding output,

- prevents me from accidentally committing output if I do not want it,

- allows me to keep output in my local version,

- allows me to see when I have changes in the inputs using my version control system (i.e. if I only version control the inputs but my local file has outputs, then I would like to be able to see if the inputs have changed (requiring a commit). Using the version control status command will always register a difference since the local file has outputs.)

- allows me to update my working notebook (which contains the output) from an updated clean notebook. (update)

As mentioned, if I chose to include the outputs (which is desirable when using nbviewer for example), then everything is fine. The problem is when I do not want to version control the output. There are some tools and scripts for stripping the output of the notebook, but frequently I encounter the following issues:

- I accidentally commit a version with the the output, thereby polluting my repository.

- I clear output to use version control, but would really rather keep the output in my local copy (sometimes it takes a while to reproduce for example).

- Some of the scripts that strip output change the format slightly compared to the

Cell/All Output/Clearmenu option, thereby creating unwanted noise in the diffs. This is resolved by some of the answers. - When pulling changes to a clean version of the file, I need to find some way of incorporating those changes in my working notebook without having to rerun everything. (update)

I have considered several options that I shall discuss below, but have yet to find a good comprehensive solution. A full solution might require some changes to IPython, or may rely on some simple external scripts. I currently use mercurial, but would like a solution that also works with git: an ideal solution would be version-control agnostic.

This issue has been discussed many times, but there is no definitive or clear solution from the user's perspective. The answer to this question should provide the definitive strategy. It is fine if it requires a recent (even development) version of IPython or an easily installed extension.

Update: I have been playing with my modified notebook version which optionally saves a .clean version with every save using Gregory Crosswhite's suggestions. This satisfies most of my constraints but leaves the following unresolved:

- This is not yet a standard solution (requires a modification of the ipython source. Is there a way of achieving this behaviour with a simple extension? Needs some sort of on-save hook.

- A problem I have with the current workflow is pulling changes. These will come in to the

.cleanfile, and then need to be integrated somehow into my working version. (Of course, I can always re-execute the notebook, but this can be a pain, especially if some of the results depend on long calculations, parallel computations, etc.) I do not have a good idea about how to resolve this yet. Perhaps a workflow involving an extension like ipycache might work, but that seems a little too complicated.

Notes

Removing (stripping) Output

- When the notebook is running, one can use the

Cell/All Output/Clearmenu option for removing the output. - There are some scripts for removing output, such as the script nbstripout.py which remove the output, but does not produce the same output as using the notebook interface. This was eventually included in the ipython/nbconvert repo, but this has been closed stating that the changes are now included in ipython/ipython,but the corresponding functionality seems not to have been included yet. (update) That being said, Gregory Crosswhite's solution shows that this is pretty easy to do, even without invoking ipython/nbconvert, so this approach is probably workable if it can be properly hooked in. (Attaching it to each version control system, however, does not seem like a good idea — this should somehow hook in to the notebook mechanism.)

Newsgroups

Issues

- 977: Notebook feature requests (Open).

- 1280: Clear-all on save option (Open). (Follows from this discussion.)

- 3295: autoexported notebooks: only export explicitly marked cells (Closed). Resolved by extension 11 Add writeandexecute magic (Merged).

Pull Requests

- 1621: clear In[] prompt numbers on "Clear All Output" (Merged). (See also 2519 (Merged).)

- 1563: clear_output improvements (Merged).

- 3065: diff-ability of notebooks (Closed).

- 3291: Add the option to skip output cells when saving. (Closed). This seems extremely relevant, however was closed with the suggestion to use a "clean/smudge" filter. A relevant question what can you use if you want to strip off output before running git diff? seems not to have been answered.

- 3312: WIP: Notebook save hooks (Closed).

- 3747: ipynb -> ipynb transformer (Closed). This is rebased in 4175.

- 4175: nbconvert: Jinjaless exporter base (Merged).

- 142: Use STDIN in nbstripout if no input is given (Open).

--scriptoption, but that has been removed. I am waiting until post-save hooks are implemented (which are planned) at which point I think I will be able to provide an acceptable solution combining several of the techniques. – Eugene