I'm connecting to an external websocket API using the node ws library (node 10.8.0 on Ubuntu 16.04). I've got a listener which simply parses the JSON and passes it to the callback:

this.ws.on('message', (rawdata) => {

let data = null;

try {

data = JSON.parse(rawdata);

} catch (e) {

console.log('Failed parsing the following string as json: ' + rawdata);

return;

}

mycallback(data);

});

I now receive errors in which the rawData looks as follows (I formatted and removed irrelevant contents):

�~A

{

"id": 1,

etc..

}�~�

{

"id": 2,

etc..

I then wondered; what are these characters? Seeing the structure I initially thought that the first weird sign must be an opening bracket of an array ([) and the second one a comma (,) so that it creates an array of objects.

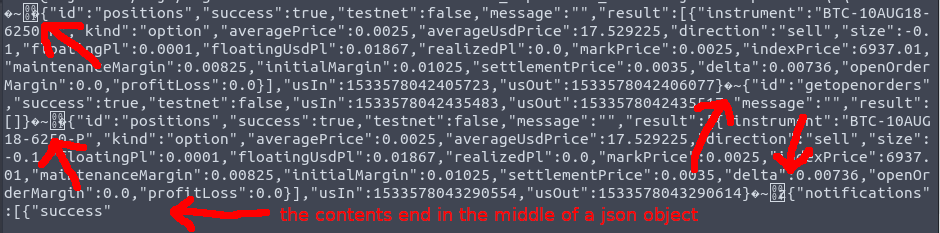

I then investigated the problem further by writing the rawdata to a file whenever it encounters a JSON parsing error. In an hour or so it has saved about 1500 of these error files, meaning this happens a lot. I cated a couple of these files in the terminal, of which I uploaded an example below:

A few things are interesting here:

- The files always start with one of these weird signs.

- The files appear to exist out of multiple messages which should have been received separately. The weird signs separate those individual messages.

- The files always end with an unfinished JSON object.

- The files are of varying lengths. They are not always the same size and are thus not cut off on a specific length.

I'm not very experience with websockets, but could it be that my websocket somehow receives a stream of messages that it concatenates together, with these weird signs as separators, and then randomly cuts off the last message? Maybe because I'm getting a constant very fast stream of messages?

Or could it be because of an error (or functionality) server side in that it combines those individual messages?

What's going on here?

Edit

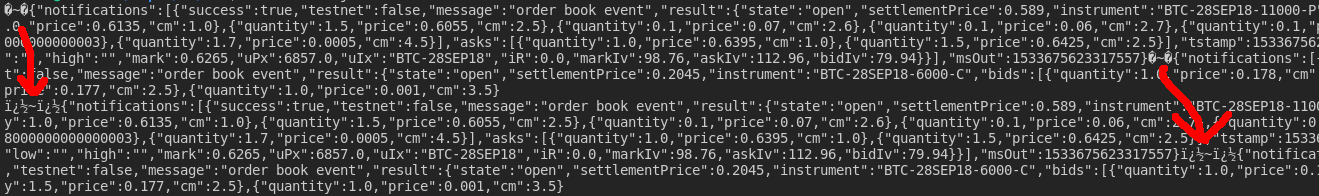

@bendataclear suggested to interpret it as utf8. So I did, and I pasted a screenshot of the results below. The first print is as it is, and the second one interpreted as utf8. To me this doesn't look like anything. I could of course convert to utf8, and then split by those characters. Although the last message is always cut off, this would at least make some of the messages readable. Other ideas still welcome though.

npm install utf8) then convert the string (utf8.encode(string))? – Carbolatedrequire('websocket').client)? – Carbolatedmessage.typewhich returns the encoding: github.com/theturtle32/WebSocket-Node - Binary streaming is foreign to me but it seems like the object you are getting back is a UTF8 string with buffer data interspersed, it might need to be buffered before being read. – Carbolatednpm install --save-optional utf-8-validateto check for spec compliance. Have you done that? – Everedcated text in a Stack Overflow code box, I wanna examine them. thanks. – Livvi