While this is quite a bit later to the party, I recently had this error and nothing I found searching this problem gave me any insight other than connection leaks -- which was not my problem.

Incase this may help anyone else, I'll explain the problem, the solution, and some helpful things I found along the way since I couldn't find it anywhere else.

In my case, the underlying issue was due to not annotating my model properties for key columns which were strings. Take for example:

public class InventoryItem

{

public string StateCode {get;set;}

public string CompanyId {get;set;}

public int Id {get;set;}

// more properties removed for simplicity

}

in my DbContext, I configured the key column to be a compound key.

protected override void OnModelCreating(ModelBuilder modelBuilder)

{

modelBuilder.Entity<InventoryItem>().HasKey(x => new { x.StateCode, x.CompanyId, x.Id });

}

SQL Table definition

CREATE TABLE [Example].[InventoryItem] (

[Id] INT NOT NULL,

[StateCode] VARCHAR (2) NOT NULL,

[CompanyId] VARCHAR (50) NOT NULL,

CONSTRAINT [PK_InventoryItems] PRIMARY KEY CLUSTERED ([StateCode] ASC, [CompanyId] ASC, [Id] ASC)

)

C# querying code

public List<InventoryItem> GetAllCompanyInventory(string stateCode, string companyId)

{

using (var context = ContextFactory.CreateContext())

{

return await context.InventoryItems.Where(x => x.StateCode == stateCode && x.CompanyId == companyId).ToListAsync().ConfigureAwait(false);

}

}

In this case, what was happening, is the stateCode and companyId were being parameterized as NVARCHAR(450) and the query was using CONVERT_IMPLICIT which effectively caused the system to not be able to use indexes correctly. My CPU and worker time went to 100% and somehow that translated into connection pool issues. After adding annotations to these properties, my CPU never went above 5% and never saw a connection pool issue again. Here are some of the things that helped me identify this issue.

The fix was to annotate these properties

public class InventoryItem

{

[System.ComponentModel.DataAnnotations.Schema.Column(TypeName = "varchar(2)")]

public string StateCode {get;set;}

[System.ComponentModel.DataAnnotations.Schema.Column(TypeName = "varchar(50)")]

public string CompanyId {get;set;}

public int Id {get;set;}

// more properties removed for simplicity

}

After I made this change, the server was pretty much sleeping. Running sp_who showed a much smaller number of connections and almost all of them were sleeping.

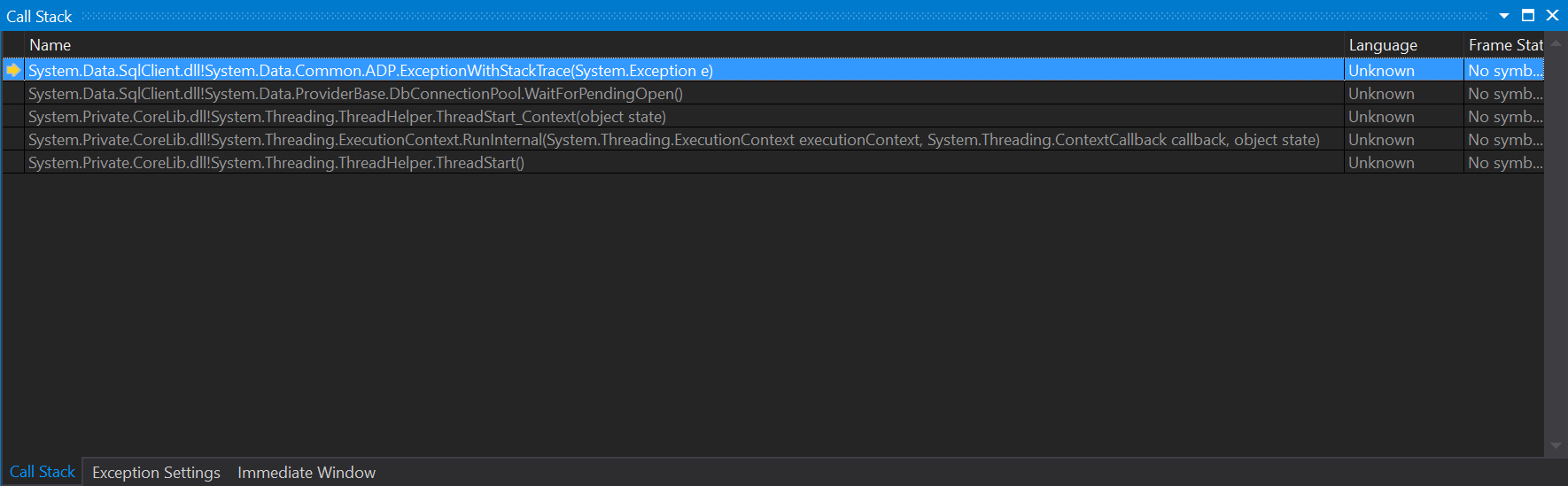

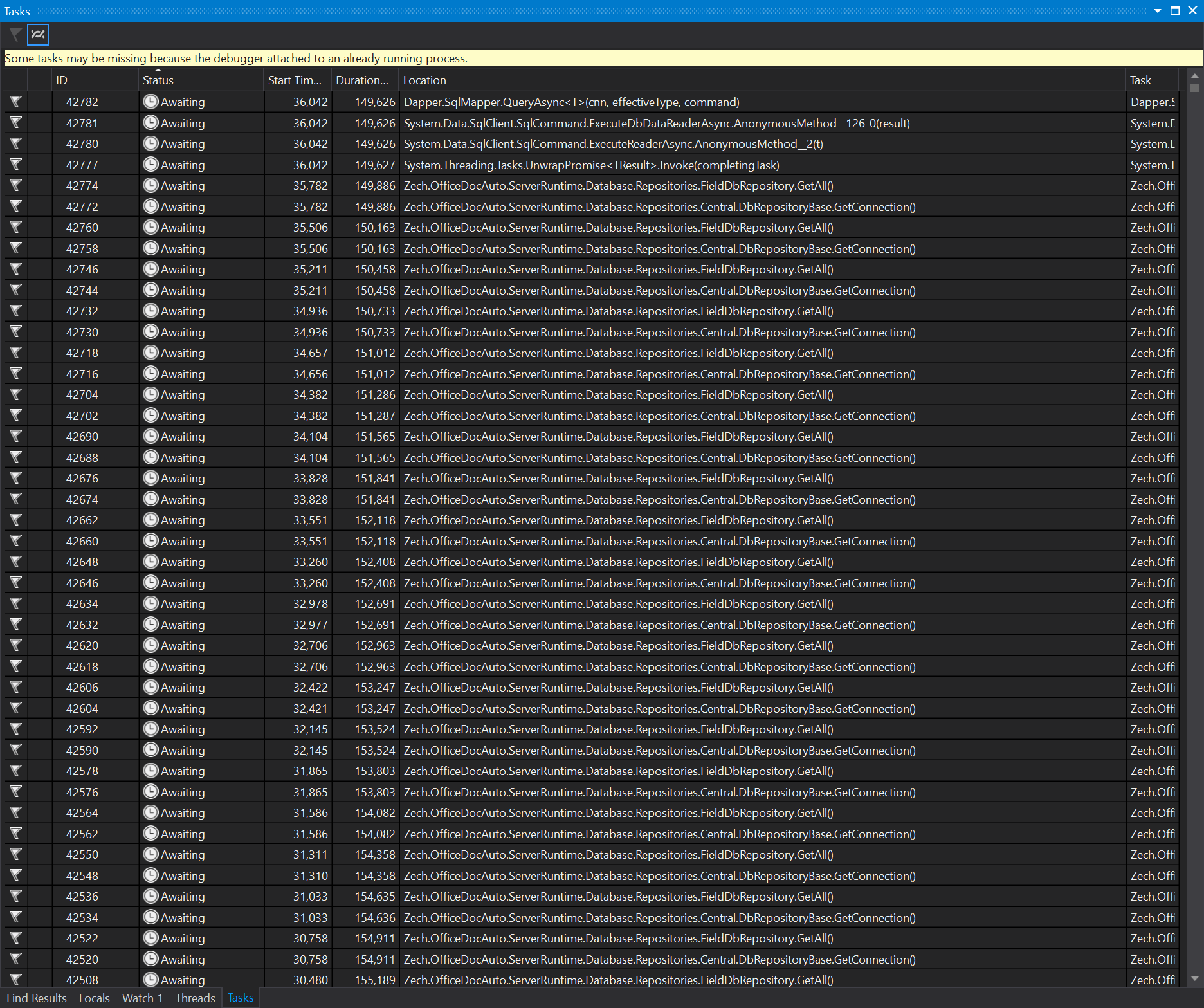

Somewhere along the way, someone mentioned using sp_who to see the connections active. I could see connections go from 0 to max allowed as soon as I started running my code locally all listing my hostname and were running status. So I thought my code had an error, but literally every single place we create a context was wrapped properly by a using statement. Was losing my mind. I then shifted my thinking to maybe this is a symptom and not the actual problem. Which leads me to the path to finding the problem.

I found this article that eventually lead to this SQL statement which REALLY helped me identify what was happening.

SELECT TOP 20

(total_logical_reads/execution_count) AS avg_logical_reads,

(total_logical_writes/execution_count) AS avg_logical_writes,

(total_physical_reads/execution_count) AS avg_phys_reads,

(total_worker_time/execution_count) AS avg_cpu_over_head,

total_logical_reads, total_logical_writes, total_physical_reads,

total_worker_time, execution_count, total_elapsed_time AS Duration,

plan_generation_num AS num_recompiles,

statement_start_offset AS stmt_start_offset,

(SELECT SUBSTRING(text, statement_start_offset/2 + 1,

(CASE WHEN statement_end_offset = -1

THEN LEN(CONVERT(nvarchar(MAX),text)) * 2

ELSE statement_end_offset

END - statement_start_offset)/2)

FROM sys.dm_exec_sql_text(sql_handle)) AS query_text,

(SELECT query_plan FROM sys.dm_exec_query_plan(plan_handle)) AS query_plan

FROM sys.dm_exec_query_stats a

--JUST CHANGE THE ORDER BY TO GET THE OTHER RESOURCES

ORDER BY (total_logical_reads + total_logical_writes)/execution_count DESC

From here, I could get the query text and query plans that were having problems. I noticed that some of the output had total worker time of > 4 Billion compared to other heavy queries which were around 300 Million. These super high worker times were not expected because they had exactly the index key in the search predicate. Looking at the actual query plan, I was able to see a few things:

- Extremely high estimated number of rows to be read (eg, > 10 Million when estimated rows per execution was only 1)

- Use of

CONVERT_IMPLICIT

- Recommendation to create a different index, but effectively the one that I already had in place (somewhat complicated to explain with the simplified example, but the actual index has more columns and it was omitting the first two columns to avoid the conversion)

This article helped me understand why my estimated number of rows was so high even when there was an index using the exact keys I was searching for.

This article helped me understand the CONVERT_IMPLICIT calls in the query plans I was reviewing.

I found the above article because it was was mentioned in this post.

This helped me figure out how to change the default from nvarchar to varchar.

I'm still not positive why this was causing the connection pool timeout issue. I had some tests running that would execute one state code + company id at a time and it was still hitting this error pretty much right away. Once I changed it to have the annotations, everything cleared up even when processing many requests simultaneously.