As I mentioned in my comment, Intel OpenGL drivers have problems with direct rendering to texture, and I do not know of any workaround that works. In that case the only way around it is to use glReadPixels to copy the screen content into CPU memory and then copy it back to the GPU as a texture. Of course, that is much slower than direct rendering to texture. So here is the deal:

- set low res view

  Do not change the resolution of your window, just the glViewport values. Then render your scene in low res (in just a fraction of the screen space).

- copy rendered screen into texture

- set target resolution view

- render the texture

  Do not forget to use the GL_NEAREST filter. The most important thing is that you swap buffers only after this step, not before! Otherwise you will get flickering.

Here is the C++ source for this:

    void gl_draw()
    {
        // render resolution and integer multiplier
        const int xs=320,ys=200,m=2;

        // [low res render pass]
        glViewport(0,0,xs,ys);
        glClearColor(0.0,0.0,0.0,1.0);
        glClear(GL_COLOR_BUFFER_BIT);
        glMatrixMode(GL_PROJECTION);
        glLoadIdentity();
        glMatrixMode(GL_MODELVIEW);
        glLoadIdentity();
        glDisable(GL_DEPTH_TEST);
        glDisable(GL_TEXTURE_2D);

        // 50 random lines (100 vertices)
        RandSeed=0x12345678;
        glColor3f(1.0,1.0,1.0);
        glBegin(GL_LINES);
        for (int i=0;i<100;i++)
            glVertex2f(2.0*Random()-1.0,2.0*Random()-1.0);
        glEnd();

        // [multiply resolution render pass]
        static bool _init=true;
        static GLuint txrid=0;      // texture id; static so it survives between calls
        static BYTE map[xs*ys*3];   // RGB readback buffer (xs*3 is a multiple of 4 here)

        // init texture
        if (_init)  // you should also delete the texture on exit of app ...
        {
            // create texture
            _init=false;
            glGenTextures(1,&txrid);
            glEnable(GL_TEXTURE_2D);
            glBindTexture(GL_TEXTURE_2D,txrid);
            glPixelStorei(GL_UNPACK_ALIGNMENT, 4);
            glTexParameteri(GL_TEXTURE_2D, GL_TEXTURE_WRAP_S,GL_CLAMP_TO_EDGE);
            glTexParameteri(GL_TEXTURE_2D, GL_TEXTURE_WRAP_T,GL_CLAMP_TO_EDGE);
            glTexParameteri(GL_TEXTURE_2D, GL_TEXTURE_MAG_FILTER,GL_NEAREST);   // must be nearest !!!
            glTexParameteri(GL_TEXTURE_2D, GL_TEXTURE_MIN_FILTER,GL_NEAREST);
            // GL_REPLACE: output the texel as is (GL_COPY is not a valid texture env mode)
            glTexEnvf(GL_TEXTURE_ENV, GL_TEXTURE_ENV_MODE,GL_REPLACE);
            glDisable(GL_TEXTURE_2D);
        }

        // copy low res screen to CPU memory
        glReadPixels(0,0,xs,ys,GL_RGB,GL_UNSIGNED_BYTE,map);

        // and then to GPU texture
        glEnable(GL_TEXTURE_2D);
        glBindTexture(GL_TEXTURE_2D,txrid);
        glTexImage2D(GL_TEXTURE_2D, 0, GL_RGB, xs, ys, 0, GL_RGB, GL_UNSIGNED_BYTE, map);

        // set multiplied resolution view
        glViewport(0,0,m*xs,m*ys);
        glClear(GL_COLOR_BUFFER_BIT);

        // render low res screen as texture
        glBegin(GL_QUADS);
        glTexCoord2f(0.0,0.0); glVertex2f(-1.0,-1.0);
        glTexCoord2f(0.0,1.0); glVertex2f(-1.0,+1.0);
        glTexCoord2f(1.0,1.0); glVertex2f(+1.0,+1.0);
        glTexCoord2f(1.0,0.0); glVertex2f(+1.0,-1.0);
        glEnd();
        glDisable(GL_TEXTURE_2D);

        glFlush();
        SwapBuffers(hdc);   // swap buffers only here !!!
    }
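One thing to watch if you change xs: glReadPixels pads each row to GL_PACK_ALIGNMENT, which is 4 bytes by default, so a buffer of xs*ys*3 bytes is only big enough when xs*3 is a multiple of 4 (as it is for 320). A minimal sketch of the size computation follows; packed_row_stride and readback_buffer_size are hypothetical helper names, not part of the code above, and it assumes byte-sized components, a power-of-two alignment and GL_PACK_ROW_LENGTH left at 0.

```cpp
#include <cassert>
#include <cstddef>

// Bytes per row as written by glReadPixels, rounded up to the pack alignment.
// Hypothetical helper: assumes 1-byte components and alignment in {1, 2, 4, 8}.
inline std::size_t packed_row_stride(std::size_t width,
                                     std::size_t bytes_per_pixel,
                                     std::size_t alignment = 4)
{
    // alignment must be a non-zero power of two
    assert(alignment != 0 && (alignment & (alignment - 1)) == 0);
    return (width * bytes_per_pixel + alignment - 1) & ~(alignment - 1);
}

// Total buffer size needed to read back a width x height block.
inline std::size_t readback_buffer_size(std::size_t width,
                                        std::size_t height,
                                        std::size_t bytes_per_pixel,
                                        std::size_t alignment = 4)
{
    return packed_row_stride(width, bytes_per_pixel, alignment) * height;
}
```

For xs=320 this gives 960 bytes per row, so xs*ys*3 is exactly right. For xs=322 it gives 968 bytes per row, and an xs*ys*3 buffer would be too small. The other option is to call glPixelStorei(GL_PACK_ALIGNMENT, 1) before glReadPixels (and GL_UNPACK_ALIGNMENT, 1 before glTexImage2D) so rows are tightly packed.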

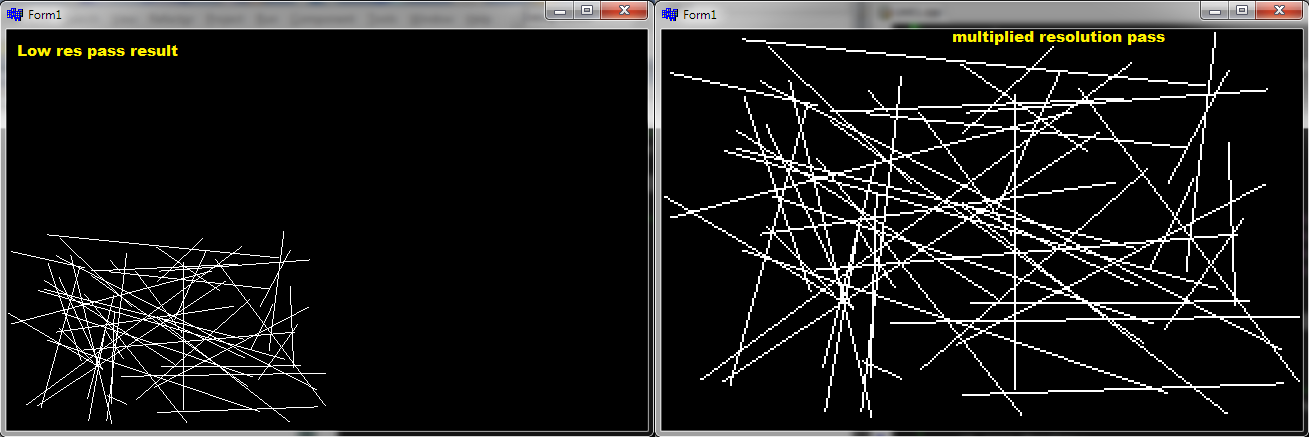

And preview:

![preview]()

I tested this on an Intel HD Graphics chip (god knows which version) that I have at my disposal, and it works (while the standard render-to-texture approaches do not).
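If you want to check the result on the CPU, the GL_NEAREST pass with an integer multiplier m should simply repeat each low res pixel as an m x m block. Here is a rough sketch of that as plain C++; upscale_nearest_rgb is a hypothetical helper of mine, not something from the code above, and it assumes tightly packed RGB rows with no padding.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <vector>

// Nearest-neighbour upscale of a tightly packed RGB image by an integer factor m.
// Hypothetical CPU reference for what the GL_NEAREST textured quad should produce.
std::vector<std::uint8_t> upscale_nearest_rgb(const std::vector<std::uint8_t>& src,
                                              std::size_t xs, std::size_t ys,
                                              std::size_t m)
{
    assert(src.size() >= xs * ys * 3);              // source must hold xs*ys RGB pixels
    std::vector<std::uint8_t> dst(xs * m * ys * m * 3);
    for (std::size_t y = 0; y < ys * m; ++y)
        for (std::size_t x = 0; x < xs * m; ++x)
        {
            // destination pixel (x,y) takes its color from source pixel (x/m, y/m)
            const std::size_t s = ((y / m) * xs + (x / m)) * 3;
            const std::size_t d = (y * xs * m + x) * 3;
            dst[d + 0] = src[s + 0];
            dst[d + 1] = src[s + 1];
            dst[d + 2] = src[s + 2];
        }
    return dst;
}
```

Comparing this against a glReadPixels of the final m*xs by m*ys frame is one way to confirm that the filter really is nearest and that no blending or smoothing sneaked in.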