Preconditions:

- PC with 16 GB of RAM

- JDK 1.8.x installed on Ubuntu 16.10 x64.

- A standard Spring-based web application deployed on Tomcat 8.5.x. Tomcat is configured with the following parameters:

CATALINA_OPTS="$CATALINA_OPTS -Xms128m -Xmx512m -XX:NewSize=64m -XX:MaxNewSize=128m -Xss512k -XX:+UseParallelGC -XX:+AggressiveOpts -XX:+UseFastAccessorMethods -XX:MaxMetaspaceSize=512m -XX:-TieredCompilation -XX:ReservedCodeCacheSize=512m"

- JMeter 2.13 for running load tests

- JProfiler 9.x for tracking Java heap memory usage

- `top` util for tracking the memory usage of the java process

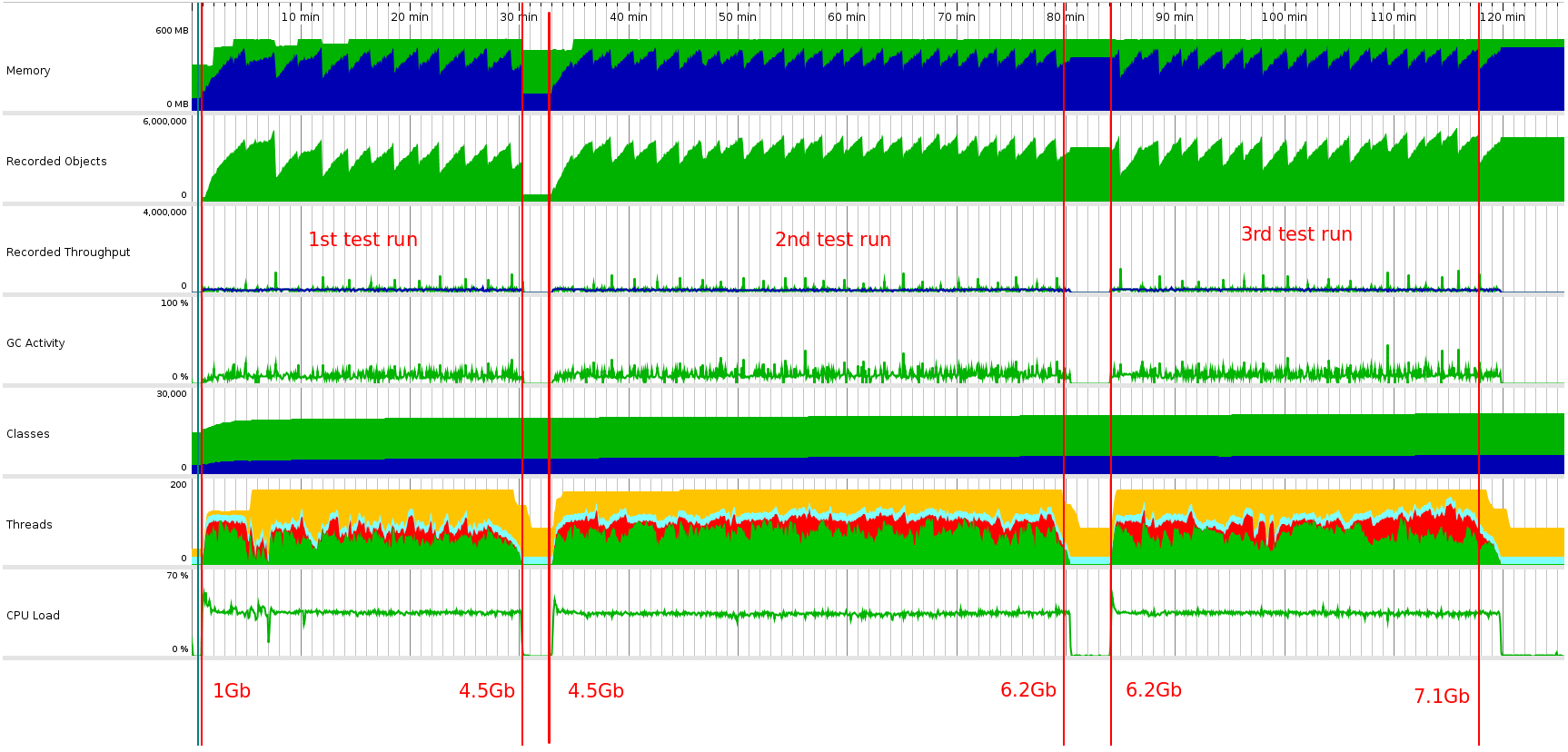

When I run the load tests 3 times in a row, I observe (using `top`) that the java process keeps increasing the amount of memory it uses:

- after Tomcat starts, it uses ~1 GB

- after the first test run, it uses 4.5 GB

- when all tests are finished, Tomcat is using 7 GB of RAM

All this time the heap size is limited, and JProfiler confirms that: the heap size does not exceed 512 MB.
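A minimal sketch, assuming Linux (it reads `/proc/self/status`), of how the two numbers being compared here can be printed side by side from inside the JVM: the Java heap as the JVM reports it, and the resident set size that `top` shows. The class name `HeapVsRss` and the helper `parseVmRssKb` are hypothetical names chosen for illustration.

```java
import java.io.IOException;
import java.lang.management.ManagementFactory;
import java.lang.management.MemoryUsage;
import java.nio.file.Files;
import java.nio.file.Paths;
import java.util.List;

class HeapVsRss {

    /** Returns the VmRSS value in kB from /proc/<pid>/status-style lines, or -1 if it is absent. */
    static long parseVmRssKb(List<String> statusLines) {
        for (String line : statusLines) {
            if (line.startsWith("VmRSS:")) {
                // The line looks like "VmRSS:    1048576 kB"
                String[] parts = line.trim().split("\\s+");
                return Long.parseLong(parts[1]);
            }
        }
        return -1;
    }

    public static void main(String[] args) throws IOException {
        // What JProfiler/VisualVM report: the managed Java heap and JVM-managed non-heap pools.
        MemoryUsage heap = ManagementFactory.getMemoryMXBean().getHeapMemoryUsage();
        MemoryUsage nonHeap = ManagementFactory.getMemoryMXBean().getNonHeapMemoryUsage();

        // What top reports as RES: all resident pages of the process, native allocations included.
        long rssKb = parseVmRssKb(Files.readAllLines(Paths.get("/proc/self/status")));

        System.out.println("heap used (kB):      " + heap.getUsed() / 1024);
        System.out.println("heap committed (kB): " + heap.getCommitted() / 1024);
        System.out.println("non-heap used (kB):  " + nonHeap.getUsed() / 1024);
        System.out.println("process RSS (kB):    " + rssKb);
    }
}
```

If the RSS keeps growing while the heap and non-heap figures stay flat, the growth is happening in memory the JVM does not account for in these pools, for example native allocations made through `malloc`.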

This is a screenshot of JProfiler. The red numbers at the bottom show the memory used by the java process (according to `top`).

The question is: why does the java process keep increasing its memory usage the whole time it is running?

Thanks!

UPD#1: About the possible duplicate: they have confirmed that it only happens on Solaris, but I use Ubuntu 16.10. Also, the linked question does not have an answer that explains the cause of the problem.

UPD#2: I had to return to this issue after a pause. I now use the `pmap` util to dump the memory used by the java process. I have three dumps: before running the tests, after the first test run, and after some N test runs. The tests produce a lot of traffic to the application. All dumps are here: https://gist.github.com/proshin-roman/752cea2dc25cde64b30514ed9ed9bbd0.

They are quite large, but the most interesting part is on the 8th line, which shows the size of the `[heap]` segment: it takes 282,272 KB before the tests and 3,036,400 KB at the end, more than a 10x difference! And it grows each time I run the tests. At the same time the Java heap size is constant (according to JProfiler/VisualVM). What options do I have to find the cause of this problem? Debug the JVM? I have tried to find a way to "look" at this segment of memory but failed. So:

- can I somehow identify the content of the `[heap]` segment of memory?

- does such behavior of java look expected?
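A minimal sketch, assuming Linux, of how the size of the `[heap]` segment can be sampled repeatedly without taking full `pmap` dumps. It reads `/proc/<pid>/maps`, where the `[heap]` label marks the `brk`-based area used by the native allocator, not the Java heap (which the JVM maps anonymously). It only measures the size and does not reveal the content. The class name `NativeHeapSegment` and the method `heapSegmentKb` are hypothetical.

```java
import java.io.IOException;
import java.nio.file.Files;
import java.nio.file.Paths;
import java.util.List;

class NativeHeapSegment {

    /** Sums the sizes, in kB, of all mappings labelled [heap] in /proc/<pid>/maps-style lines. */
    static long heapSegmentKb(List<String> mapsLines) {
        long totalBytes = 0;
        for (String line : mapsLines) {
            String trimmed = line.trim();
            if (!trimmed.endsWith("[heap]")) {
                continue;
            }
            // The first column is the address range, e.g. "01000000-01100000" (hexadecimal).
            String[] bounds = trimmed.split("\\s+")[0].split("-");
            long start = Long.parseUnsignedLong(bounds[0], 16);
            long end = Long.parseUnsignedLong(bounds[1], 16);
            totalBytes += end - start;
        }
        return totalBytes / 1024;
    }

    public static void main(String[] args) throws IOException {
        // Pass the Tomcat pid as the first argument; defaults to this process itself.
        String pid = args.length > 0 ? args[0] : "self";
        List<String> lines = Files.readAllLines(Paths.get("/proc", pid, "maps"));
        System.out.println("[heap] segment: " + heapSegmentKb(lines) + " kB");
    }
}
```

Reading the maps of another process requires running as the same user or as root. Running this before and after each load test gives the growth per run, which can then be compared against the constant Java heap size.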

I would appreciate any tips about this problem. Thanks all!

UPD #3: Using jemalloc (thanks @ivan for the idea) I got the following image:

And it looks like I have almost the same problem as described here: http://www.evanjones.ca/java-native-leak-bug.html

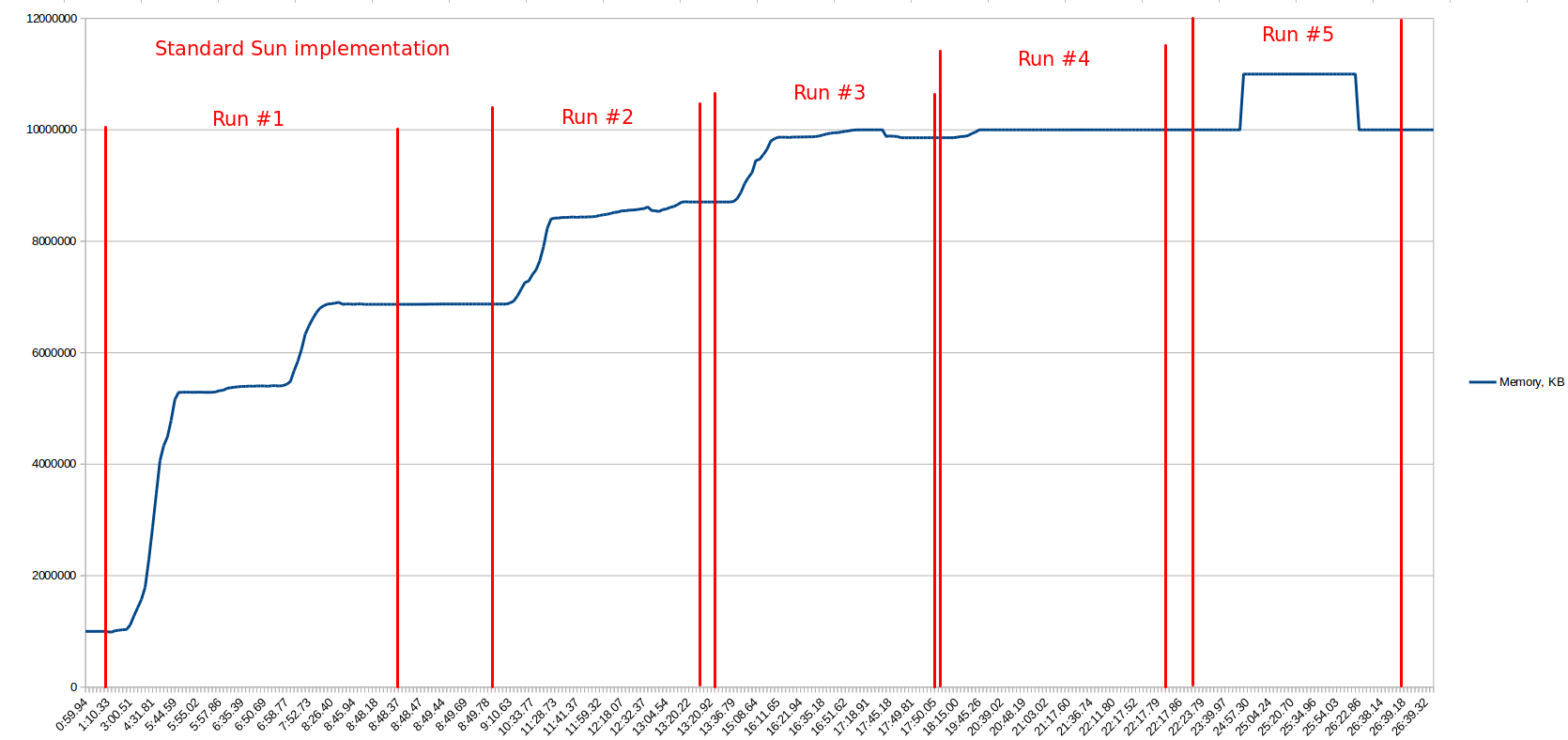

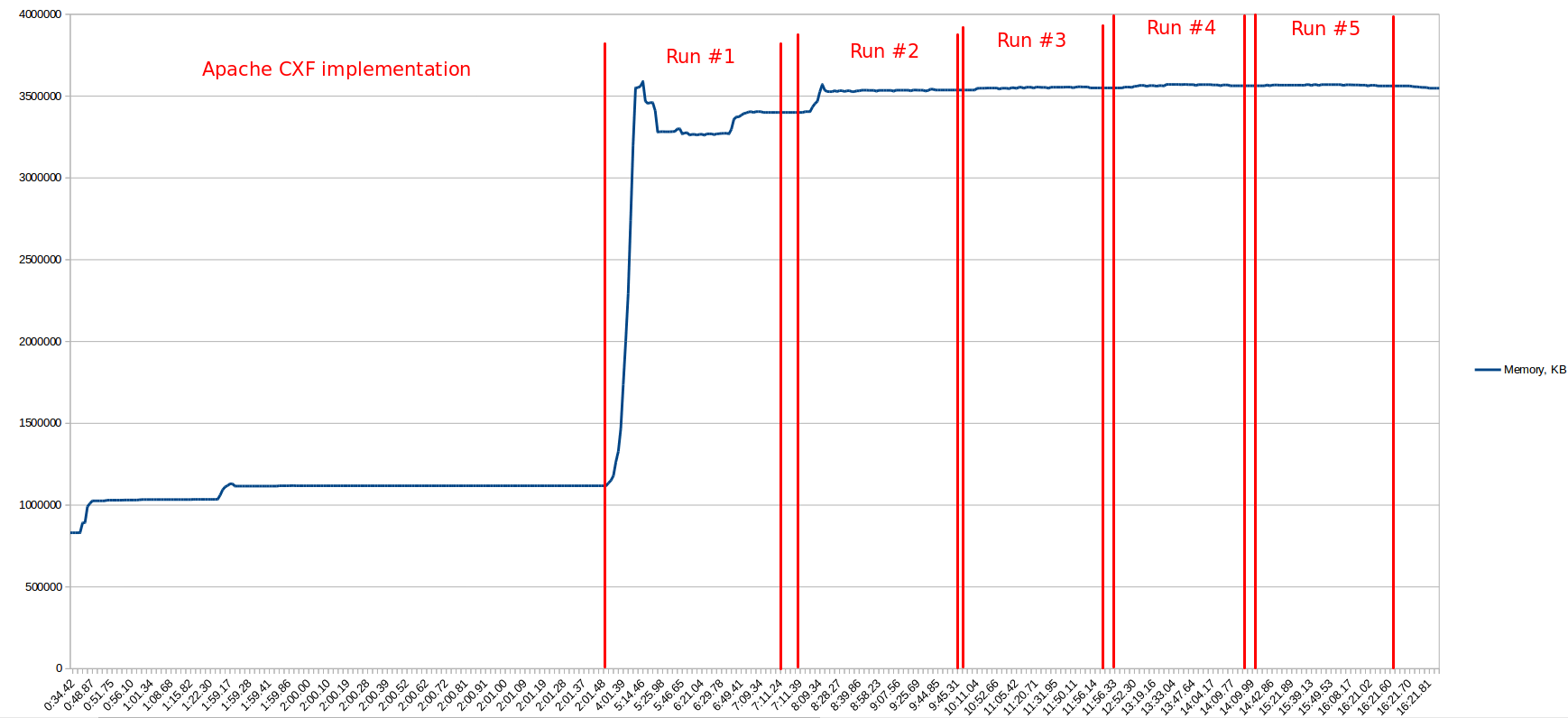

UPD #4: For now I have found that the issue is related to java.util.zip.Inflater/Deflater, and these classes are used in many places in my application. But the largest impact on memory consumption comes from the interaction with a remote SOAP service. My application uses the reference implementation of the JAX-WS standard, and it gave the following memory consumption under load (the measurement has low precision above 10 GB):

Then I ran the same load tests with the Apache CXF implementation, and it gave the following result:

So you can see that CXF uses less memory and is more stable (it does not keep growing the whole time, as the reference implementation does).

Finally, I found an issue on the JDK issue tracker (https://bugs.openjdk.java.net/browse/JDK-8074108). It is again about memory leaks in the zip library, and the issue is not closed yet. So it looks like I cannot really fix the memory leak in my app and can only apply a workaround.
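For the places in application code that use `Inflater`/`Deflater` directly, a minimal sketch of the usual pattern: both classes hold native zlib memory that is released by `end()`, so calling it in a `finally` block avoids waiting for finalization. The class name `ZipNativeMemory` and its methods are hypothetical, and this does not address allocations made inside library code such as the JAX-WS reference implementation.

```java
import java.io.ByteArrayOutputStream;
import java.nio.charset.StandardCharsets;
import java.util.zip.DataFormatException;
import java.util.zip.Deflater;
import java.util.zip.Inflater;

class ZipNativeMemory {

    /** Compresses the input and releases the native zlib state before returning. */
    static byte[] compress(byte[] input) {
        Deflater deflater = new Deflater();
        try {
            deflater.setInput(input);
            deflater.finish();
            ByteArrayOutputStream out = new ByteArrayOutputStream();
            byte[] buf = new byte[4096];
            while (!deflater.finished()) {
                int n = deflater.deflate(buf);
                out.write(buf, 0, n);
            }
            return out.toByteArray();
        } finally {
            deflater.end(); // frees the native buffers now instead of at finalization
        }
    }

    /** Decompresses the input and releases the native zlib state before returning. */
    static byte[] decompress(byte[] compressed) {
        Inflater inflater = new Inflater();
        try {
            inflater.setInput(compressed);
            ByteArrayOutputStream out = new ByteArrayOutputStream();
            byte[] buf = new byte[4096];
            while (!inflater.finished()) {
                int n = inflater.inflate(buf);
                if (n == 0 && (inflater.needsInput() || inflater.needsDictionary())) {
                    // Avoids looping forever on truncated or dictionary-dependent data.
                    throw new IllegalArgumentException("incomplete compressed input");
                }
                out.write(buf, 0, n);
            }
            return out.toByteArray();
        } catch (DataFormatException e) {
            throw new IllegalArgumentException("corrupt compressed input", e);
        } finally {
            inflater.end(); // same for the inflater
        }
    }

    public static void main(String[] args) {
        byte[] original = "some SOAP payload, some SOAP payload".getBytes(StandardCharsets.UTF_8);
        byte[] packed = compress(original);
        byte[] unpacked = decompress(packed);
        System.out.println(original.length + " -> " + packed.length + " -> " + unpacked.length);
    }
}
```

The same reasoning applies to `GZIPInputStream`/`GZIPOutputStream` and similar wrappers: closing them (for example with try-with-resources) ends the inflater or deflater they created internally.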


Thanks all for your help!

… `ps` or `top`)? If so, it looks like there is probably a memory leak in the JVM itself. – Merilynmeringue

… `top` util. I use standard Oracle JDK 1.8.x without any modifications. – Leptospirosis

… `libtcnative` that can be used to use OpenSSL for faster crypto for TLS. – Merilynmeringue

… `top` output; as well I don't use SSL. Finally I'm going update my question to add more info. – Leptospirosis

… `libtcnative` won't be an issue. Any non-heap-related memory leak will be a problem with the JVM itself. Tomcat 8.5 has no known memory leaks, so this is either your own application, the JVM, or you are misreading the output of the tool. If you run indefinitely, does it eventually crash? Or are you just worried about the values you are seeing? – Merilynmeringue

… `pmap` util and then it's marked as `[heap]`) - this heap is growing actively under load. And the problem is that I can not check why does it increase the all time under load. – Leptospirosis

… `jemalloc`. It's possible that your application (or Tomcat?) has a native memory leak due to not closing gzip streams. Have you run a static analysis tool such as FindBugs against your application? – Merilynmeringue