Is there a reason to prefer using map() over list comprehension or vice versa? Is either of them generally more efficient or considered generally more Pythonic than the other?

map may be microscopically faster in some cases (when you're not making a lambda for the purpose, but using the same function in map and a list comprehension). List comprehensions may be faster in other cases and most (not all) Pythonistas consider them more direct and clearer.

An example of the tiny speed advantage of map when using exactly the same function:

$ python -m timeit -s'xs=range(10)' 'map(hex, xs)'

100000 loops, best of 3: 4.86 usec per loop

$ python -m timeit -s'xs=range(10)' '[hex(x) for x in xs]'

100000 loops, best of 3: 5.58 usec per loop

An example of how performance comparison gets completely reversed when map needs a lambda:

$ python -m timeit -s'xs=range(10)' 'map(lambda x: x+2, xs)'

100000 loops, best of 3: 4.24 usec per loop

$ python -m timeit -s'xs=range(10)' '[x+2 for x in xs]'

100000 loops, best of 3: 2.32 usec per loop

map(operator.attrgetter('foo'), objs) easier to read than [o.foo for o in objs] ?! –

Rusk o here, and your examples show why. –

Humdinger str() example, though. –

Pericynthion map(operator.attrgetter('foo'), objs) and [o.foo for foo in objs] don't do the same .-. and where does come from the o? If you meant [foo.foo for foo in objs], I'm even more skeptical on which one is actually easier to read (Yeah I know, 4 years later) –

Rambutan map, lambda and filter)?? –

Rambutan $> python -mtimeit -s 's="abcd"*100' '[x.upper() for x in s if x<"c"]' 10000 loops, best of 3: 73 usec per loop $> python -mtimeit -s 's="abcd"*100' 'map(str.upper,filter(lambda x:x<"c",s))' 10000 loops, best of 3: 147 usec per loop –

Lightner [o.foo for o in objs], not [o.foo for foo in objs] –

Hash list(map(...)) with lambda or explicitly defined functions are about the same speed, despite the explicit list call that's now needed. Meanwhile, an equivalent use of a builtin function or method is over twice as fast. (My tests used def test(x): return x + 1, lambda x: x + 1 or (1).__add__ as the functions applied to range(100).) –

Leandroleaning lines = map (str.strip, open (filename, 'r')). Truly perlesque, but also in the bad way. We avoided doing a lookup of "strip" on every instance of string we read from the file but we also forgot to expressly declare we want an iterable so we built a list: producing a generator expression would enable a better overall flow. –

Monadnock map() approach takes lesser time even with the lambda expression. 10000000 loops, best of 3: 0.141 usec per loop for map() and 1000000 loops, best of 3: 0.382 usec per loop for listcomps. ``` I wish to ask if map() with lambda will always be slower than its corresponding listcomps or it is not always the case. –

Compulsive map(int, stuff) is a lot more clear than [int(x) for x in stuff], no need for a random variable. it depends on if you have functional experience or not. map has been around a lot longer than list comprehensions as well. each serves its purpose, and both should be tools in your tool belt. Also map is lazy so you can pass it around until you need it whereas the comp will be done on the line it's written. –

Blastocoel map version will produce an iterator which means it will use less memory than the list comprehension. This makes it much faster than list comprehensions. Using a generator comprehension is closer but still slower. –

Microclimatology map is 7x faster on your first timeit example and 3.5x on the second. –

Rudimentary map creates an iterator in O(1) time, while the list comprehension computes the results in O(n) time, where n is the length of xs. Please check by using xs=range(100000) and see that the list comprehension is orders of magnitude slower. For a fairer comparison you should compare map with a generator expression. –

Jump Cases

- Common case: Almost always, you will want to use a list comprehension in python because it will be more obvious what you're doing to novice programmers reading your code. (This does not apply to other languages, where other idioms may apply.) It will even be more obvious what you're doing to python programmers, since list comprehensions are the de-facto standard in python for iteration; they are expected.

- Less-common case: However if you already have a function defined, it is often reasonable to use

map, though it is considered 'unpythonic'. For example,map(sum, myLists)is more elegant/terse than[sum(x) for x in myLists]. You gain the elegance of not having to make up a dummy variable (e.g.sum(x) for x...orsum(_) for _...orsum(readableName) for readableName...) which you have to type twice, just to iterate. The same argument holds forfilterandreduceand anything from theitertoolsmodule: if you already have a function handy, you could go ahead and do some functional programming. This gains readability in some situations, and loses it in others (e.g. novice programmers, multiple arguments)... but the readability of your code highly depends on your comments anyway. - Almost never: You may want to use the

mapfunction as a pure abstract function while doing functional programming, where you're mappingmap, or curryingmap, or otherwise benefit from talking aboutmapas a function. In Haskell for example, a functor interface calledfmapgeneralizes mapping over any data structure. This is very uncommon in python because the python grammar compels you to use generator-style to talk about iteration; you can't generalize it easily. (This is sometimes good and sometimes bad.) You can probably come up with rare python examples wheremap(f, *lists)is a reasonable thing to do. The closest example I can come up with would besumEach = partial(map,sum), which is a one-liner that is very roughly equivalent to:

def sumEach(myLists):

return [sum(_) for _ in myLists]

- Just using a

for-loop: You can also of course just use a for-loop. While not as elegant from a functional-programming viewpoint, sometimes non-local variables make code clearer in imperative programming languages such as python, because people are very used to reading code that way. For-loops are also, generally, the most efficient when you are merely doing any complex operation that is not building a list like list-comprehensions and map are optimized for (e.g. summing, or making a tree, etc.) -- at least efficient in terms of memory (not necessarily in terms of time, where I'd expect at worst a constant factor, barring some rare pathological garbage-collection hiccuping).

"Pythonism"

I dislike the word "pythonic" because I don't find that pythonic is always elegant in my eyes. Nevertheless, map and filter and similar functions (like the very useful itertools module) are probably considered unpythonic in terms of style.

Laziness

In terms of efficiency, like most functional programming constructs, MAP CAN BE LAZY, and in fact is lazy in python. That means you can do this (in python3) and your computer will not run out of memory and lose all your unsaved data:

>>> map(str, range(10**100))

<map object at 0x2201d50>

Try doing that with a list comprehension:

>>> [str(n) for n in range(10**100)]

# DO NOT TRY THIS AT HOME OR YOU WILL BE SAD #

Do note that list comprehensions are also inherently lazy, but python has chosen to implement them as non-lazy. Nevertheless, python does support lazy list comprehensions in the form of generator expressions, as follows:

>>> (str(n) for n in range(10**100))

<generator object <genexpr> at 0xacbdef>

You can basically think of the [...] syntax as passing in a generator expression to the list constructor, like list(x for x in range(5)).

Brief contrived example

from operator import neg

print({x:x**2 for x in map(neg,range(5))})

print({x:x**2 for x in [-y for y in range(5)]})

print({x:x**2 for x in (-y for y in range(5))})

List comprehensions are non-lazy, so may require more memory (unless you use generator comprehensions). The square brackets [...] often make things obvious, especially when in a mess of parentheses. On the other hand, sometimes you end up being verbose like typing [x for x in.... As long as you keep your iterator variables short, list comprehensions are usually clearer if you don't indent your code. But you could always indent your code.

print(

{x:x**2 for x in (-y for y in range(5))}

)

or break things up:

rangeNeg5 = (-y for y in range(5))

print(

{x:x**2 for x in rangeNeg5}

)

Efficiency comparison for python3

map is now lazy:

% python3 -mtimeit -s 'xs=range(1000)' 'f=lambda x:x' 'z=map(f,xs)'

1000000 loops, best of 3: 0.336 usec per loop ^^^^^^^^^

Therefore if you will not be using all your data, or do not know ahead of time how much data you need, map in python3 (and generator expressions in python2 or python3) will avoid calculating their values until the last moment necessary. Usually this will usually outweigh any overhead from using map. The downside is that this is very limited in python as opposed to most functional languages: you only get this benefit if you access your data left-to-right "in order", because python generator expressions can only be evaluated the order x[0], x[1], x[2], ....

However let's say that we have a pre-made function f we'd like to map, and we ignore the laziness of map by immediately forcing evaluation with list(...). We get some very interesting results:

% python3 -mtimeit -s 'xs=range(1000)' 'f=lambda x:x' 'z=list(map(f,xs))'

10000 loops, best of 3: 165/124/135 usec per loop ^^^^^^^^^^^^^^^

for list(<map object>)

% python3 -mtimeit -s 'xs=range(1000)' 'f=lambda x:x' 'z=[f(x) for x in xs]'

10000 loops, best of 3: 181/118/123 usec per loop ^^^^^^^^^^^^^^^^^^

for list(<generator>), probably optimized

% python3 -mtimeit -s 'xs=range(1000)' 'f=lambda x:x' 'z=list(f(x) for x in xs)'

1000 loops, best of 3: 215/150/150 usec per loop ^^^^^^^^^^^^^^^^^^^^^^

for list(<generator>)

In results are in the form AAA/BBB/CCC where A was performed with on a circa-2010 Intel workstation with python 3.?.?, and B and C were performed with a circa-2013 AMD workstation with python 3.2.1, with extremely different hardware. The result seems to be that map and list comprehensions are comparable in performance, which is most strongly affected by other random factors. The only thing we can tell seems to be that, oddly, while we expect list comprehensions [...] to perform better than generator expressions (...), map is ALSO more efficient that generator expressions (again assuming that all values are evaluated/used).

It is important to realize that these tests assume a very simple function (the identity function); however this is fine because if the function were complicated, then performance overhead would be negligible compared to other factors in the program. (It may still be interesting to test with other simple things like f=lambda x:x+x)

If you're skilled at reading python assembly, you can use the dis module to see if that's actually what's going on behind the scenes:

>>> listComp = compile('[f(x) for x in xs]', 'listComp', 'eval')

>>> dis.dis(listComp)

1 0 LOAD_CONST 0 (<code object <listcomp> at 0x2511a48, file "listComp", line 1>)

3 MAKE_FUNCTION 0

6 LOAD_NAME 0 (xs)

9 GET_ITER

10 CALL_FUNCTION 1

13 RETURN_VALUE

>>> listComp.co_consts

(<code object <listcomp> at 0x2511a48, file "listComp", line 1>,)

>>> dis.dis(listComp.co_consts[0])

1 0 BUILD_LIST 0

3 LOAD_FAST 0 (.0)

>> 6 FOR_ITER 18 (to 27)

9 STORE_FAST 1 (x)

12 LOAD_GLOBAL 0 (f)

15 LOAD_FAST 1 (x)

18 CALL_FUNCTION 1

21 LIST_APPEND 2

24 JUMP_ABSOLUTE 6

>> 27 RETURN_VALUE

>>> listComp2 = compile('list(f(x) for x in xs)', 'listComp2', 'eval')

>>> dis.dis(listComp2)

1 0 LOAD_NAME 0 (list)

3 LOAD_CONST 0 (<code object <genexpr> at 0x255bc68, file "listComp2", line 1>)

6 MAKE_FUNCTION 0

9 LOAD_NAME 1 (xs)

12 GET_ITER

13 CALL_FUNCTION 1

16 CALL_FUNCTION 1

19 RETURN_VALUE

>>> listComp2.co_consts

(<code object <genexpr> at 0x255bc68, file "listComp2", line 1>,)

>>> dis.dis(listComp2.co_consts[0])

1 0 LOAD_FAST 0 (.0)

>> 3 FOR_ITER 17 (to 23)

6 STORE_FAST 1 (x)

9 LOAD_GLOBAL 0 (f)

12 LOAD_FAST 1 (x)

15 CALL_FUNCTION 1

18 YIELD_VALUE

19 POP_TOP

20 JUMP_ABSOLUTE 3

>> 23 LOAD_CONST 0 (None)

26 RETURN_VALUE

>>> evalledMap = compile('list(map(f,xs))', 'evalledMap', 'eval')

>>> dis.dis(evalledMap)

1 0 LOAD_NAME 0 (list)

3 LOAD_NAME 1 (map)

6 LOAD_NAME 2 (f)

9 LOAD_NAME 3 (xs)

12 CALL_FUNCTION 2

15 CALL_FUNCTION 1

18 RETURN_VALUE

It seems it is better to use [...] syntax than list(...). Sadly the map class is a bit opaque to disassembly, but we can make due with our speed test.

map and filter along with standard library itertools are inherently bad style. Unless GvR actually says they were either a terrible mistake or solely for performance, the only natural conclusion if that's what "Pythonicness" says is to forget about it as stupid ;-) –

Calamander map/filter was a great idea for Python 3, and only a rebellion by other Pythonistas kept them in the built-in namespace (while reduce was moved to functools). I personally disagree (map and filter are fine with predefined, particularly built-in, functions, just never use them if a lambda would be needed), but GvR has basically called them not Pythonic for years. –

Questa itertools? The part I quote from this answer is the main claim that befuddles me. I don't know whether in his ideal world, map and filter would move to itertools (or functools) or go entirely, but whichever is the case, once one says that itertools is unPythonic in its entirety, then I don't really know what "Pythonic" is supposed to mean but I don't think it can be anything similar to "what GvR recommends people use". –

Calamander map/filter, not itertools. Functional programming is perfectly Pythonic (itertools, functools and operator were all designed specifically with functional programming in mind, and I use functional idioms in Python all the time), and itertools provides features that would be a pain to implement yourself, It's specifically map and filter being redundant with generator expressions that made Guido hate them. itertools has always been fine. –

Questa list(map(foo, x)). Since python 3, if you need a list and not a generator, I don't see any case where map, filter and the likes are more readable than a list comprehension. The rest of your argument for generator comprehensions stands. –

Beastly Python 2: You should use map and filter instead of list comprehensions.

An objective reason why you should prefer them even though they're not "Pythonic" is this:

They require functions/lambdas as arguments, which introduce a new scope.

I've gotten bitten by this more than once:

for x, y in somePoints:

# (several lines of code here)

squared = [x ** 2 for x in numbers]

# Oops, x was silently overwritten!

but if instead I had said:

for x, y in somePoints:

# (several lines of code here)

squared = map(lambda x: x ** 2, numbers)

then everything would've been fine.

You could say I was being silly for using the same variable name in the same scope.

I wasn't. The code was fine originally -- the two xs weren't in the same scope.

It was only after I moved the inner block to a different section of the code that the problem came up (read: problem during maintenance, not development), and I didn't expect it.

Yes, if you never make this mistake then list comprehensions are more elegant.

But from personal experience (and from seeing others make the same mistake) I've seen it happen enough times that I think it's not worth the pain you have to go through when these bugs creep into your code.

Conclusion:

Use map and filter. They prevent subtle hard-to-diagnose scope-related bugs.

Side note:

Don't forget to consider using imap and ifilter (in itertools) if they are appropriate for your situation!

list(x+2 for x in objs). Right? –

Rambutan map and/or filter. If anything, the most direct and logical translation to avoid your problem is not to map(lambda x: x ** 2, numbers) but rather to a generator expression list(x ** 2 for x in numbers) which doesn't leak, as JeromeJ has already pointed out. Look Mehrdad, don't take a downvote so personally, I just strongly disagree with your reasoning here. –

Deeplaid imap, not map, which changes the question entirely. But if you just want to find a reason to pick on the answer then just keep your downvote... I don't have much else to say honestly. –

Rebel imap and ifilter ? –

Sherrillsherrington for i, for j, for k, for l in completely disjoint iterators just to avoid reusing variable names, and you very quickly run out of reasonable variable names that way. Which you can avoid if you don't use comprehensions. –

Rebel map is 7x faster on the first original timeit example and 3.5x on the second. –

Rudimentary Actually, map and list comprehensions behave quite differently in the Python 3 language. Take a look at the following Python 3 program:

def square(x):

return x*x

squares = map(square, [1, 2, 3])

print(list(squares))

print(list(squares))

You might expect it to print the line "[1, 4, 9]" twice, but instead it prints "[1, 4, 9]" followed by "[]". The first time you look at squares it seems to behave as a sequence of three elements, but the second time as an empty one.

In the Python 2 language map returns a plain old list, just like list comprehensions do in both languages. The crux is that the return value of map in Python 3 (and imap in Python 2) is not a list - it's an iterator!

The elements are consumed when you iterate over an iterator unlike when you iterate over a list. This is why squares looks empty in the last print(list(squares)) line.

To summarize:

- When dealing with iterators you have to remember that they are stateful and that they mutate as you traverse them.

- Lists are more predictable since they only change when you explicitly mutate them; they are containers.

- And a bonus: numbers, strings, and tuples are even more predictable since they cannot change at all; they are values.

map to produce a data structure, not an iterator. But maybe lazy iterators are easier than lazy data structures. Food for thought. Thanks @Sherbet –

Coastguardsman Here is one possible case:

map(lambda op1,op2: op1*op2, list1, list2)

versus:

[op1*op2 for op1,op2 in zip(list1,list2)]

I am guessing the zip() is an unfortunate and unnecessary overhead you need to indulge in if you insist on using list comprehensions instead of the map. Would be great if someone clarifies this whether affirmatively or negatively.

zip lazy by using itertools.izip –

Trapezium itertools.izip when it's not even required for them, that would have been dumb. * Even though this also depends on us. Python3, IMO at the very least, is already ready (so we can push people using it) but I now this isn't the only 'prob'… –

Rambutan map(operator.mul, list1, list2). It's on these very simple left side expressions that comprehensions get clumsy. –

Interlope map can automatically (if you think it's good) or implicitly (if you think it's bad) pair up the arguments, whereas the equivalent list comprehension requires calling zip. This is overlooked far too often. –

Leandroleaning If you plan on writing any asynchronous, parallel, or distributed code, you will probably prefer map over a list comprehension -- as most asynchronous, parallel, or distributed packages provide a map function to overload python's map. Then by passing the appropriate map function to the rest of your code, you may not have to modify your original serial code to have it run in parallel (etc).

I find list comprehensions are generally more expressive of what I'm trying to do than map - they both get it done, but the former saves the mental load of trying to understand what could be a complex lambda expression.

There's also an interview out there somewhere (I can't find it offhand) where Guido lists lambdas and the functional functions as the thing he most regrets about accepting into Python, so you could make the argument that they're un-Pythonic by virtue of that.

const keyword in C++ is a great triumph along these lines. –

Publication lambda's have been made so lame (no statements..) that they're difficult to use and limited anyways. –

Sherrillsherrington So since Python 3, map() is an iterator, you need to keep in mind what do you need: an iterator or list object.

As @AlexMartelli already mentioned, map() is faster than list comprehension only if you don't use lambda function.

I will present you some time comparisons.

Python 3.5.2 and CPython

I've used Jupiter notebook and especially %timeit built-in magic command

Measurements: s == 1000 ms == 1000 * 1000 µs = 1000 * 1000 * 1000 ns

Setup:

x_list = [(i, i+1, i+2, i*2, i-9) for i in range(1000)]

i_list = list(range(1000))

Built-in function:

%timeit map(sum, x_list) # creating iterator object

# Output: The slowest run took 9.91 times longer than the fastest.

# This could mean that an intermediate result is being cached.

# 1000000 loops, best of 3: 277 ns per loop

%timeit list(map(sum, x_list)) # creating list with map

# Output: 1000 loops, best of 3: 214 µs per loop

%timeit [sum(x) for x in x_list] # creating list with list comprehension

# Output: 1000 loops, best of 3: 290 µs per loop

lambda function:

%timeit map(lambda i: i+1, i_list)

# Output: The slowest run took 8.64 times longer than the fastest.

# This could mean that an intermediate result is being cached.

# 1000000 loops, best of 3: 325 ns per loop

%timeit list(map(lambda i: i+1, i_list))

# Output: 1000 loops, best of 3: 183 µs per loop

%timeit [i+1 for i in i_list]

# Output: 10000 loops, best of 3: 84.2 µs per loop

There is also such thing as generator expression, see PEP-0289. So i thought it would be useful to add it to comparison

%timeit (sum(i) for i in x_list)

# Output: The slowest run took 6.66 times longer than the fastest.

# This could mean that an intermediate result is being cached.

# 1000000 loops, best of 3: 495 ns per loop

%timeit list((sum(x) for x in x_list))

# Output: 1000 loops, best of 3: 319 µs per loop

%timeit (i+1 for i in i_list)

# Output: The slowest run took 6.83 times longer than the fastest.

# This could mean that an intermediate result is being cached.

# 1000000 loops, best of 3: 506 ns per loop

%timeit list((i+1 for i in i_list))

# Output: 10000 loops, best of 3: 125 µs per loop

You need list object:

Use list comprehension if it's custom function, use list(map()) if there is builtin function

You don't need list object, you just need iterable one:

Always use map()!

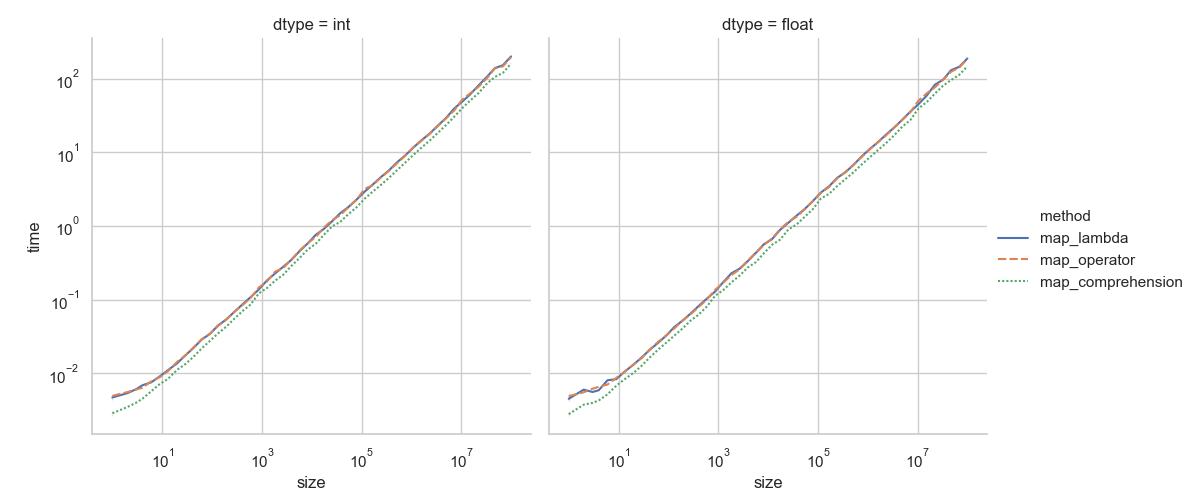

I ran a quick test comparing three methods for invoking the method of an object. The time difference, in this case, is negligible and is a matter of the function in question (see @Alex Martelli's response). Here, I looked at the following methods:

# map_lambda

list(map(lambda x: x.add(), vals))

# map_operator

from operator import methodcaller

list(map(methodcaller("add"), vals))

# map_comprehension

[x.add() for x in vals]

I looked at lists (stored in the variable vals) of both integers (Python int) and floating point numbers (Python float) for increasing list sizes. The following dummy class DummyNum is considered:

class DummyNum(object):

"""Dummy class"""

__slots__ = 'n',

def __init__(self, n):

self.n = n

def add(self):

self.n += 5

Specifically, the add method. The __slots__ attribute is a simple optimization in Python to define the total memory needed by the class (attributes), reducing memory size.

Here are the resulting plots.

As stated previously, the technique used makes a minimal difference and you should code in a way that is most readable to you, or in the particular circumstance. In this case, the list comprehension (map_comprehension technique) is fastest for both types of additions in an object, especially with shorter lists.

Visit this pastebin for the source used to generate the plot and data.

map is faster only if the function is called in the exact same way (i.e. [*map(f, vals)] vs. [f(x) for x in vals]). So list(map(methodcaller("add"), vals)) is faster than [methodcaller("add")(x) for x in vals]. map may not be faster when the looping counterpart uses a different calling method that can avoid some overhead (e.g. x.add() avoids the methodcaller or lambda expression overhead). For this specific test case, [*map(DummyNum.add, vals)] would be faster (because DummyNum.add(x) and x.add() have basically the same performance). –

Handshake list() calls are slightly slower than list comprehensions. For a fair comparison you need to write [*map(...)]. –

Handshake list() calls increased overhead. Should've spent more time reading through the answers. I will re-run these tests for a fair comparison, however negligible the differences may be. –

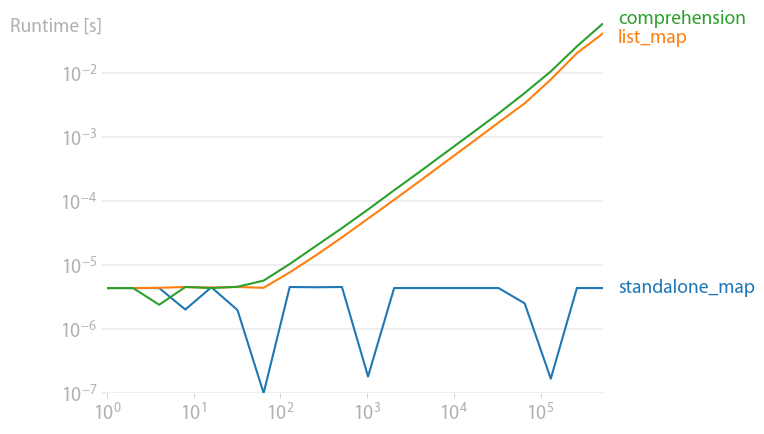

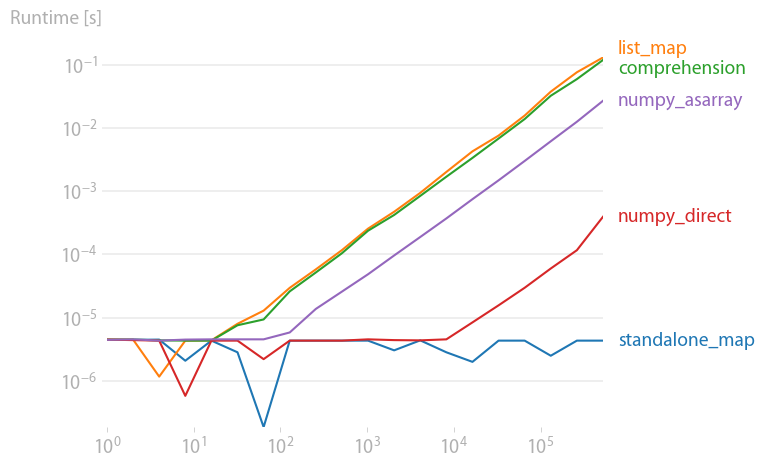

Unspoiled I timed some of the results with perfplot (a project of mine).

As others have noted, map really only returns an iterator so it's a constant-time operation. When realizing the iterator by list(), it's on par with list comprehensions. Depending on the expression, either one might have a slight edge but it's hardly significant.

Note that arithmetic operations like x ** 2 are much faster in NumPy, especially if the input data is already a NumPy array.

hex:

x ** 2:

Code to reproduce the plots:

import perfplot

def standalone_map(data):

return map(hex, data)

def list_map(data):

return list(map(hex, data))

def comprehension(data):

return [hex(x) for x in data]

b = perfplot.bench(

setup=lambda n: list(range(n)),

kernels=[standalone_map, list_map, comprehension],

n_range=[2 ** k for k in range(20)],

equality_check=None,

)

b.save("out.png")

b.show()

import perfplot

import numpy as np

def standalone_map(data):

return map(lambda x: x ** 2, data[0])

def list_map(data):

return list(map(lambda x: x ** 2, data[0]))

def comprehension(data):

return [x ** 2 for x in data[0]]

def numpy_asarray(data):

return np.asarray(data[0]) ** 2

def numpy_direct(data):

return data[1] ** 2

b = perfplot.bench(

setup=lambda n: (list(range(n)), np.arange(n)),

kernels=[standalone_map, list_map, comprehension, numpy_direct, numpy_asarray],

n_range=[2 ** k for k in range(20)],

equality_check=None,

)

b.save("out2.png")

b.show()

standalone_map code is merely instantiating a map object and doesn't perform any iteration - i.e., the computation of the hex/square values never actually happens. This explains the performance results, of course. It doesn't seem very useful to include this in the plots. –

Leandroleaning I tried the code by @alex-martelli but found some discrepancies

python -mtimeit -s "xs=range(123456)" "map(hex, xs)"

1000000 loops, best of 5: 218 nsec per loop

python -mtimeit -s "xs=range(123456)" "[hex(x) for x in xs]"

10 loops, best of 5: 19.4 msec per loop

map takes the same amount of time even for very large ranges while using list comprehension takes a lot of time as is evident from my code. So apart from being considered "unpythonic", I have not faced any performance issues relating to usage of map.

map returns a list. In Python 3, map is lazily evaluated, so simply calling map doesn't compute any of the new list elements, hence why you get such short times. –

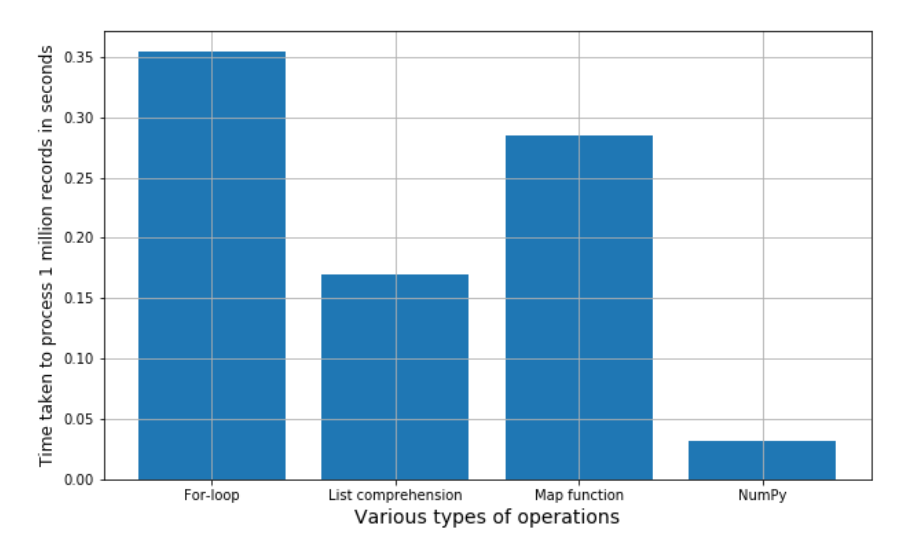

Undertaker Performance measurement

Image Source: Experfy

You can see for yourself which is better between - list comprehension and the map function.

(list comprehension takes less time to process 1 million records when compared to a map function.)

I consider that the most Pythonic way is to use a list comprehension instead of map and filter. The reason is that list comprehensions are clearer than map and filter.

In [1]: odd_cubes = [x ** 3 for x in range(10) if x % 2 == 1] # using a list comprehension

In [2]: odd_cubes_alt = list(map(lambda x: x ** 3, filter(lambda x: x % 2 == 1, range(10)))) # using map and filter

In [3]: odd_cubes == odd_cubes_alt

Out[3]: True

As you an see, a comprehension does not require extra lambda expressions as map needs. Furthermore, a comprehension also allows filtering easily, while map requires filter to allow filtering.

My use case:

def sum_items(*args):

return sum(args)

list_a = [1, 2, 3]

list_b = [1, 2, 3]

list_of_sums = list(map(sum_items,

list_a, list_b))

>>> [3, 6, 9]

comprehension = [sum(items) for items in iter(zip(list_a, list_b))]

I found myself starting to use more map, I thought map could be slower than comp due to pass and return arguments, that's why I found this post.

I believe using map could be much more readable and flexible, especially when I need to construct the values of the list.

You actually understand it when you read it if you used map.

def pair_list_items(*args):

return args

packed_list = list(map(pair_list_items,

lista, *listb, listc.....listn))

Plus the flexibility bonus. And thank for all other answers, plus the performance bonus.

© 2022 - 2024 — McMap. All rights reserved.