I'm working through some examples of Linear Regression under different scenarios, comparing the results from using Normalizer and StandardScaler, and the results are puzzling.

I'm using the Boston housing dataset, and prepping it this way:

import numpy as np

import pandas as pd

from sklearn.datasets import load_boston

from sklearn.preprocessing import Normalizer

from sklearn.preprocessing import StandardScaler

from sklearn.linear_model import LinearRegression

# Load the data

boston = load_boston()

df = pd.DataFrame(boston.data)

df.columns = boston.feature_names

df['PRICE'] = boston.target

I'm currently trying to reason about the results I get from the following scenarios:

- Initializing Linear Regression with the parameter normalize=True vs. using Normalizer

- Initializing Linear Regression with the parameter fit_intercept=False, with and without standardization.

Collectively, I find the results confusing.

Here's how I'm setting everything up:

# Prep the data

X = df.iloc[:, :-1]

y = df.iloc[:, -1:]

normal_X = Normalizer().fit_transform(X)

scaled_X = StandardScaler().fit_transform(X)

#now prepare some of the models

reg1 = LinearRegression().fit(X, y)

reg2 = LinearRegression(normalize=True).fit(X, y)

reg3 = LinearRegression().fit(normal_X, y)

reg4 = LinearRegression().fit(scaled_X, y)

reg5 = LinearRegression(fit_intercept=False).fit(scaled_X, y)

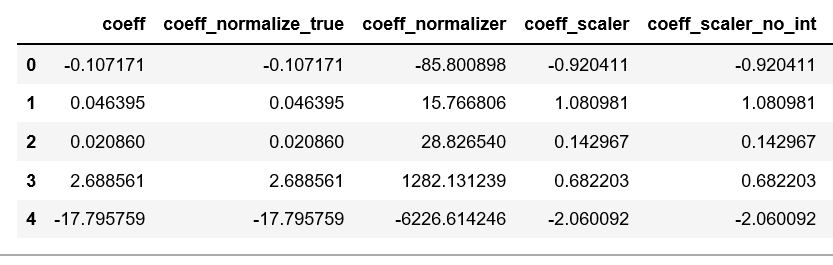

Then, I created 3 separate dataframes to compare the R^2 scores, coefficient values, and predictions from each model.

To create the dataframe to compare coefficient values from each model, I did the following:

#Create a dataframe of the coefficients

coef = pd.DataFrame({

'coeff': reg1.coef_[0],

'coeff_normalize_true': reg2.coef_[0],

'coeff_normalizer': reg3.coef_[0],

'coeff_scaler': reg4.coef_[0],

'coeff_scaler_no_int': reg5.coef_[0]

})

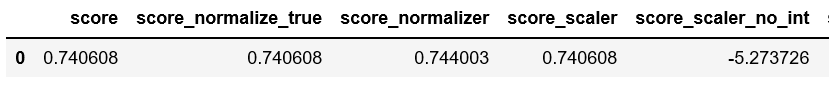

Here's how I created the dataframe to compare the R^2 values from each model:

scores = pd.DataFrame({

'score': reg1.score(X, y),

'score_normalize_true': reg2.score(X, y),

'score_normalizer': reg3.score(normal_X, y),

'score_scaler': reg4.score(scaled_X, y),

'score_scaler_no_int': reg5.score(scaled_X, y)

}, index=range(1)

)

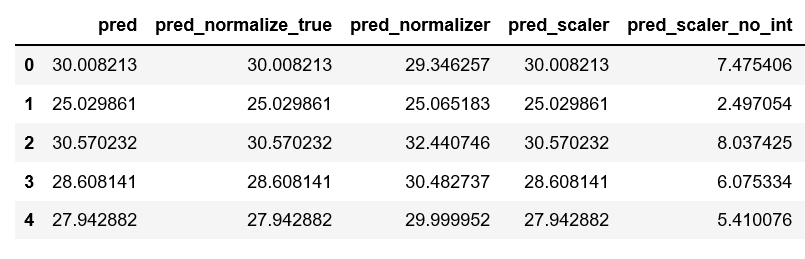

Lastly, here's the dataframe that compares the predictions from each:

predictions = pd.DataFrame({

'pred': reg1.predict(X).ravel(),

'pred_normalize_true': reg2.predict(X).ravel(),

'pred_normalizer': reg3.predict(normal_X).ravel(),

'pred_scaler': reg4.predict(scaled_X).ravel(),

'pred_scaler_no_int': reg5.predict(scaled_X).ravel()

}, index=range(len(y)))

Here are the resulting dataframes:

I have three questions that I can't reconcile:

- Why is there absolutely no difference between the first two models? It appears that setting normalize=True does nothing. I can understand having predictions and R^2 values that are the same, but my features have different numerical scales, so I'm not sure why normalizing would have no effect at all. This is doubly confusing when you consider that using StandardScaler changes the coefficients considerably.

- I don't understand why the model using Normalizer causes such radically different coefficient values from the others, especially when the model with LinearRegression(normalize=True) makes no change at all.

If you were to look at the documentation for each, it appears they're very similar if not identical.

From the docs on sklearn.linear_model.LinearRegression():

normalize : boolean, optional, default False

This parameter is ignored when fit_intercept is set to False. If True, the regressors X will be normalized before regression by subtracting the mean and dividing by the l2-norm.

Meanwhile, the docs on sklearn.preprocessing.Normalizer state that it normalizes to the l2 norm by default.

I don't see a difference between what these two options do, and I don't see why one would have such radical differences in coefficient values from the other.
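One way to probe this is a minimal NumPy sketch on made-up data (not the Boston data). It emulates both transformations by hand instead of calling scikit-learn, under two assumptions: that Normalizer rescales each sample (row) to unit l2 norm, as its documentation describes, and that normalize=True works per feature (column), which is how I read the quoted docstring. The helper ols_predict is hypothetical and only stands in for a plain least-squares fit with an intercept.

```python
import numpy as np

rng = np.random.default_rng(0)

# Made-up data: 50 samples, 3 features on very different scales (assumption, not the Boston data)
X = rng.normal(size=(50, 3)) * np.array([1.0, 10.0, 100.0]) + np.array([5.0, 50.0, 500.0])
y = 3.0 + X @ np.array([2.0, -0.5, 0.1]) + rng.normal(scale=0.5, size=50)

# Row-wise l2 scaling: every sample is divided by its own norm (assumed Normalizer behaviour)
row_scaled = X / np.linalg.norm(X, axis=1, keepdims=True)

# Column-wise scaling: subtract each feature's mean, divide by that feature's l2 norm
# (assumed behaviour of normalize=True, based on the docstring quoted above)
centered = X - X.mean(axis=0)
col_scaled = centered / np.linalg.norm(centered, axis=0)


def ols_predict(features, target):
    """Hypothetical helper: least-squares fit with an intercept, returns fitted values."""
    A = np.column_stack([np.ones(len(features)), features])
    beta, *_ = np.linalg.lstsq(A, target, rcond=None)
    return A @ beta


pred_raw = ols_predict(X, y)
pred_col = ols_predict(col_scaled, y)
pred_row = ols_predict(row_scaled, y)

# Column-wise scaling is an invertible per-feature change of units, so the fit is unchanged
print(np.allclose(pred_raw, pred_col))
# Row-wise scaling changes each sample differently, so the fit is a different model
print(np.allclose(pred_raw, pred_row))
```

If these assumptions hold, the two options act along different axes of X, which would be consistent with the per-feature version leaving predictions untouched while the per-sample version changes the coefficients.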

- The results from the model using the StandardScaler are coherent to me, but I don't understand why the model using StandardScaler and setting fit_intercept=False performs so poorly.

From the docs on the Linear Regression module:

fit_intercept : boolean, optional, default True

whether to calculate the intercept for this model. If set to False, no intercept will be used in calculations (e.g. data is expected to be already centered).

The StandardScaler centers your data, so I don't understand why using it with fit_intercept=False produces incoherent results.
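A small check that might narrow this down, again as a NumPy-only sketch on made-up data rather than the models above: standardizing X centers the features but leaves y untouched. The sketch standardizes by hand with the population standard deviation (which is what StandardScaler uses by default) and compares a least-squares fit with and without an intercept column. All variable names here are illustrative.

```python
import numpy as np

rng = np.random.default_rng(0)

# Made-up data with a target whose mean is far from zero
X = rng.normal(loc=[5.0, 50.0], scale=[1.0, 10.0], size=(100, 2))
y = 20.0 + X @ np.array([2.0, -0.5]) + rng.normal(scale=0.1, size=100)

# Standardize the features only: zero mean, unit population std per column
Xs = (X - X.mean(axis=0)) / X.std(axis=0)

# Least squares without an intercept (analogous to fit_intercept=False)
beta_no_int, *_ = np.linalg.lstsq(Xs, y, rcond=None)
pred_no_int = Xs @ beta_no_int

# Least squares with an explicit intercept column
A = np.column_stack([np.ones(len(Xs)), Xs])
beta_int, *_ = np.linalg.lstsq(A, y, rcond=None)
pred_int = A @ beta_int

# The slopes agree; the predictions differ by a constant equal to the mean of y
print(beta_no_int, beta_int[1:])
print(y.mean(), (pred_int - pred_no_int)[:3])
```

In this toy setup the slopes are identical in both fits, and the no-intercept predictions average to zero because every column of Xs does. The whole gap is the mean of y, which suggests looking at whether the target would also need centering for fit_intercept=False to behave as expected.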