I faced the same problem. Here is the strategy I used to send text that is much, much longer than OpenAIs GPT3 token limit.

Depending on the model (Davinci, Curie, etc.) used, requests can use up to 4097 tokens shared between prompt and completion.

- Prompt being the input you send to OpenAI, i.e. your "command", e.g. "Summarize the following text" plus the text itself

- Completion being the response, i.e. the entire summary of your text

If your prompt is 4000 tokens, your completion can be 97 tokens at most. For more information on OpenAI tokens and how to count them, see here.

To ensure that we don’t exceed the maximum length limit for prompt plus completion, we need to ensure that prompt (i.e. your text) and completion (i.e. the summary) put together always fits into the 4097 token boundary.

For that reason we split the entire text into multiple text chunks, summarize each chunk independently and finally merge all summarized chunks using a simple " ".join() function.

Maximum Number of Words - Token-to-Word Conversion

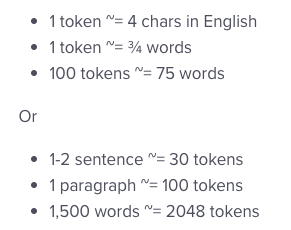

OpenAI has a fixed limit on the number of tokens. However, a token is not the same as a word. Hence, we first need to calculate the maximum number of words we can send to OpenAI. The documentation says:

![enter image description here]()

Given the token-to-word ratio, we can send approximately 2900 words to OpenAI's GPT3 assuming a 5 sentence summary per text chunk.

- Max tokens per request: 4000 tokens (leaving 97 tokens as a safety buffer) = 3000 words

- Max prompt tokens: “Summarize the following text in five sentences” has 7 words = 10 tokens

- Max tokens of returned summary (5 sentences): 20 words per sentence. 5 * 20 = 100 words = 133 tokens

- Max tokens of text chunk: 4000 - 10 - 133 = 3857 tokens = 2900 words

Text Chunking

We can choose from a plethora of strategies to split up the entire text into smaller chunks.

The simplest approach is creating a single list of all words by splitting the entire text on whitespaces, and then creating buckets of words with words evenly distributed across all buckets. The downside is that we are likely to split a sentence half-way through and lose the meaning of the sentence because GPT ends up summarizing the first half of the sentence independently from the second half — ignoring any relations between the two chunks.

Other options include tokenizers such as SentencePiece and spaCy’s sentence splitter. Choosing the later generates the most stable results.

Implementation of Text Chunking with spaCy

The following example splits the text “My first birthday was great. My 2. was even better.” into a list of two sentences.

python -m spacy download en_core_web_sm

import spacy

from spacy.lang.en import English

nlp = spacy.load("en_core_web_sm")

text = "My first birthday was great. My 2. was even better."

for sentence in nlp(text).sents:

print(sentence.text)

Output

My first birthday was great.

My 2. was even better.

spaCy correctly detected the second sentence instead of splitting it after the “2.”.

Now, let’s write a text_to_chunks helper function to generate chunks of sentences where each chunk holds at most 2700 words. 2900 words was the initially calculated word limit, but we want to ensure to have enough buffer for words that are longer than 1.33 tokens.

def text_to_chunks(text):

chunks = [[]]

chunk_total_words = 0

sentences = nlp(text)

for sentence in sentences.sents:

chunk_total_words += len(sentence.text.split(" "))

if chunk_total_words > 2700:

chunks.append([])

chunk_total_words = len(sentence.text.split(" "))

chunks[len(chunks)-1].append(sentence.text)

return chunks

An alternative approach to determine the number of tokens of a text was recently introduced by OpenAI. The approach uses tiktoken and is tailored towards OpenAI's models.

import tiktoken

encoding = tiktoken.encoding_for_model("gpt-3.5-turbo")

number_of_tokens = len(encoding.encode("tiktoken is great!"))

print(number_of_tokens)

Next, we wrap the text summarization logic into a summarize_text function.

def summarize_text(text):

prompt = f"Summarize the following text in 5 sentences:\n{text}"

response = openai.Completion.create(

engine="text-davinci-003",

prompt=prompt,

temperature=0.3,

max_tokens=150, # = 112 words

top_p=1,

frequency_penalty=0,

presence_penalty=1

)

return response["choices"][0]["text"]

Our final piece of code looks like this:

chunks = text_to_chunks(one_large_text)

chunk_summaries = []

for chunk in chunks:

chunk_summary = summarize_text(" ".join(chunk))

chunk_summaries.append(chunk_summary)

summary = " ".join(chunk_summaries)

References