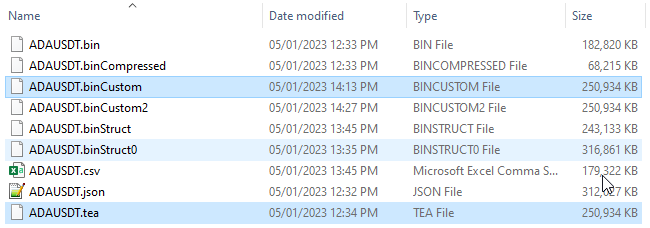

I came across a situation where I have to read binary data from a pretty big file.

While doing so, I realized that the default BinaryReader implementation in .NET is pretty slow. Looking at it with .NET Reflector, I came across this:

```csharp
public virtual int ReadInt32()
{
    if (this.m_isMemoryStream)
    {
        MemoryStream stream = this.m_stream as MemoryStream;
        return stream.InternalReadInt32();
    }
    this.FillBuffer(4);
    return (((this.m_buffer[0] | (this.m_buffer[1] << 8)) | (this.m_buffer[2] << 0x10)) | (this.m_buffer[3] << 0x18));
}
```

This strikes me as extremely inefficient, considering that computers have been designed to work with 32-bit values ever since 32-bit CPUs were introduced.
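For context, the shift/OR expression in the decompiled `ReadInt32` is plain little-endian decoding of four bytes. The shifts themselves are only a few register operations; the per-call overhead of `FillBuffer` going through `Stream.Read` is likely the larger cost. The sketch below, assuming .NET Core 2.1+ (for `System.Buffers.Binary`), shows that the expression and the library call `BinaryPrimitives.ReadInt32LittleEndian` agree. `LittleEndianDemo` is a hypothetical name used only for illustration.

```csharp
using System;
using System.Buffers.Binary;

public static class LittleEndianDemo
{
    // Same composition as the decompiled BinaryReader.ReadInt32:
    // byte 0 is the least significant, byte 3 the most significant.
    public static int ReadInt32Shifts(byte[] b) =>
        b[0] | (b[1] << 8) | (b[2] << 16) | (b[3] << 24);

    // Safe library equivalent. On little-endian hardware modern .NET
    // typically compiles this to a single (possibly unaligned) 32-bit load.
    public static int ReadInt32Library(byte[] b) =>
        BinaryPrimitives.ReadInt32LittleEndian(b);
}
```

For the bytes `78 56 34 12`, both methods return `0x12345678`.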

So I wrote my own (unsafe) FastBinaryReader class, with code like this instead:

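```csharp
using System;
using System.IO;

// Requires compiling with /unsafe (AllowUnsafeBlocks).
public unsafe class FastBinaryReader : IDisposable
{
    // Per-instance scratch buffer; a static one would be shared by all readers.
    private readonly byte[] buffer = new byte[50];

    public Stream BaseStream { get; private set; }

    public FastBinaryReader(Stream input)
    {
        BaseStream = input;
    }

    public int ReadInt32()
    {
        // Stream.Read may return fewer bytes than requested, so loop until 4 arrive.
        for (int read = 0; read < 4; )
        {
            int n = BaseStream.Read(buffer, read, 4 - read);
            if (n == 0) throw new EndOfStreamException();
            read += n;
        }
        fixed (byte* numRef = &buffer[0])
        {
            // Reinterprets the bytes in native order (little-endian on x86/x64).
            return *(int*)numRef;
        }
    }

    ...
}
```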

This is much faster: I managed to shave 5-7 seconds off the time it takes to read a 500 MB file, but it's still pretty slow overall (29 seconds initially, ~22 seconds now with my FastBinaryReader).

It still baffles me why it takes so long to read such a relatively small file. Copying the file from one disk to another takes only a couple of seconds, so disk throughput is not the issue.
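Since a plain file copy is fast, one plausible explanation is per-call overhead: the reader issues one small `Stream.Read` per field or record, and each call pays for a virtual dispatch, argument checks and a buffer copy. A minimal sketch of the opposite approach, assuming fixed-size records, reads large blocks and slices whole records out of them, carrying any partial record over to the next block. `ChunkedReader.ForEachRecord` and its parameters are hypothetical names, not an existing API.

```csharp
using System;
using System.IO;

public static class ChunkedReader
{
    // Invokes onRecord(buffer, offset) for every complete record, where the
    // record occupies buffer[offset .. offset + recordSize - 1].
    // Returns the number of records seen.
    public static long ForEachRecord(Stream input, int recordSize, int chunkSize,
                                     Action<byte[], int> onRecord)
    {
        if (recordSize <= 0 || chunkSize < recordSize)
            throw new ArgumentException("chunkSize must be >= recordSize > 0");
        var buffer = new byte[chunkSize];
        int filled = 0;      // bytes currently held in buffer
        long records = 0;
        while (true)
        {
            // One large read per chunk instead of one small read per field.
            int n = input.Read(buffer, filled, buffer.Length - filled);
            if (n == 0) break; // end of stream
            filled += n;
            int offset = 0;
            while (filled - offset >= recordSize)
            {
                onRecord(buffer, offset);
                offset += recordSize;
                records++;
            }
            // Move the trailing partial record (if any) to the front.
            Array.Copy(buffer, offset, buffer, 0, filled - offset);
            filled -= offset;
        }
        if (filled != 0) throw new EndOfStreamException("Trailing partial record.");
        return records;
    }
}
```

A chunk size on the order of 1 MB (`1 << 20`) is a reasonable starting point; the callback decodes fields directly from `buffer` at `offset`, so no per-record array is allocated.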

I then inlined the ReadInt32 (etc.) calls and ended up with this code:

```csharp
using (var br = new FastBinaryReader(new FileStream(cacheFilePath, FileMode.Open, FileAccess.Read,
                                                    FileShare.Read, 0x10000, FileOptions.SequentialScan)))
{
    // Deserialize fixed-size records until the end of the file.
    while (br.BaseStream.Position < br.BaseStream.Length)
    {
        var doc = DocumentData.Deserialize(br);
        docData[doc.InternalId] = doc;
    }
}
```
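The loop above evaluates `BaseStream.Position` and `BaseStream.Length` once per record. In the .NET Framework reference source, `FileStream.Length` calls the Win32 `GetFileSize` function on every access, so it may be worth computing the record count once up front. A minimal sketch, assuming fixed-size records and a file that does not grow while it is read; `RecordLoop.ReadAllRecords` is a hypothetical helper.

```csharp
using System;
using System.IO;

public static class RecordLoop
{
    // Computes the number of remaining records once, then calls readOne that
    // many times. readOne must consume exactly recordSize bytes per call.
    public static long ReadAllRecords(Stream s, int recordSize, Action<Stream> readOne)
    {
        long remaining = s.Length - s.Position; // queried once, not per record
        if (remaining % recordSize != 0)
            throw new InvalidDataException("Stream does not hold a whole number of records.");
        long count = remaining / recordSize;
        for (long i = 0; i < count; i++)
            readOne(s);
        return count;
    }
}
```

With the question's types this would be called as `RecordLoop.ReadAllRecords(br.BaseStream, 29, _ => { var doc = DocumentData.Deserialize(br); docData[doc.InternalId] = doc; })`.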

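```csharp
public static unsafe DocumentData Deserialize(FastBinaryReader reader)
{
    // Record layout: int, int, long, float, float, byte, int = 29 bytes, packed.
    byte[] buffer = new byte[4 + 4 + 8 + 4 + 4 + 1 + 4];
    // Stream.Read may return fewer bytes than requested, so loop until the record is complete.
    int read = 0;
    while (read < buffer.Length)
    {
        int n = reader.BaseStream.Read(buffer, read, buffer.Length - read);
        if (n == 0) throw new EndOfStreamException("Truncated record.");
        read += n;
    }
    DocumentData data = new DocumentData();
    fixed (byte* numRef = &buffer[0])
    {
        data.InternalId = *((int*)&numRef[0]);
        data.b = *((int*)&numRef[4]);
        data.c = *((long*)&numRef[8]);
        data.d = *((float*)&numRef[16]);
        data.e = *((float*)&numRef[20]);
        data.f = numRef[24];
        data.g = *((int*)&numRef[25]); // unaligned read; fine on x86/x64
    }
    return data;
}
```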
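The offsets above imply this 29-byte layout: `InternalId` at bytes 0-3, `b` at 4-7, `c` at 8-15, `d` at 16-19, `e` at 20-23, `f` at 24 and `g` at 25-28. As a sketch, the same decoding can be done without `unsafe` code or pinning using `BinaryPrimitives`, assuming .NET 5+ (for the `float` overloads) and a little-endian file. `DocumentRecord` is a hypothetical stand-in for the question's `DocumentData`, with field types inferred from the pointer casts.

```csharp
using System;
using System.Buffers.Binary;

public sealed class DocumentRecord
{
    public int InternalId; public int b; public long c;
    public float d; public float e; public byte f; public int g;

    public const int RecordSize = 4 + 4 + 8 + 4 + 4 + 1 + 4; // 29 bytes, packed

    // Decodes one little-endian record starting at r[0].
    public static DocumentRecord Parse(ReadOnlySpan<byte> r) => new DocumentRecord
    {
        InternalId = BinaryPrimitives.ReadInt32LittleEndian(r),
        b = BinaryPrimitives.ReadInt32LittleEndian(r.Slice(4)),
        c = BinaryPrimitives.ReadInt64LittleEndian(r.Slice(8)),
        d = BinaryPrimitives.ReadSingleLittleEndian(r.Slice(16)),
        e = BinaryPrimitives.ReadSingleLittleEndian(r.Slice(20)),
        f = r[24],
        g = BinaryPrimitives.ReadInt32LittleEndian(r.Slice(25)), // unaligned offset
    };
}
```

Because `Parse` takes a span, it can decode a record in place from a larger buffer, e.g. `DocumentRecord.Parse(buffer.AsSpan(offset, DocumentRecord.RecordSize))`, instead of allocating a new 29-byte array per record.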

Any further ideas on how to make this even faster? I was thinking I could perhaps use marshalling to map the entire file straight into memory on top of some custom structure, since the data is linear, fixed-size, and sequential.
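One way to approximate the "map the file onto a structure" idea on modern .NET (Core 2.1+ / System.Memory) is to reinterpret a byte buffer as a span of packed structs with `MemoryMarshal.Cast`, instead of copying each record out with `Marshal.PtrToStructure`. This is a sketch under stated assumptions: `PackedRecord` and `RecordOverlay` are hypothetical names, the field types are inferred from the question's pointer casts, the file is assumed to be in the machine's native byte order, and field reads are unaligned (fine on x86/x64).

```csharp
using System;
using System.IO;
using System.Runtime.InteropServices;

// Pack = 1 removes padding so the struct matches the 29-byte on-disk record.
[StructLayout(LayoutKind.Sequential, Pack = 1)]
public struct PackedRecord
{
    public int InternalId; public int B; public long C;
    public float D; public float E; public byte F; public int G;
}

public static class RecordOverlay
{
    // Views the raw bytes as records in place: no per-field decoding, no copies.
    public static ReadOnlySpan<PackedRecord> View(byte[] bytes)
    {
        if (bytes.Length % Marshal.SizeOf<PackedRecord>() != 0)
            throw new InvalidDataException("Data is not a whole number of records.");
        return MemoryMarshal.Cast<byte, PackedRecord>(new ReadOnlySpan<byte>(bytes));
    }
}
```

Usage might look like `var records = RecordOverlay.View(File.ReadAllBytes(cacheFilePath));`. `File.ReadAllBytes` is limited to roughly 2 GB, which is fine for a 500 MB file, and the returned `ReadOnlySpan` is a ref struct, so it cannot be stored in a class field; copy out the records you need to keep.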

SOLVED: I came to the conclusion that FileStream's buffering/BufferedStream are flawed. Please see the accepted answer and my own answer (with the solution) below.