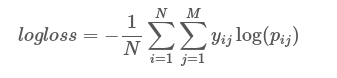

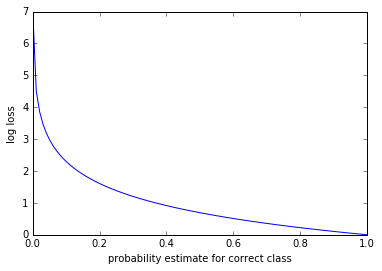

I built several models for binary classification of documents in the fraud domain and calculated the log loss for each of them. I thought log loss essentially measured the confidence of the predictions and that it should lie in the range [0, 1]. I believe it is an important measure in classification when the predicted class alone is not sufficient for evaluation. So if two models have quite close accuracy, recall, and precision but one has a lower log loss, that model should be selected, provided no other parameters or metrics (such as time or cost) enter the decision.
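To make the "confidence" reading concrete, here is a minimal sketch of binary log loss in plain Python. For labels y_i in {0, 1} and predicted positive-class probabilities p_i, it averages -[y_i ln(p_i) + (1 - y_i) ln(1 - p_i)] over the N samples. The function name `binary_log_loss`, the clipping constant `eps`, and the toy labels and probabilities are illustrative assumptions, not taken from my models.

```python
import math

def binary_log_loss(y_true, p_pred, eps=1e-15):
    """Mean negative log-likelihood for binary labels (0/1).

    p_pred holds the predicted probability of the positive class (label 1).
    Probabilities are clipped to [eps, 1 - eps] so log(0) is never taken;
    the eps value is an assumed choice for this sketch.
    """
    if len(y_true) != len(p_pred):
        raise ValueError("y_true and p_pred must have the same length")
    total = 0.0
    for y, p in zip(y_true, p_pred):
        p = min(max(p, eps), 1 - eps)
        # Per-sample penalty: -log of the probability assigned to the true class.
        total += -(y * math.log(p) + (1 - y) * math.log(1 - p))
    return total / len(y_true)

# Hypothetical toy data: true labels and predicted fraud probabilities.
y = [1, 0, 1, 0]
confident_and_right = [0.9, 0.1, 0.8, 0.2]
one_confident_miss = [0.9, 0.1, 0.8, 0.99]  # last document predicted 0.99 but is label 0

print(round(binary_log_loss(y, confident_and_right), 4))
print(round(binary_log_loss(y, one_confident_miss), 4))
```

In the second case the single confident miss contributes -ln(0.01), about 4.6, on its own, which is enough to push the mean above 1.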

The log loss for the decision tree is 1.57, while for all the other models it is between 0 and 1. How should I interpret this score?