This is very specific, but I will try to be brief:

We are running a Django app on Heroku as three separate apps:

- test (1 web, 1 celery dyno)
- training (1 web, 1 celery dyno)
- prod (2 web, 1 celery dyno)

We are using Gunicorn with gevent workers, 4 workers per dyno.
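For reference, a Gunicorn config expressing that setup might look like the sketch below. It is a reconstruction under assumptions, not our actual file: the file name `gunicorn.conf.py`, the `PORT` fallback of 8000 and the explicit `worker_connections` value (Gunicorn's default) are illustrative.

```python
# gunicorn.conf.py: a sketch of "gevent workers, 4 per dyno".
# File name and PORT fallback are assumptions for illustration.
import os

# Heroku tells the web process which port to bind via the PORT env var.
bind = "0.0.0.0:" + os.environ.get("PORT", "8000")

# Four worker processes per dyno.
workers = 4

# gevent's cooperative worker class (requires the gevent package).
worker_class = "gevent"

# Max simultaneous client connections per gevent worker (Gunicorn's default).
worker_connections = 1000
```

It would be loaded with something like `gunicorn myproject.wsgi -c gunicorn.conf.py` in the Procfile, where `myproject` is a placeholder for the Django project package.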

We are experiencing sporadic high service times. Here is an example from Logentries:

High Response Time:

`heroku router - - at=info method=GET path="/accounts/login/" dyno=web.1 connect=1ms service=6880ms status=200 bytes=3562`
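In that line `connect=1ms` but `service=6880ms`, so the router appears to have reached the dyno almost immediately and the delay came afterwards. To track these alerts by dyno and path outside Logentries, the key=value fields can be parsed with the standard library. A minimal sketch; the function names and the 5,000 ms threshold are hypothetical choices, not a Heroku API.

```python
import shlex

# Hypothetical alert threshold in milliseconds (the low end of what we see).
SLOW_MS = 5000


def parse_router_line(line):
    """Return the key=value fields of a Heroku router log line as a dict."""
    fields = {}
    # shlex.split honours the double quotes around values such as path="...".
    for token in shlex.split(line):
        key, sep, value = token.partition("=")
        if sep:  # skip tokens without "=", e.g. "heroku", "router", "-"
            fields[key] = value
    return fields


def timing_ms(fields, key):
    """Convert a timing field such as '6880ms' into an int of milliseconds."""
    value = fields[key]
    if value.endswith("ms"):
        value = value[:-2]
    return int(value)


def is_slow(line, threshold_ms=SLOW_MS):
    """True if the request's service time meets or exceeds threshold_ms."""
    return timing_ms(parse_router_line(line), "service") >= threshold_ms


line = ('heroku router - - at=info method=GET path="/accounts/login/" '
        'dyno=web.1 connect=1ms service=6880ms status=200 bytes=3562')
fields = parse_router_line(line)
print(fields["dyno"], timing_ms(fields, "connect"), timing_ms(fields, "service"), is_slow(line))
# prints: web.1 1 6880 True
```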

I have been Googling this for weeks now. We are unable to reproduce at will but experience these alerts 0 to 5 times a day. Notable points:

- Occurs on all three apps (all running similar code)

- Occurs on different pages, including simple ones such as the 404 page and /admin

- Occurs at random times

- Occurs with varying throughput. One of our apps only sees about 3 users a day. It is not related to sleeping dynos, because we ping the apps with New Relic and the issue can occur mid-session

- Unable to reproduce at will. I have experienced the issue myself once: clicking a page that normally executes in 500 ms hung for 30 seconds and ended in Heroku's application error page when the router's 30 s timeout was reached

- High response times range from 5,000 ms to 30,000 ms.

- New Relic does not point to a specific issue. Here are the past few transactions and times:

  - RegexURLResolver.resolve: 4,270 ms
  - SessionMiddleware.process_request: 2,750 ms
  - Render login.html: 1,230 ms
  - WSGIHandler: 1,390 ms
  - The above are simple calls and do not normally take anywhere near that long
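Since New Relic only shows which segment was slow, not what the process was doing at the time, one option is to have each worker dump its Python stack when a request runs long. Below is a sketch of a WSGI wrapper built on the standard library's `faulthandler.dump_traceback_later`; the class name and thresholds are hypothetical. It assumes Gunicorn sync workers (one request per process at a time), because `faulthandler` keeps only one pending dump timer per process.

```python
import faulthandler
import logging
import sys
import time

logger = logging.getLogger("slow_requests")


class SlowRequestMiddleware:
    """Hypothetical WSGI wrapper: logs slow requests and dumps all thread
    stacks if a request is still running after `dump_after` seconds.

    Assumes one request per process at a time (e.g. Gunicorn sync workers).
    """

    def __init__(self, app, warn_after=5.0, dump_after=10.0, dump_file=sys.stderr):
        self.app = app
        self.warn_after = warn_after
        self.dump_after = dump_after
        self.dump_file = dump_file  # must have a real fileno()

    def __call__(self, environ, start_response):
        start = time.monotonic()
        # Arm a one-shot timer: if it fires, every thread's current stack
        # is written to dump_file while the request is still in progress.
        faulthandler.dump_traceback_later(self.dump_after, repeat=False,
                                          file=self.dump_file)
        try:
            return self.app(environ, start_response)
        finally:
            faulthandler.cancel_dump_traceback_later()
            # Measures only the app call, not streaming of the response body.
            elapsed = time.monotonic() - start
            if elapsed >= self.warn_after:
                logger.warning("slow request: %s %s took %.0f ms",
                               environ.get("REQUEST_METHOD"),
                               environ.get("PATH_INFO"), elapsed * 1000)
```

In a Django project it could wrap the application in `wsgi.py`, e.g. `application = SlowRequestMiddleware(get_wsgi_application())`. With gevent workers the single per-process timer would be shared by concurrent requests, which is why the sketch is limited to sync workers.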

What I have narrowed it down to:

- This article on Gunicorn and slow clients: I have seen this issue happen with slow clients, but also at our office, where we have a fiber connection.

- Gevent and async workers not playing nicely: we switched to Gunicorn sync workers and the problem still persists.

- Gunicorn worker timeout

  - It's possible that workers are somehow being kept alive in a null state.

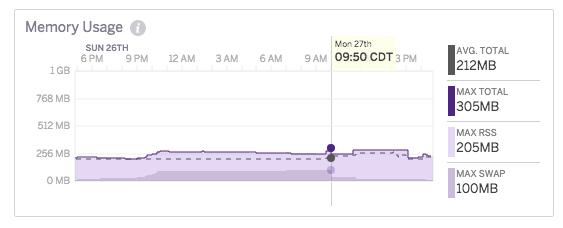

- Insufficient workers / dynos: there is no indication of CPU, memory, or database overutilization, and New Relic doesn't show any DB latency.

- Noisy neighbors

  - In my several emails with Heroku, the support rep attributed at least one of my long requests to a noisy neighbor, but was not convinced that noisy neighbors were the underlying issue.

- Subdomain 301: the requests come through fine but get stuck randomly in the application.

- Dynos restarting: if this were the case, many users would be affected. Also, I can see that our dynos have not restarted recently.

- Heroku routing / service issue

  - It is possible that the Heroku service is less than advertised and this is simply a downside of using it.
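For the "Gunicorn worker timeout" item, one way to confirm or rule it out is to set Gunicorn's `timeout` below Heroku's 30 s router limit and log where a worker was stuck when it is killed. Gunicorn calls the `worker_abort` server hook inside a worker that receives SIGABRT, which the arbiter generally sends when a worker exceeds `timeout`. The sketch below assumes sync workers; the 25 s value and the helper name `format_all_stacks` are hypothetical.

```python
# gunicorn.conf.py (sketch): sync workers, a timeout below Heroku's 30 s
# router limit, and a hook that logs where a timed-out worker was stuck.
import sys
import threading
import traceback

worker_class = "sync"
workers = 4
# Hypothetical value: short enough that Gunicorn kills a hung worker
# before Heroku's router returns its own 30 s timeout error.
timeout = 25


def format_all_stacks():
    """Return the current Python stack of every thread in this process."""
    names = {t.ident: t.name for t in threading.enumerate()}
    chunks = []
    for ident, frame in sys._current_frames().items():
        chunks.append(f"# Thread {names.get(ident, '?')} ({ident})")
        chunks.append("".join(traceback.format_stack(frame)))
    return "\n".join(chunks)


def worker_abort(worker):
    # Server hook: Gunicorn calls this inside a worker that received
    # SIGABRT, which the arbiter sends when the worker exceeds `timeout`.
    worker.log.warning("worker %s aborted (timeout?); stacks:\n%s",
                       worker.pid, format_all_stacks())
```

If these log entries line up with the slow router lines, the stack shows what the worker was blocked on; if they never appear, a hung worker is less likely to be the cause.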

We have been having this issue for the past few months, but now that we are scaling, it needs to be fixed. Any ideas would be much appreciated, as I have exhausted nearly every SO and Google result.