I would like to copy a very large storage container from one Azure storage account into another (which also happens to be in another subscription).

I would like an opinion on the following options:

Write a tool that connects to both storage accounts and copies blobs one at a time using CloudBlob's DownloadToStream() and UploadFromStream(). This seems to be the worst option: it incurs data-transfer costs, and it is slow because every blob has to come down to the machine running the tool and then be uploaded back to Azure.
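To make the cost argument concrete, here is a rough Python model of that data flow. It is a sketch under stated assumptions: the two storage accounts are replaced by hypothetical in-memory dicts (blob name to bytes), and `copy_via_client` is a made-up helper, not an SDK call. It only shows that every byte is downloaded to the client and then uploaded again, which is where the bandwidth charges and the latency come from.

```python
import io
from typing import Dict, Tuple


def copy_via_client(source: Dict[str, bytes], dest: Dict[str, bytes]) -> Tuple[int, int]:
    """Copy blobs one at a time through a local buffer (hypothetical model).

    `source` and `dest` stand in for the two storage accounts; a real tool
    would use the storage SDK instead. Returns (bytes_downloaded, bytes_uploaded).
    """
    downloaded = uploaded = 0
    for name, data in source.items():
        buffer = io.BytesIO()
        buffer.write(data)          # models DownloadToStream(): data comes down to the client
        downloaded += buffer.tell()
        buffer.seek(0)
        payload = buffer.read()     # models UploadFromStream(): the same bytes go back up
        dest[name] = payload
        uploaded += len(payload)
    return downloaded, uploaded
```

For two blobs of 10 and 5 bytes the function reports 15 bytes down and 15 bytes up: the whole container crosses the client's network link twice.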

Write a worker role to do the same. This should theoretically be faster and not incur any bandwidth cost, as long as the worker role and both storage accounts are in the same data center. However, it is more work.

Upload the tool to an already running instance, bypassing the worker role deployment, and pray that it finishes before the instance gets recycled or reset.

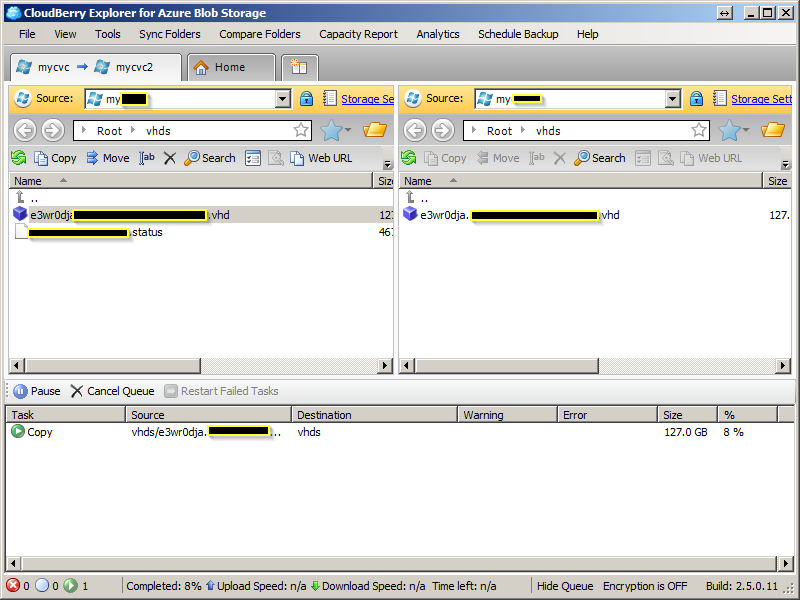

Use an existing tool. I have not found anything suitable so far.

Any suggestions on the approach?

Update: I just found out that server-side copying between storage accounts (the Copy Blob operation) has finally been introduced, currently through the REST API only, for all storage accounts created on July 7th, 2012 or later:

http://msdn.microsoft.com/en-us/library/windowsazure/dd894037.aspx
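A minimal Python sketch of what such a request looks like, built with the standard library but not sent. Assumptions, all mine rather than the linked page's exact example: the destination URL already carries a SAS token with write permission (otherwise a Shared Key `Authorization` header would be required, which is omitted here), the source blob is public or its URL carries a read SAS (a cross-account copy source must be readable by the service), and the helper names `build_copy_blob_request` and `copy_finished` are hypothetical. The key point is the `x-ms-copy-source` header: the storage service pulls the data itself, so nothing streams through the client.

```python
import urllib.request

# 2012-02-12 is the REST version that introduced asynchronous cross-account Copy Blob.
API_VERSION = "2012-02-12"


def build_copy_blob_request(dest_url_with_sas: str, source_url_with_sas: str) -> urllib.request.Request:
    """Build (but do not send) a Copy Blob request for one blob.

    Assumes the destination URL has a SAS with write permission and the
    source URL is public or has a SAS with read permission.
    """
    return urllib.request.Request(
        dest_url_with_sas,
        data=b"",  # Copy Blob has no request body
        method="PUT",
        headers={
            "x-ms-version": API_VERSION,
            # The service fetches the source blob from this URL on its own.
            "x-ms-copy-source": source_url_with_sas,
        },
    )


def copy_finished(copy_status: str) -> bool:
    """Interpret the x-ms-copy-status value returned by Get Blob Properties."""
    if copy_status == "pending":
        return False
    if copy_status == "success":
        return True
    # "aborted" or "failed": the copy will not complete on its own.
    raise RuntimeError(f"copy ended with status {copy_status!r}")
```

Sending the request with `urllib.request.urlopen(req)` should return `202 Accepted` once the copy is scheduled; the copy then runs asynchronously, so the destination blob's properties (HEAD request) have to be polled until `x-ms-copy-status` leaves `pending`. Copying a whole container would mean listing the source blobs and issuing one such request per blob into an existing destination container.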