I want to know if there's a way to use iOS speech recognition in offline mode. I didn't see anything about it in the documentation (https://developer.apple.com/reference/speech).

I am afraid that there is no way to do it (however, please make sure to check the update at the end of the answer).

As mentioned in the official Speech framework documentation:

Best Practices for a Great User Experience:

Be prepared to handle the failures that can be caused by reaching speech recognition limits. Because speech recognition is a network-based service, limits are enforced so that the service can remain freely available to all apps.

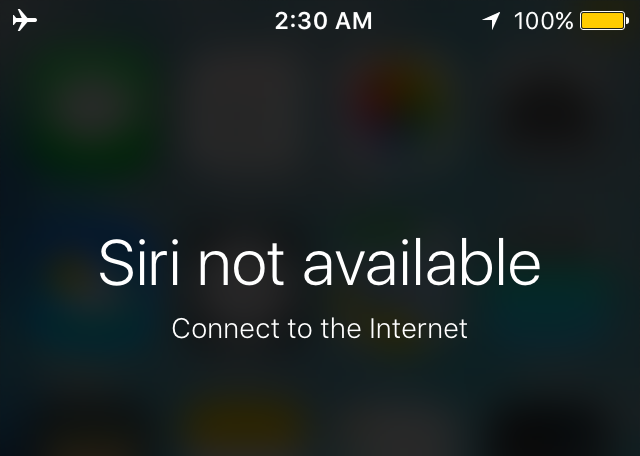

From an end user's perspective, trying to get Siri's help without a network connection displays a screen similar to:

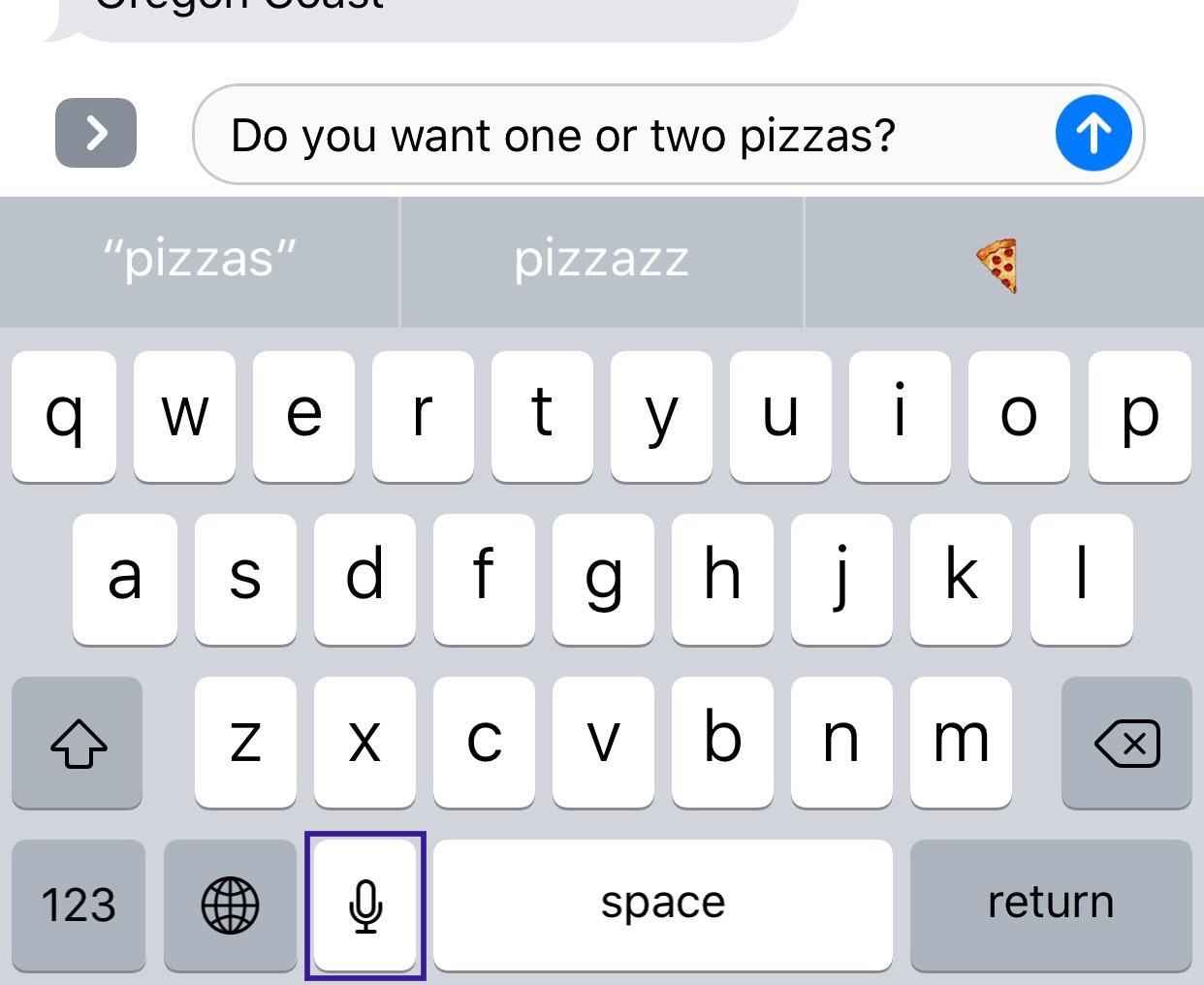

Also, when trying to send a message, for example, you'll notice that the microphone button is disabled if the device is not connected to a network.

iOS itself won't enable this feature natively until it has checked for a network connection, so I assume the same applies to third-party developers using the Speech framework.

UPDATE:

After watching the Speech Recognition API session (especially the part from 03:00 to 03:25), I came up with:

The Speech Recognition API usually requires an internet connection, but some newer devices support this feature all the time; you might want to check whether the recognizer for the given language is available or not.

Adapted from SFSpeechRecognizer Documentation:

Note that a supported speech recognizer is not the same as an available speech recognizer; for example, the recognizers for some locales may require an Internet connection. You can use the supportedLocales() method to get a list of supported locales and the isAvailable property to find out if the recognizer for a specific locale is available.
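The distinction between "supported" and "available" can be sketched in a few lines of Swift. This is only an illustration: the helper name describeRecognizer(for:) and the returned strings are made up for this example, and it relies on SFSpeechRecognizer(locale:) returning nil for an unsupported locale.

```swift
import Speech

/// Hypothetical helper (not part of the Speech framework): reports whether
/// speech recognition for `locale` is unsupported, supported but currently
/// unavailable, or ready to use.
func describeRecognizer(for locale: Locale) -> String {
    // The failable initializer returns nil when the locale is not supported.
    guard let recognizer = SFSpeechRecognizer(locale: locale) else {
        return "unsupported"
    }
    // A supported recognizer may still be unavailable right now,
    // for example when it needs a network connection and there is none.
    return recognizer.isAvailable ? "available" : "supported but unavailable"
}

print(describeRecognizer(for: Locale(identifier: "en-US")))
```

Availability can change while the app is running, so in a real app you would probably also observe changes through SFSpeechRecognizerDelegate rather than check only once.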

Offline transcription is available starting in iOS 13. You enable it by setting requiresOnDeviceRecognition to true on the recognition request.

Example code (Swift 5):

```swift
// Create and configure the speech recognition request.
recognitionRequest = SFSpeechAudioBufferRecognitionRequest()
guard let recognitionRequest = recognitionRequest else { fatalError("Unable to create a SFSpeechAudioBufferRecognitionRequest object") }
recognitionRequest.shouldReportPartialResults = true

// Keep speech recognition data on device
if #available(iOS 13, *) {
    recognitionRequest.requiresOnDeviceRecognition = true
}
```
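Not every device or locale supports on-device recognition, so it may be worth checking the recognizer's supportsOnDeviceRecognition property before requiring it. The following is a minimal sketch under that assumption; makeRequest(for:) is a hypothetical helper name, not something from the answer above or from Apple's sample code.

```swift
import Speech

/// Hypothetical helper: builds a buffer-based request and requires on-device
/// recognition only when the given recognizer reports support for it.
func makeRequest(for recognizer: SFSpeechRecognizer) -> SFSpeechAudioBufferRecognitionRequest {
    let request = SFSpeechAudioBufferRecognitionRequest()
    request.shouldReportPartialResults = true

    // Both properties were introduced in iOS 13.
    if #available(iOS 13, *), recognizer.supportsOnDeviceRecognition {
        // Keep speech recognition data on device.
        request.requiresOnDeviceRecognition = true
    }
    return request
}
```

With this approach the request falls back to the default behaviour (which may use the network) when on-device recognition is not supported, instead of failing.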
