A Google search reveals plenty about generating all possible partitions of an integer n into m parts, but I haven't found anything about sampling a uniformly distributed random partition of n into m parts.

Here is some code that does it. This is O(n^2) the first time you call it, but it builds a cache so that subsequent calls are O(n).

```python
import random

# cache[n, limit] = number of partitions of n whose parts are all <= limit
cache = {}

def count_partitions(n, limit):
    if n == 0:
        return 1
    if (n, limit) in cache:
        return cache[n, limit]
    # Pick the largest part k; the rest is a partition of n-k with parts <= k.
    x = cache[n, limit] = sum(count_partitions(n-k, k) for k in range(1, min(limit, n) + 1))
    return x

def random_partition(n):
    a = []
    limit = n
    total = count_partitions(n, limit)
    which = random.randrange(total)
    # Walk down the table to build partition number `which`.
    while n:
        for k in range(1, min(limit, n) + 1):
            count = count_partitions(n-k, k)
            if which < count:
                break
            which -= count
        a.append(k)
        limit = k
        n -= k
    return a
```

How this works: We can calculate how many partitions of an integer n there are in O(n^2) time. As a side effect, this produces a table of size O(n^2) which we can then use to generate the kth partition of n, for any integer k, in O(n) time.

So let total = the number of partitions. Pick a random number k from 0 to total - 1. Generate the kth partition.

The title of this post is a bit misleading. A random integer partition is by default unrestricted, meaning it can have any number of parts of any size. The specific question asked is about partitions of n into m parts, which is a type of restricted integer partition.

For generating unrestricted integer partitions, a very fast and simple algorithm is due to Fristedt, in a paper called The Structure of Random Partitions of Large Integers (1993). The algorithm is as follows:

1. Set x = exp(-pi/sqrt(6n)).

2. Generate independent random variables Z(1), Z(2), ..., Z(n), where Z(i) is geometrically distributed with parameter 1-x^i (counting failures, so Z(i) takes the values 0, 1, 2, ...).

3. If sum i*Z(i) = n, where the sum is taken over all i=1,2,...,n, then STOP. Otherwise, repeat step 2.

Once the algorithm stops, Z(1) is the number of 1s, Z(2) is the number of 2s, etc., in a partition chosen uniformly at random. The probability of accepting a randomly chosen set of Z's is asymptotically 1/(96n^3)^(1/4), which means one would expect to run this algorithm O(n^(3/4)) times before accepting a single sample.
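The three steps can be sketched in Python as below. This is a minimal sketch, not code from Fristedt's paper: the function name `fristedt_partition`, the `rng` parameter, and the dictionary return format are my own choices, and the geometric variables are drawn by inverting the CDF (so Z(i) starts at 0). Stopping a trial early once the running sum exceeds n only discards trials that would have been rejected anyway.

```python
import math
import random

def fristedt_partition(n, rng=random):
    """Rejection sampler for a uniform random partition of n (n >= 1).

    Returns a dict {part size: multiplicity}. Hypothetical helper name.
    """
    log_x = -math.pi / math.sqrt(6 * n)      # log of x = exp(-pi/sqrt(6n))
    while True:
        counts = {}
        total = 0
        for i in range(1, n + 1):
            u = 1.0 - rng.random()            # uniform on (0, 1]
            # Inverse-CDF draw with P(Z >= k) = x^(i*k), k = 0, 1, 2, ...
            z = int(math.log(u) / (i * log_x))
            if z:
                counts[i] = z
                total += i * z
                if total > n:                 # this trial can no longer hit n
                    break
        if total == n:
            return counts

# Example: expand the multiplicities into a list of parts, largest first.
counts = fristedt_partition(20)
parts = sorted((i for i, z in counts.items() for _ in range(z)), reverse=True)
```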

The reason I took the time to explain this algorithm is that it applies directly to the problem of generating a partition of n into exactly m parts. First, observe that

The number of partitions of n into exactly m parts is equal to the number of partitions of n with largest part equal to m.

Then we may apply Fristedt's algorithm directly, but instead of generating Z(1), Z(2), ..., Z(n), we can generate Z(1), Z(2), ..., Z(m-1), Z(m)+1 (the +1 here ensures that the largest part is exactly m, and 1+Z(m) is equal in distribution to Z(m) conditional on Z(m)>=1) and set all other Z(m+1), Z(m+2), ... equal to 0. Then once we obtain the target sum in step 3 we are also guaranteed to have an unbiased sample. To obtain a partition of n into exactly m parts simply take the conjugate of the partition generated.
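A sketch of this restricted variant in Python, under the same assumptions as the unrestricted case (inverse-CDF geometric draws, and the value of x from Step 1, which is valid here but not tuned for a given m). The name `random_partition_exact_parts` is hypothetical. The conjugate is read off directly: its j-th part is the number of parts of the sampled partition that are at least j.

```python
import math
import random

def random_partition_exact_parts(n, m, rng=random):
    """Uniform random partition of n into exactly m parts (1 <= m <= n)."""
    if not 1 <= m <= n:
        raise ValueError("need 1 <= m <= n")
    log_x = -math.pi / math.sqrt(6 * n)       # any x in (0, 1) stays unbiased
    while True:
        z = [0] * (m + 1)                     # z[i] = number of parts equal to i
        total = 0
        for i in range(1, m + 1):
            u = 1.0 - rng.random()            # uniform on (0, 1]
            z[i] = int(math.log(u) / (i * log_x))
            if i == m:
                z[i] += 1                     # force the largest part to be m
            total += i * z[i]
        if total == n:
            break
    # Conjugate: part j of the result counts the sampled parts that are >= j.
    parts = []
    running = 0
    for j in range(m, 0, -1):
        running += z[j]
        parts.append(running)
    parts.reverse()                           # largest part first
    return parts

print(random_partition_exact_parts(24, 5))
```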

The advantage this has over the recursive method of Nijenhuis and Wilf is that there are no memory requirements other than to store the random variables Z(1), Z(2), etc. Also, the value of x can be anything between 0 and 1 and this algorithm is still unbiased! Choosing a good value of x, however, can make the algorithm much faster, though the choice in Step 1 is nearly optimal for unrestricted integer partitions.

If n is really huge and Fristedt's algorithm takes too long (and table methods are out of the question), then there are other options, but they are a little more complicated; see my thesis https://sites.google.com/site/stephendesalvo/home/papers for more info on probabilistic divide-and-conquer and its applications.

Another algorithm from Combinatorial Algorithms page 52, "Random Generation of n into k parts"

- Choose a_1, a_2, ..., a_{k-1}, a random (k-1)-subset of {1, 2, ..., n+k-1} (see 1. and 2. below).

- Set r_1 = a_1 - 1; r_j = a_j - a_{j-1} - 1 (j = 2..k-1); r_k = n + k - 1 - a_{k-1}.

- The r_j (j = 1..k) constitute the random partition of n into k parts.

This algorithm for random compositions is based on the "balls-in-cells" model.

Briefly, we choose the positions of the cell boundaries at random, then by differencing we find out how many balls are in each cell.
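The balls-in-cells steps can be sketched in a few lines of Python. This is an illustration only: the function name `random_composition` is mine, and `random.sample` stands in for whichever subset routine the book uses. Note that the result is an ordered composition whose parts may be zero, and sorting it does not give a uniform distribution over unordered partitions.

```python
import random

def random_composition(n, k, rng=random):
    """Uniform random composition of n into k non-negative parts."""
    if k < 1 or n < 0:
        raise ValueError("need k >= 1 and n >= 0")
    # a_1 < a_2 < ... < a_{k-1}: a random (k-1)-subset of {1, ..., n+k-1}
    a = sorted(rng.sample(range(1, n + k), k - 1))
    # Sentinels 0 and n+k turn all three formulas for r_j into one difference.
    bounds = [0] + a + [n + k]
    return [bounds[j + 1] - bounds[j] - 1 for j in range(k)]

print(random_composition(24, 5))
```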

For efficiently generating a random subset of a set, see a 1. related answer here and 2. here

update

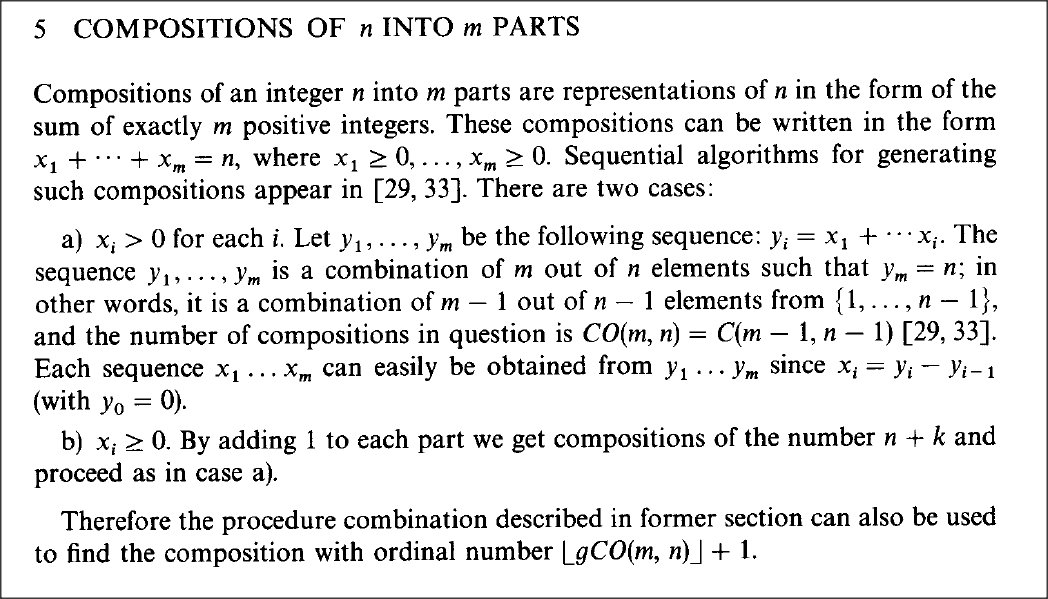

Another approach using a single random number in [0,1] to uniformly generate a random partition (also called composition) is given in IVAN STOJMENOVIC, "ON RANDOM AND ADAPTIVE PARALLEL GENERATION OF COMBINATORIAL OBJECTS" (section 5, section 10)

Just one more version in c#.

```csharp
using System;
using System.Collections.Generic;
using System.Linq;
using System.Text;

namespace ConsoleApplication6
{
    class Program
    {
        static Random random = new Random();

        static void Main(string[] args)
        {
            PrintPartition(GetUniformPartition(24, 5));
            PrintPartition(GetUniformPartition(24, 5));
            PrintPartition(GetUniformPartition(24, 5));
            PrintPartition(GetUniformPartition(24, 5));
            PrintPartition(GetUniformPartition(24, 5));
            Console.ReadKey();
        }

        static int[] GetUniformPartition(int input, int parts)
        {
            if (input <= 0 || parts <= 0)
                throw new ArgumentException("invalid input or parts");
            if (input < MinUniformPartition(parts))
                throw new ArgumentException("input is too small");

            int[] partition = new int[parts];
            int sum = 0;
            for (int i = 0; i < parts - 1; i++)
            {
                // Leave room for the parts that are still to be drawn.
                int max = input - MinUniformPartition(parts - i - 1) - sum;
                // Random.Next(min, max) excludes max.
                partition[i] = random.Next(parts - i, max);
                sum += partition[i];
            }
            partition[parts - 1] = input - sum; // last
            return partition;
        }

        // Room reserved for n parts. Note that this returns n*n - 1,
        // not the sum 1+2+...+n (which would be n*(n+1)/2).
        static int MinUniformPartition(int n)
        {
            return n * n - 1;
        }

        static void PrintPartition(int[] p)
        {
            for (int i = 0; i < p.Length; i++)
            {
                Console.Write("{0},", p[i]);
            }
            Console.WriteLine();
        }
    }
}
```

This code produces output like the following (the values are random, so each run differs):

5,8,7,2,2,

6,6,7,2,3,

5,7,6,2,4,

6,4,3,2,9,

7,8,4,4,1,

I have an evenly distributed partition generator.

Where n := the integer to be partitioned, r := the number of slices: the algorithm is a patched version of the naive method of simply inserting partings at random. The problem with the naive method, as it appeared to me when I looked at its output, was that scenarios where partings are placed in the same spot are less likely to occur. There is only one way to get {1,1,1}, while there are 3! ways of getting {2,4,9}: any of {4,2,9}, {2,4,9}, {9,4,2}... will lead to the same partition placement when sorted. This has been amended by providing additional explicit opportunities for repeats. For each parting insertion, there's a chance that the position of the parting won't be random, but will be selected as a repeat of a formerly selected value. This balances the uneven probability distribution of the naive method right out.

I have proved by exhaustion that each partitioning is perfectly equally likely for r = 3, n = 2. I haven't bothered proving it for higher values, but halfhearted ventures to do so found only promising signs. I also tested it on random input, finding that it is at least roughly even for every value I tried (but probably perfectly even).
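The proof by exhaustion is easy to repeat for other small cases. The sketch below assumes each draw is exactly uniform on its range (so it ignores any modulo bias from `rand()`), enumerates every equally likely sequence of draws, and tallies the sorted result. The function name `parting_distribution` is mine. If every tally is equal, the scheme is uniform for that case.

```python
from collections import Counter
from itertools import product

def parting_distribution(nparts, bandw):
    """Count how many draw sequences lead to each sorted list of partings."""
    # Draw j is uniform on [0, bandw + j): bandw fresh positions plus
    # one "repeat" option for each of the j partings chosen so far.
    ranges = [range(bandw + j) for j in range(nparts - 1)]
    tally = Counter()
    for draws in product(*ranges):
        out = []
        for j, gen in enumerate(draws):
            if gen < bandw:
                out.append(gen)                        # fresh position
            else:
                out.append(out[j - (gen - bandw + 1)])  # repeat an earlier one
        tally[tuple(sorted(out))] += 1
    return tally

# The r = 3, n = 2 case: every outcome is reached by the same number of sequences.
print(parting_distribution(3, 2))
```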

Here it is in C++11. (The output format is different to what you're expecting: it's the positions of the partings rather than the size of the space between them. The conversion is easy, though.)

```cpp
#include <vector>
#include <algorithm>
#include <functional>
#include <cstdlib>
#include <cassert>

// nparts is the number of parts, that is, one greater than the number of
// dividers listed in the output vector. bandw is the integer being partitioned.
template <typename Parting, typename Seed>
std::vector<Parting> partitionGen(unsigned nparts, unsigned bandw, Seed seed){
    assert(nparts > 0);
    std::vector<Parting> out(nparts-1);
    std::srand(seed);
    unsigned genRange = bandw;
    for(auto i=out.begin(); i<out.end(); ++i, ++genRange){
        unsigned gen = std::rand()%genRange;
        // gen < bandw: a fresh random position; otherwise repeat one of
        // the positions already chosen.
        *i = ((gen<bandw)?
            gen:
            *(i-(gen-bandw+1)));
    }
    std::sort(out.begin(), out.end(), std::less<Parting>());
    return out;
}
```

I don't like the fact that I have to sort it though. If Vlody's version has an even distribution, it appears that it'd be better.

After some googling I found an algorithm for this in the "Handbook of Applied Algorithms," which Google Books has indexed. The algorithm is given in section 1.12.2, on page 31.

I have implemented the above solution and found that it works very well if one wants to calculate integer partitions for n but not with respect to m. If working with large n, recursion limits and call stacks may need to be increased a lot.

However, you don't need the first function, because count_partitions(n, limit) actually equals the number of partitions of 'n+limit' with 'limit' number of parts. Some mathematical software packages have very fast functions for finding the number of partitions of n into m parts.

I have recently derived a definitely unbiased, very simple, and very fast method (using memoization) to solve your exact question: An algorithm for randomly generating integer partitions of a particular length, in Python?

It's based on knowing something about lexically ordered partitions of n having m parts and uses a similar approach to well-accepted algorithms (e.g. Nijenhuis and Wilf 1978) that find random partitions of n, and is conceptually similar to the above.

In short, if there are x partitions of n with m parts, then we choose a random number between 1 and x. That random number will code for one and only one partition satisfying n and m. I hope this helps.
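To make the idea concrete, here is a minimal sketch of rank-based sampling. It is not the code from the linked answer. It assumes the standard recurrence p(n, m) = p(n-1, m-1) + p(n-m, m): a partition either contains a part equal to 1, or every part can be reduced by 1. The function names are hypothetical, ranks run from 0 to x-1, and for large n the recursion limit may need raising.

```python
import random
from functools import lru_cache

@lru_cache(maxsize=None)
def count_exact(n, m):
    """Number of partitions of n into exactly m positive parts."""
    if n == 0 and m == 0:
        return 1
    if n <= 0 or m <= 0 or m > n:
        return 0
    return count_exact(n - 1, m - 1) + count_exact(n - m, m)

def partition_from_rank(n, m, rank):
    """Decode rank (0 <= rank < count_exact(n, m)) into one partition."""
    parts = []
    offset = 0                        # amount already peeled off every remaining part
    while m > 0:
        with_one = count_exact(n - 1, m - 1)
        if rank < with_one:           # smallest remaining part is 1 (plus offset)
            parts.append(1 + offset)
            n -= 1
            m -= 1
        else:                         # all remaining parts >= 2: peel 1 off each
            rank -= with_one
            offset += 1
            n -= m
    parts.reverse()                   # largest part first
    return parts

def random_partition_into_parts(n, m, rng=random):
    total = count_exact(n, m)
    if total == 0:
        raise ValueError("no partition of n into m parts")
    return partition_from_rank(n, m, rng.randrange(total))

print(random_partition_into_parts(24, 5))
```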
