Beyond the simple cases of the InterlockedXxx functions, it seems like

the prevailing pattern with all of these is that they implement their

own locks.

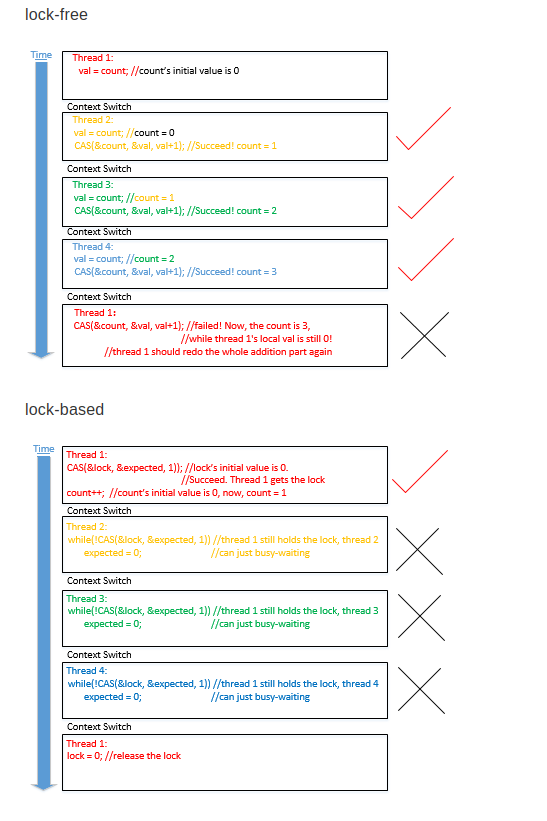

None of the answers here really seem to get to the heart of the difference between a "lock-free" CAS loop and a mutex or spin-lock.

The important difference is that lock-free algorithms are guaranteed to make progress on their own - without the assistance of other threads. With a lock or spin lock, any poor thread that can't acquire a lock is entirely at the mercy of the thread that owns the lock. The poor thread that can't acquire the lock can do nothing except wait (either via a busy wait or an OS-assisted sleep).

With lock-free algorithms that loop on a CAS, each thread is guaranteed to make progress regardless of what other contending threads are doing. Each thread is, essentially, in control of its own fate. Yes, it still may have to loop many times, but the number of times it loops is limited by the number of contending threads. It cannot infinitely loop, for the most part. (In practice, it's possible for live lock to occur due to, e.g. an LL/SC loop that keeps failing due to false sharing) - but again measures can be taken by the thread itself to deal with this - it is not at the mercy of another thread holding a lock.

As for performance, it depends. I've seen flagrant examples of lock-free algorithms being totally out-performed by their locking counterparts, even under high-thread contention. On an x86-64 machine running Debian 7, I compared the performance between the C++ Boost.Lockfree queue (based on the Michael/Scott algorithm) and a plain old std::queue surround by an std::mutex. Under high thread contention, the lockfree version was almost twice as slow.

So why is that? Well, the performance of lockfree algorithms ultimately comes down to the implementation details. How does the algorithm avoid ABA? How does it accomplish safe memory reclamation? There are so many variants... tagged pointers, epoch based reclamation, RCU/quiescent state, hazard pointers, general process-wide garbage collection, etc. All these strategies have performance implications, and some also place restrictions on how your application in general can be designed. In general, reference-counting approaches (or tagged pointer approaches) tend to perform poorly, in my experience. But the alternatives can be much more complex to implement, and require a lot more memory-reclamation infrastructure based around thread-local storage or generalized garbage collection.