When using Templates, you need to declare a Request parameter in the endpoint that will return a template, as shown below:

```python
from fastapi import Request

@app.get('/')
def index(request: Request):
    return templates.TemplateResponse("index.html", {"request": request})
```

Below are two options (with complete code samples) for streaming live video using FastAPI and OpenCV. Option 1 demonstrates an approach based on your question, using the HTTP protocol and FastAPI/Starlette's `StreamingResponse`. Option 2 uses the WebSocket protocol, which can easily handle HD video streaming and is supported by FastAPI/Starlette (documentation can be found here and here).

I would also suggest having a look at this answer to better understand `async`/`await` in Python, and in FastAPI in particular, as well as when to define an endpoint in FastAPI with `async def` or normal `def`. For instance, the functions of the `cv2` library, such as `camera.read()` and `cv2.imencode()`, are synchronous blocking (either IO-bound or CPU-bound) functions, which means that, when called, they block the event loop until they complete. That is the reason both the `StreamingResponse`'s generator (i.e., the `gen_frames()` function) and the `video_feed` endpoint in Option 1 are defined with normal `def`: FastAPI runs requests to a normal `def` endpoint in an external threadpool and then awaits them (hence, FastAPI still works asynchronously).

It should be noted, though, that in the case of `StreamingResponse`, even if one defined the `video_feed` endpoint with `async def` and the `StreamingResponse`'s generator with normal `def` (as it contains blocking operations), FastAPI/Starlette would use `iterate_in_threadpool()` to run the generator in a separate thread that is then awaited, in order to prevent the event loop from getting blocked; see this answer for more details and the relevant source code. Hence, requests to that `async def` endpoint would not block the event loop because of a blocking `StreamingResponse` generator.

Note: Avoid defining the generator with `async def` if it contains blocking operations, as FastAPI/Starlette would then run it in the event loop instead of a separate thread. Only define it with `async def` when you are certain that there is no synchronous operation inside that would block the event loop, and/or when you need to await coroutines/async functions. In Option 2, the `get_stream` endpoint had to be defined with `async def`, as FastAPI/Starlette's WebSocket functions are async functions and need to be awaited.
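To illustrate the difference between a blocking `async def` generator and a normal generator that is iterated in a separate thread, here is a minimal, stdlib-only sketch (Python 3.9+). It does not use FastAPI: `iterate_in_thread()` is a hypothetical helper that only mimics the idea behind Starlette's `iterate_in_threadpool()` using `asyncio.to_thread()`, and `time.sleep()` stands in for the blocking `cv2` calls. A heartbeat task counts how often the event loop gets to run while the frames are being consumed.

```python
import asyncio
import threading
import time
from typing import AsyncIterator, Iterator, List, Tuple

_DONE = object()  # sentinel returned by next() once the iterator is exhausted


def blocking_frames(n: int) -> Iterator[str]:
    """Normal generator standing in for gen_frames(); time.sleep() simulates cv2 blocking."""
    for i in range(n):
        time.sleep(0.05)
        yield f"frame {i} from {threading.current_thread().name}"


async def blocking_async_frames(n: int) -> AsyncIterator[str]:
    """Anti-pattern: an async generator that blocks the event loop on every frame."""
    for i in range(n):
        time.sleep(0.05)  # never awaits, so no other task can run meanwhile
        yield f"frame {i} from {threading.current_thread().name}"


async def iterate_in_thread(iterator: Iterator[str]) -> AsyncIterator[str]:
    """Hypothetical helper: fetch each item of a sync iterator in a worker thread."""
    while True:
        item = await asyncio.to_thread(next, iterator, _DONE)
        if item is _DONE:
            break
        yield item


async def consume(frames: AsyncIterator[str]) -> Tuple[List[str], int]:
    """Consume all frames while a heartbeat task counts event-loop turns."""
    ticks = 0

    async def heartbeat() -> None:
        nonlocal ticks
        while True:
            ticks += 1
            await asyncio.sleep(0.01)

    hb = asyncio.create_task(heartbeat())
    items = [item async for item in frames]
    hb.cancel()
    try:
        await hb
    except asyncio.CancelledError:
        pass
    return items, ticks


if __name__ == "__main__":
    _, blocked = asyncio.run(consume(blocking_async_frames(3)))
    _, offloaded = asyncio.run(consume(iterate_in_thread(blocking_frames(3))))
    print("heartbeat ticks, async def generator:", blocked)
    print("heartbeat ticks, generator in thread:", offloaded)
```

With the blocking `async def` generator the heartbeat never gets a turn, and every frame is produced on `MainThread`; with the thread-offloaded generator the heartbeat keeps ticking while the frames are produced in worker threads.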
In this case, the blocking `cv2` functions will block the event loop every time they are called, until they complete (which may or may not matter, depending on how long those operations take and on the requirements of one's application; for instance, whether the application is expected to serve multiple requests/users at the same time). However, one could still run blocking operations, such as `cv2`'s functions, inside `async def` endpoints without blocking the event loop (e.g., by running them in a separate thread), as well as run multiple instances (workers/processes) of the application at the same time. Please take a look at the linked answer above for more details and solutions on this subject.
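As a rough illustration of running blocking calls from an `async def` handler without stalling the event loop, the stdlib-only sketch below mimics the Option 2 loop for several simulated clients. `grab_and_encode()` and the `send` callbacks are hypothetical stand-ins for `camera.read()`/`cv2.imencode()` and `websocket.send_bytes()`; the blocking work is offloaded with `asyncio.to_thread()` (Python 3.9+). Inside a FastAPI endpoint, `await run_in_threadpool(...)` from `fastapi.concurrency` serves the same purpose.

```python
import asyncio
import time
from typing import Awaitable, Callable, List, Tuple


def grab_and_encode() -> bytes:
    """Hypothetical stand-in for camera.read() + cv2.imencode(): blocks for 0.2 s."""
    time.sleep(0.2)
    return b"\xff\xd8fake-jpeg\xff\xd9"


async def stream(send: Callable[[bytes], Awaitable[None]], n_frames: int,
                 offload: bool) -> None:
    """Send n_frames frames, doing the blocking work inline or in a worker thread."""
    for _ in range(n_frames):
        if offload:
            data = await asyncio.to_thread(grab_and_encode)  # event loop stays free
        else:
            data = grab_and_encode()  # blocks the whole event loop
        await send(data)
        await asyncio.sleep(0.01)


async def serve_clients(n_clients: int, n_frames: int,
                        offload: bool) -> Tuple[float, List[int]]:
    """Run one stream per simulated client concurrently.

    Returns the elapsed seconds and the number of frames each client received.
    """
    received: List[List[bytes]] = [[] for _ in range(n_clients)]

    def make_sender(i: int) -> Callable[[bytes], Awaitable[None]]:
        async def send(data: bytes) -> None:
            received[i].append(data)
        return send

    start = time.perf_counter()
    await asyncio.gather(*(stream(make_sender(i), n_frames, offload)
                           for i in range(n_clients)))
    elapsed = time.perf_counter() - start
    return elapsed, [len(frames) for frames in received]


if __name__ == "__main__":
    inline, _ = asyncio.run(serve_clients(3, 2, offload=False))
    threaded, _ = asyncio.run(serve_clients(3, 2, offload=True))
    print(f"inline: {inline:.2f} s, threaded: {threaded:.2f} s")
```

With the blocking calls inline, the three clients are served one frame at a time (about 3 × 2 × 0.2 s); with `asyncio.to_thread()` their frames are produced concurrently, so the total time is close to that of a single client.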

Option 1 - Using HTTP Protocol

You can access the live stream at http://127.0.0.1:8000/.

app.py

```python
import cv2
import time
import uvicorn
from fastapi import FastAPI, Request
from fastapi.templating import Jinja2Templates
from fastapi.responses import StreamingResponse

app = FastAPI()
# cv2.CAP_DSHOW selects the DirectShow backend (Windows); omit it on other platforms
camera = cv2.VideoCapture(0, cv2.CAP_DSHOW)
templates = Jinja2Templates(directory="templates")


def gen_frames():
    # Normal (sync) generator: camera.read() and cv2.imencode() are blocking,
    # so Starlette iterates it in a threadpool rather than in the event loop
    while True:
        success, frame = camera.read()
        if not success:
            break
        else:
            ret, buffer = cv2.imencode('.jpg', frame)
            frame = buffer.tobytes()
            # Each chunk is one part of the multipart/x-mixed-replace response
            yield (b'--frame\r\n'
                   b'Content-Type: image/jpeg\r\n\r\n' + frame + b'\r\n')
            time.sleep(0.03)


@app.get('/')
def index(request: Request):
    return templates.TemplateResponse("index.html", {"request": request})


@app.get('/video_feed')
def video_feed():
    return StreamingResponse(gen_frames(), media_type='multipart/x-mixed-replace; boundary=frame')


if __name__ == '__main__':
    # Recent uvicorn versions no longer accept a debug argument
    uvicorn.run(app, host='127.0.0.1', port=8000)
```

templates/index.html

```html
<!DOCTYPE html>
<html>
    <body>
        <div class="container">
            <h3> Live Streaming </h3>
            <img src="{{ url_for('video_feed') }}" width="50%">
        </div>
    </body>
</html>
```
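Each chunk yielded by `gen_frames()` is one part of the `multipart/x-mixed-replace` body: the boundary line (`--frame`, matching `boundary=frame` in the `media_type`), a `Content-Type` header, a blank line, and the JPEG bytes; the browser replaces the displayed image with each new part. The sketch below builds such parts and splits them back apart. `make_part()` and `split_parts()` are hypothetical helpers, and the naive splitter assumes the boundary bytes never occur inside a JPEG payload, which a robust client could not assume.

```python
from typing import List

BOUNDARY = "frame"  # must match "boundary=frame" in the StreamingResponse media_type


def make_part(jpeg: bytes, boundary: str = BOUNDARY) -> bytes:
    """Build one multipart part exactly as gen_frames() yields it."""
    return (b"--" + boundary.encode() + b"\r\n"
            b"Content-Type: image/jpeg\r\n\r\n" + jpeg + b"\r\n")


def split_parts(body: bytes, boundary: str = BOUNDARY) -> List[bytes]:
    """Naively recover the JPEG payloads from a concatenated multipart body."""
    delimiter = b"--" + boundary.encode() + b"\r\n"
    payloads = []
    for part in body.split(delimiter):
        if not part:
            continue  # nothing precedes the first delimiter
        _headers, _, payload = part.partition(b"\r\n\r\n")
        # drop the trailing CRLF that make_part() appends after the payload
        payloads.append(payload[:-2] if payload.endswith(b"\r\n") else payload)
    return payloads


if __name__ == "__main__":
    frames = [b"\xff\xd8first\xff\xd9", b"\xff\xd8second\xff\xd9"]
    body = b"".join(make_part(f) for f in frames)
    print(split_parts(body) == frames)  # True
```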

Option 2 - Using WebSocket Protocol

You can access the live stream at http://127.0.0.1:8000/. Related answers using the WebSocket protocol can be found here, as well as here and here.

app.py

```python
from fastapi import FastAPI, Request, WebSocket, WebSocketDisconnect
from websockets.exceptions import ConnectionClosed
from fastapi.templating import Jinja2Templates
import uvicorn
import asyncio
import cv2

app = FastAPI()
camera = cv2.VideoCapture(0, cv2.CAP_DSHOW)
templates = Jinja2Templates(directory="templates")


@app.get('/')
def index(request: Request):
    return templates.TemplateResponse("index.html", {"request": request})


@app.websocket("/ws")
async def get_stream(websocket: WebSocket):
    await websocket.accept()
    try:
        while True:
            # camera.read() and cv2.imencode() are blocking and run in the event loop here
            success, frame = camera.read()
            if not success:
                break
            else:
                ret, buffer = cv2.imencode('.jpg', frame)
                # Each WebSocket message carries one complete JPEG image
                await websocket.send_bytes(buffer.tobytes())
                await asyncio.sleep(0.03)
    except (WebSocketDisconnect, ConnectionClosed):
        print("Client disconnected")


if __name__ == '__main__':
    uvicorn.run(app, host='127.0.0.1', port=8000)
```
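Both loops pause between frames (`time.sleep(0.03)` in Option 1, `await asyncio.sleep(0.03)` in Option 2), so the pause alone caps the frame rate at roughly 1 / 0.03 ≈ 33 fps, and any time spent reading and encoding lowers it further. The helper below is a back-of-the-envelope sketch: `max_fps()` is hypothetical, the per-frame timings are values you would measure yourself, and send time is ignored. With a live camera, `camera.read()` typically waits for the device's next frame, so the camera's own frame rate is another ceiling.

```python
def max_fps(read_s: float, encode_s: float, sleep_s: float = 0.03) -> float:
    """Upper bound on frames/second for a read -> encode -> send -> sleep loop.

    read_s and encode_s are hypothetical, measured per-frame durations in seconds;
    network/send time is ignored, so the real rate can only be lower.
    """
    return 1.0 / (read_s + encode_s + sleep_s)


if __name__ == "__main__":
    print(round(max_fps(0.0, 0.0), 1))    # 33.3: the sleep alone
    print(round(max_fps(0.01, 0.01), 1))  # 20.0: 10 ms read + 10 ms encode
```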

Below is the HTML template for establishing the WebSocket connection, receiving the image bytes, and creating a Blob URL (which is released after the image is loaded, so that the object can subsequently be garbage collected rather than being kept in memory unnecessarily), as shown here, to display the video frame in the browser.

templates/index.html

```html
<!DOCTYPE html>
<html>
    <head>
        <title>Live Streaming</title>
    </head>
    <body>
        <img id="frame" src="">
        <script>
            let ws = new WebSocket("ws://localhost:8000/ws");
            let image = document.getElementById("frame");
            image.onload = function(){
                URL.revokeObjectURL(this.src); // release the blob URL once the image is loaded
            }
            ws.onmessage = function(event) {
                image.src = URL.createObjectURL(event.data);
            };
        </script>
    </body>
</html>
```

Below is also a Python client based on the websockets library and OpenCV, which you may use to connect to the server, in order to receive and display the video frames in a Python app.

client.py

```python
from websockets.exceptions import ConnectionClosed
import websockets
import numpy as np
import asyncio
import cv2


async def main():
    url = 'ws://127.0.0.1:8000/ws'
    async for websocket in websockets.connect(url):
        try:
            #count = 1
            while True:
                contents = await websocket.recv()
                arr = np.frombuffer(contents, np.uint8)
                frame = cv2.imdecode(arr, cv2.IMREAD_UNCHANGED)
                cv2.imshow('frame', frame)
                cv2.waitKey(1)
                #cv2.imwrite("frame%d.jpg" % count, frame)
                #count += 1
        except ConnectionClosed:
            continue  # attempt reconnecting to the server (otherwise, call break)


asyncio.run(main())
```

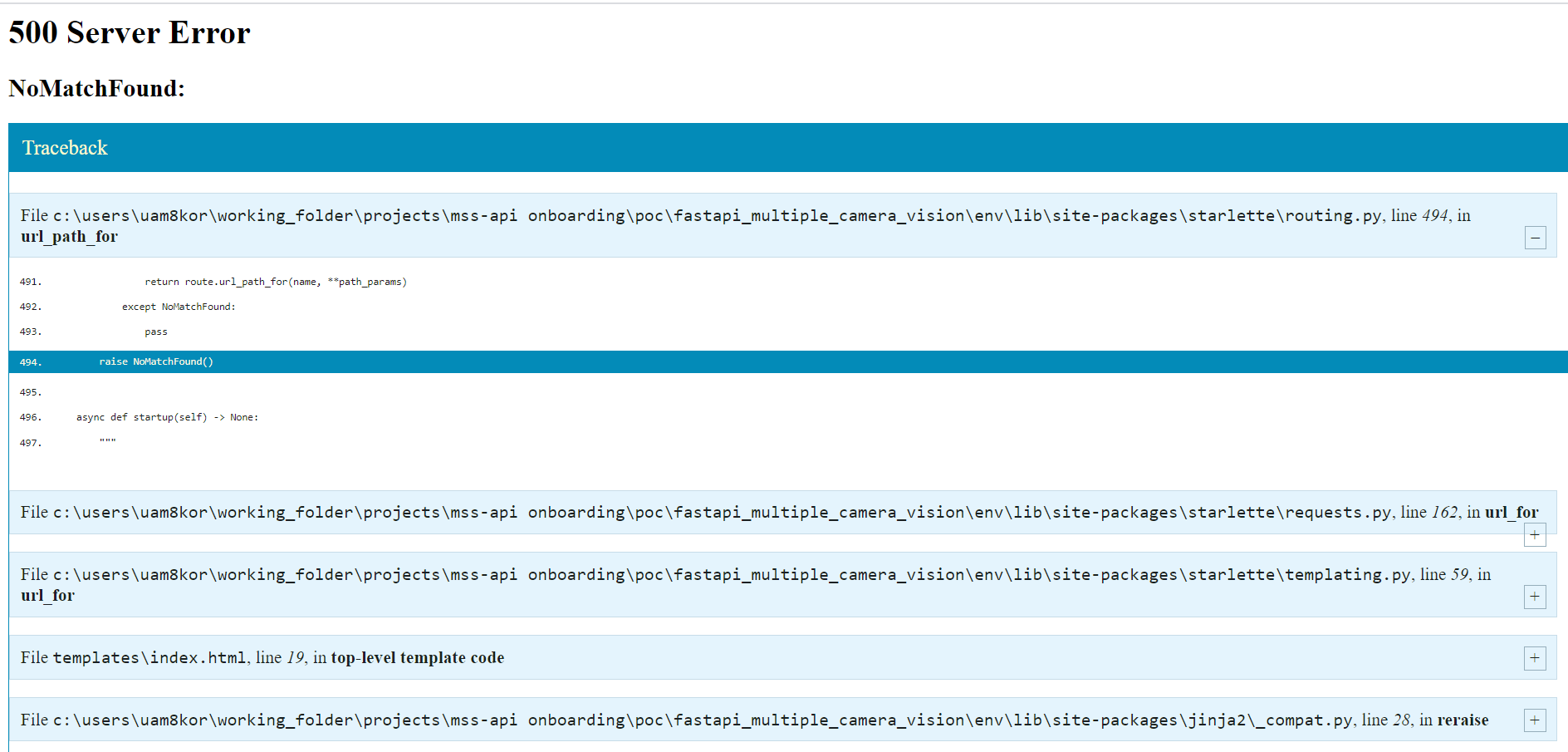
