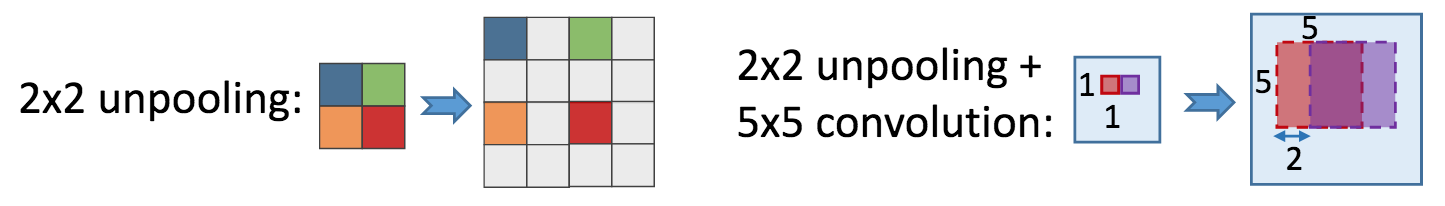

I have noticed in a number of places that people use something like this, usually in fully convolutional networks, autoencoders, and similar architectures:

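```python
from tensorflow.keras.layers import UpSampling2D, Conv2DTranspose

model.add(UpSampling2D(size=(2, 2)))
# f is a placeholder for the (required) number of filters
model.add(Conv2DTranspose(filters=f, kernel_size=k, padding='same', strides=(1, 1)))
```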
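The `UpSampling2D(size=(2, 2))` step has no trainable weights. With its default `interpolation='nearest'` it just repeats each pixel into a 2×2 block before the stride-1 convolution sees it. A minimal pure-Python sketch for a single-channel input (the helper name `upsample_nearest` is hypothetical, not a Keras API):

```python
def upsample_nearest(image, factor=2):
    """Repeat every value of a single-channel 2-D list `factor` times along
    both axes, mimicking UpSampling2D(size=(factor, factor)) with
    interpolation='nearest'. Nothing here is learned."""
    out = []
    for row in image:
        wide = [v for v in row for _ in range(factor)]  # repeat horizontally
        out.extend(list(wide) for _ in range(factor))    # repeat vertically
    return out


x = [[1, 2],
     [3, 4]]
for row in upsample_nearest(x):
    print(row)
# [1, 1, 2, 2]
# [1, 1, 2, 2]
# [3, 3, 4, 4]
# [3, 3, 4, 4]
```

The stride-1 `Conv2DTranspose` that follows then operates on this grid with twice the height and width.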

I am wondering what the difference is between that and simply using:

```python
# f is a placeholder for the (required) number of filters
model.add(Conv2DTranspose(filters=f, kernel_size=k, padding='same', strides=(2, 2)))
```
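For comparison, a stride-2 transposed convolution can be written as a scatter-add: each input pixel stamps a copy of the kernel, scaled by the pixel value, into the output at twice its coordinates, and overlapping copies are summed. The pure-Python sketch below (the function name `conv_transpose2d` is hypothetical) assumes a single channel and no bias or activation, and it omits the cropping Keras applies for `padding='same'`, so it returns the full `(n - 1) * stride + k` output. It is meant to show the arithmetic, not to reproduce Keras's implementation.

```python
def conv_transpose2d(image, kernel, stride):
    """Single-channel 2-D transposed convolution with no padding or cropping.

    Each input pixel scatters a copy of the kernel, scaled by that pixel's
    value, into the output starting at (i * stride, j * stride); where the
    copies overlap they are summed. Output size per axis: (n - 1) * stride + k.
    """
    n_rows, n_cols = len(image), len(image[0])
    k_rows, k_cols = len(kernel), len(kernel[0])
    out = [[0] * ((n_cols - 1) * stride + k_cols)
           for _ in range((n_rows - 1) * stride + k_rows)]
    for i in range(n_rows):
        for j in range(n_cols):
            for a in range(k_rows):
                for b in range(k_cols):
                    out[i * stride + a][j * stride + b] += image[i][j] * kernel[a][b]
    return out


x = [[1, 2],
     [3, 4]]

# Kernel size 2 == stride 2: the kernel copies tile the output without overlap.
# With an all-ones kernel this reproduces nearest-neighbour 2x upsampling.
ones2 = [[1, 1], [1, 1]]
print(conv_transpose2d(x, ones2, stride=2))
# [[1, 1, 2, 2], [1, 1, 2, 2], [3, 3, 4, 4], [3, 3, 4, 4]]

# Kernel size 3, stride 2: neighbouring copies overlap on every other
# row/column, so those positions receive sums of several inputs.
ones3 = [[1] * 3 for _ in range(3)]
for row in conv_transpose2d(x, ones3, stride=2):
    print(row)
# [1, 1, 3, 2, 2]
# [1, 1, 3, 2, 2]
# [4, 4, 10, 6, 6]
# [3, 3, 7, 4, 4]
# [3, 3, 7, 4, 4]
```

In the first case a suitable kernel makes the single strided layer match fixed nearest-neighbour upsampling exactly. In the second, positions where kernel copies overlap collect sums of several inputs, something nearest-neighbour upsampling (which only copies values) does not do on its own.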

Links to any papers that explain this difference are welcome.