Why do we need Unicode?

In the (not too) early days, all that existed was ASCII. This was okay, as all that would ever be needed were a few control characters, punctuation, numbers and letters like the ones in this sentence. Unfortunately, today's strange world of global intercommunication and social media was not foreseen, and it is not too unusual to see English, العربية, 汉语, עִבְרִית, ελληνικά, and ភាសាខ្មែរ in the same document (I hope I didn't break any old browsers).

But for argument's sake, let’s say Joe Average is a software developer. He insists that he will only ever need English, and as such only wants to use ASCII. This might be fine for Joe the user, but this is not fine for Joe the software developer. Approximately half the world uses non-Latin characters and using ASCII is arguably inconsiderate to these people, and on top of that, he is closing off his software to a large and growing economy.

Therefore, an encompassing character set including all languages is needed. Thus came Unicode. It assigns every character a unique number called a code point. One advantage of Unicode over other possible sets is that the first 256 code points are identical to ISO-8859-1, and hence also ASCII. In addition, the vast majority of commonly used characters are representable by only two bytes, in a region called the Basic Multilingual Plane (BMP). Now a character encoding is needed to access this character set, and as the question asks, I will concentrate on UTF-8 and UTF-16.

Memory considerations

So how many bytes give access to what characters in these encodings?

- 1 byte: Standard ASCII

- 2 bytes: Arabic, Hebrew, most European scripts (most notably excluding Georgian)

- 3 bytes: BMP

- 4 bytes: All Unicode characters

- 2 bytes: BMP

- 4 bytes: All Unicode characters

It's worth mentioning now that characters not in the BMP include ancient scripts, mathematical symbols, musical symbols, and rarer Chinese, Japanese, and Korean (CJK) characters.

If you'll be working mostly with ASCII characters, then UTF-8 is certainly more memory efficient. However, if you're working mostly with non-European scripts, using UTF-8 could be up to 1.5 times less memory efficient than UTF-16. When dealing with large amounts of text, such as large web-pages or lengthy word documents, this could impact performance.

Encoding basics

Note: If you know how UTF-8 and UTF-16 are encoded, skip to the next section for practical applications.

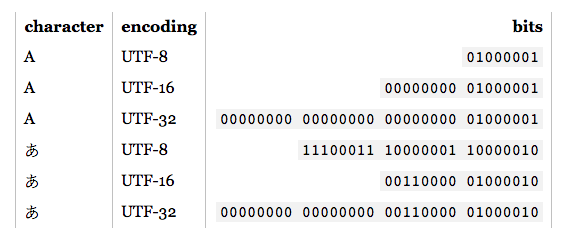

- UTF-8: For the standard ASCII (0-127) characters, the UTF-8 codes are identical. This makes UTF-8 ideal if backwards compatibility is required with existing ASCII text. Other characters require anywhere from 2-4 bytes. This is done by reserving some bits in each of these bytes to indicate that it is part of a multi-byte character. In particular, the first bit of each byte is

1 to avoid clashing with the ASCII characters.

- UTF-16: For valid BMP characters, the UTF-16 representation is simply its code point. However, for non-BMP characters UTF-16 introduces surrogate pairs. In this case a combination of two two-byte portions map to a non-BMP character. These two-byte portions come from the BMP numeric range, but are guaranteed by the Unicode standard to be invalid as BMP characters. In addition, since UTF-16 has two bytes as its basic unit, it is affected by endianness. To compensate, a reserved byte order mark can be placed at the beginning of a data stream which indicates endianness. Thus, if you are reading UTF-16 input, and no endianness is specified, you must check for this.

As can be seen, UTF-8 and UTF-16 are nowhere near compatible with each other. So if you're doing I/O, make sure you know which encoding you are using! For further details on these encodings, please see the UTF FAQ.

Practical programming considerations

Character and string data types: How are they encoded in the programming language? If they are raw bytes, the minute you try to output non-ASCII characters, you may run into a few problems. Also, even if the character type is based on a UTF, that doesn't mean the strings are proper UTF. They may allow byte sequences that are illegal. Generally, you'll have to use a library that supports UTF, such as ICU for C, C++ and Java. In any case, if you want to input/output something other than the default encoding, you will have to convert it first.

Recommended, default, and dominant encodings: When given a choice of which UTF to use, it is usually best to follow recommended standards for the environment you are working in. For example, UTF-8 is dominant on the web, and since HTML5, it has been the recommended encoding. Conversely, both .NET and Java environments are founded on a UTF-16 character type. Confusingly (and incorrectly), references are often made to the "Unicode encoding", which usually refers to the dominant UTF encoding in a given environment.

Library support: The libraries you are using support some kind of encoding. Which one? Do they support the corner cases? Since necessity is the mother of invention, UTF-8 libraries will generally support 4-byte characters properly, since 1, 2, and even 3 byte characters can occur frequently. However, not all purported UTF-16 libraries support surrogate pairs properly since they occur very rarely.

Counting characters: There exist combining characters in Unicode. For example, the code point U+006E (n), and U+0303 (a combining tilde) forms ñ, but the code point U+00F1 forms ñ. They should look identical, but a simple counting algorithm will return 2 for the first example, and 1 for the latter. This isn't necessarily wrong, but it may not be the desired outcome either.

Comparing for equality: A, А, and Α look the same, but they're Latin, Cyrillic, and Greek respectively. You also have cases like C and Ⅽ. One is a letter, and the other is a Roman numeral. In addition, we have the combining characters to consider as well. For more information, see Duplicate characters in Unicode.

Surrogate pairs: These come up often enough on Stack Overflow, so I'll just provide some example links: