Try the bnlearn library, it contains many functions to learn parameters from data and perform the inference.

pip install bnlearn

Your use-case would be like this:

# Import the library

import bnlearn

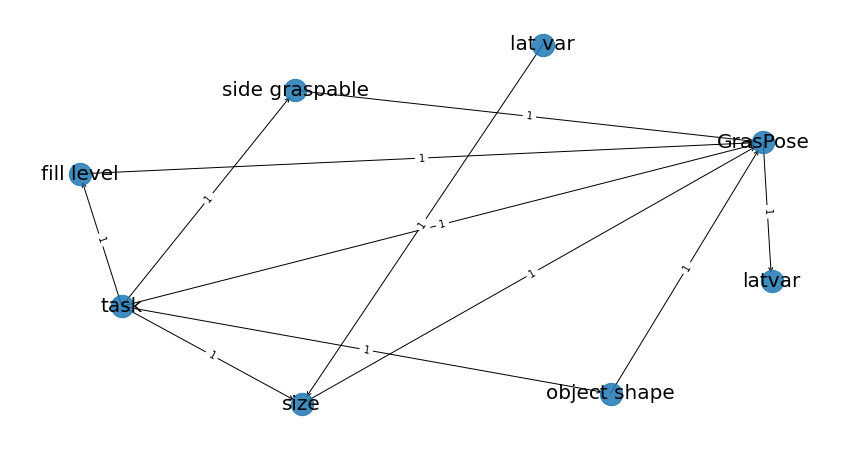

# Define the network structure

edges = [('task', 'size'),

('lat var', 'size'),

('task', 'fill level'),

('task', 'object shape'),

('task', 'side graspable'),

('size', 'GrasPose'),

('task', 'GrasPose'),

('fill level', 'GrasPose'),

('object shape', 'GrasPose'),

('side graspable', 'GrasPose'),

('GrasPose', 'latvar'),

]

# Make the actual Bayesian DAG

DAG = bnlearn.make_DAG(edges)

# DAG is stored in adjacency matrix

print(DAG['adjmat'])

# target task size lat var ... side graspable GrasPose latvar

# source ...

# task False True False ... True True False

# size False False False ... False True False

# lat var False True False ... False False False

# fill level False False False ... False True False

# object shape False False False ... False True False

# side graspable False False False ... False True False

# GrasPose False False False ... False False True

# latvar False False False ... False False False

#

# [8 rows x 8 columns]

# No CPDs are in the DAG. Lets see what happens if we print it.

bnlearn.print_CPD(DAG)

# >[BNLEARN.print_CPD] No CPDs to print. Use bnlearn.plot(DAG) to make a plot.

# Plot DAG. Note that it can be differently orientated if you re-make the plot.

bnlearn.plot(DAG)

![Pre-defined DAG]()

Now we need the data to learn its parameters. Suppose these are stored in your df. The variable names in the data-file must be present in the DAG.

# Read data

df = pd.read_csv('path_to_your_data.csv')

# Learn the parameters and store CPDs in the DAG. Use the methodtype your desire. Options are maximumlikelihood or bayes.

DAG = bnlearn.parameter_learning.fit(DAG, df, methodtype='maximumlikelihood')

# CPDs are present in the DAG at this point.

bnlearn.print_CPD(DAG)

# Start making inferences now. As an example:

q1 = bnlearn.inference.fit(DAG, variables=['lat var'], evidence={'fill level':1, 'size':0, 'task':1})

Below is a working example with a demo dataset (sprinkler). You can play around with this.

# Import example dataset

df = bnlearn.import_example('sprinkler')

print(df)

# Cloudy Sprinkler Rain Wet_Grass

# 0 0 0 0 0

# 1 1 0 1 1

# 2 0 1 0 1

# 3 1 1 1 1

# 4 1 1 1 1

# .. ... ... ... ...

# 995 1 0 1 1

# 996 1 0 1 1

# 997 1 0 1 1

# 998 0 0 0 0

# 999 0 1 1 1

# [1000 rows x 4 columns]

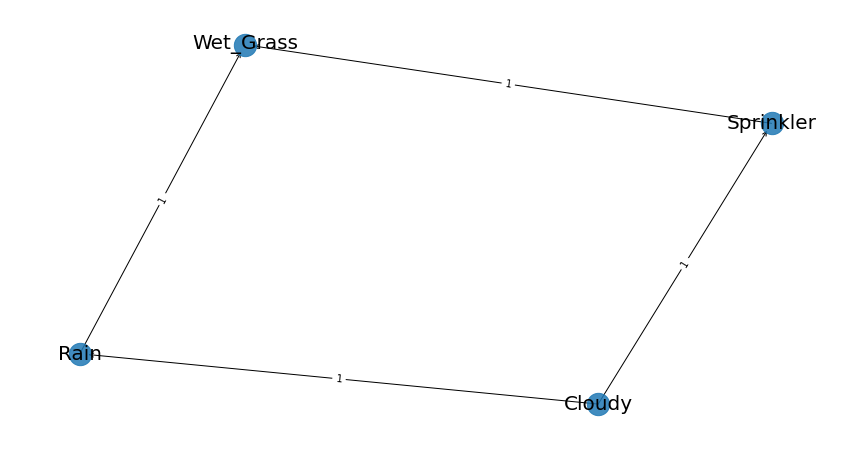

# Define the network structure

edges = [('Cloudy', 'Sprinkler'),

('Cloudy', 'Rain'),

('Sprinkler', 'Wet_Grass'),

('Rain', 'Wet_Grass')]

# Make the actual Bayesian DAG

DAG = bnlearn.make_DAG(edges)

# Print the CPDs

bnlearn.print_CPD(DAG)

# [BNLEARN.print_CPD] No CPDs to print. Use bnlearn.plot(DAG) to make a plot.

# Plot the DAG

bnlearn.plot(DAG)

![enter image description here]()

# Parameter learning on the user-defined DAG and input data

DAG = bnlearn.parameter_learning.fit(DAG, df)

# Print the learned CPDs

bnlearn.print_CPD(DAG)

# [BNLEARN.print_CPD] Independencies:

# (Cloudy _|_ Wet_Grass | Rain, Sprinkler)

# (Sprinkler _|_ Rain | Cloudy)

# (Rain _|_ Sprinkler | Cloudy)

# (Wet_Grass _|_ Cloudy | Rain, Sprinkler)

# [BNLEARN.print_CPD] Nodes: ['Cloudy', 'Sprinkler', 'Rain', 'Wet_Grass']

# [BNLEARN.print_CPD] Edges: [('Cloudy', 'Sprinkler'), ('Cloudy', 'Rain'), ('Sprinkler', 'Wet_Grass'), ('Rain', 'Wet_Grass')]

# CPD of Cloudy:

# +-----------+-------+

# | Cloudy(0) | 0.494 |

# +-----------+-------+

# | Cloudy(1) | 0.506 |

# +-----------+-------+

# CPD of Sprinkler:

# +--------------+--------------------+--------------------+

# | Cloudy | Cloudy(0) | Cloudy(1) |

# +--------------+--------------------+--------------------+

# | Sprinkler(0) | 0.4807692307692308 | 0.7075098814229249 |

# +--------------+--------------------+--------------------+

# | Sprinkler(1) | 0.5192307692307693 | 0.2924901185770751 |

# +--------------+--------------------+--------------------+

# CPD of Rain:

# +---------+--------------------+---------------------+

# | Cloudy | Cloudy(0) | Cloudy(1) |

# +---------+--------------------+---------------------+

# | Rain(0) | 0.6518218623481782 | 0.33695652173913043 |

# +---------+--------------------+---------------------+

# | Rain(1) | 0.3481781376518219 | 0.6630434782608695 |

# +---------+--------------------+---------------------+

# CPD of Wet_Grass:

# +--------------+--------------------+---------------------+---------------------+---------------------+

# | Rain | Rain(0) | Rain(0) | Rain(1) | Rain(1) |

# +--------------+--------------------+---------------------+---------------------+---------------------+

# | Sprinkler | Sprinkler(0) | Sprinkler(1) | Sprinkler(0) | Sprinkler(1) |

# +--------------+--------------------+---------------------+---------------------+---------------------+

# | Wet_Grass(0) | 0.7553816046966731 | 0.33755274261603374 | 0.25588235294117645 | 0.37910447761194027 |

# +--------------+--------------------+---------------------+---------------------+---------------------+

# | Wet_Grass(1) | 0.2446183953033268 | 0.6624472573839663 | 0.7441176470588236 | 0.6208955223880597 |

# +--------------+--------------------+---------------------+---------------------+---------------------+

# Make inference

q1 = bnlearn.inference.fit(DAG, variables=['Wet_Grass'], evidence={'Rain':1, 'Sprinkler':0, 'Cloudy':1})

# +--------------+------------------+

# | Wet_Grass | phi(Wet_Grass) |

# +==============+==================+

# | Wet_Grass(0) | 0.2559 |

# +--------------+------------------+

# | Wet_Grass(1) | 0.7441 |

# +--------------+------------------+

print(q1.values)

# array([0.25588235, 0.74411765])

More examples can be found on documentation the pages of bnlearn or read the blog.