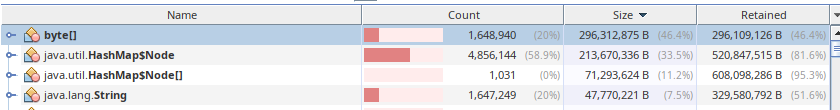

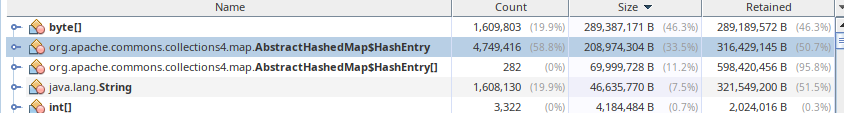

I've currently got a spreadsheet-type program that keeps its data in an ArrayList of HashMaps. You'll no doubt be shocked when I tell you that this hasn't proven ideal. The overhead seems to take up about 5x as much memory as the data itself.

This question asks about efficient collections libraries, and the answer was to use Google Collections. My follow-up is "which part?". I've been reading through the documentation, but it doesn't give me a very good sense of which classes are a good fit for this. (I'm also open to other libraries or suggestions.)

So I'm looking for something that will let me store dense spreadsheet-type data with minimal memory overhead.

- My columns are currently referenced by Field objects, rows by their indexes, and values are Objects, almost always Strings.

- Some columns will have a lot of repeated values.

- The primary operations are updating or removing records based on the values of certain fields, and adding, removing, or combining columns.
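To make the current layout concrete, here is a simplified sketch of roughly what I have now. The names are made up for illustration: `SheetSketch`, `removeWhere`, and `removeColumn` are hypothetical, and the `Field` class is just a stand-in for my real one.

```java
import java.util.ArrayList;
import java.util.HashMap;
import java.util.Iterator;
import java.util.List;
import java.util.Map;

class SheetSketch {
    // Stand-in for the real Field class (hypothetical, simplified).
    static final class Field {
        final String name;
        Field(String name) { this.name = name; }
    }

    // One HashMap per row, keyed by column. This is where the overhead comes from:
    // every row carries its own hash table and one entry object per cell.
    final List<Map<Field, Object>> rows = new ArrayList<>();

    void addRow(Map<Field, Object> row) {
        rows.add(row);
    }

    Object get(int rowIndex, Field column) {
        return rows.get(rowIndex).get(column);
    }

    // Remove every record whose value in the given column equals the given value.
    int removeWhere(Field column, Object value) {
        int removed = 0;
        for (Iterator<Map<Field, Object>> it = rows.iterator(); it.hasNext();) {
            if (value.equals(it.next().get(column))) {
                it.remove();
                removed++;
            }
        }
        return removed;
    }

    // Dropping a column has to touch every row's map.
    void removeColumn(Field column) {
        for (Map<Field, Object> row : rows) {
            row.remove(column);
        }
    }
}
```

Whatever replaces this needs to support the same kinds of operations (lookup by row index and column, removal by field value, and dropping a column) with far less per-cell overhead.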

I'm aware of options like H2 and Derby, but in this case I'm not looking to use an embedded database.

EDIT: If you're suggesting libraries, I'd also appreciate it if you could point me to a particular class or two in them that would apply here. Sun's documentation usually says which operations are O(1), which are O(N), and so on, but I'm not seeing much of that in third-party libraries, nor really any description of which classes are best suited to what.