I am getting the following error with Spark 2.4.8, Spark 3.1.3, and Spark 3.2.2. The environment is Hadoop 3.2, HBase 2.4.14, Hive 3.1.13, and Scala 2.12.

    Exception in thread "main" java.io.IOException: Cannot create a record reader because of a previous error. Please look at the previous logs lines from the task's full log for more details.
        at org.apache.hadoop.hbase.mapreduce.TableInputFormatBase.getSplits(TableInputFormatBase.java:253)
        at org.apache.spark.rdd.NewHadoopRDD.getPartitions(NewHadoopRDD.scala:131)
        at org.apache.spark.rdd.RDD.$anonfun$partitions$2(RDD.scala:300)

I am calling spark-submit as follows.

    export HBASE_JAR_FILES="/usr/local/hbase/lib/hbase-unsafe-4.1.1.jar,/usr/local/hbase/lib/hbase-common-2.4.14.jar,/usr/local/hbase/lib/hbase-client-2.4.14.jar,/usr/local/hbase/lib/hbase-protocol-2.4.14.jar,/usr/local/hbase/lib/guava-11.0.2.jar,/usr/local/hbase/lib/client-facing-thirdparty/htrace-core4-4.2.0-incubating.jar"

    /opt/spark/bin/spark-submit \
      --master local[*] \
      --deploy-mode client \
      --num-executors 1 \
      --executor-cores 1 \
      --executor-memory 480m \
      --driver-memory 512m \
      --driver-class-path $(echo $HBASE_JAR_FILES | tr ',' ':') \
      --jars "$HBASE_JAR_FILES" \
      --files /usr/local/hive/conf/hive-site.xml \
      --conf "spark.hadoop.metastore.catalog.default=hive" \
      --files /usr/local/hbase/conf/hbase-site.xml \
      --class com.hbase.dynamodb.migration.HbaseToDynamoDbSparkMain \
      --conf "spark.driver.maxResultSize=256m" \
      /home/hadoop/scala-2.12/sbt-1.0/HbaseToDynamoDb-assembly-0.1.0-SNAPSHOT.jar
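Since the jar list is passed both to `--jars` (comma-separated) and to `--driver-class-path` (colon-separated), one thing worth ruling out is a mistyped or missing path in `HBASE_JAR_FILES`. The following is only a minimal sketch of such a check. The function name `check_jars` is hypothetical and not part of Spark or HBase, and it only verifies that each entry exists as a regular file, not that the list of jars is complete.

```shell
# Hypothetical helper (an assumption for illustration, not a Spark/HBase tool):
# prints every entry of a comma-separated jar list that is not a regular file
# and returns non-zero if at least one entry is missing.
check_jars() {
  local jar_list="$1"
  local missing=0
  local jar
  local IFS=','            # split the list on commas for the loop below
  for jar in $jar_list; do
    if [ ! -f "$jar" ]; then
      echo "missing: $jar"
      missing=$((missing + 1))
    fi
  done
  [ "$missing" -eq 0 ]     # exit status 0 only when nothing is missing
}
```

It could be called as `check_jars "$HBASE_JAR_FILES" && echo "all jars present"` right before the `spark-submit` line.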

The code is as follows.

    import org.apache.spark.sql.SparkSession

    // Build a Hive-enabled session that points at the local metastore.
    val spark: SparkSession = SparkSession.builder()
      .master("local[*]")
      .appName("Hbase To DynamoDb migration demo")
      .config("hive.metastore.warehouse.dir", "/user/hive/warehouse")
      .config("hive.metastore.uris", "thrift://localhost:9083")
      .enableHiveSupport()
      .getOrCreate()

    // List the databases visible through the metastore.
    spark.catalog.listDatabases().show()

    // Query the HBase-backed Hive external table.
    val sqlDF = spark.sql("select rowkey, office_address, office_phone, name, personal_phone from hvcontacts")
    sqlDF.show()

The Hive external table was created on top of HBase as follows.

    create external table if not exists hvcontacts (
      rowkey STRING,
      office_address STRING,
      office_phone STRING,
      name STRING,
      personal_phone STRING
    )
    STORED BY 'org.apache.hadoop.hive.hbase.HBaseStorageHandler'
    WITH SERDEPROPERTIES ('hbase.columns.mapping' = ':key,Office:Address,Office:Phone,Personal:name,Personal:Phone')
    TBLPROPERTIES ('hbase.table.name' = 'Contacts');

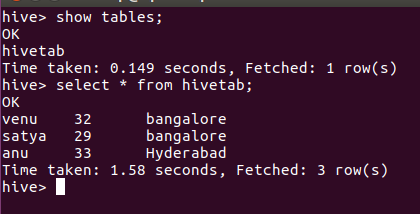

The metastore is in MySQL, and I can query the TBLS table to verify that the external table exists in Hive. Is anyone else facing a similar issue?

NOTE: I am not using the Hive Spark connector here.