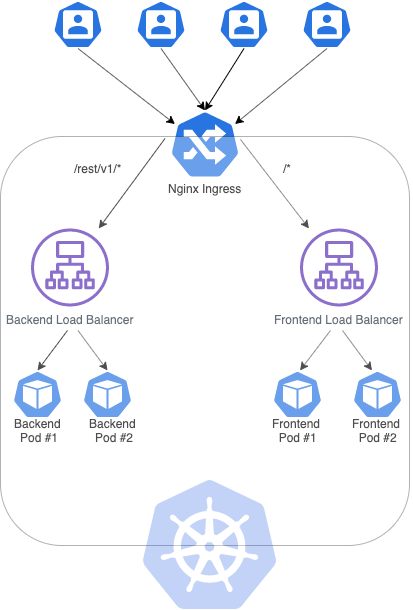

Currently, I'm trying to create a Kubernetes cluster on Google Cloud with two load balancers: one for the backend (Spring Boot) and another for the frontend (Angular), where each service (load balancer) routes to 2 replicas (pods). To achieve that, I created the following ingress:

```yaml
apiVersion: extensions/v1beta1
kind: Ingress
metadata:
  name: sample-ingress
spec:
  rules:
  - http:
      paths:
      - path: /rest/v1/*
        backend:
          serviceName: sample-backend
          servicePort: 8082
      - path: /*
        backend:
          serviceName: sample-frontend
          servicePort: 80
```

The ingress above lets the frontend app communicate with the REST API exposed by the backend app. However, I need sticky sessions, so that each user always talks to the same pod, because of the authentication mechanism provided by the backend. To clarify: if a user authenticates in pod #1, the cookie will not be recognized by pod #2.

To overcome this issue, I read that the NGINX Ingress controller can handle this situation, so I installed it with Helm following the steps available here: https://kubernetes.github.io/ingress-nginx/deploy/

The diagram at the top of this post shows the architecture I'm trying to build.

I'm using the following services (I'll paste just one of them; the other is similar):

```yaml
apiVersion: v1
kind: Service
metadata:
  name: sample-backend
spec:
  selector:
    app: sample
    tier: backend
  ports:
  - protocol: TCP
    port: 8082
    targetPort: 8082
  type: LoadBalancer
```

And I declared the following ingress:

```yaml
apiVersion: extensions/v1beta1
kind: Ingress
metadata:
  name: sample-nginx-ingress
  annotations:
    kubernetes.io/ingress.class: "nginx"
    nginx.ingress.kubernetes.io/affinity: cookie
    nginx.ingress.kubernetes.io/affinity-mode: persistent
    nginx.ingress.kubernetes.io/session-cookie-hash: sha1
    nginx.ingress.kubernetes.io/session-cookie-name: sample-cookie
spec:
  rules:
  - http:
      paths:
      - path: /rest/v1/*
        backend:
          serviceName: sample-backend
          servicePort: 8082
      - path: /*
        backend:
          serviceName: sample-frontend
          servicePort: 80
```

After that, I ran `kubectl apply -f sample-nginx-ingress.yaml` to apply the ingress; it is created and its status is OK. However, when I access the URL shown in the "Endpoints" column, the browser can't connect to it.

Am I doing anything wrong?

Edit 1

**Updated service and ingress configurations**

After some help, I've managed to access the services through the NGINX Ingress. Below are the configurations:

Nginx Ingress

The paths shouldn't contain the `*`, unlike the default Kubernetes (GCE) ingress, where the `*` is mandatory to route the paths I want.

```yaml
apiVersion: extensions/v1beta1
kind: Ingress
metadata:
  name: sample-ingress
  annotations:
    kubernetes.io/ingress.class: "nginx"
    nginx.ingress.kubernetes.io/affinity: "cookie"
    nginx.ingress.kubernetes.io/session-cookie-name: "sample-cookie"
    nginx.ingress.kubernetes.io/session-cookie-expires: "172800"
    nginx.ingress.kubernetes.io/session-cookie-max-age: "172800"
spec:
  rules:
  - http:
      paths:
      - path: /rest/v1/
        backend:
          serviceName: sample-backend
          servicePort: 8082
      - path: /
        backend:
          serviceName: sample-frontend
          servicePort: 80
```
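The `extensions/v1beta1` Ingress API used above was removed in Kubernetes 1.22. On clusters running 1.19 or later, the same routing can be expressed with `networking.k8s.io/v1`, where `pathType: Prefix` replaces the trailing-slash/wildcard conventions discussed above. A minimal sketch, assuming the controller registered an IngressClass named `nginx` (an assumption about this particular Helm install); the annotations are carried over unchanged:

```yaml
apiVersion: networking.k8s.io/v1
kind: Ingress
metadata:
  name: sample-ingress
  annotations:
    # Cookie-based affinity handled by ingress-nginx (same values as above)
    nginx.ingress.kubernetes.io/affinity: "cookie"
    nginx.ingress.kubernetes.io/session-cookie-name: "sample-cookie"
    nginx.ingress.kubernetes.io/session-cookie-expires: "172800"
    nginx.ingress.kubernetes.io/session-cookie-max-age: "172800"
spec:
  # Replaces the kubernetes.io/ingress.class annotation; assumes an IngressClass named "nginx"
  ingressClassName: nginx
  rules:
  - http:
      paths:
      - path: /rest/v1          # Prefix matches /rest/v1 and /rest/v1/...
        pathType: Prefix
        backend:
          service:
            name: sample-backend
            port:
              number: 8082
      - path: /                 # everything else goes to the Angular frontend
        pathType: Prefix
        backend:
          service:
            name: sample-frontend
            port:
              number: 80
```

If the `IngressClass` has a different name in your install, `kubectl get ingressclass` lists the available ones.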

Services

Also, the services shouldn't be of type "LoadBalancer" but "ClusterIP" as below:

```yaml
apiVersion: v1
kind: Service
metadata:
  name: sample-backend
spec:
  selector:
    app: sample
    tier: backend
  ports:
  - protocol: TCP
    port: 8082
    targetPort: 8082
  type: ClusterIP
```

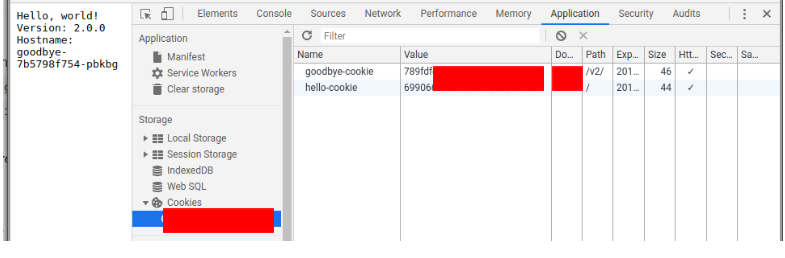

However, I still can't get sticky sessions working in my Kubernetes cluster: I'm still getting 403 responses, and the cookie name is not even replaced, so I suspect the annotations are not being applied as expected.

- Service? Is it `LoadBalancer` or `NodePort`? – Hufford
- `Ingress`... you must have a Service of type `NodePort` on GKE for this. – Hufford
- `Service` yaml? – Schoenburg
- `NodePort` when using `Ingress` on GCP, but I am not sure. – Hufford
- `kubectl exec -it $(kubectl get pods -l app=nginx-ingress,component=controller -o jsonpath='{.items[0].metadata.name}') -- /nginx-ingress-controller --version` – Ginnygino
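For comparison, the built-in GKE Ingress controller (the one serving a plain `sample-ingress` without the `nginx` class annotation) can also provide cookie affinity through a `BackendConfig`, and its backends need to be `NodePort` Services unless container-native load balancing (NEGs) is enabled, which matches the `NodePort` suggestion in the comments. A minimal sketch, assuming a GKE version that supports `cloud.google.com/v1` BackendConfig; the name `sample-backendconfig` and the TTL value are hypothetical:

```yaml
# Hypothetical BackendConfig: the Google load balancer issues its own affinity cookie
apiVersion: cloud.google.com/v1
kind: BackendConfig
metadata:
  name: sample-backendconfig
spec:
  sessionAffinity:
    affinityType: "GENERATED_COOKIE"
    affinityCookieTtlSec: 3600   # cookie lifetime in seconds (hypothetical value)
---
apiVersion: v1
kind: Service
metadata:
  name: sample-backend
  annotations:
    # Attach the BackendConfig to all ports of this Service
    cloud.google.com/backend-config: '{"default": "sample-backendconfig"}'
spec:
  type: NodePort   # instance-group backends of a GCE Ingress must be NodePort
  selector:
    app: sample
    tier: backend
  ports:
  - protocol: TCP
    port: 8082
    targetPort: 8082
```

With this approach the NGINX controller is not involved at all, so the `nginx.ingress.kubernetes.io/*` annotations would not apply.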