My team has encountered a deadlock that I suspect is a bug in the Windows implementation of SRW locks. The code below is a distilled version of real code. Here's the summary:

- Main thread acquires exclusive lock

- Main thread creates N children threads

- Each child thread

- Acquires a shared lock

- Spins until all children have acquired a shared lock

- Releases the shared lock

- Main thread releases exclusive lock

Yes this could be done with std::latch in C++20. That's not the point.

This code works most of the time. However roughly 1 in 5000 loops it deadlocks. When it deadlocks exactly 1 child successfully acquires a shared lock and N-1 children are stuck in lock_shared(). On Windows this function calls into RtlAcquireSRWLockShared and blocks in NtWaitForAlertByThreadId.

The behavior is observed when used std::shared_mutex directly, std::shared_lock/std::unique_lock, or simply calling SRW functions directly.

A 2017 Raymond Chen post asks about this exact behavior, but user error is blamed.

This looks like an SRW bug to me. It's maybe worth noting that if a child doesn't attempt to latch and calls unlock_shared that this will wake its blocked siblings. There is nothing in the documentation for std::shared_lock or *SRW* that suggests is allowed to block even when there is not an active exclusive lock.

This deadlock has not been observed on non-Windows platforms.

Example code:

#include <atomic>

#include <cstdint>

#include <iostream>

#include <memory>

#include <shared_mutex>

#include <thread>

#include <vector>

struct ThreadTestData {

int32_t numThreads = 0;

std::shared_mutex sharedMutex = {};

std::atomic<int32_t> readCounter;

};

int DoStuff(ThreadTestData* data) {

// Acquire reader lock

data->sharedMutex.lock_shared();

// wait until all read threads have acquired their shared lock

data->readCounter.fetch_add(1);

while (data->readCounter.load() != data->numThreads) {

std::this_thread::yield();

}

// Release reader lock

data->sharedMutex.unlock_shared();

return 0;

}

int main() {

int count = 0;

while (true) {

ThreadTestData data = {};

data.numThreads = 5;

// Acquire write lock

data.sharedMutex.lock();

// Create N threads

std::vector<std::unique_ptr<std::thread>> readerThreads;

readerThreads.reserve(data.numThreads);

for (int i = 0; i < data.numThreads; ++i) {

readerThreads.emplace_back(std::make_unique<std::thread>(DoStuff, &data));

}

// Release write lock

data.sharedMutex.unlock();

// Wait for all readers to succeed

for (auto& thread : readerThreads) {

thread->join();

}

// Cleanup

readerThreads.clear();

// Spew so we can tell when it's deadlocked

count += 1;

std::cout << count << std::endl;

}

return 0;

}

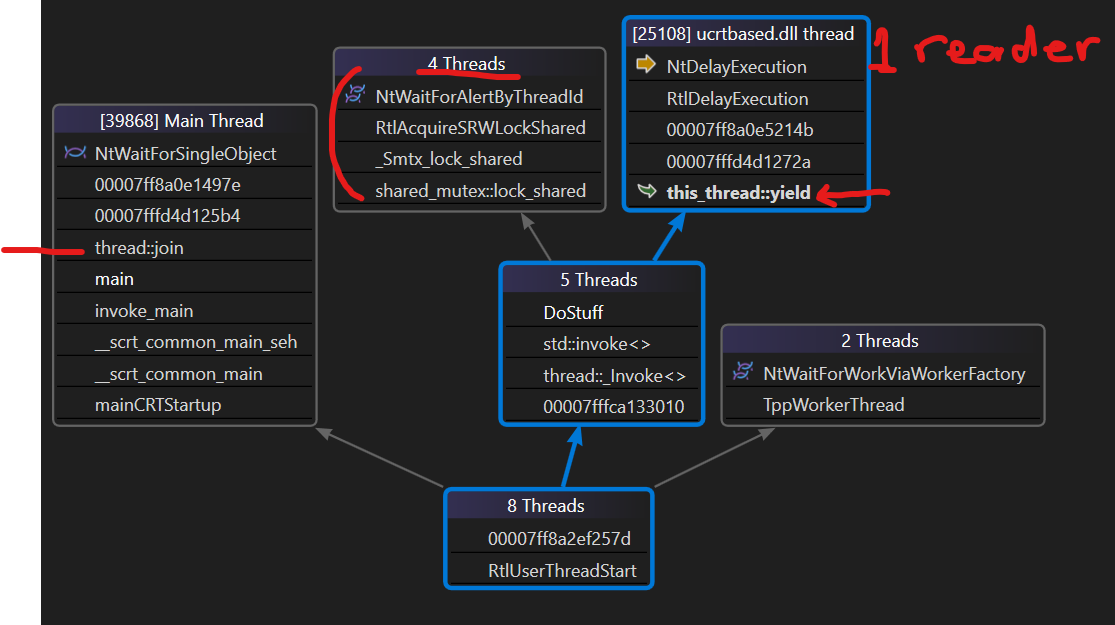

Here's a picture of the parallel stacks. You can see the main thread is correctly blocking on thread::join. One reader thread acquired the lock and is in a yield loop. Four reader threads are blocked within lock_shared.

std::shared_mutex/SRWallows this behavior please point it out. – AvowntYieldExecutionand all other readers are blocked onAcquireSRWLockShared. I added a screenshot of the Parallel Stacks view to the root post. It's effectively identical as my original code. It still deadlocks roughly once per few thousand. – AvowSRWLock(or thestd::shared_lockinner handle) is a low integer. Values between 0x01 and 0x50 are quite common. Numbers can be re-used. – Avow0x7263aff6f3,0xedf26ffb63,0xb5606ff843,0x1e6e8ff753,0xa811bffae3. Always ends with a 3. Very curious!! I think it always sets 40 bits, never the full 64. They also all include the same ff. So it definitely doesn't seem random. The docs don't include any details on what the state means that I can tell. learn.microsoft.com/en-us/windows/win32/sync/… – Avowmain.cpp. As bare bones as it gets. – AvowSRWLOCKto my classCPushLock-#define SRWLOCK CPushLockand all 4 api calls -#define AcquireSRWLockShared(p) (p)->AcquireShared()and so on. are my implementation also have bug )). now i need to go, but some late try full research bug wich you found – Privilegedstd::this_thread::yield();withSleep(10). Once I get it even after1is dumped, i.e. on the second iteration of the main loop. Interesting fact,readCounteris always 1, i.e. 1 thread is waked up, other 4 threads are not. – Tayibsrw.exe *2000) and my implementation - push.exe which have no deadlocks (push.exe *2000). look src code in SrwTest.cpp. some late i write full research on this. – Privileged