Task

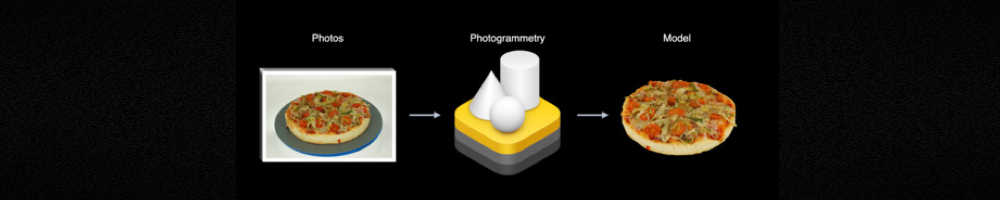

I would like to capture a real-world texture and apply it to a reconstructed mesh produced with the help of a LiDAR scanner. I suppose the Projection-View-Model matrices should be used for that. The texture must be captured from a fixed point of view (PoV), for example, from the center of a room. However, the ideal solution would be to apply the `environmentTexturing` data, which is collected in the scene as a cube-map texture.
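To make the projection idea concrete, here is a minimal sketch of what I have in mind, not a working solution. It assumes an `ARMeshAnchor` and the `ARFrame` captured at the fixed PoV; the helper names `vertex(at:in:)`, `normalizedUV(for:imageSize:)` and `textureCoordinates(for:frame:)` are my own and hypothetical. Each mesh vertex is moved to world space with the anchor's model matrix and then projected into the camera image with `ARCamera.projectPoint(_:orientation:viewportSize:)`. I assume `.landscapeRight` because that is the native orientation of the captured image, and depending on the texture convention the `v` coordinate may need to be flipped.

```swift
import ARKit

/// Hypothetical helper: reads one vertex (anchor-local space) from the mesh geometry.
func vertex(at index: Int, in geometry: ARMeshGeometry) -> SIMD3<Float> {
    let source = geometry.vertices
    let pointer = source.buffer.contents()
        .advanced(by: source.offset + source.stride * index)
    let v = pointer.assumingMemoryBound(to: (Float, Float, Float).self).pointee
    return SIMD3<Float>(v.0, v.1, v.2)
}

/// Hypothetical helper: converts a pixel position to normalized UV,
/// or nil when the pixel lies outside the image.
func normalizedUV(for pixel: CGPoint, imageSize: CGSize) -> SIMD2<Float>? {
    let u = Float(pixel.x / imageSize.width)
    let v = Float(pixel.y / imageSize.height)
    guard (0...1).contains(u), (0...1).contains(v) else { return nil }
    return SIMD2<Float>(u, v)
}

/// Projects every vertex of the anchor into the camera image of `frame`.
/// The result is nil for vertices that are behind the camera or off-screen.
func textureCoordinates(for anchor: ARMeshAnchor, frame: ARFrame) -> [SIMD2<Float>?] {
    let camera = frame.camera
    let imageSize = camera.imageResolution
    let worldToCamera = camera.transform.inverse
    let geometry = anchor.geometry

    return (0..<geometry.vertices.count).map { index in
        // Model matrix: anchor-local space -> world space.
        let local = vertex(at: index, in: geometry)
        let world = anchor.transform * SIMD4<Float>(local, 1)

        // The camera looks down its negative z axis, so z >= 0 means "behind".
        guard (worldToCamera * world).z < 0 else { return nil }

        // View + projection: world space -> pixel position in the captured image.
        let pixel = camera.projectPoint(SIMD3<Float>(world.x, world.y, world.z),
                                        orientation: .landscapeRight,
                                        viewportSize: imageSize)
        return normalizedUV(for: pixel, imageSize: imageSize)
    }
}
```

This sketch ignores occlusion: a vertex hidden behind another surface still receives a UV, so it would pick up the color of whatever is in front of it.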

Take a look at the 3D Scanner App. It's a reference app that can export a model together with its texture.

I need to capture the texture in a single pass; I do not need to update it in real time. I realize that changing the PoV leads to a wrong perception of the texture, in other words, to texture distortion. I also realize that RealityKit uses dynamic tessellation and automatic texture mipmapping (the texture's resolution depends on the distance it was captured from).
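For the single capture itself, I assume something along these lines would be enough: grab the session's current frame once and convert its pixel buffer to a `CGImage`. The function name `captureCameraImage(from:)` is hypothetical, and this is only a sketch of the one-shot capture, not of the texturing step.

```swift
import ARKit
import CoreImage

/// Hypothetical helper: grabs the current camera image once.
/// Returns nil when the session has not delivered a frame yet.
func captureCameraImage(from session: ARSession) -> CGImage? {
    guard let frame = session.currentFrame else { return nil }

    // capturedImage is a CVPixelBuffer in the sensor's native (landscape) orientation.
    let ciImage = CIImage(cvPixelBuffer: frame.capturedImage)
    return CIContext().createCGImage(ciImage, from: ciImage.extent)
}
```

In the view controller this would be called once, for example as `captureCameraImage(from: arView.session)`, after the mesh has been reconstructed.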

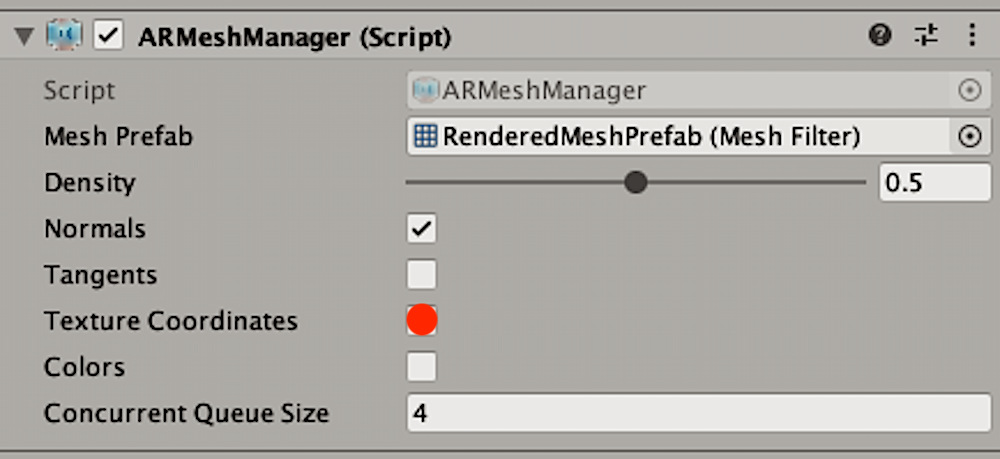

    import UIKit
    import RealityKit
    import ARKit
    import Metal
    import ModelIO

    class ViewController: UIViewController, ARSessionDelegate {

        @IBOutlet var arView: ARView!

        override func viewDidLoad() {
            super.viewDidLoad()

            arView.session.delegate = self

            // Visualize the reconstructed mesh as a debug overlay
            arView.debugOptions.insert(.showSceneUnderstanding)

            let config = ARWorldTrackingConfiguration()
            config.sceneReconstruction = .mesh        // requires a LiDAR-equipped device
            config.environmentTexturing = .automatic  // collect cube-map environment textures
            arView.session.run(config)
        }
    }

Question

- How can I capture a real-world texture and apply it to a reconstructed 3D mesh?