I'm trying to get a list of the names of all the files present in a directory using Node.js. I want output that is an array of filenames. How can I do this?

You can use the fs.readdir or fs.readdirSync methods. fs is included in Node.js core, so there's no need to install anything.

fs.readdir

const testFolder = './tests/';

const fs = require('fs');

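fs.readdir(testFolder, (err, files) => {

// files is undefined when the read fails, so check err first

if (err) {

console.error(err);

return;

}

files.forEach(file => {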

console.log(file);

});

});

fs.readdirSync

const testFolder = './tests/';

const fs = require('fs');

fs.readdirSync(testFolder).forEach(file => {

console.log(file);

});

The difference between the two methods is that the first one is asynchronous, so you have to provide a callback function that will be executed when the read process ends.

The second is synchronous: it returns the array of file names, but it blocks any further execution of your code until the read process ends.
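To make the difference concrete, here is a minimal sketch that lists the same directory both ways. The temporary directory and the file names `a.txt` and `b.txt` are made up for the example so that it runs anywhere; they are not part of the answer above.

```javascript
const fs = require('fs');
const os = require('os');
const path = require('path');

// Hypothetical sample directory, created only so the example is runnable.
const sampleDir = fs.mkdtempSync(path.join(os.tmpdir(), 'readdir-demo-'));
fs.writeFileSync(path.join(sampleDir, 'a.txt'), '');
fs.writeFileSync(path.join(sampleDir, 'b.txt'), '');

// Synchronous: the array is the return value, available on the next line.
const syncNames = fs.readdirSync(sampleDir).sort();
console.log('sync:', syncNames);

// Asynchronous: the array only exists inside the callback, which runs later.
let asyncNames = [];
fs.readdir(sampleDir, (err, files) => {
  if (err) {
    console.error(err);
    return;
  }
  asyncNames = files.sort();
  console.log('async:', asyncNames);
});

// Still empty here, because the callback has not run yet.
console.log('right after readdir():', asyncNames);
```

The last `console.log` prints an empty array, which is the usual reason an array filled inside the callback looks empty when it is read outside of it.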

readdir also shows directory names. To filter these, use fs.stat(path, callback(err, stats)) and stats.isDirectory(). –

Nolpros ls or dir /b/s for this job? Would have thought these methods would be much faster than iterating in Node... –

Gilley ls? Just wait until somebody creates some filenames with embedded spaces and newlines… –

Turbary ls isn't really good for file lists. But find is pretty good at it :) –

Gilley withFileTypes option for the readdir and readdirSync functions and the isDirectory() method can be used to filter just the files in the directory - docs and an example here –

Concierge readdirSync I push the file into my array I defined in first line of the class. In the readdirSync the array size is 1 but, out of that scope, the size is 0! –

Barbra IMO the most convenient way to do such tasks is to use a glob tool. Here's a glob package for node.js. Install with

npm install glob

Then use wild card to match filenames (example taken from package's website)

var glob = require("glob")

// options is optional

glob("**/*.js", options, function (er, files) {

// files is an array of filenames.

// If the `nonull` option is set, and nothing

// was found, then files is ["**/*.js"]

// er is an error object or null.

})

If you are planning on using globby here is an example to look for any xml files that are under current folder

var globby = require('globby');

const paths = await globby("**/*.xml");

cwd in the options object. –

Pelkey glob outside of itself? Eg. I want to console.log the results, but not inside glob()? –

Ferraro glob.sync(pattern, [options]) method may be easier to use as it simply returns an array of file names, rather than using a callback. More info here: github.com/isaacs/node-glob –

Compensation As of Node v10.10.0, it is possible to use the new withFileTypes option for fs.readdir and fs.readdirSync in combination with the dirent.isDirectory() function to filter for filenames in a directory. That looks like this:

fs.readdirSync('./dirpath', {withFileTypes: true})

.filter(item => !item.isDirectory())

.map(item => item.name)

The returned array is in the form:

['file1.txt', 'file2.txt', 'file3.txt']
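As a rough illustration of the same option, the sketch below splits one listing into file names and directory names. The temporary directory and the names `file1.txt`, `file2.txt` and `sub` are assumptions made up so the example is self-contained.

```javascript
const fs = require('fs');
const os = require('os');
const path = require('path');

// Hypothetical sample layout: two files and one subdirectory.
const root = fs.mkdtempSync(path.join(os.tmpdir(), 'dirent-demo-'));
fs.writeFileSync(path.join(root, 'file1.txt'), '');
fs.writeFileSync(path.join(root, 'file2.txt'), '');
fs.mkdirSync(path.join(root, 'sub'));

// One readdir call returns fs.Dirent objects, so no extra stat call is needed.
const entries = fs.readdirSync(root, { withFileTypes: true });

const fileNames = entries.filter(e => e.isFile()).map(e => e.name).sort();
const dirNames = entries.filter(e => e.isDirectory()).map(e => e.name).sort();

console.log(fileNames);
console.log(dirNames);
```

Note that `isFile()` is stricter than `!isDirectory()`: it also leaves out symbolic links and other special entries, which may or may not be what you want.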

names of the files –

Allowable The answer above does not perform a recursive search into the directory though. Here's what I did for a recursive search (using node-walk: npm install walk)

var walk = require('walk');

var files = [];

// Walker options

var walker = walk.walk('./test', { followLinks: false });

walker.on('file', function(root, stat, next) {

// Add this file to the list of files

files.push(root + '/' + stat.name);

next();

});

walker.on('end', function() {

console.log(files);

});

.git –

Fir Get files in all subdirs

const fs=require('fs');

function getFiles (dir, files_){

files_ = files_ || [];

var files = fs.readdirSync(dir);

for (var i in files){

var name = dir + '/' + files[i];

if (fs.statSync(name).isDirectory()){

getFiles(name, files_);

} else {

files_.push(name);

}

}

return files_;

}

console.log(getFiles('path/to/dir'))

if (typeof files_ === 'undefined') files_=[];? you only need to do var files_ = files_ || []; instead of files_ = files_ || [];. –

Gaven var fs = require('fs'); at the start of getFiles. –

Might Here's a simple solution using only the native fs and path modules:

// sync version

function walkSync(currentDirPath, callback) {

var fs = require('fs'),

path = require('path');

fs.readdirSync(currentDirPath).forEach(function (name) {

var filePath = path.join(currentDirPath, name);

var stat = fs.statSync(filePath);

if (stat.isFile()) {

callback(filePath, stat);

} else if (stat.isDirectory()) {

walkSync(filePath, callback);

}

});

}

or async version (uses fs.readdir instead):

// async version with basic error handling

function walk(currentDirPath, callback) {

var fs = require('fs'),

path = require('path');

fs.readdir(currentDirPath, function (err, files) {

if (err) {

throw err; // err is already an Error object

}

files.forEach(function (name) {

var filePath = path.join(currentDirPath, name);

var stat = fs.statSync(filePath);

if (stat.isFile()) {

callback(filePath, stat);

} else if (stat.isDirectory()) {

walk(filePath, callback);

}

});

});

}

Then you just call (for sync version):

walkSync('path/to/root/dir', function(filePath, stat) {

// do something with "filePath"...

});

or async version:

walk('path/to/root/dir', function(filePath, stat) {

// do something with "filePath"...

});

The difference is in how node blocks while performing the IO. Given that the API above is the same, you could just use the async version to ensure maximum performance.

However there is one advantage to using the synchronous version. It is easier to execute some code as soon as the walk is done, as in the next statement after the walk. With the async version, you would need some extra way of knowing when you are done. Perhaps creating a map of all paths first, then enumerating them. For simple build/util scripts (vs high performance web servers) you could use the sync version without causing any damage.
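One possible way to know when an asynchronous walk is done is to return a promise that settles only after every subdirectory has been read. The sketch below is not the walk function from this answer; `walkAsync` and the sample tree are hypothetical names introduced for illustration, and it assumes a Node version that has `fs.promises` and the `withFileTypes` option.

```javascript
const fs = require('fs');
const os = require('os');
const path = require('path');

// Recursively collect file paths; the returned promise settles once
// every subdirectory has been read.
async function walkAsync(dir) {
  const entries = await fs.promises.readdir(dir, { withFileTypes: true });
  const nested = await Promise.all(
    entries.map(entry => {
      const fullPath = path.join(dir, entry.name);
      if (entry.isDirectory()) return walkAsync(fullPath);
      return entry.isFile() ? [fullPath] : [];
    })
  );
  return nested.flat();
}

// Hypothetical sample tree so the sketch runs anywhere.
const root = fs.mkdtempSync(path.join(os.tmpdir(), 'walk-demo-'));
fs.mkdirSync(path.join(root, 'sub'));
fs.writeFileSync(path.join(root, 'top.txt'), '');
fs.writeFileSync(path.join(root, 'sub', 'inner.txt'), '');

const done = walkAsync(root).then(files => {
  // This runs only after the whole walk has finished.
  const relative = files.map(f => path.relative(root, f)).sort();
  console.log(relative);
  return relative;
});
```

Code that must run after the walk goes in the `then` callback (or after an `await`), which gives the "next statement after the walk" convenience without blocking.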

walkSync from walk(filePath, callback); to walkSync(filePath, callback); –

Gait Using Promises with ES7

Asynchronous use with mz/fs

The mz module provides promisified versions of the core node library. Using them is simple. First install the library...

npm install mz

Then...

const fs = require('mz/fs');

fs.readdir('./myDir').then(listing => console.log(listing))

.catch(err => console.error(err));

Alternatively you can write them in asynchronous functions in ES7:

async function myReaddir () {

try {

const file = await fs.readdir('./myDir/');

}

catch (err) { console.error( err ) }

};

Update for recursive listing

Some of the users have specified a desire to see a recursive listing (though not in the question)... Use fs-promise. It's a thin wrapper around mz.

npm install fs-promise

then...

const fs = require('fs-promise');

fs.walk('./myDir').then(

listing => listing.forEach(file => console.log(file.path))

).catch(err => console.error(err));

non-recursive version

You don't say you want to do it recursively so I assume you only need direct children of the directory.

Sample code:

const fs = require('fs');

const path = require('path');

const folder = 'your-directory-path';

const files = fs.readdirSync(folder)

.filter((file) => fs.lstatSync(path.join(folder, file)).isFile());

Dependencies.

var fs = require('fs');

var path = require('path');

Definition.

// String -> [String]

function fileList(dir) {

return fs.readdirSync(dir).reduce(function(list, file) {

var name = path.join(dir, file);

var isDir = fs.statSync(name).isDirectory();

return list.concat(isDir ? fileList(name) : [name]);

}, []);

}

Usage.

var DIR = '/usr/local/bin';

// 1. List all files in DIR

fileList(DIR);

// => ['/usr/local/bin/babel', '/usr/local/bin/bower', ...]

// 2. List all file names in DIR

fileList(DIR).map((file) => file.split(path.sep).slice(-1)[0]);

// => ['babel', 'bower', ...]

Please note that fileList is way too optimistic. For anything serious, add some error handling.
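As a sketch of what that error handling could look like, the variant below records failures in an array and skips the entries it cannot read instead of throwing. `safeFileList` and its `errors` parameter are hypothetical names for this example, not part of the answer above.

```javascript
const fs = require('fs');
const os = require('os');
const path = require('path');

// Like fileList, but entries that cannot be read are recorded and skipped.
function safeFileList(dir, errors = []) {
  let names;
  try {
    names = fs.readdirSync(dir);
  } catch (err) {
    errors.push(err); // e.g. missing directory or permission denied
    return [];
  }
  return names.reduce((list, file) => {
    const name = path.join(dir, file);
    try {
      const isDir = fs.statSync(name).isDirectory();
      return list.concat(isDir ? safeFileList(name, errors) : [name]);
    } catch (err) {
      errors.push(err); // e.g. broken symbolic link
      return list;
    }
  }, []);
}

// A directory that does not exist yields an empty list and one recorded error.
const errors = [];
const missing = path.join(os.tmpdir(), 'safe-file-list-missing-' + process.pid);
const result = safeFileList(missing, errors);
console.log(result, errors.length);
```

Whether to skip, log, or rethrow is a design choice; collecting errors keeps a single unreadable folder from aborting the whole listing.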

excludeDirs array argument also. It changes it enough so that maybe you should edit it instead (if you want it). Otherwise I'll add it in a different answer. gist.github.com/AlecTaylor/f3f221b4fb86b4375650 –

Calondra I'm assuming from your question that you don't want directory names, just files.

Directory Structure Example

animals
├── all.jpg
├── mammals
│   ├── cat.jpg
│   └── dog.jpg
└── insects
    └── bee.jpg

Walk function

Credits go to Justin Maier in this gist

This version returns just a flat array of the file paths:

const fs = require('fs').promises;

const path = require('path');

async function walk(dir) {

let files = await fs.readdir(dir);

files = await Promise.all(files.map(async file => {

const filePath = path.join(dir, file);

const stats = await fs.stat(filePath);

if (stats.isDirectory()) return walk(filePath);

else if(stats.isFile()) return filePath;

}));

return files.reduce((all, folderContents) => all.concat(folderContents), []);

}

Usage

async function main() {

console.log(await walk('animals'));

}

main();

Output

[

"animals/all.jpg",

"animals/mammals/cat.jpg",

"animals/mammals/dog.jpg",

"animals/insects/bee.jpg"

]

files.unshift(dir) before the last return of the async function. Anyway it'd be best if you could create a new question as it might help other people with the same need and receive better feedback. ;-) –

Gibbsite animals/mammals/name and i want to stop at mammal by providing some depth [ "/animals/all.jpg", "/animals/mammals/cat.jpg", "/animals/mammals/dog.jpg", "/animals/insects/bee.jpg" ]; –

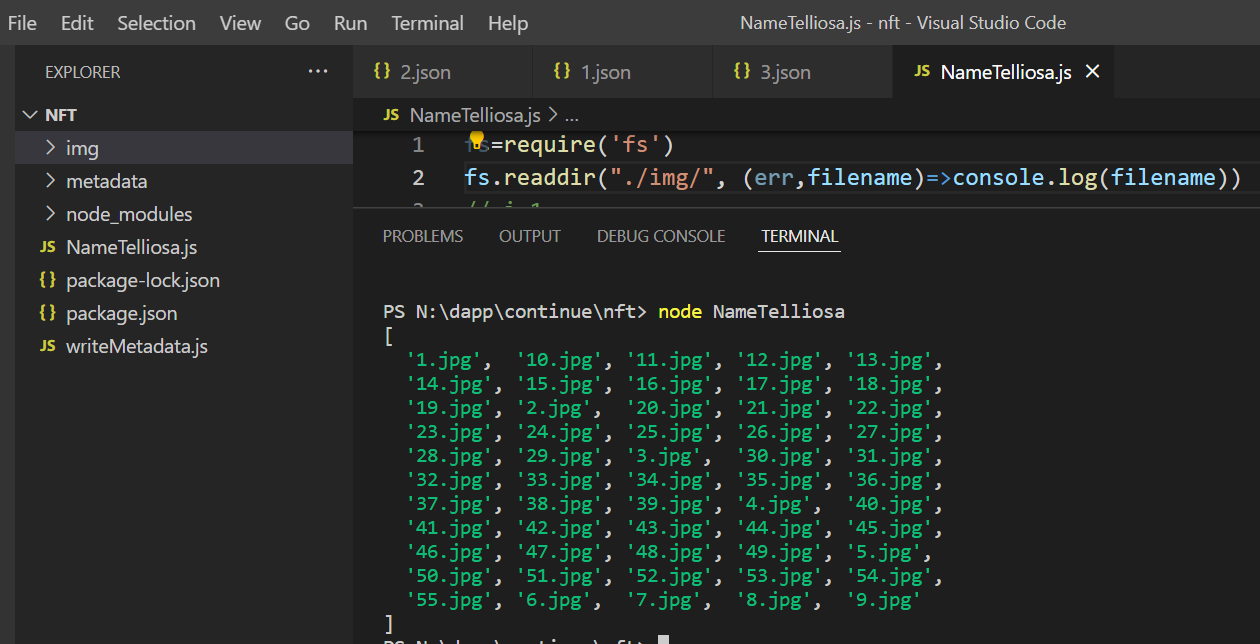

Dinerman its just 2 lines of code:

const fs = require('fs')

fs.readdir("./img/", (err,filename)=>console.log(filename))

If someone is still searching for this, I do it like this:

import fs from 'fs';

import path from 'path';

const getAllFiles = dir =>

fs.readdirSync(dir).reduce((files, file) => {

const name = path.join(dir, file);

const isDirectory = fs.statSync(name).isDirectory();

return isDirectory ? [...files, ...getAllFiles(name)] : [...files, name];

}, []);

and it works very well for me.

[...files, ...getAllFiles(name)] or [...files, name] works. A bit of explanation would be very helpful :) –

Telfer ... used here is called spread syntax. It takes all the elements of an array and 'spreads' them into the new array. In this case, all entries in the files array are added to the returned array, along with all the values returned from the recursive call. You can read about spread syntax here: developer.mozilla.org/en-US/docs/Web/JavaScript/Reference/… –
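A tiny standalone illustration of the two cases, with made-up file names standing in for what the recursive call would return:

```javascript
const files = ['a.txt', 'b.txt'];
const fromSubdirectory = ['sub/c.txt', 'sub/d.txt'];

// Directory case: existing entries plus everything the recursive call returned.
const merged = [...files, ...fromSubdirectory];

// File case: existing entries plus one new name.
const appended = [...files, 'e.txt'];

console.log(merged);
console.log(appended);
console.log(files); // the original array is left unchanged
```

Each spread builds a new array rather than mutating `files`, which is why it fits naturally inside `reduce`.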

Prosenchyma Load fs:

const fs = require('fs');

Read files async:

fs.readdir('./dir', function (err, files) {

// "files" is an Array with files names

});

Read files sync:

var files = fs.readdirSync('./dir');

My one liner code:

const fs = require("fs")

const path = 'somePath/'

const filesArray = fs.readdirSync(path).filter(file => fs.lstatSync(path+file).isFile())

Get sorted filenames. You can filter results based on a specific extension such as '.txt', '.jpg' and so on.

import * as fs from 'fs';

import * as Path from 'path';

function getFilenames(path, extension) {

return fs

.readdirSync(path)

.filter(

item =>

fs.statSync(Path.join(path, item)).isFile() &&

(extension === undefined || Path.extname(item) === extension)

)

.sort();

}

My 2 cents, if someone just wants to list file names (excluding directories) from a local sub-folder of their project:

- ✅ No additional dependencies

- ✅ 1 function

- ✅ Normalize path (Unix vs. Windows)

const fs = require("fs");

const path = require("path");

/**

* @param {string} relativeName "resources/foo/goo"

* @return {string[]}

*/

const listFileNames = (relativeName) => {

try {

const folderPath = path.join(process.cwd(), ...relativeName.split("/"));

return fs

.readdirSync(folderPath, { withFileTypes: true })

.filter((dirent) => dirent.isFile())

.map((dirent) => dirent.name.split(".")[0]);

} catch (err) {

// ...

}

};

README.md

package.json

resources

|-- countries

|-- usa.yaml

|-- japan.yaml

|-- gb.yaml

|-- provinces

|-- .........

listFileNames("resources/countries") #=> ["usa", "japan", "gb"]

path is the name of your imported require('path') but then you re-define const path inside the function... This is really confusing and might lead to bugs! –

Clevelandclevenger Try this, it works for me

import fs from "fs/promises";

const path = "path/to/folder";

export const readDir = async function readDir(path) {

const files = await fs.readdir(path);

// array of file names

console.log(files);

}

npm install needed and it works with esm import and async/await. For a full example, you should wrap it in a function. –

Necrosis This is a TypeScript, optionally recursive, optionally error logging and asynchronous solution. You can specify a regular expression for the file names you want to find.

I used fs-extra because it's an easy superset of fs.

import * as FsExtra from 'fs-extra'

import * as Path from 'path'

/**

* Finds files in the folder that match filePattern, optionally passing back errors.

* If folderDepth isn't specified, only the first level is searched. Otherwise anything up

* to Infinity is supported.

*

* @static

* @param {string} folder The folder to start in.

* @param {string} [filePattern='.*'] A regular expression of the files you want to find.

* @param {(Error[] | undefined)} [errors=undefined]

* @param {number} [folderDepth=0]

* @returns {Promise<string[]>}

* @memberof FileHelper

*/

public static async findFiles(

folder: string,

filePattern: string = '.*',

errors: Error[] | undefined = undefined,

folderDepth: number = 0

): Promise<string[]> {

const results: string[] = []

// Get all files from the folder

let items = await FsExtra.readdir(folder).catch(error => {

if (errors) {

errors.push(error) // Save errors if we wish (e.g. folder perms issues)

}

return results

})

// Go through to the required depth and no further

folderDepth = folderDepth - 1

// Loop through the results, possibly recurse

for (const item of items) {

try {

const fullPath = Path.join(folder, item)

if (

FsExtra.statSync(fullPath).isDirectory() &&

folderDepth > -1

) {

// Its a folder, recursively get the child folders' files

results.push(

...(await FileHelper.findFiles(fullPath, filePattern, errors, folderDepth))

)

} else {

// Filter by the file name pattern, if there is one

if (filePattern === '.*' || item.search(new RegExp(filePattern, 'i')) > -1) {

results.push(fullPath)

}

}

} catch (error) {

if (errors) {

errors.push(error) // Save errors if we wish

}

}

}

return results

}

Using flatMap:

function getFiles(dir) {

return fs.readdirSync(dir).flatMap((item) => {

const path = `${dir}/${item}`;

if (fs.statSync(path).isDirectory()) {

return getFiles(path);

}

return path;

});

}

Given the following directory:

dist

├── 404.html

├── app-AHOLRMYQ.js

├── img

│ ├── demo.gif

│ └── start.png

├── index.html

└── sw.js

Usage:

getFiles("dist")

Output:

[

'dist/404.html',

'dist/app-AHOLRMYQ.js',

'dist/img/demo.gif',

'dist/img/start.png',

'dist/index.html',

'dist/sw.js'

]

Out of the box

In case you want an object with the directory structure out of the box, I highly recommend checking out directory-tree.

Let's say you have this structure:

photos
├── june
│   └── windsurf.jpg
└── january
    ├── ski.png
    └── snowboard.jpg

const dirTree = require("directory-tree");

const tree = dirTree("/path/to/photos");

Will return:

{

path: "photos",

name: "photos",

size: 600,

type: "directory",

children: [

{

path: "photos/june",

name: "june",

size: 400,

type: "directory",

children: [

{

path: "photos/june/windsurf.jpg",

name: "windsurf.jpg",

size: 400,

type: "file",

extension: ".jpg"

}

]

},

{

path: "photos/january",

name: "january",

size: 200,

type: "directory",

children: [

{

path: "photos/january/ski.png",

name: "ski.png",

size: 100,

type: "file",

extension: ".png"

},

{

path: "photos/january/snowboard.jpg",

name: "snowboard.jpg",

size: 100,

type: "file",

extension: ".jpg"

}

]

}

]

}

Custom Object

Otherwise, if you want to create a directory tree object with your custom settings, have a look at the following snippet. A live example is visible on this codesandbox.

// my-script.js

const fs = require("fs");

const path = require("path");

const isDirectory = filePath => fs.statSync(filePath).isDirectory();

const isFile = filePath => fs.statSync(filePath).isFile();

const getDirectoryDetails = filePath => {

const dirs = fs.readdirSync(filePath);

return {

dirs: dirs.filter(name => isDirectory(path.join(filePath, name))),

files: dirs.filter(name => isFile(path.join(filePath, name)))

};

};

const getFilesRecursively = (parentPath, currentFolder) => {

const currentFolderPath = path.join(parentPath, currentFolder);

let currentDirectoryDetails = getDirectoryDetails(currentFolderPath);

const final = {

current_dir: currentFolder,

dirs: currentDirectoryDetails.dirs.map(dir =>

getFilesRecursively(currentFolderPath, dir)

),

files: currentDirectoryDetails.files

};

return final;

};

const getAllFiles = relativePath => {

const fullPath = path.join(__dirname, relativePath);

const parentDirectoryPath = path.dirname(fullPath);

const leafDirectory = path.basename(fullPath);

const allFiles = getFilesRecursively(parentDirectoryPath, leafDirectory);

return allFiles;

};

module.exports = { getAllFiles };

Then you can simply do:

// another-file.js

const { getAllFiles } = require("path/to/my-script");

const allFiles = getAllFiles("/path/to/my-directory");

Here's an asynchronous recursive version.

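var fs = require('fs')

// The function needs a name to be a valid declaration; "listFiles" is an arbitrary choice.

function listFiles ( path, callback){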

// the callback gets ( err, files) where files is an array of file names

if( typeof callback !== 'function' ) return

var

result = []

, files = [ path.replace( /\/\s*$/, '' ) ]

function traverseFiles (){

if( files.length ) {

var name = files.shift()

fs.stat(name, function( err, stats){

if( err ){

if( err.code === 'ENOENT' ) traverseFiles()

// in case there's broken symbolic links or a bad path

// skip file instead of sending error

else callback(err)

}

else if ( stats.isDirectory() ) fs.readdir( name, function( err, files2 ){

if( err ) callback(err)

else {

files = files2

.map( function( file ){ return name + '/' + file } )

.concat( files )

traverseFiles()

}

})

else{

result.push(name)

traverseFiles()

}

})

}

else callback( null, result )

}

traverseFiles()

}

Took the general approach of @Hunan-Rostomyan, made it a little more concise and added an excludeDirs argument. It'd be trivial to extend with includeDirs, just follow the same pattern:

import * as fs from 'fs';

import * as path from 'path';

function fileList(dir, excludeDirs?) {

return fs.readdirSync(dir).reduce(function (list, file) {

const name = path.join(dir, file);

if (fs.statSync(name).isDirectory()) {

if (excludeDirs && excludeDirs.length) {

excludeDirs = excludeDirs.map(d => path.normalize(d));

const idx = name.indexOf(path.sep);

const directory = name.slice(0, idx === -1 ? name.length : idx);

if (excludeDirs.indexOf(directory) !== -1)

return list;

}

return list.concat(fileList(name, excludeDirs));

}

return list.concat([name]);

}, []);

}

Example usage:

console.log(fileList('.', ['node_modules', 'typings', 'bower_components']));

I usually use: FS-Extra.

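const Fse = require('fs-extra');

// readdir without a callback returns a Promise, so use the sync variant to get the array directly

const fileNameArray = Fse.readdirSync('/some/path');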

Result:

[

"b7c8a93c-45b3-4de8-b9b5-a0bf28fb986e.jpg",

"daeb1c5b-809f-4434-8fd9-410140789933.jpg"

]

This writes the file names to test.txt, which is created in the current working directory:

fs.readdirSync(__dirname).forEach(file => {

// appendFileSync is synchronous and takes no callback

fs.appendFileSync("test.txt", file + "\n");

})

I've recently built a tool for this that does just this... It fetches a directory asynchronously and returns a list of items. You can either get directories, files or both, with folders being first. You can also paginate the data in case where you don't want to fetch the entire folder.

https://www.npmjs.com/package/fs-browser

This is the link, hope it helps someone!

No npm install. This works for the current folder where you launch the terminal, but you can change process.cwd() to another folder. Enjoy!

const fs=require('fs');

fs.readdirSync(process.cwd());

The modern (Node 21) version of the current top listed answer using the promise-based fs API is:

import { readdir } from 'node:fs/promises'

const testFolder = "./tests/"

for (const file of await readdir(testFolder)) {

console.log(file);

}
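On recent Node versions the built-in `readdir` functions also accept a `recursive` option (as far as I know it was added in v18.17.0 and v20.1.0), which may remove the need for a hand-written walk. A minimal sketch, assuming such a Node version; the temporary sample tree is made up so the example runs anywhere.

```javascript
const fs = require('fs');
const os = require('os');
const path = require('path');

// Hypothetical sample tree so the sketch runs anywhere.
const root = fs.mkdtempSync(path.join(os.tmpdir(), 'recursive-demo-'));
fs.mkdirSync(path.join(root, 'sub'));
fs.writeFileSync(path.join(root, 'top.txt'), '');
fs.writeFileSync(path.join(root, 'sub', 'inner.txt'), '');

// With recursive: true, readdirSync returns paths relative to root,
// including the subdirectories themselves, so those are filtered out.
const allFiles = fs
  .readdirSync(root, { recursive: true })
  .filter(rel => fs.statSync(path.join(root, rel)).isFile())
  .sort();

console.log(allFiles);
```

On older Node versions the option is not available, so one of the manual walks from the other answers is still needed there.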

I made a node module to automate this task: mddir

Usage

node mddir "../relative/path/"

To install: npm install mddir -g

To generate markdown for current directory: mddir

To generate for any absolute path: mddir /absolute/path

To generate for a relative path: mddir ~/Documents/whatever.

The md file gets generated in your working directory.

Currently ignores node_modules, and .git folders.

Troubleshooting

If you receive the error 'node\r: No such file or directory', the issue is that your operating system uses different line endings and mddir can't parse them without you explicitly setting the line ending style to Unix. This usually affects Windows, but also some versions of Linux. Setting line endings to Unix style has to be performed within the mddir npm global bin folder.

Line endings fix

Get npm bin folder path with:

npm config get prefix

Cd into that folder

brew install dos2unix

dos2unix lib/node_modules/mddir/src/mddir.js

This converts line endings to Unix instead of Dos

Then run as normal with: node mddir "../relative/path/".

Example generated markdown file structure 'directoryList.md'

|-- .bowerrc

|-- .jshintrc

|-- .jshintrc2

|-- Gruntfile.js

|-- README.md

|-- bower.json

|-- karma.conf.js

|-- package.json

|-- app

|-- app.js

|-- db.js

|-- directoryList.md

|-- index.html

|-- mddir.js

|-- routing.js

|-- server.js

|-- _api

|-- api.groups.js

|-- api.posts.js

|-- api.users.js

|-- api.widgets.js

|-- _components

|-- directives

|-- directives.module.js

|-- vendor

|-- directive.draganddrop.js

|-- helpers

|-- helpers.module.js

|-- proprietary

|-- factory.actionDispatcher.js

|-- services

|-- services.cardTemplates.js

|-- services.cards.js

|-- services.groups.js

|-- services.posts.js

|-- services.users.js

|-- services.widgets.js

|-- _mocks

|-- mocks.groups.js

|-- mocks.posts.js

|-- mocks.users.js

|-- mocks.widgets.js

Use npm list-contents module. It reads the contents and sub-contents of the given directory and returns the list of files' and folders' paths.

const list = require('list-contents');

list("./dist",(o)=>{

if(o.error) throw o.error;

console.log('Folders: ', o.dirs);

console.log('Files: ', o.files);

});

If many of the above options seem too complex or not what you are looking for here is another approach using node-dir - https://github.com/fshost/node-dir

npm install node-dir

Here is a simple function that lists all .xml files, searching in subdirectories:

import * as nDir from 'node-dir';

import * as path from 'path';

function listXMLs(rootFolderPath) {

let xmlFiles ;

nDir.files(rootFolderPath, function(err, items) {

xmlFiles = items.filter(i => {

return path.extname(i) === '.xml' ;

}) ;

console.log(xmlFiles) ;

});

}

const fs = require('fs');

const path = require('path');

function readFile(filePath) {

return new Promise((resolve, reject) => {

fs.readFile(filePath, 'utf8', (err, data) => {

if (err) {

reject(err);

} else {

resolve(data);

}

});

});

}

function readFolderFiles(folderPath) {

return new Promise((resolve, reject) => {

fs.readdir(folderPath, { withFileTypes: true }, (err, files) => {

if (err) {

reject(err);

} else {

// skip subdirectories, which readFile cannot read

const filePromises = files.filter((file) => file.isFile()).map((file) => {

const filePath = path.join(folderPath, file.name);

return readFile(filePath);

});

Promise.all(filePromises)

.then((fileContents) => {

resolve(fileContents);

})

.catch((err) => {

reject(err);

});

}

});

});

}

// Usage example

const folderPath = './s3';

readFolderFiles(folderPath)

.then((fileContents) => {

fileContents.forEach((content, index) => {

console.log(`File ${index + 1}:`);

console.log(content);

console.log('------------------');

});

})

.catch((err) => {

console.error('Error:', err);

});

function getFilesRecursiveSync(dir, fileList, optionalFilterFunction) {

if (!fileList) {

grunt.log.error("Variable 'fileList' is undefined or NULL.");

return;

}

var files = fs.readdirSync(dir);

for (var i in files) {

if (!files.hasOwnProperty(i)) continue;

var name = dir + '/' + files[i];

if (fs.statSync(name).isDirectory()) {

getFilesRecursiveSync(name, fileList, optionalFilterFunction);

} else {

if (optionalFilterFunction && optionalFilterFunction(name) !== true)

continue;

fileList.push(name);

}

}

}


fs.readdir works, but cannot use file name glob patterns like ls /tmp/*core*. Check out github.com/isaacs/node-glob. Globs can even search in sub-directories. – Koala

readdir-recursive module though if you're looking for the names of files in subdirectories also – Oblivion