Bash tools are always nice to use, but for this purpose it seems more efficient to use a dedicated tool. I tried some of the main ones as of 2022: cloc (Perl), gocloc (Go), and pygount (Python).

The results varied, and I did not tweak the options much.

gocloc seems to be both the most accurate and by far the fastest.

Example on a small Laravel project with a Vue frontend:

gocloc

    $ ~/go/bin/gocloc /home/jmeyo/project/sequasa
    -------------------------------------------------------------------------------
    Language                     files          blank        comment           code
    -------------------------------------------------------------------------------
    JSON                             5              0              0          16800
    Vue                             96           1181            137           8993
    JavaScript                      37            999            454           7604
    PHP                            228           1493           2622           7290
    CSS                              2            157             44            877
    Sass                             5             72            426            466
    XML                             11              0              2            362
    Markdown                         2             45              0            111
    YAML                             1              0              0             13
    Plain Text                       1              0              0              2
    -------------------------------------------------------------------------------
    TOTAL                          388           3947           3685          42518
    -------------------------------------------------------------------------------

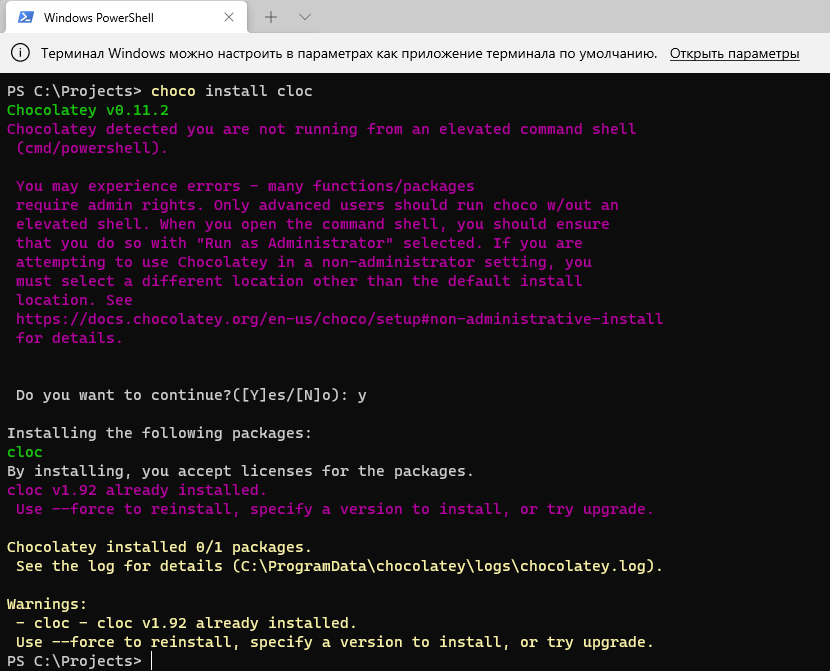

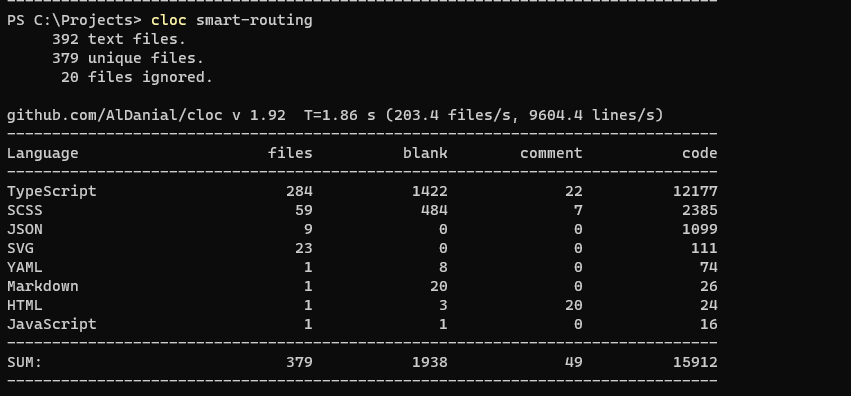

cloc

    $ cloc /home/jmeyo/project/sequasa
         450 text files.
         433 unique files.
          40 files ignored.

    github.com/AlDanial/cloc v 1.90  T=0.24 s (1709.7 files/s, 211837.9 lines/s)
    -------------------------------------------------------------------------------
    Language                     files          blank        comment           code
    -------------------------------------------------------------------------------
    JSON                             5              0              0          16800
    Vuejs Component                 95           1181            370           8760
    JavaScript                      37            999            371           7687
    PHP                            180           1313           2600           5321
    Blade                           48            180            187           1804
    SVG                             27              0              0           1273
    CSS                              2            157             44            877
    XML                             12              0              2            522
    Sass                             5             72            418            474
    Markdown                         2             45              0            111
    YAML                             4             11             37             53
    -------------------------------------------------------------------------------
    SUM:                           417           3958           4029          43682
    -------------------------------------------------------------------------------

pygount

    $ pygount --format=summary /home/jmeyo/project/sequasa
    ┏━━━━━━━━━━━━━━━┳━━━━━━━┳━━━━━━━┳━━━━━━━┳━━━━━━━┳━━━━━━━━━┳━━━━━━┓
    ┃ Language      ┃ Files ┃     % ┃  Code ┃     % ┃ Comment ┃    % ┃
    ┡━━━━━━━━━━━━━━━╇━━━━━━━╇━━━━━━━╇━━━━━━━╇━━━━━━━╇━━━━━━━━━╇━━━━━━┩
    │ JSON          │     5 │   1.0 │ 12760 │  76.0 │       0 │  0.0 │
    │ PHP           │   182 │  37.1 │  4052 │  43.8 │    1288 │ 13.9 │
    │ JavaScript    │    37 │   7.5 │  3654 │  40.4 │     377 │  4.2 │
    │ XML+PHP       │    43 │   8.8 │  1696 │  89.6 │      39 │  2.1 │
    │ CSS+Lasso     │     2 │   0.4 │   702 │  65.2 │      44 │  4.1 │
    │ SCSS          │     5 │   1.0 │   368 │  38.2 │     419 │ 43.5 │
    │ HTML+PHP      │     2 │   0.4 │   171 │  85.5 │       0 │  0.0 │
    │ Markdown      │     2 │   0.4 │    86 │  55.1 │       4 │  2.6 │
    │ XML           │     1 │   0.2 │    29 │  93.5 │       2 │  6.5 │
    │ Text only     │     1 │   0.2 │     2 │ 100.0 │       0 │  0.0 │
    │ __unknown__   │   132 │  26.9 │     0 │   0.0 │       0 │  0.0 │
    │ __empty__     │     6 │   1.2 │     0 │   0.0 │       0 │  0.0 │
    │ __duplicate__ │     6 │   1.2 │     0 │   0.0 │       0 │  0.0 │
    │ __binary__    │    67 │  13.6 │     0 │   0.0 │       0 │  0.0 │
    ├───────────────┼───────┼───────┼───────┼───────┼─────────┼──────┤
    │ Sum           │   491 │ 100.0 │ 23520 │  59.7 │    2173 │  5.5 │
    └───────────────┴───────┴───────┴───────┴───────┴─────────┴──────┘

Results are mixed. The gocloc output seems closest to reality, and gocloc is also by far the fastest:

- cloc: 0m0.430s

- gocloc: 0m0.059s

- pygount: 0m39.980s
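
To reproduce a comparison like this on your own project, something along these lines should work. It is only a sketch, assuming bash and that the three tools are on your `PATH`; `time_tool` and `PROJECT_DIR` are names made up for this example, and the commands are the same invocations shown above. Tools that are not installed are skipped.

```shell
#!/usr/bin/env bash
# Sketch: time each line counter on the same directory.
# PROJECT_DIR is a placeholder; pass your own path as the first argument.
PROJECT_DIR="${1:-.}"

# time_tool is a hypothetical helper, not part of any of the tools.
time_tool() {
    # $1 is the executable name, "$@" is the full command line.
    if ! command -v "$1" >/dev/null 2>&1; then
        echo "$1: not installed, skipping"
        return 0
    fi
    # TIMEFORMAT tells bash's `time` keyword to print only the
    # elapsed wall-clock seconds.
    local TIMEFORMAT='%Rs'
    local elapsed
    # The tool's own output is discarded; only the timing is captured.
    elapsed=$( { time "$@" >/dev/null 2>&1; } 2>&1 )
    echo "$1: $elapsed"
}

time_tool cloc "$PROJECT_DIR"
time_tool gocloc "$PROJECT_DIR"
time_tool pygount --format=summary "$PROJECT_DIR"
```

A single run is noisy (disk cache, other processes), so it is worth running it a few times before drawing conclusions from small differences.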