SQL log

If you are interested only in the statements that are executed, then you can simply activate the PostgreSQL statement log.

For that, open the postgresql.conf file and set the following configuration property:

log_statement = 'all'

Afterward, provided the logging collector is enabled, the SQL statements are logged to a file whose location depends on the log_directory and log_filename settings, for example:

$PG_DATA/pg_log/postgresql-YYYY-MM-DD_HHMMSS.log
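If editing postgresql.conf by hand is inconvenient, the same setting can also be applied from a superuser SQL session. The sketch below relies on ALTER SYSTEM and pg_reload_conf(), both standard PostgreSQL features, and then asks the server where it actually writes its log files, since the directory and the file name pattern are configurable and may differ from the path shown above:

```sql
-- Persist the setting in postgresql.auto.conf (requires superuser privileges).
ALTER SYSTEM SET log_statement = 'all';

-- log_statement does not need a server restart; reloading the configuration is enough.
SELECT pg_reload_conf();

-- Confirm the new value and check where the log files end up.
SHOW log_statement;
SHOW logging_collector;  -- must be 'on' for the server to write its own log files
SHOW log_directory;      -- a relative path is resolved against the data directory
SHOW log_filename;
```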

However, if you want to record the row-level changes, then you need an audit logging mechanism, which can be implemented using triggers, as follows.

Database tables

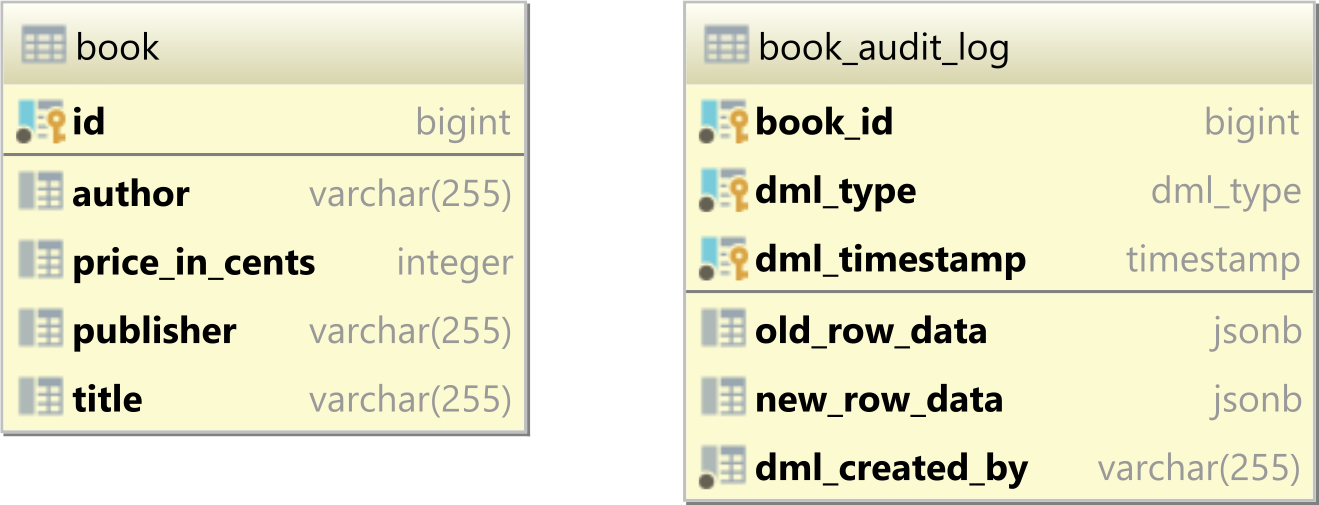

Let's consider we have the following database tables:

![The book and book_audit_log tables]()

The book_audit_log is going to store all the changes that happen in the book table.
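The definition of the book table itself is not listed in this article. A minimal sketch that is consistent with the INSERT statement used in the testing section could look like the following. The column names come from that statement, while the types and lengths are assumptions made for illustration:

```sql
-- Hypothetical definition: column names are taken from the INSERT statement in
-- the testing section; the types and lengths are assumed, not the original DDL.
CREATE TABLE IF NOT EXISTS book (
    id bigint NOT NULL,
    author varchar(255),
    price_in_cents integer,
    publisher varchar(255),
    title varchar(255),
    PRIMARY KEY (id)
);
```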

The book_audit_log is created like this:

CREATE TABLE IF NOT EXISTS book_audit_log (
    book_id bigint NOT NULL,
    old_row_data jsonb,
    new_row_data jsonb,
    dml_type dml_type NOT NULL,
    dml_timestamp timestamp NOT NULL,
    dml_created_by varchar(255) NOT NULL,
    PRIMARY KEY (book_id, dml_type, dml_timestamp)
);

The book_id column stores the identifier of the associated book table record that was inserted, updated, or deleted by the currently executing DML statement.

The old_row_data and new_row_data columns are of the JSONB type, and they will capture the state of the book row before and after the execution of the current INSERT, UPDATE, or DELETE statement.

The dml_type column stores the type of the currently executing DML statement (i.e., INSERT, UPDATE, or DELETE). The dml_type type is a PostgreSQL enumeration type, which must exist before the book_audit_log table is created and was defined like this:

CREATE TYPE dml_type AS ENUM ('INSERT', 'UPDATE', 'DELETE')

The dml_timestamp column stores the current timestamp.

The dml_created_by column stores the application user who generated the current INSERT, UPDATE, or DELETE DML statement.
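One detail worth keeping in mind about dml_timestamp: the trigger function in the next section fills it with CURRENT_TIMESTAMP, which in PostgreSQL returns the start time of the current transaction rather than the wall-clock time of each statement. The following sketch is only an illustration, not part of the audit log setup, and contrasts it with clock_timestamp():

```sql
BEGIN;

-- Both values are close to each other right after the transaction starts.
SELECT CURRENT_TIMESTAMP AS tx_time, clock_timestamp() AS wall_time;

SELECT pg_sleep(1);

-- tx_time is unchanged, while wall_time has advanced by about one second.
SELECT CURRENT_TIMESTAMP AS tx_time, clock_timestamp() AS wall_time;

COMMIT;
```

Because dml_timestamp is part of the primary key, two changes of the same type to the same book row within one transaction would produce the same key. Whether that matters depends on how the application batches its writes.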

PostgreSQL audit logging triggers

To capture the INSERT, UPDATE, and DELETE DML statements on the book table, we need to create a trigger function that looks as follows:

CREATE OR REPLACE FUNCTION book_audit_trigger_func()
RETURNS trigger AS $body$
BEGIN
    -- TG_OP holds the name of the operation that fired the trigger, in uppercase
    IF (TG_OP = 'INSERT') THEN
        -- An inserted row has no previous state, so old_row_data is NULL
        INSERT INTO book_audit_log (
            book_id,
            old_row_data,
            new_row_data,
            dml_type,
            dml_timestamp,
            dml_created_by
        )
        VALUES (
            NEW.id,
            NULL,
            to_jsonb(NEW),
            'INSERT',
            CURRENT_TIMESTAMP,
            current_setting('var.logged_user')
        );

        RETURN NEW;
    ELSIF (TG_OP = 'UPDATE') THEN
        -- An updated row has both a previous (OLD) and a current (NEW) state
        INSERT INTO book_audit_log (
            book_id,
            old_row_data,
            new_row_data,
            dml_type,
            dml_timestamp,
            dml_created_by
        )
        VALUES (
            NEW.id,
            to_jsonb(OLD),
            to_jsonb(NEW),
            'UPDATE',
            CURRENT_TIMESTAMP,
            current_setting('var.logged_user')
        );

        RETURN NEW;
    ELSIF (TG_OP = 'DELETE') THEN
        -- A deleted row has no new state, so new_row_data is NULL
        INSERT INTO book_audit_log (
            book_id,
            old_row_data,
            new_row_data,
            dml_type,
            dml_timestamp,
            dml_created_by
        )
        VALUES (
            OLD.id,
            to_jsonb(OLD),
            NULL,
            'DELETE',
            CURRENT_TIMESTAMP,
            current_setting('var.logged_user')
        );

        RETURN OLD;
    END IF;
END;
$body$
LANGUAGE plpgsql;

In order for the book_audit_trigger_func function to be executed after a book table record is inserted, updated or deleted, we have to define the following trigger:

CREATE TRIGGER book_audit_trigger
AFTER INSERT OR UPDATE OR DELETE ON book
FOR EACH ROW EXECUTE FUNCTION book_audit_trigger_func();
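Note that the EXECUTE FUNCTION syntax is available starting with PostgreSQL 11; older versions use EXECUTE PROCEDURE instead. To check that the trigger was registered as intended, one option is to query the standard information_schema.triggers view, as in this short sketch:

```sql
-- Lists one row per triggering event (INSERT, UPDATE, DELETE) on the book table.
SELECT
    trigger_name,
    event_manipulation,
    action_timing,
    action_orientation
FROM information_schema.triggers
WHERE event_object_table = 'book'
ORDER BY event_manipulation;
```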

The dml_created_by column is set to the value of the var.logged_user PostgreSQL session variable, which the application sets to the currently logged-in user before executing the DML statements, like this:

SET LOCAL var.logged_user = 'Vlad Mihalcea'
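SET LOCAL only lasts until the end of the current transaction, so the variable has to be set in the same transaction as the statements being audited. The sketch below shows that pattern, along with the set_config() function form, which is convenient when the user name is passed as a bind parameter. The two-argument current_setting() call is included only to illustrate how to read the variable without raising an error when it was never set; the trigger function above uses the one-argument form, which raises an error in that case:

```sql
BEGIN;

-- Scoped to the current transaction only.
SET LOCAL var.logged_user = 'Vlad Mihalcea';

-- Function form of the same operation; the third argument (is_local = true)
-- gives it the same transaction scope as SET LOCAL.
SELECT set_config('var.logged_user', 'Vlad Mihalcea', true);

-- With missing_ok = true, this returns NULL instead of failing
-- when the variable has never been set in this session.
SELECT current_setting('var.logged_user', true);

-- The audited INSERT, UPDATE, or DELETE statements go here.

COMMIT;
```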

Testing time

When executing an INSERT statement on the book table:

INSERT INTO book (
    id,
    author,
    price_in_cents,
    publisher,
    title
)
VALUES (
    1,
    'Vlad Mihalcea',
    3990,
    'Amazon',
    'High-Performance Java Persistence 1st edition'
);

We can see that a record is inserted in the book_audit_log that captures the INSERT statement that was just executed on the book table:

| book_id | old_row_data | new_row_data | dml_type | dml_timestamp | dml_created_by |
|---------|--------------|--------------|----------|---------------|----------------|
| 1 | | {"id": 1, "title": "High-Performance Java Persistence 1st edition", "author": "Vlad Mihalcea", "publisher": "Amazon", "price_in_cents": 3990} | INSERT | 2020-08-25 13:19:57.073026 | Vlad Mihalcea |

When updating the book table row:

UPDATE book
SET price_in_cents = 4499
WHERE id = 1;

We can see that a new record is added to the book_audit_log by the book_audit_trigger:

| book_id | old_row_data | new_row_data | dml_type | dml_timestamp | dml_created_by |
|---------|--------------|--------------|----------|---------------|----------------|
| 1 | | {"id": 1, "title": "High-Performance Java Persistence 1st edition", "author": "Vlad Mihalcea", "publisher": "Amazon", "price_in_cents": 3990} | INSERT | 2020-08-25 13:19:57.073026 | Vlad Mihalcea |
| 1 | {"id": 1, "title": "High-Performance Java Persistence 1st edition", "author": "Vlad Mihalcea", "publisher": "Amazon", "price_in_cents": 3990} | {"id": 1, "title": "High-Performance Java Persistence 1st edition", "author": "Vlad Mihalcea", "publisher": "Amazon", "price_in_cents": 4499} | UPDATE | 2020-08-25 13:21:15.006365 | Vlad Mihalcea |

When deleting the book table row:

DELETE FROM book
WHERE id = 1;

A new record is added to the book_audit_log by the book_audit_trigger:

| book_id | old_row_data | new_row_data | dml_type | dml_timestamp | dml_created_by |
|---------|--------------|--------------|----------|---------------|----------------|
| 1 | | {"id": 1, "title": "High-Performance Java Persistence 1st edition", "author": "Vlad Mihalcea", "publisher": "Amazon", "price_in_cents": 3990} | INSERT | 2020-08-25 13:19:57.073026 | Vlad Mihalcea |
| 1 | {"id": 1, "title": "High-Performance Java Persistence 1st edition", "author": "Vlad Mihalcea", "publisher": "Amazon", "price_in_cents": 3990} | {"id": 1, "title": "High-Performance Java Persistence 1st edition", "author": "Vlad Mihalcea", "publisher": "Amazon", "price_in_cents": 4499} | UPDATE | 2020-08-25 13:21:15.006365 | Vlad Mihalcea |
| 1 | {"id": 1, "title": "High-Performance Java Persistence 1st edition", "author": "Vlad Mihalcea", "publisher": "Amazon", "price_in_cents": 4499} | | DELETE | 2020-08-25 13:21:58.499881 | Vlad Mihalcea |
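Since the old and new row states are stored as JSONB, the audit log can be queried with the regular JSONB operators. As a sketch of one possible use, the following query lists the price changes recorded for the book table. The column aliases are illustrative, and the cast to int assumes that price_in_cents always holds an integer value:

```sql
SELECT
    book_id,
    dml_timestamp,
    dml_created_by,
    -- ->> extracts the JSON field as text, which is then cast to an integer
    (old_row_data ->> 'price_in_cents')::int AS old_price_in_cents,
    (new_row_data ->> 'price_in_cents')::int AS new_price_in_cents
FROM book_audit_log
WHERE dml_type = 'UPDATE'
  -- keep only the updates that actually changed the price
  AND old_row_data -> 'price_in_cents' IS DISTINCT FROM new_row_data -> 'price_in_cents'
ORDER BY dml_timestamp;
```

Against the three audit records shown above, this should return only the UPDATE entry, with the price going from 3990 to 4499.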

TG_OP = 'DELETE' etc. have to use uppercase 'DELETE' etc. I had lowercased everything and was wondering what was wrong. – Wayward