I'm using the VADER SentimentAnalyzer to obtain polarity scores. I previously used the scores for positive/negative/neutral, but I just realized that the "compound" score, ranging from -1 (most negative) to 1 (most positive), would provide a single measure of polarity. How is the "compound" score computed? Is it calculated from the [pos, neu, neg] vector?

The VADER algorithm outputs scores for four classes of sentiment (https://github.com/nltk/nltk/blob/develop/nltk/sentiment/vader.py#L441):

- neg: Negative
- neu: Neutral
- pos: Positive
- compound: Compound (i.e. aggregated score)

Let's walk through the code. The first instance of compound is at https://github.com/nltk/nltk/blob/develop/nltk/sentiment/vader.py#L421, where it computes:

compound = normalize(sum_s)

The normalize() function is defined as follows at https://github.com/nltk/nltk/blob/develop/nltk/sentiment/vader.py#L107:

    def normalize(score, alpha=15):
        """
        Normalize the score to be between -1 and 1 using an alpha that
        approximates the max expected value
        """
        norm_score = score/math.sqrt((score*score) + alpha)
        return norm_score

So there's a hyper-parameter alpha.
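To get a feel for what this normalization does, here is a minimal sketch that applies the same formula to a handful of raw sums. The input values are made up for illustration; only the formula and the default alpha=15 come from the snippet above.

```python
import math

def normalize(score, alpha=15):
    """Map an unbounded score towards (-1, 1); same formula as the snippet above."""
    return score / math.sqrt(score * score + alpha)

# Hypothetical raw sums, chosen only to show the shape of the curve.
for raw in (-20, -4, -1, 0, 1, 4, 20):
    print(f"sum_s={raw:>4}  compound={normalize(raw):+.4f}")
```

For any positive alpha the denominator is larger than the absolute value of the score, so the result stays between -1 and 1: a sum of 0 maps to 0, and larger sums approach the bounds without reaching them.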

As for sum_s, it is the sum of the sentiment values passed as an argument to the score_valence() function https://github.com/nltk/nltk/blob/develop/nltk/sentiment/vader.py#L413

And if we trace back this sentiment argument, we see that it's computed when calling the polarity_scores() function at https://github.com/nltk/nltk/blob/develop/nltk/sentiment/vader.py#L217:

    def polarity_scores(self, text):
        """
        Return a float for sentiment strength based on the input text.
        Positive values are positive valence, negative value are negative
        valence.
        """
        sentitext = SentiText(text)
        #text, words_and_emoticons, is_cap_diff = self.preprocess(text)

        sentiments = []
        words_and_emoticons = sentitext.words_and_emoticons
        for item in words_and_emoticons:
            valence = 0
            i = words_and_emoticons.index(item)
            if (i < len(words_and_emoticons) - 1 and item.lower() == "kind" and \
                words_and_emoticons[i+1].lower() == "of") or \
                item.lower() in BOOSTER_DICT:
                sentiments.append(valence)
                continue

            sentiments = self.sentiment_valence(valence, sentitext, item, i, sentiments)

        sentiments = self._but_check(words_and_emoticons, sentiments)

Looking at the polarity_scores function, it iterates over the tokens (words and emoticons) of the SentiText and uses the rule-based sentiment_valence() function to assign a valence score to each one https://github.com/nltk/nltk/blob/develop/nltk/sentiment/vader.py#L243; see Section 2.1.1 of http://comp.social.gatech.edu/papers/icwsm14.vader.hutto.pdf
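The shape of that loop can be sketched with a toy stand-in. This is not the NLTK implementation: TOY_LEXICON, TOY_BOOSTERS and toy_valences are hypothetical names, the lexicon values are illustrative, and the real sentiment_valence() applies further rules (capitalization, preceding boosters, negation) that are left out here.

```python
# Hypothetical mini-lexicon and booster set, for illustration only;
# the real lexicon and BOOSTER_DICT are far larger.
TOY_LEXICON = {"good": 1.9, "great": 3.1, "bad": -2.5}
TOY_BOOSTERS = {"very", "extremely"}

def toy_valences(tokens):
    """Return one valence per token, mirroring the structure of the loop above."""
    sentiments = []
    for token in tokens:
        word = token.lower()
        if word in TOY_BOOSTERS:
            # Boosters get a valence of 0 themselves, as in the loop above.
            sentiments.append(0.0)
            continue
        # Words missing from the lexicon are treated as neutral (0.0).
        sentiments.append(TOY_LEXICON.get(word, 0.0))
    return sentiments

print(toy_valences("The food was very good".split()))
```

The point is only that the output is a list with one raw valence per token, which is what later gets summed into sum_s.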

So going back to the compound score, we see that:

- the compound score is a normalized score of sum_s, where sum_s is the sum of the valences computed from some heuristics and a sentiment lexicon (aka. Sentiment Intensity), and
- the normalized score is simply sum_s divided by the square root of (sum_s squared plus alpha), where the alpha parameter increases the denominator of the normalization function.

Is that calculated from the [pos, neu, neg] vector?

Not really =)

If we take a look at the score_valence function https://github.com/nltk/nltk/blob/develop/nltk/sentiment/vader.py#L411, we see that the compound score is computed from sum_s before the pos, neg and neu scores are computed. Those come from _sift_sentiment_scores(), which computes the individual pos, neg and neu scores from the raw scores returned by sentiment_valence(), without the sum.
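A simplified sketch of that ordering is below. It is written from my reading of the linked source rather than copied from it: the function names sift and toy_score_valence are hypothetical, the +1/-1 offset in sift is an assumption about how _sift_sentiment_scores() weighs non-neutral tokens, and the punctuation emphasis step is left out entirely. Details may differ between NLTK versions.

```python
import math

def normalize(score, alpha=15):
    return score / math.sqrt(score * score + alpha)

def sift(sentiments):
    """Split raw valences into a positive sum, a negative sum and a neutral count."""
    # Assumption: each non-neutral token is offset by 1 so that it is
    # comparable with neutral tokens, which are simply counted.
    pos_sum = sum(s + 1 for s in sentiments if s > 0)
    neg_sum = sum(s - 1 for s in sentiments if s < 0)
    neu_count = sum(1 for s in sentiments if s == 0)
    return pos_sum, neg_sum, neu_count

def toy_score_valence(sentiments):
    if not sentiments:
        return {"neg": 0.0, "neu": 0.0, "pos": 0.0, "compound": 0.0}
    # compound comes straight from the raw sum ...
    compound = normalize(float(sum(sentiments)))
    # ... while pos/neg/neu are proportions derived separately.
    pos_sum, neg_sum, neu_count = sift(sentiments)
    total = pos_sum + abs(neg_sum) + neu_count
    return {
        "neg": abs(neg_sum) / total,
        "neu": neu_count / total,
        "pos": pos_sum / total,
        "compound": compound,
    }

weak = toy_score_valence([2.0])    # one mildly positive token
strong = toy_score_valence([4.0])  # one strongly positive token
print(weak)
print(strong)
```

In this sketch both inputs give the same [pos, neu, neg] proportions but different compound scores, which illustrates why compound cannot be recovered from that vector alone.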

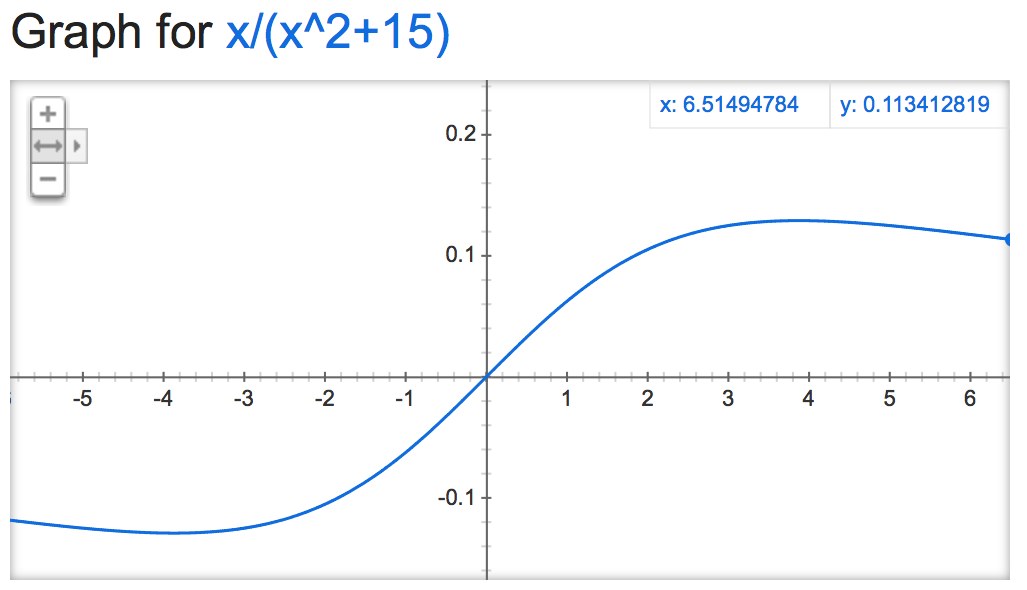

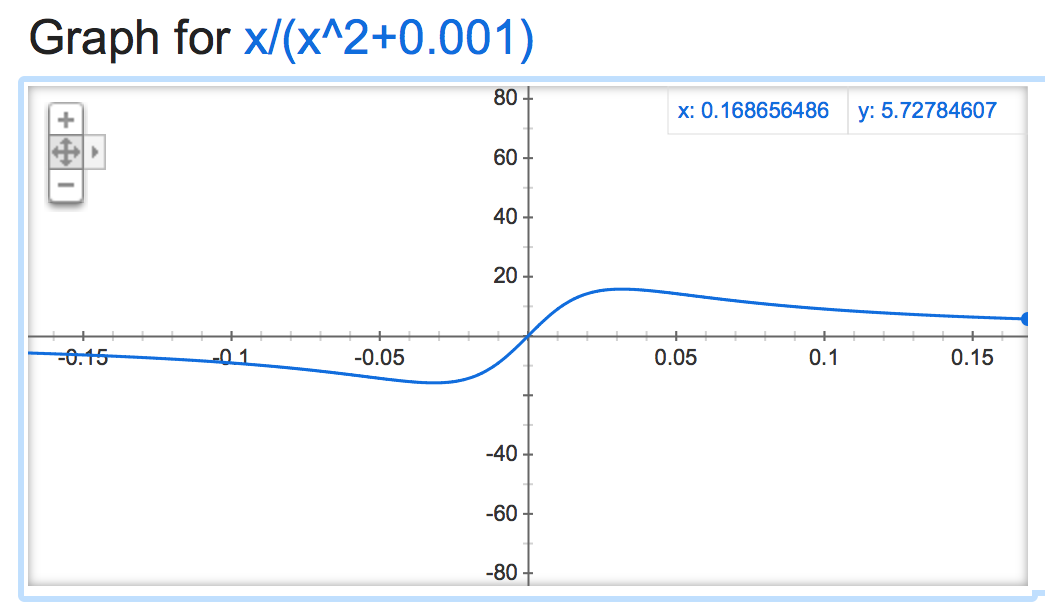

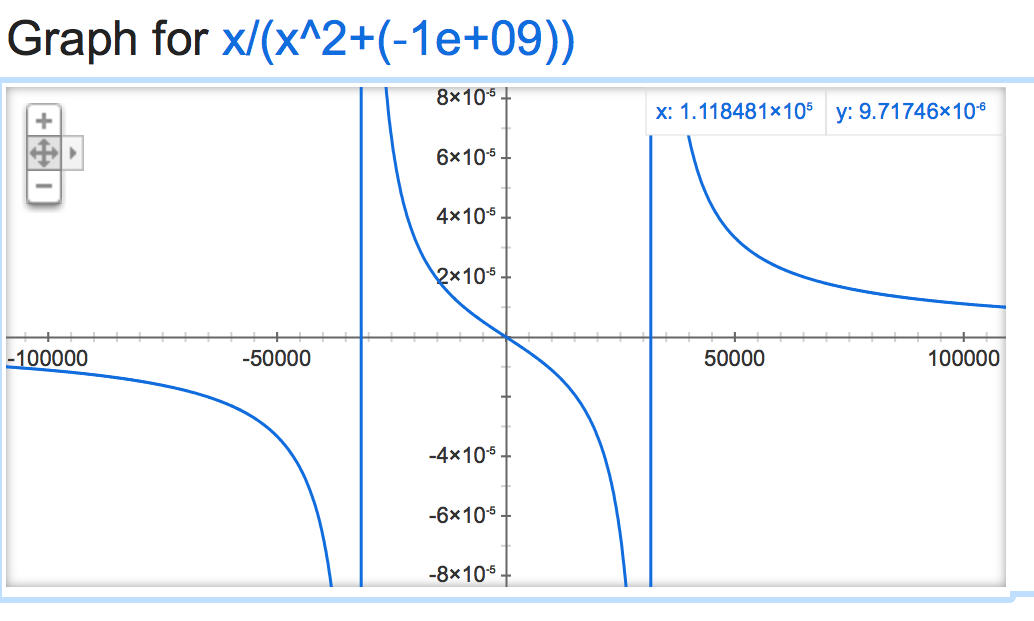

If we take a look at this alpha mathemagic, it seems the output of the normalization is rather unstable (if left unconstrained), depending on the value of alpha:

alpha=0:

alpha=15:

alpha=50000:

alpha=0.001:

It gets funky when it's negative:

alpha=-10:

alpha=-1,000,000:

alpha=-1,000,000,000:
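For a numeric view of the same question, here is a small sketch that evaluates the formula exactly as written in normalize(), with the square root, for a few of the alpha values listed above. normalize_alpha is a hypothetical helper, and returning NaN where the expression is undefined is my own choice; the original function would raise an error in those cases.

```python
import math

def normalize_alpha(score, alpha):
    """score / sqrt(score^2 + alpha), or NaN where that is undefined."""
    radicand = score * score + alpha
    if radicand <= 0:
        # sqrt of a negative number, or division by zero
        return float("nan")
    return score / math.sqrt(radicand)

scores = (-10, -1, 1, 10)
for alpha in (0, 0.001, 15, 50000, -10):
    row = ", ".join(f"{normalize_alpha(s, alpha):+.4f}" for s in scores)
    print(f"alpha={alpha:>7}: {row}")
```

With alpha=0 every non-zero score collapses to exactly -1 or 1, a very large alpha squashes everything towards 0, and a negative alpha either leaves the result undefined (small scores) or pushes it outside the [-1, 1] range (larger scores).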

The function computes score/math.sqrt(score*score+alpha), but you are plotting score/(score*score+alpha). Otherwise, good analysis! – Handwork

The "About the Scoring" section of the GitHub repo has a description.
