Demo for Airflow 2.X:

First, create a connection from a connection URI (see the docs: https://airflow.apache.org/docs/apache-airflow/stable/howto/connection.html). On the Airflow host, run this shell command:

    airflow connections add 'ssh_dt17' --conn-uri 'ssh://[username]:[password]@192.168.1.17'

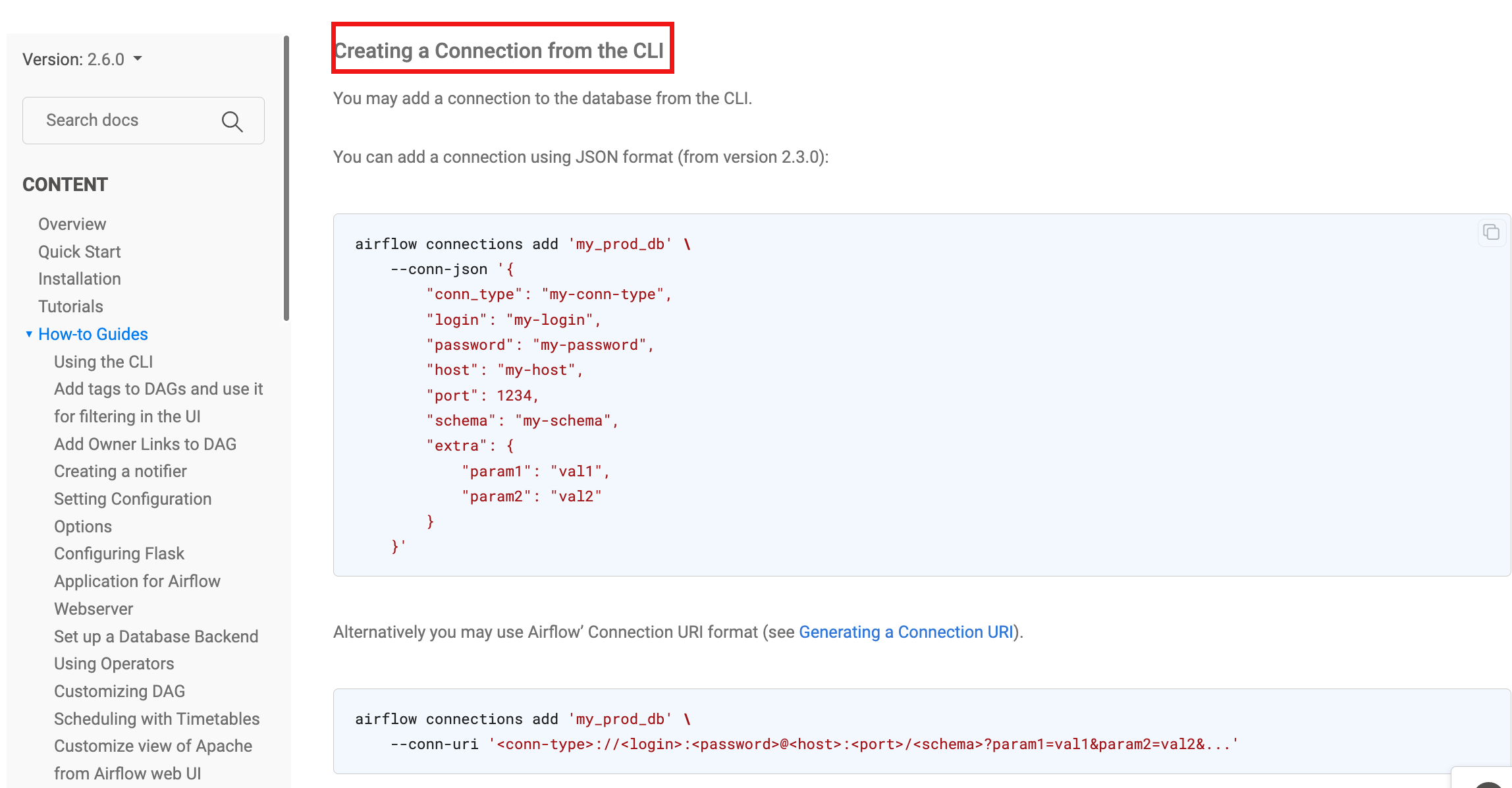
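The Airflow connection docs state that the components of a connection URI should be URL-encoded, so a password containing characters such as `@`, `/` or `:` cannot be pasted into the URI as-is. Below is a minimal sketch of building the URI with the standard library only. The helper name `build_ssh_conn_uri` and the sample credentials are hypothetical, not part of Airflow.

```python
from urllib.parse import quote


def build_ssh_conn_uri(username, password, host, port=None):
    """Return an ssh:// connection URI with percent-encoded credentials.

    Hypothetical helper for illustration; it only formats a string.
    """
    # safe='' makes quote() also encode '/', which it leaves alone by default.
    userinfo = f"{quote(username, safe='')}:{quote(password, safe='')}"
    netloc = f"{userinfo}@{host}"
    if port is not None:
        netloc += f":{port}"
    return f"ssh://{netloc}"


# Sample credentials are made up for the example.
uri = build_ssh_conn_uri("deploy", "p@ss/w:rd", "192.168.1.17")
print(uri)  # ssh://deploy:p%40ss%2Fw%3Ard@192.168.1.17
```

The printed string is what would go inside the quotes after `--conn-uri`.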


Second, the demo DAG code:

    from datetime import datetime

    import pendulum
    from airflow.decorators import dag, task
    # from airflow.operators.bash import BashOperator
    from airflow.operators.python import PythonOperator, get_current_context
    # Newer 2.x releases provide EmptyOperator (airflow.operators.empty) as the replacement.
    from airflow.operators.dummy import DummyOperator
    from airflow.providers.ssh.operators.ssh import SSHOperator
    from airflow.providers.ssh.hooks.ssh import SSHHook

    # Assumption: the original snippet does not show how tz and default_args
    # are defined. These are placeholders; adjust them to your environment.
    tz = pendulum.timezone("UTC")
    default_args = {"owner": "airflow"}


    @dag(
        default_args=default_args,
        description='demo',
        schedule_interval=None,
        start_date=datetime(2022, 9, 20, tzinfo=tz),
        catchup=False,
        max_active_tasks=1,
    )
    def demo_run_ssh_remote_cmd():
        # SSH hook built from the 'ssh_dt17' connection created in the first step
        ssh_dt17 = SSHHook(ssh_conn_id='ssh_dt17', remote_host='192.168.1.17')

        # Command to run on the remote host (dt17)
        cmd_logrotate = r'''
        /usr/sbin/logrotate -v -f /etc/logrotate.d/access_log_8am_8pm
        '''

        logrotate_ad = SSHOperator(
            task_id='logrotate_ad',
            command=cmd_logrotate,
            ssh_hook=ssh_dt17,
            max_active_tis_per_dag=1,
            cmd_timeout=60 * 5,  # seconds
            # trigger_rule="none_failed",
        )

        start = DummyOperator(task_id="start")
        start >> logrotate_ad


    _ = demo_run_ssh_remote_cmd()
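If the logrotate config path or flags vary between tasks, the string passed to `command=` can be assembled with shell quoting instead of being hard-coded. The following is only a sketch using the standard library. The helper name `build_logrotate_cmd` and its parameters are hypothetical; the default binary path and the `-v`/`-f` flags are taken from the command in the demo DAG.

```python
import shlex


def build_logrotate_cmd(config_path, verbose=True, force=True,
                        binary="/usr/sbin/logrotate"):
    """Build a shell-safe logrotate command line (hypothetical helper)."""
    args = [binary]
    if verbose:
        args.append("-v")  # verbose output, as in the demo command
    if force:
        args.append("-f")  # force rotation, as in the demo command
    args.append(config_path)
    # shlex.join quotes each argument only where the shell requires it.
    return shlex.join(args)


cmd = build_logrotate_cmd("/etc/logrotate.d/access_log_8am_8pm")
print(cmd)  # /usr/sbin/logrotate -v -f /etc/logrotate.d/access_log_8am_8pm
```

The resulting string could then be passed as `command=cmd` to the `SSHOperator`. A path containing spaces would come back wrapped in single quotes, so the remote shell still sees it as one argument.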