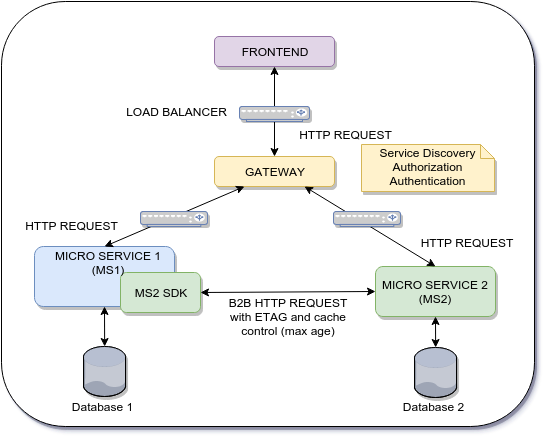

Current Architecture:

Problem:

We have a two-step flow between frontend and backend layers.

- First step: The frontend validates an input I1 from the user on microservice 1 (MS1)

- Second step: The frontend submits I1, plus additional information, to microservice 2

Microservice 2 (MS2) needs to validate the integrity of I1, because it arrives from the frontend. How can I avoid a new query to MS1? What's the best approach?

Flows that I'm trying to optimize by removing steps 1.3 and 2.3:

Flow 1:

- 1.1 The User X requests data (MS2_Data) from MS2

- 1.2 The User X persists data (MS2_Data + MS1_Data) on MS1

- 1.3 MS1 checks the integrity of MS2_Data using a B2B HTTP request

- 1.4 MS1 uses MS2_Data and MS1_Data to persist to Database 1 and build the HTTP response.

Flow 2:

- 2.1 The User X already has data (MS2_Data) stored on local/session storage

- 2.2 The User X persists data (MS2_Data + MS1_Data) on MS1

- 2.3 MS1 checks the integrity of MS2_Data using a B2B HTTP request

- 2.4 MS1 uses MS2_Data and MS1_Data to persist to Database 1 and build the HTTP response.

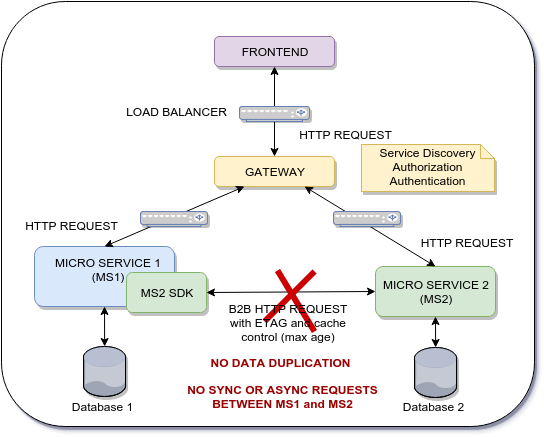

Approach

One possible approach is a B2B HTTP request between MS2 and MS1, but that would duplicate the validation done in the first step. Another approach is to duplicate data from MS1 to MS2. However, this is prohibitive due to the amount of data and its volatile nature, so duplication does not seem to be a viable option.

A more suitable solution, in my opinion, is to give the frontend the responsibility of fetching all the information required by microservice 1 from microservice 2 and delivering it to microservice 1. This would avoid all these B2B HTTP requests.

The problem is how microservice 1 can trust the information sent by the frontend. Perhaps JWT could be used: microservice 2 signs the data, and microservice 1 is then able to verify the message.

Note: Every time microservice 2 needs information from microservice 1, a B2B HTTP request is performed. (The HTTP request uses ETag and Cache-Control: max-age.) How can this be avoided?
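For reference, the ETag plus max-age behaviour described in the note can be sketched as a small client-side cache. This is an illustration under assumptions, not the actual client in use: the `fetch` callable stands in for the real B2B HTTP call, and `RevalidatingCache` and `Entry` are hypothetical names.

```python
import time
from dataclasses import dataclass
from typing import Callable, Dict, Optional, Tuple

# (status, etag, body, max_age_seconds); body is None on a 304 response.
Response = Tuple[int, str, Optional[str], int]
# fetch(url, if_none_match) performs the B2B call (stubbed out in this sketch).
Fetch = Callable[[str, Optional[str]], Response]


@dataclass
class Entry:
    etag: str
    body: str
    fresh_until: float


class RevalidatingCache:
    """Serves fresh entries locally and revalidates stale ones by ETag."""

    def __init__(self, fetch: Fetch,
                 clock: Callable[[], float] = time.monotonic):
        self._fetch = fetch
        self._clock = clock
        self._entries: Dict[str, Entry] = {}

    def get(self, url: str) -> str:
        entry = self._entries.get(url)
        now = self._clock()
        if entry and now < entry.fresh_until:
            return entry.body  # within max-age: no network call at all
        # Stale or missing: one round trip, sending the ETag if we have one.
        status, etag, body, max_age = self._fetch(
            url, entry.etag if entry else None)
        if status == 304 and entry:
            entry.fresh_until = now + max_age  # unchanged: reuse cached body
            return entry.body
        if status != 200 or body is None:
            raise RuntimeError(f"unexpected response {status} for {url}")
        self._entries[url] = Entry(etag, body, now + max_age)
        return body
```

The sketch shows where the cost remains: within max-age no request is made, but once the entry is stale a round trip is still needed, even if the answer is only a 304 with no body. That residual round trip is what the question is trying to eliminate.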

Architecture Goal

Microservice 1 needs the data from microservice 2 on demand in order to persist MS1_Data and MS2_Data in the MS1 database, so an asynchronous approach using a broker does not apply here.

My question is whether a design pattern, best practice, or framework exists to enable this kind of trusted communication.

The downside of the current architecture is the number of B2B HTTP requests performed between the microservices. Even if I use a cache-control mechanism, the response time of each microservice will be affected, and the response time of each microservice is critical. The goal here is to achieve better performance and somehow use the frontend as a gateway that distributes data across several microservices, but over a trusted communication channel.

MS2_Data is just an Entity SID like product SID or vendor SID that the MS1 must use to maintain data integrity.

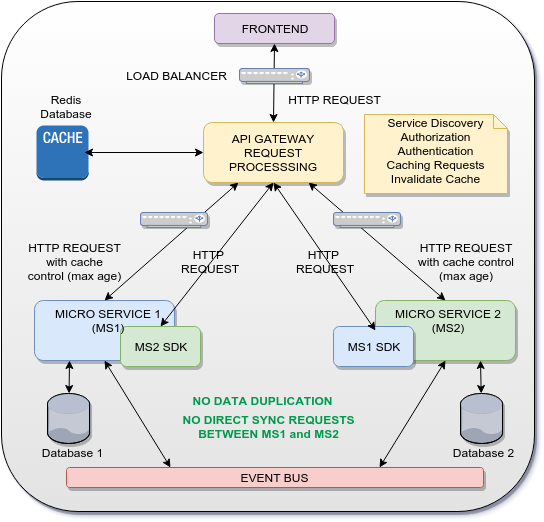

Possible Solution

The idea is to use the gateway as an API gateway with request processing: it caches some HTTP responses from MS1 and MS2 and serves them as responses to the MS2 SDK and MS1 SDK. This way, no communication (sync or async) takes place directly between MS1 and MS2, and data duplication is also avoided.
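One way to read this idea is sketched below: the gateway checks the MS2 SID against its own short-lived cache before forwarding the persist request to MS1. This is only one interpretation, and every name here (`Gateway`, `ms2_exists`, `ms1_persist`, the `ms2_sid` field, the 422 response) is a hypothetical stand-in rather than part of any real gateway product.

```python
import time
from typing import Callable, Dict, Tuple


class Gateway:
    """Hypothetical gateway that validates an MS2 SID before calling MS1."""

    def __init__(self,
                 ms2_exists: Callable[[str], bool],
                 ms1_persist: Callable[[dict], dict],
                 ttl_seconds: float = 60.0,
                 clock: Callable[[], float] = time.monotonic):
        self._ms2_exists = ms2_exists    # stand-in for the HTTP call to MS2
        self._ms1_persist = ms1_persist  # stand-in for the HTTP call to MS1
        self._ttl = ttl_seconds
        self._clock = clock
        # sid -> (is_valid, cached_until)
        self._known: Dict[str, Tuple[bool, float]] = {}

    def _sid_is_valid(self, sid: str) -> bool:
        cached = self._known.get(sid)
        now = self._clock()
        if cached and now < cached[1]:
            return cached[0]  # answered from the gateway cache
        valid = self._ms2_exists(sid)
        self._known[sid] = (valid, now + self._ttl)
        return valid

    def persist(self, sid: str, ms1_data: dict) -> dict:
        """Forward to MS1 only if the SID is known to MS2."""
        if not self._sid_is_valid(sid):
            return {"status": 422, "error": "unknown MS2 SID"}
        return self._ms1_persist({"ms2_sid": sid, **ms1_data})
```

In this reading, MS1 never calls MS2, but it has to trust the gateway, which in practice means MS1 should be reachable only through it. The TTL trades freshness for fewer calls to MS2, so it would need to reflect how volatile the SIDs are.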

Of course, the above solution only covers UUIDs/GUIDs shared across microservices. For full data, an event bus distributes events and data across microservices asynchronously (Event sourcing pattern).

Inspiration: https://aws.amazon.com/api-gateway/ and https://getkong.org/

Related questions and documentation:

- How to sync the database with the microservices (and the new one)?

- https://auth0.com/blog/introduction-to-microservices-part-4-dependencies/

- Transactions across REST microservices?

- https://en.wikipedia.org/wiki/Two-phase_commit_protocol

- http://ws-rest.org/2014/sites/default/files/wsrest2014_submission_7.pdf

- https://www.tigerteam.dk/2014/micro-services-its-not-only-the-size-that-matters-its-also-how-you-use-them-part-1/