You can programmatically import data from a csv file in your Drive into an existing Google Sheet using Google Apps Script, replacing/appending data as needed.

Below is some sample code. It assumes that: a) you have a designated folder in your Drive where the CSV file is saved/uploaded to; b) the CSV file is named "report.csv" and the data in it comma-delimited; and c) the CSV data is imported into a designated spreadsheet. See comments in code for further details.

function importData() {

var fSource = DriveApp.getFolderById(reports_folder_id); // reports_folder_id = id of folder where csv reports are saved

var fi = fSource.getFilesByName('report.csv'); // latest report file

var ss = SpreadsheetApp.openById(data_sheet_id); // data_sheet_id = id of spreadsheet that holds the data to be updated with new report data

if ( fi.hasNext() ) { // proceed if "report.csv" file exists in the reports folder

var file = fi.next();

var csv = file.getBlob().getDataAsString();

var csvData = CSVToArray(csv); // see below for CSVToArray function

var newsheet = ss.insertSheet('NEWDATA'); // create a 'NEWDATA' sheet to store imported data

// loop through csv data array and insert (append) as rows into 'NEWDATA' sheet

for ( var i=0, lenCsv=csvData.length; i<lenCsv; i++ ) {

newsheet.getRange(i+1, 1, 1, csvData[i].length).setValues(new Array(csvData[i]));

}

/*

** report data is now in 'NEWDATA' sheet in the spreadsheet - process it as needed,

** then delete 'NEWDATA' sheet using ss.deleteSheet(newsheet)

*/

// rename the report.csv file so it is not processed on next scheduled run

file.setName("report-"+(new Date().toString())+".csv");

}

};

// http://www.bennadel.com/blog/1504-Ask-Ben-Parsing-CSV-Strings-With-Javascript-Exec-Regular-Expression-Command.htm

// This will parse a delimited string into an array of

// arrays. The default delimiter is the comma, but this

// can be overriden in the second argument.

function CSVToArray( strData, strDelimiter ) {

// Check to see if the delimiter is defined. If not,

// then default to COMMA.

strDelimiter = (strDelimiter || ",");

// Create a regular expression to parse the CSV values.

var objPattern = new RegExp(

(

// Delimiters.

"(\\" + strDelimiter + "|\\r?\\n|\\r|^)" +

// Quoted fields.

"(?:\"([^\"]*(?:\"\"[^\"]*)*)\"|" +

// Standard fields.

"([^\"\\" + strDelimiter + "\\r\\n]*))"

),

"gi"

);

// Create an array to hold our data. Give the array

// a default empty first row.

var arrData = [[]];

// Create an array to hold our individual pattern

// matching groups.

var arrMatches = null;

// Keep looping over the regular expression matches

// until we can no longer find a match.

while (arrMatches = objPattern.exec( strData )){

// Get the delimiter that was found.

var strMatchedDelimiter = arrMatches[ 1 ];

// Check to see if the given delimiter has a length

// (is not the start of string) and if it matches

// field delimiter. If id does not, then we know

// that this delimiter is a row delimiter.

if (

strMatchedDelimiter.length &&

(strMatchedDelimiter != strDelimiter)

){

// Since we have reached a new row of data,

// add an empty row to our data array.

arrData.push( [] );

}

// Now that we have our delimiter out of the way,

// let's check to see which kind of value we

// captured (quoted or unquoted).

if (arrMatches[ 2 ]){

// We found a quoted value. When we capture

// this value, unescape any double quotes.

var strMatchedValue = arrMatches[ 2 ].replace(

new RegExp( "\"\"", "g" ),

"\""

);

} else {

// We found a non-quoted value.

var strMatchedValue = arrMatches[ 3 ];

}

// Now that we have our value string, let's add

// it to the data array.

arrData[ arrData.length - 1 ].push( strMatchedValue );

}

// Return the parsed data.

return( arrData );

};

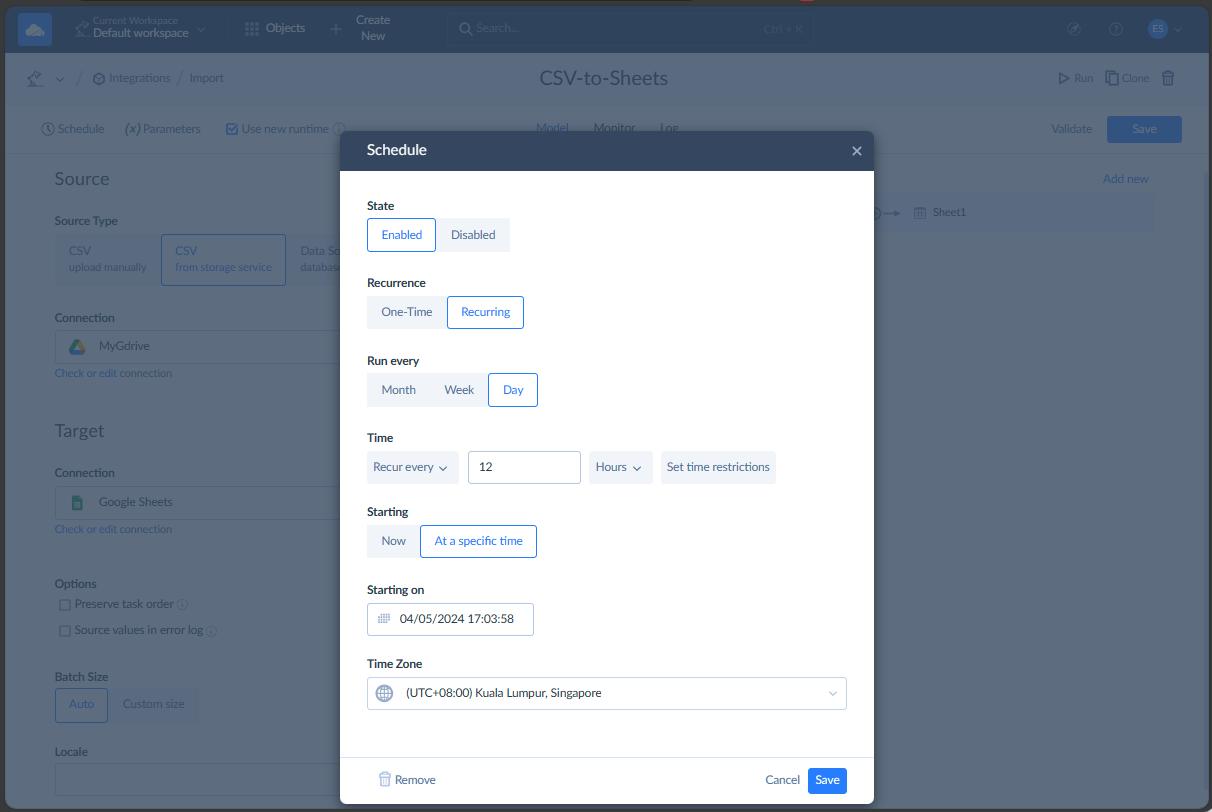

You can then create time-driven trigger in your script project to run importData() function on a regular basis (e.g. every night at 1AM), so all you have to do is put new report.csv file into the designated Drive folder, and it will be automatically processed on next scheduled run.

If you absolutely MUST work with Excel files instead of CSV, then you can use this code below. For it to work you must enable Drive API in Advanced Google Services in your script and in Developers Console (see How to Enable Advanced Services for details).

/**

* Convert Excel file to Sheets

* @param {Blob} excelFile The Excel file blob data; Required

* @param {String} filename File name on uploading drive; Required

* @param {Array} arrParents Array of folder ids to put converted file in; Optional, will default to Drive root folder

* @return {Spreadsheet} Converted Google Spreadsheet instance

**/

function convertExcel2Sheets(excelFile, filename, arrParents) {

var parents = arrParents || []; // check if optional arrParents argument was provided, default to empty array if not

if ( !parents.isArray ) parents = []; // make sure parents is an array, reset to empty array if not

// Parameters for Drive API Simple Upload request (see https://developers.google.com/drive/web/manage-uploads#simple)

var uploadParams = {

method:'post',

contentType: 'application/vnd.ms-excel', // works for both .xls and .xlsx files

contentLength: excelFile.getBytes().length,

headers: {'Authorization': 'Bearer ' + ScriptApp.getOAuthToken()},

payload: excelFile.getBytes()

};

// Upload file to Drive root folder and convert to Sheets

var uploadResponse = UrlFetchApp.fetch('https://www.googleapis.com/upload/drive/v2/files/?uploadType=media&convert=true', uploadParams);

// Parse upload&convert response data (need this to be able to get id of converted sheet)

var fileDataResponse = JSON.parse(uploadResponse.getContentText());

// Create payload (body) data for updating converted file's name and parent folder(s)

var payloadData = {

title: filename,

parents: []

};

if ( parents.length ) { // Add provided parent folder(s) id(s) to payloadData, if any

for ( var i=0; i<parents.length; i++ ) {

try {

var folder = DriveApp.getFolderById(parents[i]); // check that this folder id exists in drive and user can write to it

payloadData.parents.push({id: parents[i]});

}

catch(e){} // fail silently if no such folder id exists in Drive

}

}

// Parameters for Drive API File Update request (see https://developers.google.com/drive/v2/reference/files/update)

var updateParams = {

method:'put',

headers: {'Authorization': 'Bearer ' + ScriptApp.getOAuthToken()},

contentType: 'application/json',

payload: JSON.stringify(payloadData)

};

// Update metadata (filename and parent folder(s)) of converted sheet

UrlFetchApp.fetch('https://www.googleapis.com/drive/v2/files/'+fileDataResponse.id, updateParams);

return SpreadsheetApp.openById(fileDataResponse.id);

}

/**

* Sample use of convertExcel2Sheets() for testing

**/

function testConvertExcel2Sheets() {

var xlsId = "0B9**************OFE"; // ID of Excel file to convert

var xlsFile = DriveApp.getFileById(xlsId); // File instance of Excel file

var xlsBlob = xlsFile.getBlob(); // Blob source of Excel file for conversion

var xlsFilename = xlsFile.getName(); // File name to give to converted file; defaults to same as source file

var destFolders = []; // array of IDs of Drive folders to put converted file in; empty array = root folder

var ss = convertExcel2Sheets(xlsBlob, xlsFilename, destFolders);

Logger.log(ss.getId());

}

The above code is also available as a gist here.