TL;DR: In a Spark Standalone cluster, what are the differences between client and cluster deploy modes? How do I set which mode my application runs in?

We have a Spark Standalone cluster with three machines, all of them with Spark 1.6.1:

- A master machine, which is also where our application is run using spark-submit
- 2 identical worker machines

From the Spark Documentation, I read:

> (...) For standalone clusters, Spark currently supports two deploy modes. In client mode, the driver is launched in the same process as the client that submits the application. In cluster mode, however, the driver is launched from one of the Worker processes inside the cluster, and the client process exits as soon as it fulfills its responsibility of submitting the application without waiting for the application to finish.

However, I don't really understand the practical differences from reading this, and I don't see what the advantages and disadvantages of each deploy mode are.

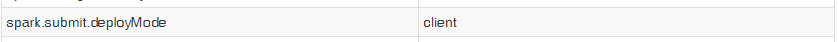

Additionally, when I start my application using start-submit, even if I set the property spark.submit.deployMode to "cluster", the Spark UI for my context shows the following entry:

So I am not able to test both modes to see the practical differences. That being said, my questions are:

1) What are the practical differences between Spark Standalone client deploy mode and cluster deploy mode? What are the pros and cons of using each one?

2) How do I choose which one my application is going to run in, using spark-submit?
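
For reference, the spark-submit documentation lists a `--deploy-mode` flag, which appears to be the command-line counterpart of spark.submit.deployMode. Below is a minimal sketch of how I understand the two modes would be requested that way. The master URL, main class, and jar path are hypothetical placeholders, not values from our actual setup, and the helper only prints the command instead of running it.

```shell
#!/bin/sh
# Hypothetical placeholders; substitute values from your own cluster.
MASTER_URL="spark://master-host:7077"   # assumed standalone master URL
MAIN_CLASS="com.example.MyApp"          # assumed application entry point
APP_JAR="/path/to/my-app.jar"           # assumed application jar

# Print (rather than run) the spark-submit command for a given deploy mode,
# so the sketch can be inspected without a Spark installation.
submit_cmd() {
  mode="$1"
  case "$mode" in
    client|cluster) ;;
    *) echo "unknown deploy mode: $mode" >&2; return 1 ;;
  esac
  echo "spark-submit --master $MASTER_URL --deploy-mode $mode --class $MAIN_CLASS $APP_JAR"
}

submit_cmd client
submit_cmd cluster
```

If this is indeed the right mechanism, the only difference between the two invocations would be the value passed to `--deploy-mode`.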