I am using Node.js to upload files to AWS S3, and I want clients to be able to download those files securely. So I am trying to generate signed URLs that expire after one use. My code looks like this:

Uploading

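```javascript
const AWS = require('aws-sdk') // AWS SDK for JavaScript v2

const s3bucket = new AWS.S3({
  accessKeyId: 'my-access-key-id',
  secretAccessKey: 'my-secret-access-key',
  // Bucket is not an S3 client option; a default bucket belongs under `params`
  params: { Bucket: 'my-bucket-name' },
})

// `file` is the uploaded file object (its data/mimetype/name fields suggest express-fileupload)
const uploadParams = {
  Body: file.data,
  Bucket: 'my-bucket-name',
  ContentType: file.mimetype,
  Key: `files/${file.name}`,
}

s3bucket.upload(uploadParams, function (err, data) {
  // ...
})
```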

Downloading

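```javascript
// Presign a GET request for the object; Expires is the validity period in seconds
const url = s3bucket.getSignedUrl('getObject', {
  Bucket: 'my-bucket-name',
  Key: 'file-key',
  Expires: 300,
})
```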
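Note that `Expires: 300` only limits the URL in time: as far as I know, an S3 presigned URL cannot be restricted to a single use and stays valid for any number of requests until it expires. A common workaround is to give the client a one-time token issued by your own server and only create a short-lived presigned URL when that token is redeemed. A minimal sketch of just the token part, in plain Node.js; the `OneTimeTokens` class and its in-memory `Map` are hypothetical, and a real deployment would probably need a shared store such as a database.

```javascript
const crypto = require('crypto');

// Hypothetical in-memory store of one-time download tokens.
class OneTimeTokens {
  constructor(ttlMs) {
    this.ttlMs = ttlMs;
    this.tokens = new Map(); // token -> { key, expiresAt }
  }

  // Issue a random, unguessable token bound to one S3 object key.
  issue(key, now = Date.now()) {
    const token = crypto.randomBytes(32).toString('hex');
    this.tokens.set(token, { key, expiresAt: now + this.ttlMs });
    return token;
  }

  // Return the object key on first redemption, null afterwards or after expiry.
  // Deleting the entry before returning is what makes each token single-use.
  redeem(token, now = Date.now()) {
    const entry = this.tokens.get(token);
    if (!entry) return null;
    this.tokens.delete(token);
    return now <= entry.expiresAt ? entry.key : null;
  }
}
```

On a successful `redeem`, the server could call `s3bucket.getSignedUrl('getObject', { Bucket: 'my-bucket-name', Key: key, Expires: 60 })` and redirect to the result. The presigned URL itself can still be reused until it expires, so this only narrows the window rather than enforcing strict single use.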

Issue

When I open the URL, I get the following:

This XML file does not appear to have any style information associated with it. The document tree is shown below.

```xml
<Error>
  <Code>AccessDenied</Code>
  <Message>There were headers present in the request which were not signed</Message>
  <HeadersNotSigned>host</HeadersNotSigned>
  <RequestId>D63C8ED4CD8F4E5F</RequestId>
  <HostId>9M0r2M3XkRU0JLn7cv5QN3S34G8mYZEy/v16c6JFRZSzDBa2UXaMLkHoyuN7YIt/LCPNnpQLmF4=</HostId>
</Error>
```
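The error says the `host` header was not among the signed headers. For context, here is a minimal from-scratch sketch of AWS Signature Version 4 query-string presigning for a GET, using only Node's built-in `crypto` module; in SigV4 the `host` header is listed in `X-Amz-SignedHeaders` and folded into the signature. It is not the SDK's implementation: the function name `presignGetObject` is hypothetical, and it assumes virtual-hosted-style URLs (`bucket.s3.region.amazonaws.com`), long-term credentials (no `X-Amz-Security-Token`) and an unsigned payload. It is meant for comparing against what `getSignedUrl` produces, not for production use.

```javascript
const crypto = require('crypto');

// SigV4 needs RFC 3986 encoding; encodeURIComponent leaves !'()* unescaped.
function rfc3986(str) {
  return encodeURIComponent(str).replace(/[!'()*]/g,
    (c) => '%' + c.charCodeAt(0).toString(16).toUpperCase());
}

const hmac = (key, data) => crypto.createHmac('sha256', key).update(data, 'utf8').digest();
const sha256Hex = (data) => crypto.createHash('sha256').update(data, 'utf8').digest('hex');

// Hypothetical helper: builds a SigV4 presigned GET URL for an S3 object.
function presignGetObject({ accessKeyId, secretAccessKey, bucket, region, key, expires, now }) {
  if (!(expires >= 1 && expires <= 604800)) {
    throw new RangeError('SigV4 presigned URLs allow 1 to 604800 seconds (7 days)');
  }
  const amzDate = now.toISOString().replace(/[:-]|\.\d{3}/g, ''); // e.g. 20130524T000000Z
  const dateStamp = amzDate.slice(0, 8);
  const host = `${bucket}.s3.${region}.amazonaws.com`;
  const scope = `${dateStamp}/${region}/s3/aws4_request`;
  // Encode each path segment but keep the '/' separators.
  const canonicalUri = '/' + key.split('/').map(rfc3986).join('/');

  const query = {
    'X-Amz-Algorithm': 'AWS4-HMAC-SHA256',
    'X-Amz-Credential': `${accessKeyId}/${scope}`,
    'X-Amz-Date': amzDate,
    'X-Amz-Expires': String(expires),
    'X-Amz-SignedHeaders': 'host', // the header the error reports as not signed
  };
  const canonicalQuery = Object.keys(query).sort()
    .map((k) => `${rfc3986(k)}=${rfc3986(query[k])}`)
    .join('&');

  const canonicalRequest = [
    'GET',
    canonicalUri,
    canonicalQuery,
    `host:${host}\n`, // canonical headers: every header line ends with a newline
    'host',           // signed header names, ';'-separated
    'UNSIGNED-PAYLOAD',
  ].join('\n');

  const stringToSign = ['AWS4-HMAC-SHA256', amzDate, scope, sha256Hex(canonicalRequest)].join('\n');

  // Derive the signing key: date -> region -> service -> "aws4_request".
  const kDate = hmac('AWS4' + secretAccessKey, dateStamp);
  const kRegion = hmac(kDate, region);
  const kService = hmac(kRegion, 's3');
  const kSigning = hmac(kService, 'aws4_request');
  const signature = crypto.createHmac('sha256', kSigning).update(stringToSign, 'utf8').digest('hex');

  return `https://${host}${canonicalUri}?${canonicalQuery}&X-Amz-Signature=${signature}`;
}
```

Comparing a URL like this with the one from `getSignedUrl` (host, region inside `X-Amz-Credential`, `X-Amz-SignedHeaders`) may help narrow down where the signed request and the actual request diverge.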

I couldn't find the mistake. I would really appreciate any help :)

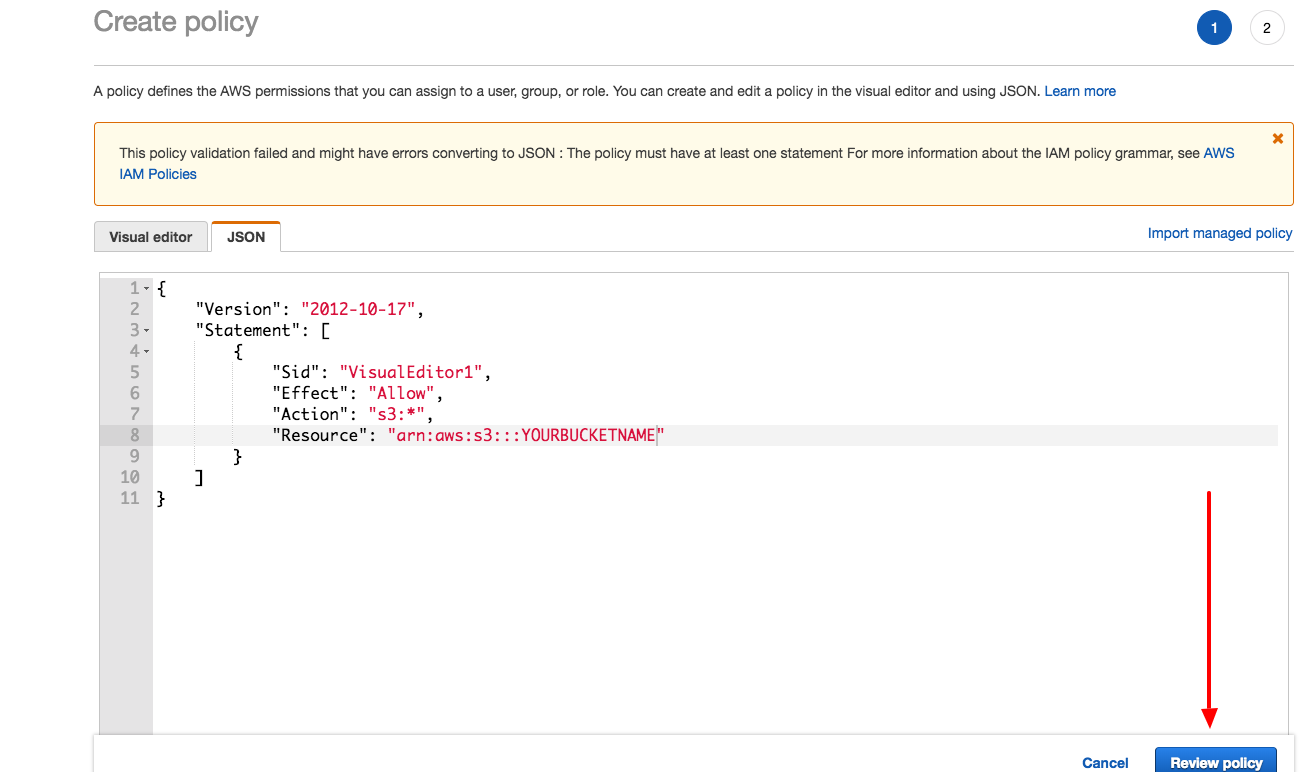

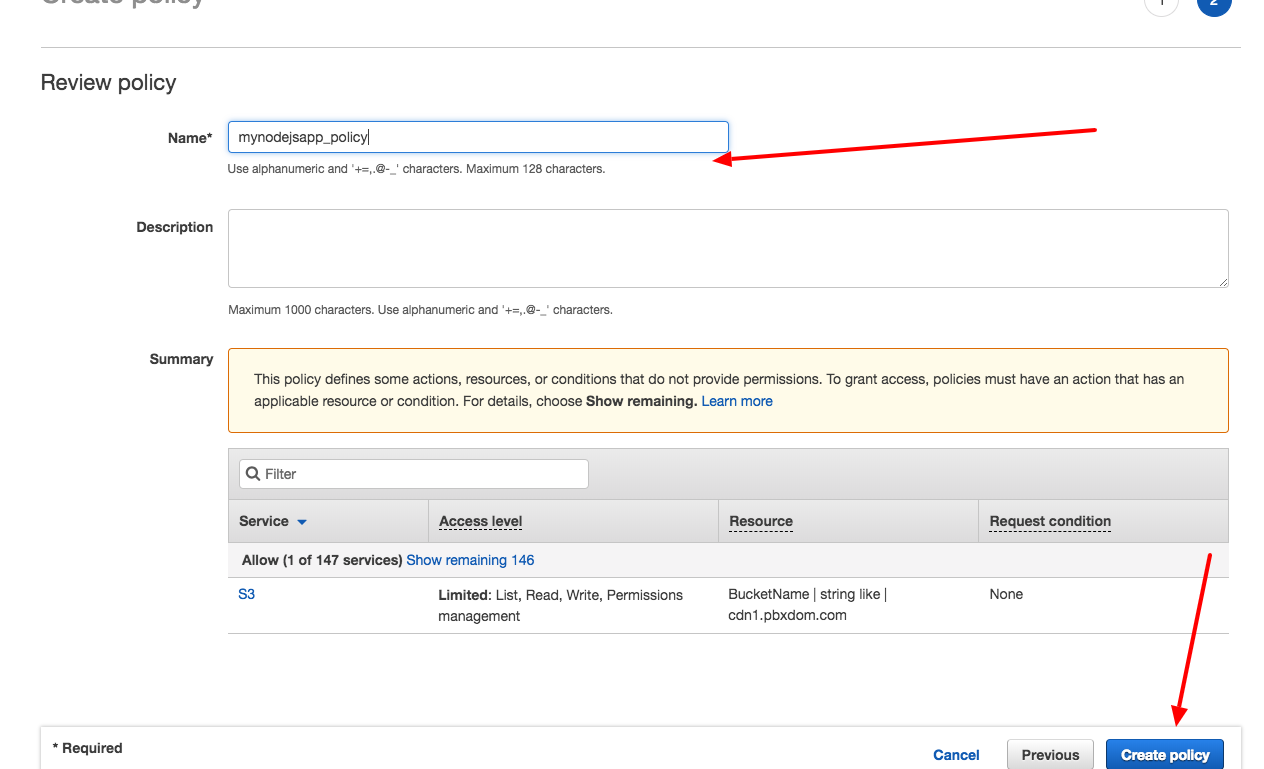

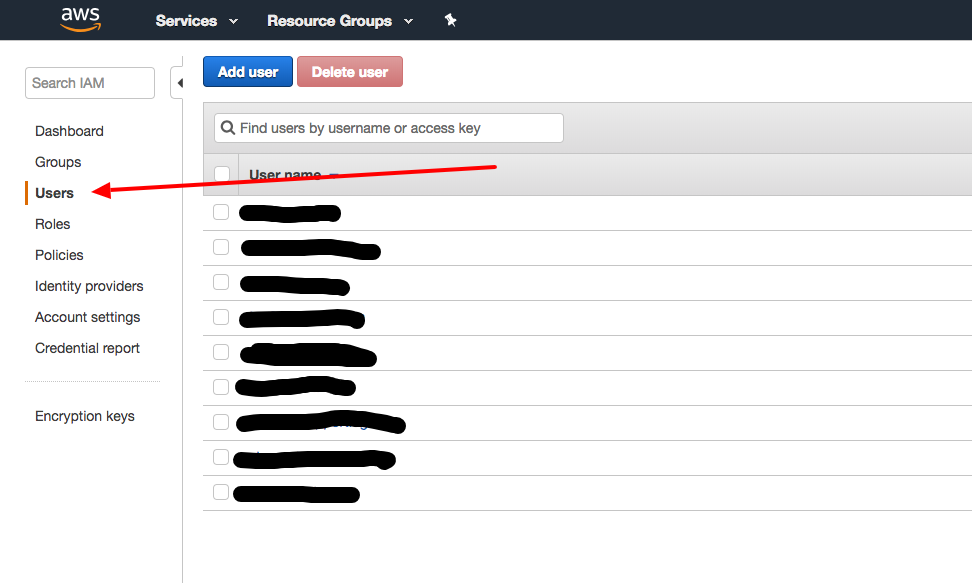

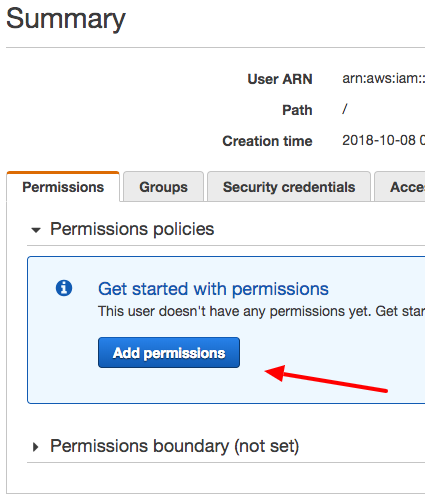

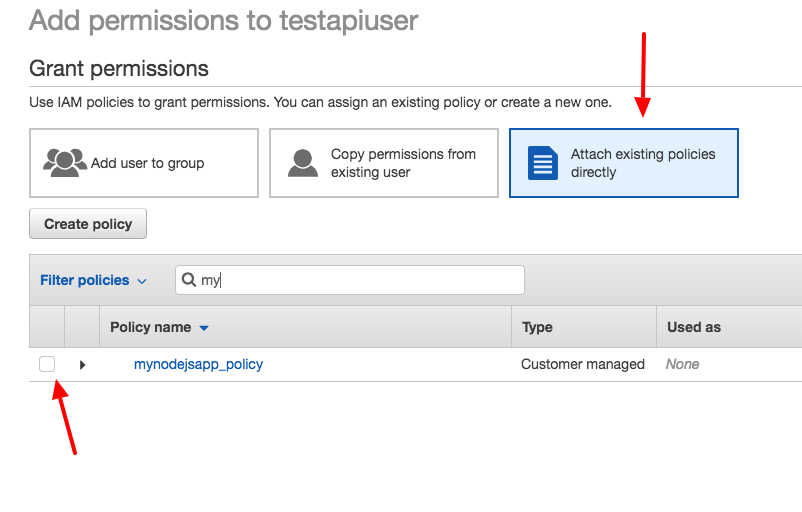
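One way to narrow the problem down is to look at what `getSignedUrl` actually returned. A small, hypothetical helper (`describeSignedUrl` is not part of the SDK) that parses the URL with Node's global `URL` class and reports whether it carries Signature Version 4 query parameters (`X-Amz-Algorithm`, `X-Amz-Credential`, `X-Amz-Expires`, `X-Amz-SignedHeaders`) or the older Signature Version 2 ones (`AWSAccessKeyId`, `Expires`, `Signature`), together with the host and signed headers:

```javascript
// Hypothetical debugging helper: summarizes how a presigned S3 URL was signed.
function describeSignedUrl(signedUrl) {
  const u = new URL(signedUrl);
  const q = u.searchParams;
  const path = decodeURIComponent(u.pathname);

  if (q.has('X-Amz-Algorithm')) {
    return {
      version: 'v4',
      host: u.host,
      path,
      credentialScope: q.get('X-Amz-Credential'), // accessKeyId/date/region/s3/aws4_request
      expiresInSeconds: Number(q.get('X-Amz-Expires')), // relative to X-Amz-Date
      signedHeaders: (q.get('X-Amz-SignedHeaders') || '').split(';').filter(Boolean),
    };
  }

  if (q.has('AWSAccessKeyId') && q.has('Signature')) {
    return {
      version: 'v2',
      host: u.host,
      path,
      // In SigV2 query authentication, Expires is an absolute Unix time in seconds.
      expiresAt: new Date(Number(q.get('Expires')) * 1000).toISOString(),
      signedHeaders: [],
    };
  }

  return { version: 'unsigned', host: u.host, path };
}
```

With the `url` from the download snippet, `console.log(describeSignedUrl(url))` shows which scheme, host and region the SDK used, which can then be checked against the bucket's actual region and endpoint.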

Comments

… `getSignedUrl` are valid. – Workable

… `AccessDenied` response, try checking your bucket permissions and allow the user to read and view objects (enable read and view permissions). – Workable