I am using OpenCV's `findContours` to find the points that describe an image made up of lines (not polygons), like so:

    cv::findContours(src, contours, hierarchy, cv::RETR_EXTERNAL, cv::CHAIN_APPROX_SIMPLE);

If I understand correctly, `cv2.connectedComponents` gives you what you are looking for. It assigns a label to every pixel in the image, and connected pixels share the same label. Because each pixel receives exactly one label, no point is duplicated. So if your lines are one pixel wide (e.g. the output of an edge detector or a thinning operator), you get one point per location.

Edit:

As per the OP's request, the lines should be one pixel wide. To achieve this, a thinning operation is applied before finding the connected components. Images of each step have been added too.

Please note that the points of each connected component are sorted in ascending order of their y coordinates.
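The ordering comes from how `np.where` scans an array: row by row, so the result is sorted by y first and by x within each row. A small sketch with a made-up label array shows this:

```python
import numpy as np

# Hypothetical 3x3 label image; label 1 marks one connected component.
labels = np.array([[0, 1, 0],
                   [1, 1, 0],
                   [0, 0, 1]])

# np.where returns (row indices, column indices), i.e. (ys, xs), in scan order.
ys, xs = np.where(labels == 1)
points = list(zip(xs.tolist(), ys.tolist()))  # convert to (x, y) pairs

print(points)
```

This is scan order, which is not necessarily the order in which one would walk along the line.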

    import random

    import cv2  # cv2.ximgproc requires the opencv-contrib-python package
    import numpy as np

    img_path = "D:/_temp/fig.png"
    output_dir = 'D:/_temp/'

    img = cv2.imread(img_path, cv2.IMREAD_GRAYSCALE)
    _, img = cv2.threshold(img, 128, 255, cv2.THRESH_OTSU + cv2.THRESH_BINARY_INV)
    total_white_pixels = cv2.countNonZero(img)
    print("Total White Pixels Before Thinning = ", total_white_pixels)
    cv2.imwrite(output_dir + '1-thresholded.png', img)

    # apply thinning -> each line is one pixel wide
    img = cv2.ximgproc.thinning(img)
    cv2.imwrite(output_dir + '2-thinned.png', img)
    total_white_pixels = cv2.countNonZero(img)
    print("Total White Pixels After Thinning = ", total_white_pixels)

    no_ccs, labels = cv2.connectedComponents(img)

    label_pnts_dic = {}
    colored = cv2.cvtColor(img, cv2.COLOR_GRAY2BGR)

    # skip label 0 as it corresponds to the background points
    for i in range(1, no_ccs):
        # np.where returns a tuple (array of y coords (rows), array of x coords (cols))
        label_pnts_dic[i] = np.where(labels == i)
        # paint each component in a random bright color
        colored[label_pnts_dic[i]] = (random.randint(100, 255), random.randint(100, 255), random.randint(100, 255))

    cv2.imwrite(output_dir + '3-colored.png', colored)

    print("First ten points of label-1 cc: ")
    for i in range(10):
        print("x: ", label_pnts_dic[1][1][i], "y: ", label_pnts_dic[1][0][i])

Output:

    Total White Pixels Before Thinning = 6814
    Total White Pixels After Thinning = 2065
    First ten points of label-1 cc:
    x: 312 y: 104
    x: 313 y: 104
    x: 314 y: 104
    x: 315 y: 104
    x: 316 y: 104
    x: 317 y: 104
    x: 318 y: 104
    x: 319 y: 104
    x: 320 y: 104
    x: 321 y: 104

Images:

- Thresholded

- Thinned

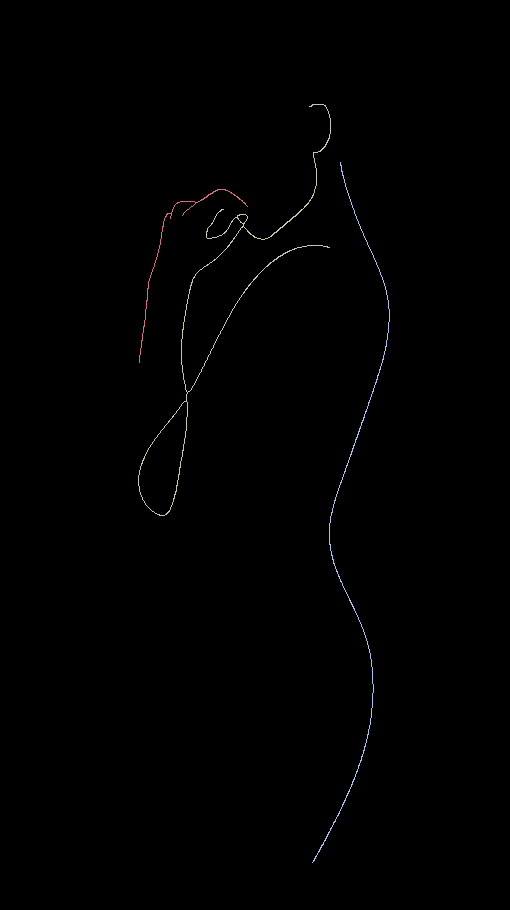

- Colored Components

Edit2:

After a discussion with the OP, I understood that a list of (scattered) points is not enough: the points should be ordered so that they can be traced. To achieve that, new logic has to be introduced after thinning the image:

- Find extreme points (points with a single 8-connectivity neighbor)

- Find connector points (points with 3-way or higher connectivity)

- Find simple points (all other points)

- Start tracing from an extreme point until reaching another extreme point or a connector one.

- Extract the traveled path.

- Check whether a connector point has turned into a simple point and update its status.

- Repeat

- Check whether any closed loops of simple points remain that were not reached from an extreme point, and extract each closed loop as an additional path.
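The steps above can be sketched roughly as follows. This is only an illustration under simplifying assumptions, not the answer's implementation: it uses the raw 8-neighbor count (no neighbor de-duplication), so it assumes a "clean" skeleton in which every ordinary line pixel has exactly two neighbors; it re-classifies all points after each extracted path, which is simple but slow; and the names `neighbors`, `walk` and `trace_paths` are made up for this example.

```python
import numpy as np

OFFSETS = [(-1, -1), (-1, 0), (-1, 1), (0, -1), (0, 1), (1, -1), (1, 0), (1, 1)]

def neighbors(img, p):
    """Foreground 8-neighbors of p = (y, x); img must have a zero border."""
    y, x = p
    return [(y + dy, x + dx) for dy, dx in OFFSETS if img[y + dy, x + dx]]

def walk(img, start, degree):
    """Follow the line from start until an extreme, a connector, or start again."""
    path = [start]
    prev, cur = None, start
    while True:
        nxt = [n for n in neighbors(img, cur) if n != prev]
        if not nxt:               # isolated pixel
            break
        prev, cur = cur, nxt[0]
        if cur == start:          # closed loop completed
            break
        path.append(cur)
        if degree[cur] != 2:      # reached an extreme or a connector point
            break
    return path

def trace_paths(skeleton):
    """Return ordered paths as lists of (x, y) points in original coordinates."""
    img = np.pad(np.asarray(skeleton) > 0, 1)  # zero border avoids bounds checks
    paths = []
    while True:
        pts = [(int(y), int(x)) for y, x in zip(*np.nonzero(img))]
        if not pts:
            return paths
        # Re-classify: 1 neighbor = extreme, 2 = simple, more = connector.
        degree = {p: len(neighbors(img, p)) for p in pts}
        # Prefer extremes; if none remain, start anywhere on a closed loop.
        start = min(pts, key=degree.get)
        path = walk(img, start, degree)
        # Erase the traveled path, but keep a terminating connector so its
        # other branches stay attached (it may now turn into a simple point).
        for p in path[:-1]:
            img[p] = False
        if degree[path[-1]] <= 2:
            img[path[-1]] = False
        paths.append([(x - 1, y - 1) for y, x in path])

# Demo: an X-shaped skeleton (one 4-way connector) plus a small closed loop.
skel = np.zeros((7, 11), dtype=np.uint8)
for i in range(7):
    skel[i, i] = 255
    skel[i, 6 - i] = 255
for y, x in [(0, 9), (1, 8), (1, 10), (2, 9)]:
    skel[y, x] = 255

paths = trace_paths(skel)
for p in paths:
    print(len(p), "points:", p[0], "->", p[-1])
```

In the demo, the first two arms of the X end at the center connector; once those are removed the center becomes a simple point, so the third trace runs straight through it, and the closed loop is picked up last.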

Code for extreme/connector/simple point classification:

    import cv2  # cv2.ximgproc requires the opencv-contrib-python package
    import numpy as np


    def filter_neighbors(ns):
        # Remove a neighbor that is 4-adjacent to an earlier neighbor
        # (at most one removal per earlier neighbor), so that two touching
        # neighbors are counted as a single branch.
        i = 0
        while i < len(ns):
            j = i + 1
            while j < len(ns):
                if (ns[i][0] == ns[j][0] and abs(ns[i][1] - ns[j][1]) <= 1) or (ns[i][1] == ns[j][1] and abs(ns[i][0] - ns[j][0]) <= 1):
                    del ns[j]
                    break
                j += 1
            i += 1


    def sort_points_types(pnts):
        extremes = []
        connections = []
        simple = []

        for i in range(pnts.shape[0]):
            neighbors = []
            for j in range(pnts.shape[0]):
                if i == j:
                    continue
                if abs(pnts[i, 0] - pnts[j, 0]) <= 1 and abs(pnts[i, 1] - pnts[j, 1]) <= 1:  # 8-connectivity check
                    neighbors.append(pnts[j])
            filter_neighbors(neighbors)
            if len(neighbors) == 1:
                extremes.append(pnts[i])
            elif len(neighbors) == 2:
                simple.append(pnts[i])
            elif len(neighbors) > 2:
                connections.append(pnts[i])
        return extremes, connections, simple

    img_path = "D:/_temp/fig.png"
    output_dir = 'D:/_temp/'

    img = cv2.imread(img_path, cv2.IMREAD_GRAYSCALE)
    _, img = cv2.threshold(img, 128, 255, cv2.THRESH_OTSU + cv2.THRESH_BINARY_INV)
    img = cv2.ximgproc.thinning(img)

    # findNonZero returns an (N, 1, 2) array of (x, y) points; squeeze it to (N, 2)
    pnts = cv2.findNonZero(img)
    pnts = np.squeeze(pnts)

    ext, conn, simple = sort_points_types(pnts)

    # mark connector and extreme points with gray circles
    for p in conn:
        cv2.circle(img, (int(p[0]), int(p[1])), 5, 128)
    for p in ext:
        cv2.circle(img, (int(p[0]), int(p[1])), 5, 128)

    cv2.imwrite(output_dir + "6-both.png", img)
    print(len(ext), len(conn), len(simple))

Edit3:

Here is a much more efficient implementation that classifies the points in a single pass by checking each point's neighbors in a kernel-like way. Thanks to eldesgraciado!

Note: Before calling this method, pad the image with a one-pixel black border so that no border checks are needed, or, equivalently, black out the pixels at the image border.
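Both options from the note can be sketched in a few lines. This example uses plain NumPy on a made-up 3x3 image; with OpenCV, `cv2.copyMakeBorder(img, 1, 1, 1, 1, cv2.BORDER_CONSTANT, value=0)` should give the same padded result.

```python
import numpy as np

# Made-up 3x3 binary image with foreground pixels touching the border.
img = np.array([[255,   0,   0],
                [  0, 255,   0],
                [  0,   0, 255]], dtype=np.uint8)

# Option 1: add a one-pixel black border (the image grows by 2 in each dimension).
padded = np.pad(img, 1, mode="constant", constant_values=0)

# Option 2: black out the border in place (same size, but border pixels are lost).
blacked = img.copy()
blacked[0, :] = blacked[-1, :] = 0
blacked[:, 0] = blacked[:, -1] = 0

print(padded.shape, blacked.shape)
```

With option 1, points found in the padded image are shifted by one pixel, so subtract 1 from x and y to get back to the original coordinates.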

    def sort_points_types(pnts, img):
        extremes = []
        connections = []
        simple = []

        for p in pnts:
            x = p[0]
            y = p[1]
            n = []
            if img[y - 1, x] > 0: n.append((y - 1, x))
            if img[y - 1, x - 1] > 0: n.append((y - 1, x - 1))
            if img[y - 1, x + 1] > 0: n.append((y - 1, x + 1))
            if img[y, x - 1] > 0: n.append((y, x - 1))
            if img[y, x + 1] > 0: n.append((y, x + 1))
            if img[y + 1, x] > 0: n.append((y + 1, x))
            if img[y + 1, x - 1] > 0: n.append((y + 1, x - 1))
            if img[y + 1, x + 1] > 0: n.append((y + 1, x + 1))
            filter_neighbors(n)
            if len(n) == 1:
                extremes.append(p)
            elif len(n) == 2:
                simple.append(p)
            elif len(n) > 2:
                connections.append(p)
        return extremes, connections, simple

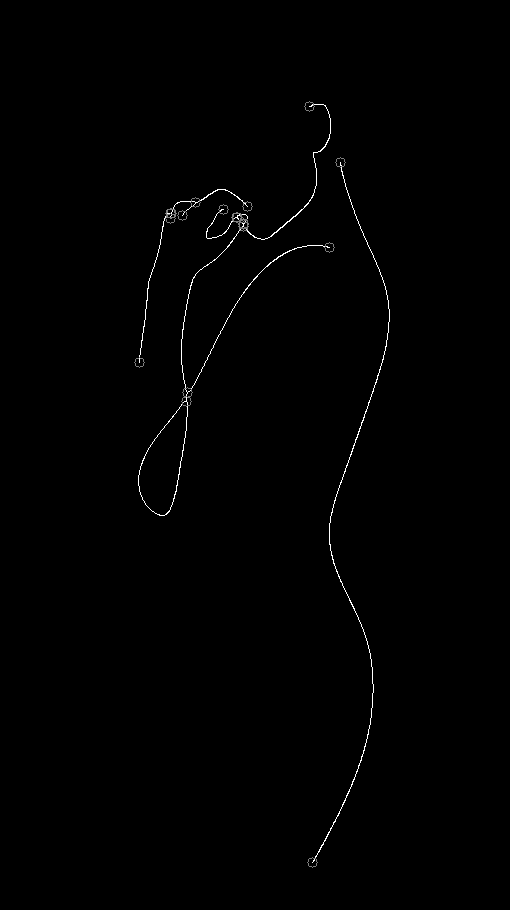

An image visualizing extreme and connector points:


Comments:

- […] `findContours`. – Merryman

- `findContours` gives very close to what I want, the only problem being that it traces back on itself. – Lesalesak

- […] `cv::dilate` […] – Lesalesak