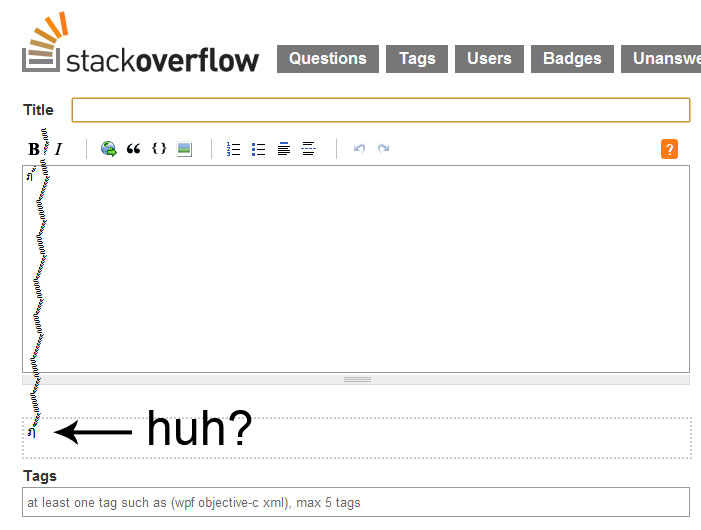

The character pictured above was tweeted a few months ago by Mikko Hyppönen, a computer security expert known for his work on computer viruses and TED talks on computer security. In respect for SO, I will only post an image of it, but you get the idea. It's obviously not something you'd want spreading around your website and freaking out visitors.

Upon further inspection, the character appears to be a letter of the Thai alphabet combined with over 87 diacritics (is there even a limit?!). This got me thinking about security, localization, and how one might handle this sort of input. My searching lead me to this question on Stack, and in turn a blog post from Michael Kaplan on stripping diacritics. In it, he demonstrates how one can decompose a string into its "base" characters (simplified here for the sake of brevity):

StringBuilder sb = new StringBuilder();

foreach (char c in "façade".Normalize(NormalizationForm.FormD))

{

if (char.GetUnicodeCategory(c) != UnicodeCategory.NonSpacingMark)

sb.Append(c);

}

Response.Write(sb.ToString()); // facade

I can see how that this is would be useful in some cases, but in terms of user input, it would be stripping out ALL diacritics. As Kaplan points out, removing the diacritics in some languages can completely change the meaning to the word. This begs the question: How does one permit some diacritics in user input/output, but exclude others extreme cases such as Mikko Hyppönen's über character?