I've built a Docker image with a Kafka broker and ZooKeeper, and I start both with a run script. On a fresh start everything comes up and runs fine (Windows -> WSL -> one tmux session with two windows). If I shut down Kafka or ZooKeeper and start it again, it reconnects normally.

The problem occurs when I stop the Docker container (docker stop my_kafka_container) and then start it again with my script ./run_docker. Before starting, that script removes the old container with docker rm my_kafka_container and then calls docker run.

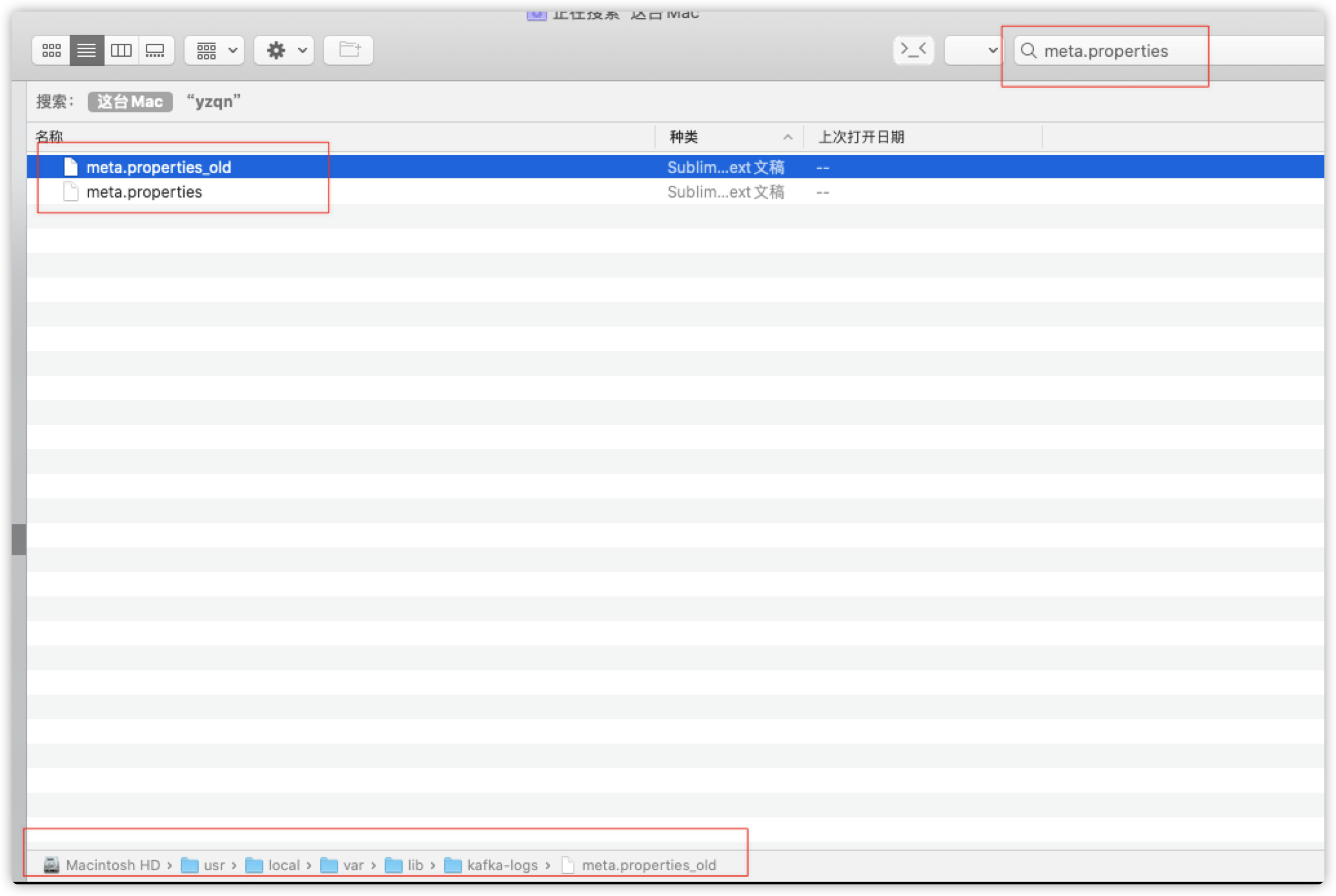

ZooKeeper starts normally, and the file meta.properties still holds the cluster ID from the previous startup. For some reason, though, the Kafka broker cannot find that ID under the znode /cluster/id and creates a new one, which does not match the ID stored in meta.properties. I then get:

ERROR Fatal error during KafkaServer startup. Prepare to shutdown (kafka.server.KafkaServer)

kafka.common.InconsistentClusterIdException: The Cluster ID m1Ze6AjGRwqarkcxJscgyQ doesn't match stored clusterId Some(1TGYcbFuRXa4Lqojs4B9Hw) in meta.properties. The broker is trying to join the wrong cluster. Configured zookeeper.connect may be wrong.

at kafka.server.KafkaServer.startup(KafkaServer.scala:220)

at kafka.server.KafkaServerStartable.startup(KafkaServerStartable.scala:44)

at kafka.Kafka$.main(Kafka.scala:84)

at kafka.Kafka.main(Kafka.scala)

[2020-01-04 15:58:43,303] INFO shutting down (kafka.server.KafkaServer)
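As far as I understand it, the check that fails compares the cluster ID read from ZooKeeper with the `cluster.id` entry in meta.properties. The Python sketch below only illustrates that comparison; it is not Kafka's actual code. The function names and the sample file contents (apart from the two IDs taken from the error above) are hypothetical.

```python
def parse_properties(text):
    """Parse simple key=value lines, skipping blanks and # comments."""
    props = {}
    for line in text.splitlines():
        line = line.strip()
        if not line or line.startswith("#"):
            continue
        key, _, value = line.partition("=")
        props[key.strip()] = value.strip()
    return props


def cluster_id_matches(meta_text, zk_cluster_id):
    """Return True if the stored cluster.id is absent or equals the ZooKeeper ID."""
    stored = parse_properties(meta_text).get("cluster.id")
    # Assumption: a missing cluster.id means there is nothing to compare against.
    return stored is None or stored == zk_cluster_id


# Hypothetical meta.properties contents; only the cluster.id value
# comes from the error message above.
sample_meta = """# hypothetical sample
cluster.id=1TGYcbFuRXa4Lqojs4B9Hw
version=0
broker.id=0
"""

if __name__ == "__main__":
    # ID the broker stored on the previous run
    print(cluster_id_matches(sample_meta, "1TGYcbFuRXa4Lqojs4B9Hw"))
    # Newly generated ID reported in the exception
    print(cluster_id_matches(sample_meta, "m1Ze6AjGRwqarkcxJscgyQ"))
```

In my case the second comparison is the one that happens: the ID coming from ZooKeeper is the new one, while meta.properties still has the old one.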

How can I prevent the broker from changing its cluster ID?